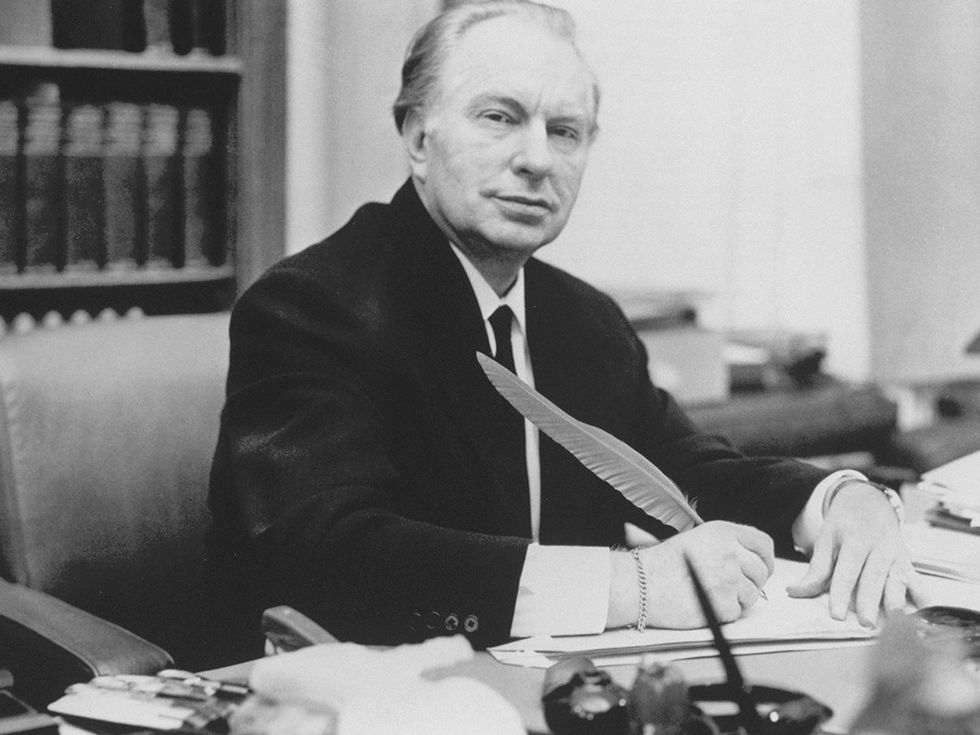

To call L. Ron Hubbard a prolific writer is an extreme understatement. From 1934 to 1940, he regularly penned 70,000 to 100,000 words per month of pulp fiction under 15 different pseudonyms published in various magazines. Not to be constrained by genre, he wrote zombie mysteries, historical fiction, pirate adventure tales, and westerns.

But by the spring of 1938, Hubbard had started honing his craft in science fiction. The publishers of Astounding Science Fiction approached Hubbard to write stories that focused on people, rather than robots and machines. His first story, “The Dangerous Dimension,” was a light-hearted tale about a professor who could teleport anywhere in the universe simply by thinking “Equation C.”

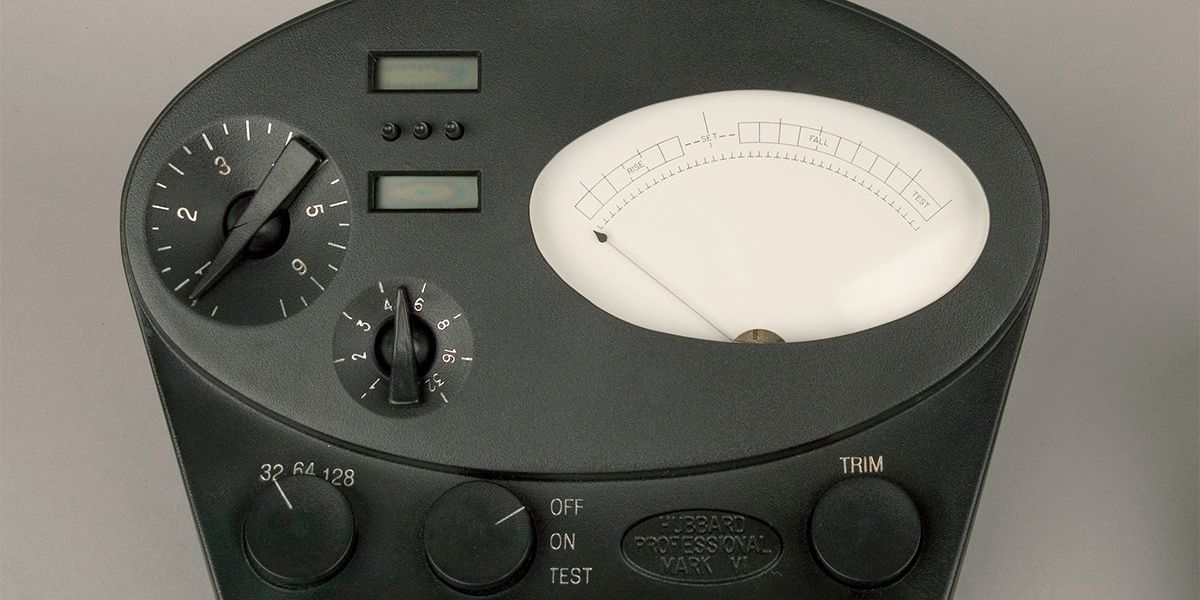

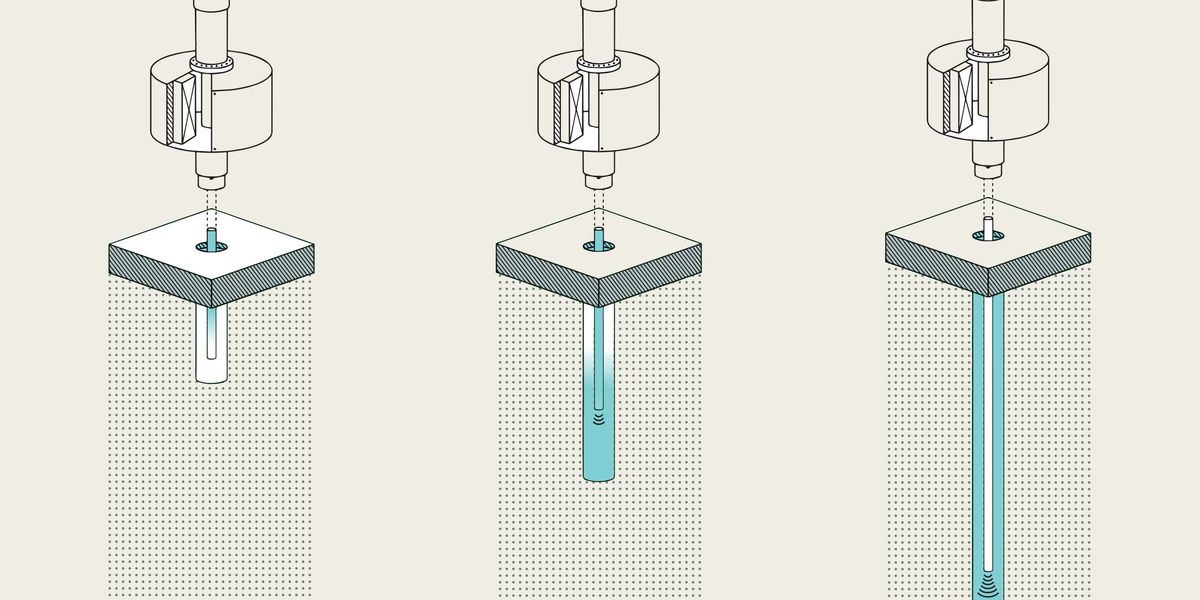

How Scientologists use the E-meter

Twelve years and more than a hundred stories later, Hubbard published a very different essay in the May 1950 issue of Astounding Science Fiction: “Dianetics: The Evolution of a Science.” In the essay, Hubbard recounts his own journey to discover what he called the reactive mind and the “technology” to conquer it. The essay was the companion piece to his simultaneously released book, Dianetics: The Modern Science of Mental Health, which in turn became the foundation for a new religion: the Church of Scientology.

Marrying technology with spirituality, Hubbard introduced the electropsychometer, or E-meter, in the 1950s as a device to help his ministers measure the minds, bodies, and spirits of church members. According to church dogma, the minds of new initiates are impaired by “engrams”—lingering traces of traumas, including those from past lives. An auditor purportedly uses the E-meter to identify and eliminate the engrams, which leads eventually to the person’s reaching a state of being “clear.” Before reaching this desirable state, a church member is known as a “preclear.”

To use the E-meter, a user grasps the metal cylinders while a mild electrical current runs through them. A human auditor interprets the device’s readings. Whipple Museum of the History of Science/University of Cambridge

During an auditing session, a preclear holds the E-meter’s two metal cylinders, one in each hand, as a small electrical current flows through them. The auditor asks a series of questions while operating two dials on the E-meter. The larger dial adjusts the resistance; the smaller dial controls the amplification of the needle. The auditor doesn’t read specific measurements on the meter but rather interprets the needle’s movement as the preclear responds to the questions.

The church’s 2014 Super Bowl ad, which invites viewers to “imagine science and religion connecting,” offers a glamorized glimpse of an auditing session:

Scientology Spiritual Technology - Super Bowl Commercial 2014 youtu.be

In his writings, Hubbard described the E-meter as a Wheatstone bridge, an electrical circuit designed in 1833 by Samuel Hunter Christie to measure an unknown resistance. (Sir Charles Wheatstone popularized the device about a decade later, and his name stuck.) Technically, the E-meter is a modified ohmmeter that measures the user’s galvanic skin response, that is, changes in the skin’s electrical resistance. Galvanic skin response is an example of the sympathetic nervous system in action. That system governs how your body automatically responds to various stimuli, such as your heart beating faster when you’re scared.
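For readers curious about how a Wheatstone bridge finds an unknown resistance, here is a minimal Python sketch of the textbook circuit. It is not a model of any actual E-meter. The function names, the resistor labels (`r1`, `r2`, `r3`, `rx`), and the component values are all assumptions chosen for illustration.

```python
def bridge_voltage(v_in, r1, r2, r3, rx):
    """Voltage across the detector of a textbook Wheatstone bridge.

    Assumed layout: r1 (top) and r2 (bottom) form one voltage divider,
    r3 (top) and rx (bottom) form the other; the detector sits between
    the two midpoints.
    """
    return v_in * (rx / (r3 + rx) - r2 / (r1 + r2))


def unknown_resistance(r1, r2, r3):
    """Resistance rx that balances the bridge (detector reads zero).

    Balance condition: r2 / r1 == rx / r3, so rx = r3 * r2 / r1.
    """
    return r3 * r2 / r1


# Illustrative values only, in ohms and volts.
rx = unknown_resistance(r1=1000.0, r2=2000.0, r3=5000.0)
balanced = bridge_voltage(1.5, 1000.0, 2000.0, 5000.0, rx)
drifted = bridge_voltage(1.5, 1000.0, 2000.0, 5000.0, rx * 0.9)
print(rx, balanced, drifted)
```

In this idealized picture, a drop in the resistance on the `rx` arm pushes the detector voltage away from zero, which is the kind of change a needle would register.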

Rejection of L. Ron Hubbard’s claims

Hubbard was not the first to use electrical devices to measure the sympathetic nervous system and consider it a reflection of the mind. As early as 1906, psychologist Carl Jung was noting changes in skin resistance in response to emotionally charged words. By the 1920s, John Larson was using the polygraph to interrogate police suspects.

Hubbard sought approval for his ideas from the medical establishment, but almost immediately, organizations such as the American Psychological Association rejected his theories as pseudoscience. In fact, several scholars looking to dismiss the validity of the E-meter compared it to lie detectors, which also require human operators to interpret the results—and which have also been categorized as being of dubious value by the American Psychological Association and the U.S. National Academy of Sciences.

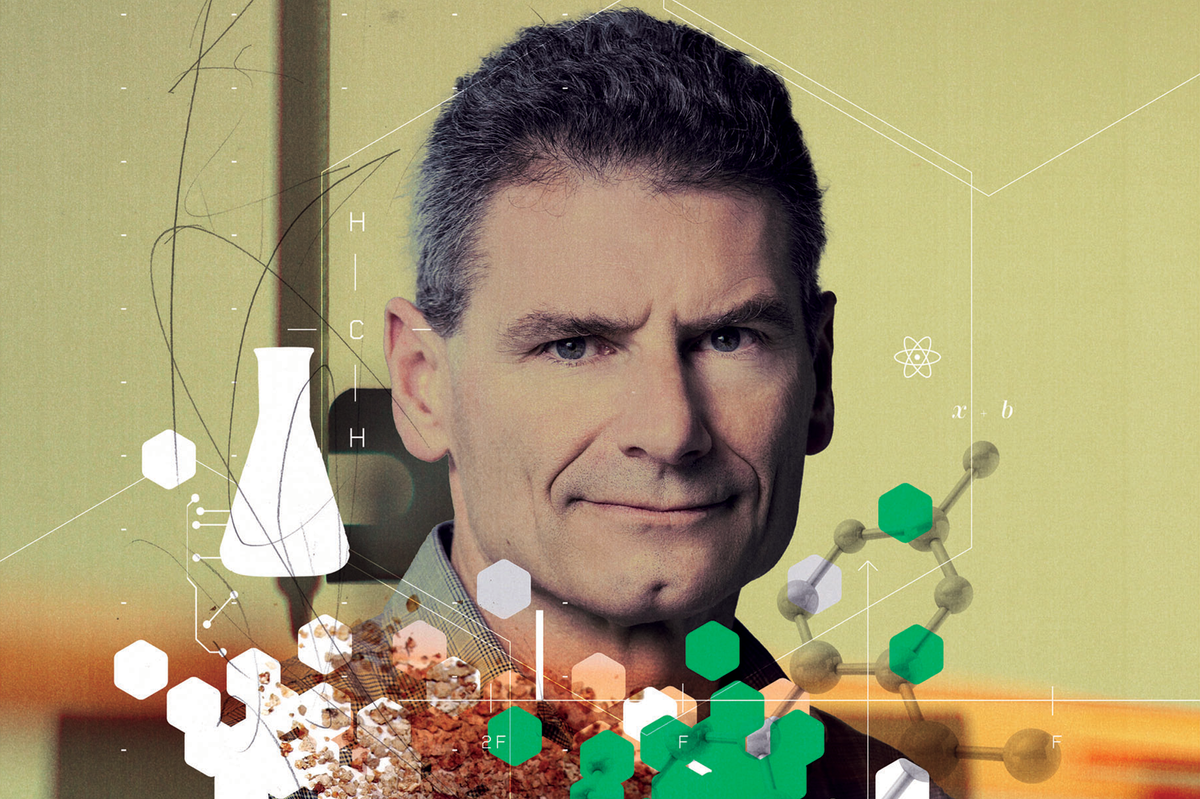

L. Ron Hubbard was a prolific sci-fi writer before launching Dianetics and the Church of Scientology. Yves Forestier/Sygma/Getty Images

Government authorities also condemned the church’s claims. In 1951, for example, the New Jersey State Board of Medical Examiners accused one of Hubbard’s foundations of teaching medicine without a license. A few years later, the Food and Drug Administration seized vitamin supplements that Hubbard claimed protected against radiation.

One of the most dramatic episodes occurred in 1963, when U.S. Marshals raided Hubbard’s headquarters in Washington, D.C., and confiscated more than a hundred E-meters. The FDA had issued a warrant that accused the church of falsely claiming that the devices had both physical and mental therapeutic properties. The lawsuit stretched on for years, and the court initially found against the church. On appeal, a judge ruled that the E-meter could be used for religious purposes as long as it clearly displayed this warning label: “The E-Meter is not medically or scientifically useful for the diagnosis, treatment or prevention of disease. It is not medically or scientifically capable of improving the health or bodily functions of anyone.”

Scientologists modified this warning, instead printing this advisory on their instruments: “The Hubbard Electrometer is a religious artifact. By itself, this meter does nothing. It is for religious use by students and ministers of the church in confessionals and pastoral counseling only.”

The E-meter as a recruitment tool

As Scientology spread outside the United States, attacks on the E-meter and the church continued. In Australia, Kevin Anderson wrote the official “Report of the Board of Enquiry Into Scientology” for the state of Victoria. Published in 1965, it became known as the Anderson Report. He did not mince words: “Scientology is evil; its techniques evil; its practice a serious threat to the community, medically, morally and socially; and its adherents sadly deluded and often mentally ill.”

In 1961, Hubbard wrote of his recent discovery that the E-meter requires the auditor to have “command value” over the person being audited. Keystone Press/Alamy

Chapter 14 of the report is devoted to the E-meter, which Anderson viewed as a powerful enabler of Scientology. After describing its construction and use, the report offers expert witness testimony negating Scientologists’ claims, based on the modern understanding of electrical resistance. It then points out specific claims that stretch credulity, such as how the E-meter supposedly helps preclears recall incidents trillions of years in the past down to the precise second. The report quotes from Hubbard’s written recollection of having received an implant “43,891,832,611,177 years, 344 days, 10 hours, 20 minutes and 40 seconds from 10:02.30 P.M. Daylight Greenwich Time May 9, 1963.”

The report also cites the Hubbard Communications Office Bulletin of 30 November 1961, in which Hubbard admits: “An E-meter has a frailty I have just discovered. It operates only if the auditor has some, even small, command value over the pc [preclear], and operates hardly at all when the auditor has no command value over the pc.” Given this imbalance between the auditor and the preclear, Anderson reasoned, the E-meter is a powerful tool for manipulation. “Fears of its abilities keep [preclears] in constant subjection,” the report states. “Its use can be so manipulated by cunningly phrased questions that almost any desired result can be obtained, and it is used unscrupulously to dominate students and staff alike. All the evil features of scientology are intensified where the E-meter is involved.”

Hubbard’s response? The report was simply a kangaroo court that already knew its conclusions before the first witness was called.

The E-meter patents never mentioned religion

Although Hubbard didn’t invent the E-meter, he inspired its creation, came up with a transistorized, battery-powered unit, and received several patents for later versions.

In his patents (see, for example, U.S. Patent No. 3,290,589, “Device for Measuring and Indicating Changes in Resistance of a Living Body”), Hubbard stuck to technical descriptions of the circuitry. The patents made no claims about reading a person’s thoughts or using the device for religious purposes. But Hubbard’s own writings are chock-full of technobabble, intermingling actual technical terms with statements that are demonstrably false. One of my favorites is his differentiation of the resistances of dead male and female bodies: 12,500 ohms and 5,000 ohms, respectively.

Obviously, from the viewpoint of contemporary science, the claims that the E-meter unlocks past-life traumas cannot be verified or replicated. The instruments themselves are imprecise and unreliable, the readouts depending on things like how the cylinders are grasped. And of course, the auditor can interpret the results any way they choose. Scientists and psychologists routinely denounce Scientology as quackery, yet at the same time, religious scholars find parallels to older, well-established faiths.

Centuries ago, Copernicus and Galileo posited a new science that flew in the face of religious beliefs. L. Ron Hubbard turned that idea on its head and founded a new religion purportedly based on science, and he positioned the E-meter as the device for entangling technology and spirituality.

Part of a continuing series looking at historical artifacts that embrace the boundless potential of technology.

An abridged version of this article appears in the April 2024 print issue as “The Scientology Machine.”

References

In researching the E-meter, I quickly found the literature to be polarizing, divided among the true believers, those set to debunk the science, and scholars trying to make sense of it all.

L. Ron Hubbard was a prolific writer as well as a lecturer, and so there are tons of primary sources. I found Introducing the E-meter to be a great starting place.

David S. Touretzky, a computer science professor at Carnegie Mellon University, maintains an extensive website critiquing the E-meter. He includes numerous Scientology texts, along with his own explanations.

Stefano Bigliardi’s article “New Religious Movements, Technology, and Science: The Conceptualization of the E-meter in Scientology Teachings,” in Zygon: Journal of Religion and Science (September 2016), offered an even-handed discussion of the E-meter, reflecting on its function and symbolic meaning within various Scientology texts.


Quaise Energy has developed a prototype portable gyrotron, which they plan to be conducting field tests with later this year.Quaise Energy

Quaise Energy has developed a prototype portable gyrotron, which they plan to be conducting field tests with later this year.Quaise Energy Quaise Energy drilled a hole 254 centimeters (100 inches) deep with a 2.5 cm diameter into a column of basalt, making it 100 times the depth of the team’s original tests, as conducted at MIT.Quaise Energy

Quaise Energy drilled a hole 254 centimeters (100 inches) deep with a 2.5 cm diameter into a column of basalt, making it 100 times the depth of the team’s original tests, as conducted at MIT.Quaise Energy

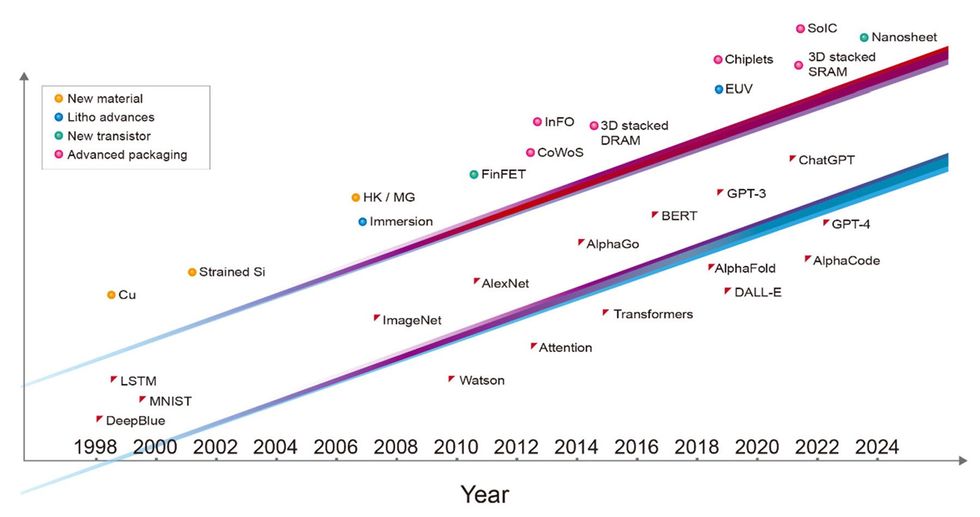

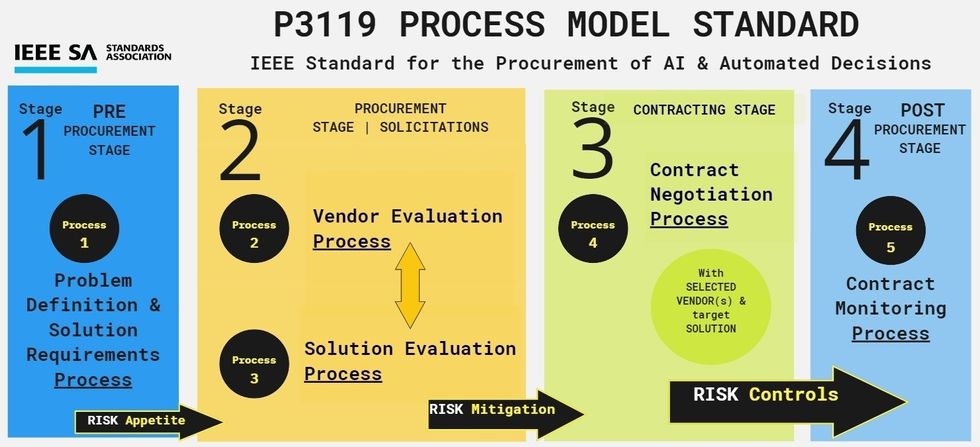

Advances in semiconductor technology [top line]—including new materials, advances in lithography, new types of transistors, and advanced packaging—have driven the development of more capable AI systems [bottom line]

Advances in semiconductor technology [top line]—including new materials, advances in lithography, new types of transistors, and advanced packaging—have driven the development of more capable AI systems [bottom line]

Gisele Waters

Gisele Waters