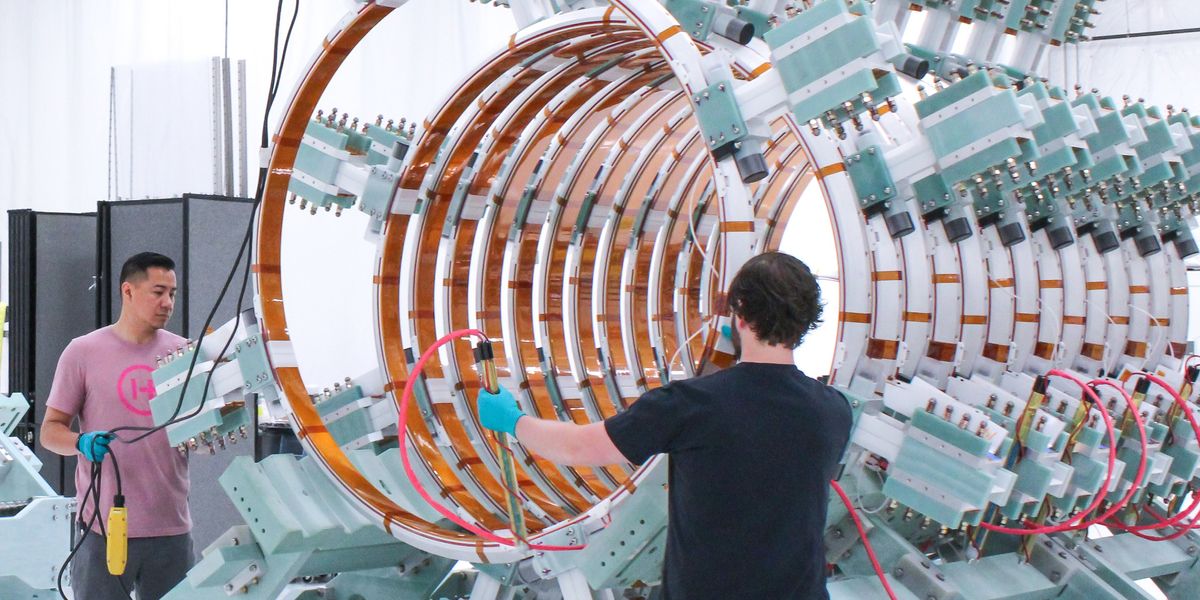

As a child, Alberto Moreira discovered his passion for electronics from the kits for exploring science, technology, engineering, and mathematics that his father bought him every month. The kits taught him not only about electronics but also about chemistry and physics. As he got older, he and his brother began making their own electronic circuits. When Moreira was 15, the duo built high-fidelity amplifiers and control panels for neon signs, selling them to small companies that used such displays to advertise their business.

Those early experiences ultimately led to a successful career as director of the German Aerospace Center (DLR)’s Microwaves and Radar Institute, in Oberpfaffenhofen, Bavaria, where the IEEE Fellow developed a space-based interferometric synthetic-aperture radar system.

ALBERTO MOREIRA
EMPLOYER: German Aerospace Center’s Microwaves and Radar Institute, in Oberpfaffenhofen, Bavaria
TITLE: Director
MEMBER GRADE: Fellow
ALMA MATER: Instituto Tecnológico de Aeronáutica, São José dos Campos, Brazil; and the Technical University of Munich

That InSAR system has generated digital elevation maps of the Earth’s surface with unparalleled accuracy and resolution. The models now serve as a standard for many geoscientific, remote sensing, topographical, and commercial applications. Moreira’s technology also helps to track the effects of climate change.

For his “leadership and innovative concepts in the design, deployment, and utilization of airborne and space-based radar systems,” Moreira is this year’s recipient of the IEEE Dennis J. Picard Medal for Radar Technologies and Applications. It is sponsored by Raytheon Technologies.

Moreira says he’s honored to receive the “most prestigious award in the radar technologies and applications field.” “It recognizes the 20 years of hard work my team and I put into our research,” he says.
“What makes the honor more special is that the award is from IEEE.”

Using radar to map the Earth’s surface

Before Moreira and his team developed their InSAR system in 2010, synthetic-aperture radar systems were the state of the art, he says. Unlike optical imaging systems, ones that use SAR can penetrate through clouds and rain to take high-resolution images of the Earth from space. They can also operate at night.

An antenna on an orbiting satellite sends pulsed microwave signals to the Earth’s surface as it passes over the terrain being mapped. The signals are then reflected back to the antenna, allowing the system to measure the distance between the antenna and the point on the Earth’s surface where the signal is reflected. Data-processing algorithms then combine the reflected signals in such a way that a computationally generated, synthetic antenna acts as though it were a much larger one, which provides improved resolution. That’s why the approach is called synthetic-aperture radar.

“The system is documenting changes taking place on Earth and facilitating the early detection of irreversible damage.”

While leading a research team at the DLR in the early 1990s, Moreira saw the potential of using information gathered from such radar satellites to help address societal issues such as sustainable development and the climate crisis. But he wanted to take the technology a step further and use interferometric synthetic-aperture radar, InSAR, which, he realized, would be more powerful. SAR satellites provide 2D images, but InSAR allows for 3D imaging of the Earth’s surface, meaning that you can map topography, not just radar reflectivity.

It took Moreira and his team almost 10 years to develop their InSAR system, the first to use two satellites, each with its own antenna. Their approach allows elevation maps to be created.
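The ranging and synthetic-aperture ideas described above can be made concrete with a small Python sketch. It is a back-of-the-envelope illustration, not the DLR's processing chain: the function names are hypothetical, the formulas are the usual textbook approximations (range from round-trip delay, real-aperture resolution as beamwidth times range, and the stripmap SAR limit of roughly half the antenna length), and the wavelength, antenna length, and delay values are assumed for illustration rather than taken from the article.

```python
# Illustrative sketch only: function names and numeric values are
# assumptions chosen for this example, not figures from the article.

C = 299_792_458.0  # speed of light in m/s


def slant_range_m(round_trip_delay_s: float) -> float:
    """Distance to the reflecting point, from the echo's round-trip delay."""
    # The pulse travels to the surface and back, hence the factor of 2.
    return C * round_trip_delay_s / 2.0


def real_aperture_resolution_m(
    wavelength_m: float, antenna_length_m: float, range_m: float
) -> float:
    """Approximate along-track resolution of the physical antenna alone.

    The beamwidth is about wavelength / antenna length (in radians), and
    the illuminated footprint grows linearly with range.
    """
    return wavelength_m / antenna_length_m * range_m


def synthetic_aperture_resolution_m(antenna_length_m: float) -> float:
    """Textbook stripmap SAR limit: about half the physical antenna length."""
    return antenna_length_m / 2.0


if __name__ == "__main__":
    delay_s = 4.0e-3      # assumed round-trip echo delay
    wavelength_m = 0.031  # assumed X-band wavelength (about 3 cm)
    antenna_m = 4.8       # assumed physical antenna length

    rng = slant_range_m(delay_s)
    print(f"slant range:          {rng / 1000:.1f} km")
    print(
        "real-aperture res.:   "
        f"{real_aperture_resolution_m(wavelength_m, antenna_m, rng):.0f} m"
    )
    print(
        "synthetic-aperture:   "
        f"{synthetic_aperture_resolution_m(antenna_m):.1f} m"
    )
```

Under these assumed numbers, the physical antenna alone would resolve along-track features only at the kilometer scale, while the synthesized aperture brings that down to a few meters. That gap is the reason for combining echoes along the flight path.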
The two satellites, named TerraSAR-X and TanDEM-X, orbit the Earth in almost circular orbits, with the distance between the satellites varying from 150 to 500 meters at any given time. To avoid collisions, Moreira and his team developed a double helix orbit; the satellites travel along an ellipse and corkscrew around each other.

The satellites communicate with each other and with ground stations, sending altitude and position data so that their separation can be fine-tuned to help avoid collisions.

Each satellite emits microwave pulses and each one receives the backscattered signals. Although the backscattered signals received by each satellite are almost identical, they differ slightly due to the different viewing geometries. And those differences in the received signals depend on the terrain height, allowing the surface elevation to be mapped. By combining measurements of the same area obtained at different times to form interferograms, scientists can determine whether there were subtle changes in elevation in the area, such as rising sea levels or deforestation, during the intervening time period.

The InSAR system was used in the DLR’s 2010 TanDEM-X mission. Its goal was to create a topographical map of the Earth with a horizontal pixel spacing of 12 meters. After its launch, the system surveyed the Earth’s surface multiple times in five years and collected more than 3,000 terabytes of data.

In September 2016 the first global digital elevation map with a 2-meter height accuracy was produced. It was 30 times more accurate than any previous effort, Moreira says.

The satellites are currently being used to monitor environmental effects, specifically deforestation and glacial melting. The hope, Moreira says, is that early detection of irreversible damage can help scientists pinpoint where intervention is needed.

He and his team are developing a system that uses more satellites flying in close formation to improve the data available from radar imaging.
“By collecting more detailed information, we can better understand, for example, how the forests are changing internally by imaging every layer,” he says, referring to the emergent layer and the canopy, understory, and forest floor.

He also is developing a space-based radar system that uses digital beamforming to produce images of the Earth’s surface with higher spatial resolution in less time. It currently takes radar systems about 12 days to produce a global map with a 20-meter resolution, Moreira says, but the new system will be able to do it in six days with a 5-meter resolution.

Digital beamforming represents a paradigm shift for spaceborne SAR systems. It uses an antenna divided into several parts, each of which has its own receiving channel and analog-to-digital converter. The channels are combined in such a way that different antenna beams can be computed a posteriori to increase the imaged swath and the length of the synthetic aperture, which allows for a higher spatial resolution, Moreira says. He says he expects three such systems to be launched within the next five years.

A lifelong career at the DLR

Moreira earned bachelor’s and master’s degrees in electrical engineering from the Instituto Tecnológico de Aeronáutica, in São José dos Campos, Brazil, in 1984 and 1986. He decided to pursue a doctorate outside the country after his master’s thesis advisor told him there were more research opportunities elsewhere.

Moreira earned his Ph.D. in engineering at the Technical University of Munich. As a doctoral student, he conducted research at the DLR on real-time radar processing. For his dissertation, he created algorithms that generated high-resolution images from one of the DLR’s existing airborne radar systems.

After graduating in 1993, he planned to move back to Brazil, but instead he accepted an offer to become a DLR group leader.
Moreira led a research team of 10 people working on airborne- and satellite-system design and data processing. In 1996 he was promoted to chief scientist and engineer in the organization’s SAR-technology department. He worked in that position until 2001, when he became director of the Microwaves and Radar Institute.

“I selected the right profession,” he says. “I couldn’t imagine doing anything other than research and electronics.”

He is also a professor of microwave remote sensing at the Karlsruhe Institute of Technology, in Germany, and has been the doctoral advisor for more than 50 students working on research at DLR facilities.

One of his favorite parts about being a director and professor is working with his students, he says: “I spend about 20 percent of my time with them. Having students and engineers work together on large-scale projects is a dream come true. When I first began my career at DLR I was not aware that this collaboration would be so powerful.”

The importance of creating an IEEE network

It was during his time as a doctoral student that Moreira was introduced to IEEE. He presented his first research paper in 1989 at the International Geoscience and Remote Sensing Symposium, in Vancouver. While attending his second conference, he says, he realized that by not being a member he was “missing out on many important things” such as networking opportunities, so he joined.

He says IEEE has played an important role throughout his career. He has presented all his research at IEEE conferences, and he has published papers in the organization’s journals. He is a member of the IEEE Aerospace and Electronic Systems, IEEE Antennas and Propagation, IEEE Geoscience and Remote Sensing (GRSS), IEEE Information Theory, IEEE Microwave Theory and Techniques, and IEEE Signal Processing societies.
“I recommend that everyone join not only IEEE but also at least one of its societies,” he says, calling them “the home of your research.”

He founded the IEEE GRSS Germany Section in 2003 and served as the society’s 2010 president. An active volunteer, he was a member of the IEEE GRSS administrative committee and served as associate editor from 2003 to 2007 for IEEE Geoscience and Remote Sensing Letters. Since 2005 he has been associate editor for the IEEE Transactions on Geoscience and Remote Sensing.

Through his volunteer work and participation in IEEE events, he says, he has connected with other members in different fields including aerospace technology, geoscience, and remote sensing and collaborated with them on projects.

He received the IEEE Dennis J. Picard Medal for Radar Technologies and Applications on 5 May during the IEEE Vision, Innovation, and Challenges Summit and Honors Ceremony, held in Atlanta. The event is available on IEEE.tv.

Reference: https://ift.tt/8SqtTHL