Imagine a portable computer built from a network of 86 billion switches, capable of general intelligence sophisticated enough to build a spacefaring civilization—but weighing just 1.2 to 1.3 kilograms, consuming just 20 watts of power, and jiggling like Jell-O as it moves. There’s one inside your skull right now. It is a breathtaking achievement of biological evolution. But there are no blueprints.

Now imagine trying to figure out how this wonder of bioelectronics works without a way to observe its microcircuitry in action. That’s like asking a microelectronics engineer to reverse engineer the architecture, microcode, and operating system running on a state-of-the-art processor without the use of a digital logic probe, which would be a virtually impossible task.

So it’s easy to understand why many of the operational details of humans’ brains (and even the brains of mice and much simpler organisms) remain so mysterious, even to neuroscientists. People often think of technology as applied science, but the scientific study of brains is essentially applied sensor technology. Each invention of a new way to measure brain activity—including scalp electrodes, MRIs, and microchips pressed into the surface of the cortex—has unlocked major advances in our understanding of the most complex, and most human, of all our organs.

The brain is essentially an electrical organ, and that fact plus its gelatinous consistency pose a hard technological problem. In 2010, I met with leading neuroscientists at the Howard Hughes Medical Institute (HHMI) to explore how we might use advanced microelectronics to invent a new sensor. Our goal: to listen in on the electrical conversations taking place among thousands of neurons at once in any given thimbleful of brain tissue.

Timothy D. Harris, a senior scientist at HHMI, told me that “we need to record every spike from every neuron” in a localized neural circuit within a freely moving animal. That would mean building a digital probe long enough to reach any part of the thinking organ, but slim enough not to destroy fragile tissues on its way in. The probe would need to be durable enough to stay put and record reliably for weeks or even months as the brain guides the body through complex behaviors.

For an electrical engineer, those requirements add up to a very tall order. But more than a decade of R&D by a global, multidisciplinary team of engineers, neuroscientists, and software designers has at last met the challenge, producing a remarkable new tool that is now being put to use in hundreds of labs around the globe.

As chief scientist at Imec, a leading independent nanoelectronics R&D institute, in Belgium, I saw the opportunity to extend advanced semiconductor technology to serve broad new swaths of biomedicine and brain science. Envisioning and shepherding the technological aspects of this ambitious project has been one of the highlights of my career.

We named the system Neuropixels because it functions like an imaging device, but one that records electrical rather than photonic fields. Early experiments already underway—including some in humans—have helped explore age-old questions about the brain. How do physiological needs produce motivational drives, such as thirst and hunger? What regulates behaviors essential to survival? How does our neural system map the position of an individual within a physical environment?

Successes in these preliminary studies give us confidence that Neuropixels is shifting neuroscience into a higher gear that will deliver faster insights into a wide range of normal behaviors and potentially enable better treatments for brain disorders such as epilepsy and Parkinson’s disease.

Version 2.0 of the system, demonstrated last year, increases the sensor count by about an order of magnitude over that of the initial version produced just four years earlier. It paves the way for future brain-computer interfaces that may enable paralyzed people to communicate at speeds approaching those of normal conversation. With version 3.0 already in early development, we believe that Neuropixels is just at the beginning of a long road of exponential Moore’s Law–like growth in capabilities.

In the 1950s, researchers used a primitive electronic sensor to identify the misfiring neurons that give rise to Parkinson’s disease. During the 70 years since, the technology has come far, as the microelectronics revolution miniaturized all the components that go into a brain probe: from the electrodes that pick up the tiny voltage spikes that neurons emit when they fire, to the amplifiers and digitizers that boost signals and reduce noise, to the thin wires that transmit power into the probe and carry data out.

By the time I started working with HHMI neuroscientists in 2010, the best electrophysiology probes, made by NeuroNexus and Blackrock Neurotech, could record the activity of roughly 100 neurons at a time. But they were able to monitor only cells in the cortical areas near the brain’s surface. The shallow sensors were thus unable to access deep brain regions—such as the hypothalamus, thalamus, basal ganglia, and limbic system—that govern hunger, thirst, sleep, pain, memory, emotions, and other important perceptions and behaviors. Companies such as Plexon make probes that reach deeper into the brain, but they are limited to sampling 10 to 15 neurons simultaneously. We set for ourselves a bold goal of improving on that number by one or two orders of magnitude.

To understand how brain circuits work, we really need to record the individual, rapid-fire activity of hundreds of neurons as they exchange information in a living animal. External electrodes on the skull don’t have enough spatial resolution, and functional MRI technology lacks the speed necessary to record fast-changing signals. Eavesdropping on these conversations requires being in the room where it happens: We needed a way to place thousands of micrometer-size electrodes directly in contact with vertical columns of neurons, anywhere in the brain. (Fortuitously, neuroscientists have discovered that when a brain region is active, correlated signals pass through the region both vertically and horizontally.)

These functional goals drove our design toward long, slender silicon shanks packed with electrical sensors. We soon realized, however, that we faced a major materials issue. We would need to use Imec’s CMOS fab to mass-produce complex devices by the thousands to make them affordable to research labs. But CMOS-compatible electronics are rigid when packed at high density.

The brain, in contrast, has the same elasticity as Greek yogurt. Try inserting strands of angel-hair pasta into yogurt and then shaking them a few times, and you’ll see the problem. If the pasta is too wet, it will bend as it goes in or won’t go in at all. Too dry, and it breaks. How would we build shanks that could stay straight going in yet flex enough inside a jiggling brain to remain intact for months without damaging adjacent brain cells?

Experts in brain biology suggested that we use gold or platinum for the electrodes and an organometallic polymer for the shanks. But none of those are compatible with advanced CMOS fabrication. After some research and lots of engineering, my Imec colleague Silke Musa invented a form of titanium nitride—an extremely tough electroceramic—that is compatible with both CMOS fabs and animal brains. The material is also porous, which gives it a low impedance; that quality is very helpful in getting currents in and clean signals out without heating the nearby cells, creating noise, and spoiling the data.

Thanks to an enormous amount of materials-science research and some techniques borrowed from microelectromechanical systems (MEMS), we are now able to control the internal stresses created during the deposition and etching of the silicon shanks and the titanium nitride electrodes so that the shanks consistently come out almost perfectly straight, despite being only 23 micrometers (µm) thick. Each probe consists of four parallel shanks, and each shank is studded with 1,280 electrodes. At 1 centimeter in length, the probes are long enough to reach any spot in a mouse’s brain. Mouse studies published in 2021 showed that Neuropixels 2.0 devices can collect data from the same neurons continuously for over six months as the rodents go about their lives.

The thousandfold difference in elasticity between CMOS-compatible shanks and brain tissue presented us with another major problem during such long-term studies: how to keep track of individual neurons as the probes inevitably shift in position relative to the moving brain. Neurons are 20 to 100 µm in size; each square pixel (as we call the electrodes) is 15 µm across, small enough so that it can record the isolated activity of a single neuron. But over six months of jostling activity, the probe as a whole can move within the brain by up to 500 µm. Any particular pixel might see several neurons come and go during that time.

The 1,280 electrodes on each shank are individually addressable, and the four parallel shanks give us an effectively 2D readout, which is quite analogous to a CMOS camera image, and the inspiration for the name Neuropixels. That similarity made me realize that this problem of neurons shifting relative to pixels is directly analogous to image stabilization. Just like the subject filmed by a shaky camera, neurons in a chunk of brain are correlated in their electrical behavior. We were able to adapt knowledge and algorithms developed years ago for fixing camera shake to solve our problem of probe shake. With the stabilization software active, we are now able to apply automatic corrections when neural circuits move across any or all of the four shanks.
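The image-stabilization analogy can be made concrete with a toy example. The Python sketch below is not the Neuropixels stabilization software. It assumes a hypothetical one-dimensional activity profile (for instance, spike counts per depth bin along one shank), a hypothetical bin width of 15 µm, and a purely rigid shift. It slides one profile past the other and keeps the offset at which the two correlate best, which is the basic idea behind many camera-shake corrections.

```python
import numpy as np

BIN_UM = 15.0  # ASSUMPTION: depth-bin width in micrometers, chosen for illustration


def estimate_shift(reference, current, max_shift):
    """Return d (in bins) such that current[j + d] best matches reference[j]."""
    reference = np.asarray(reference, dtype=float)
    current = np.asarray(current, dtype=float)
    n = len(reference)
    best_shift, best_score = 0, -np.inf
    for d in range(-max_shift, max_shift + 1):
        # Compare only the overlapping parts so nothing wraps around the ends.
        if d >= 0:
            a, b = reference[:n - d], current[d:]
        else:
            a, b = reference[-d:], current[:n + d]
        score = np.corrcoef(a, b)[0, 1]  # Pearson correlation on the overlap
        if score > best_score:
            best_shift, best_score = d, score
    return best_shift


def undo_shift(current, shift):
    """Move the profile back by `shift` bins, padding the exposed edge with zeros."""
    current = np.asarray(current, dtype=float)
    corrected = np.zeros_like(current)
    if shift > 0:
        corrected[:-shift] = current[shift:]
    elif shift < 0:
        corrected[-shift:] = current[:shift]
    else:
        corrected[:] = current
    return corrected


# Synthetic demo: two "hot spots" of activity that drift by 7 bins.
depth_bins = np.arange(200)
reference = (np.exp(-0.5 * ((depth_bins - 60) / 5.0) ** 2)
             + 0.6 * np.exp(-0.5 * ((depth_bins - 120) / 8.0) ** 2))
current = np.roll(reference, 7)

shift = estimate_shift(reference, current, max_shift=20)
stabilized = undo_shift(current, shift)
print(f"estimated drift: {shift} bins = {shift * BIN_UM:.0f} um")
```

A real recording would need more than this: drift that varies along the shank, noise, and neurons that change their firing over time all complicate the picture. The sketch only illustrates why correlated activity across neighboring pixels makes the displacement recoverable.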

Version 2.0 shrank the headstage—the board that sits outside the skull, controls the implanted probes, and outputs digital data—to the size of a thumbnail. A single headstage and base can now support two probes, each extending four shanks, for a total of 10,240 recording electrodes. Control software and apps written by a fast-growing user base of Neuropixels researchers allow real-time, 30-kilohertz sampling of the firing activity of 768 distinct neurons at once, selected at will from the thousands of neurons touched by the probes. That high sampling rate, which is 500 times as fast as the 60 frames per second typically recorded by CMOS imaging chips, produces a flood of data, but the devices cannot yet capture activity from every neuron contacted. Continued advances in computing will help us ease those bandwidth limitations in future generations of the technology.
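The figures in this paragraph can be checked with a few lines of arithmetic. The Python sketch below uses only the numbers quoted above, plus one labeled assumption: the number of bits per sample, which the text does not state and which is included only to show how a raw data rate would be estimated.

```python
PROBES_PER_HEADSTAGE = 2
SHANKS_PER_PROBE = 4
ELECTRODES_PER_SHANK = 1280
RECORDED_CHANNELS = 768      # channels sampled at once
SAMPLE_RATE_HZ = 30_000      # 30-kilohertz sampling
CAMERA_FPS = 60              # typical CMOS imaging frame rate

BITS_PER_SAMPLE = 14         # ASSUMPTION: not given in the text, illustrative only

total_electrodes = PROBES_PER_HEADSTAGE * SHANKS_PER_PROBE * ELECTRODES_PER_SHANK
speed_ratio = SAMPLE_RATE_HZ // CAMERA_FPS
samples_per_second = RECORDED_CHANNELS * SAMPLE_RATE_HZ
recorded_fraction = RECORDED_CHANNELS / total_electrodes


def raw_rate_mbit_per_s(channels, rate_hz, bits_per_sample):
    """Uncompressed data rate in megabits per second."""
    return channels * rate_hz * bits_per_sample / 1e6


print(f"electrodes per headstage: {total_electrodes}")
print(f"sampling vs. camera frame rate: {speed_ratio}x")
print(f"samples per second: {samples_per_second:,}")
print(f"share of electrodes read at once: {recorded_fraction:.1%}")
print("raw rate under the assumed bit depth: "
      f"{raw_rate_mbit_per_s(RECORDED_CHANNELS, SAMPLE_RATE_HZ, BITS_PER_SAMPLE):.1f} Mbit/s")
```

The share of electrodes read at once is the bandwidth limitation in numbers: only a small fraction of the pixels in contact with tissue can be streamed at any one time.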

In just four years, we have nearly doubled the pixel density, doubled the number of pixels we can record from simultaneously, and increased the overall pixel count more than tenfold, while shrinking the size of the external electronics by half. That Moore’s Law–like pace of progress has been driven in large part by the use of commercial-scale CMOS and MEMS fabrication processes, and we see it continuing.
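To put "Moore's Law-like" in numbers, the short Python sketch below computes the implied doubling time of the electrode count. It takes the 966 electrodes of the first device and the 10,240 electrodes of a two-probe Neuropixels 2.0 headstage, both quoted in this article, and assumes smooth exponential growth over the four years between them. That smoothness is an assumption for illustration, since only two data points exist.

```python
import math

FIRST_GEN_ELECTRODES = 966            # first device: one shank
SECOND_GEN_ELECTRODES = 2 * 4 * 1280  # two probes x four shanks x 1,280 electrodes
YEARS_BETWEEN = 4

growth = SECOND_GEN_ELECTRODES / FIRST_GEN_ELECTRODES

# ASSUMPTION: growth is modeled as a smooth exponential between the two points.
annual_factor = growth ** (1 / YEARS_BETWEEN)
doubling_time_years = YEARS_BETWEEN * math.log(2) / math.log(growth)

print(f"overall growth: {growth:.1f}x in {YEARS_BETWEEN} years")
print(f"implied growth per year: {annual_factor:.2f}x")
print(f"implied doubling time: {doubling_time_years:.2f} years")
```

Under these assumptions the electrode count doubled in somewhat more than a year, which is faster than the roughly two-year doubling usually associated with Moore's Law for transistor counts.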

A next-gen design, Neuropixels 3.0, is already under development and on track for release around 2025, maintaining a four-year cadence. In 3.0, we expect the pixel count to leap again, to allow eavesdropping on perhaps 50,000 to 100,000 neurons. We are also aiming to add probes and to triple or quadruple the output bandwidth, while slimming the base by another factor of two.

Just as was true of microchips in the early days of the semiconductor industry, it’s hard to predict all the applications Neuropixels technology will find. Adoption has skyrocketed since 2017. Researchers at more than 650 labs around the world now use Neuropixels devices, and a thriving open-source community has appeared to create apps for them. It has been fascinating to see the projects that have sprung up: For example, the Allen Institute for Brain Science in Seattle recently used Neuropixels to create a database of activity from 100,000-odd neurons involved in visual perception, while a group at Stanford University used the devices to map how the sensation of thirst manifests across 34 different parts of the mouse brain.

We have begun fabricating longer probes of up to 5 cm and have defined a path to probes of 15 cm—big enough to reach the center of a human brain. The first trials of Neuropixels in humans were a success, and soon we expect the devices will be used to better position the implanted stimulators that quiet the tremors caused by Parkinson’s disease, with 10-µm accuracy. Soon, the devices may also help identify which regions are causing seizures in the brains of people with epilepsy, so that corrective surgery eliminates the problematic bits and no more.

The first Neuropixels device [top] had one shank with 966 electrodes. Neuropixels 2.0 [bottom] has four shanks with 1,280 electrodes each. Two probes can be mounted on one headstage. Imec

Future generations of the technology could play a key role as sensors that enable people who become “locked in” by neurodegenerative diseases or traumatic injury to communicate at speeds approaching those of typical conversation. Every year, some 64,000 people worldwide develop motor neuron disease, one of the more common causes of such entrapment. Though a great deal more work lies ahead to realize the potential of Neuropixels for this critical application, we believe that fast and practical brain-based communication will require precise monitoring of the activity of large numbers of neurons for long periods of time.

An electrical, analog-to-digital interface from wetware to hardware has been a long time coming. But thanks to a happy confluence of advances in neuroscience and microelectronics engineering, we finally have a tool that will let us begin to reverse engineer the wonders of the brain.

This article appears in the June 2022 print issue as “Eavesdropping on the Brain.”

Reference: https://ift.tt/01UYuRb

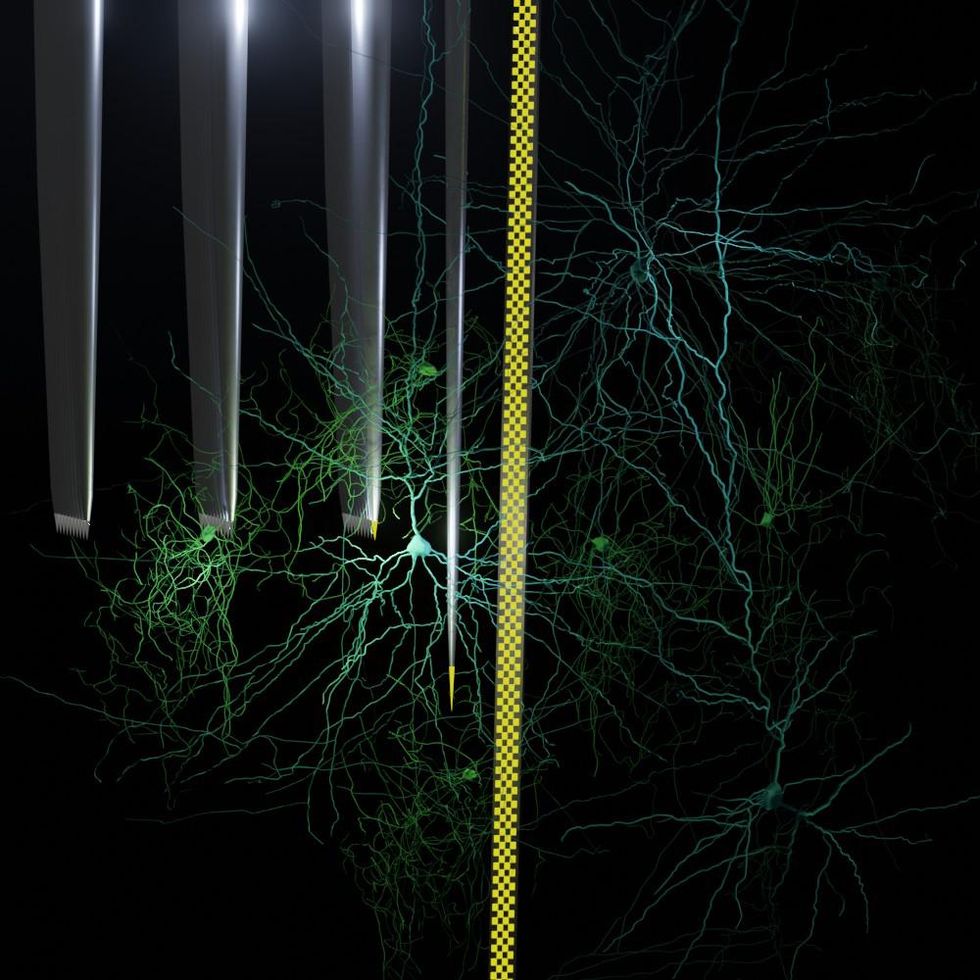

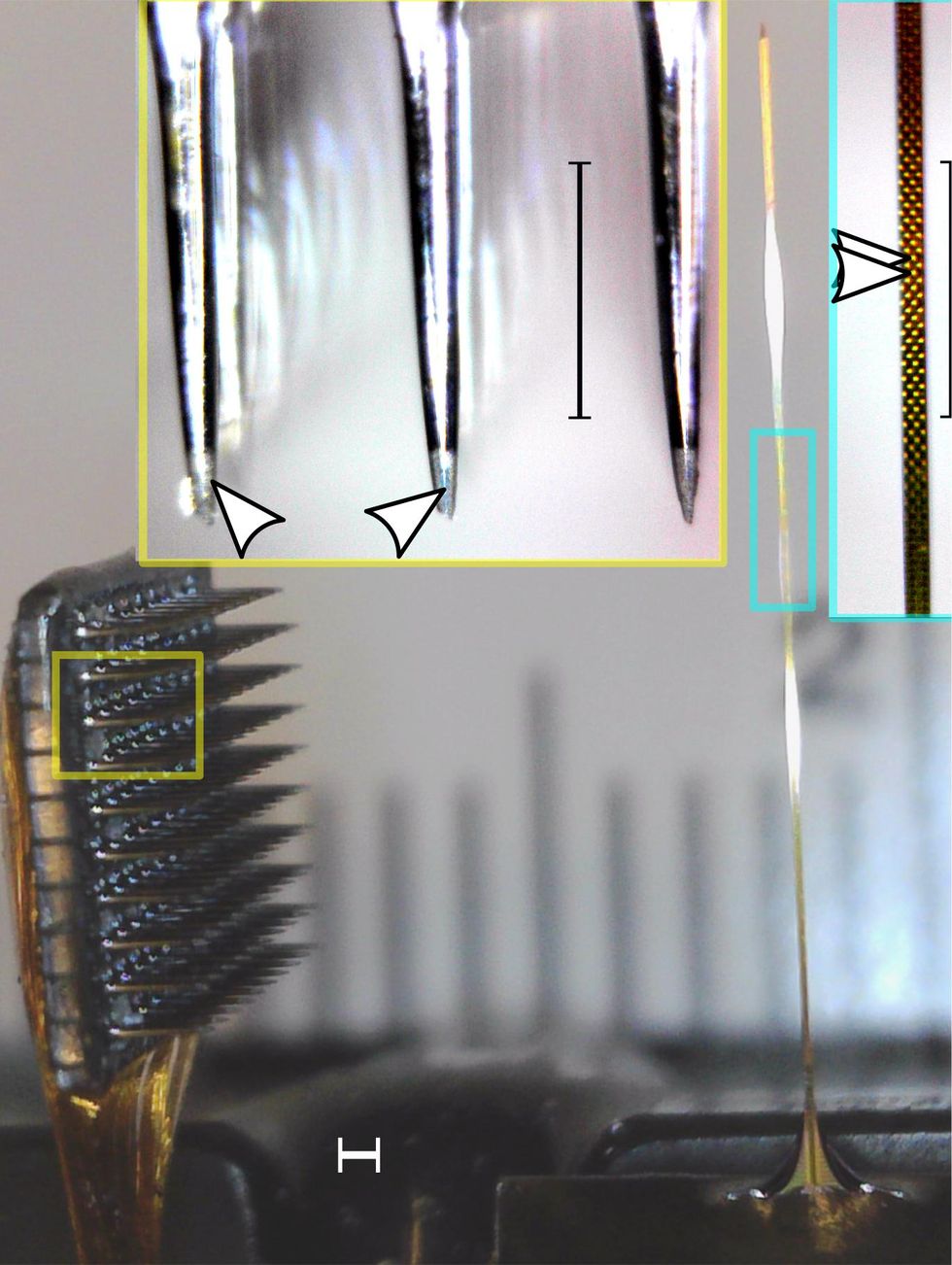

Different kinds of neural probes pick up activity from firing neurons: three tines of a Utah array with one electrode on each tine [left], a single slender tungsten wire electrode [center], and a Neuropixels shank that has electrodes all along its length [checkered pattern, right]. Massachusetts General Hospital/Imec/Nature Neuroscience

The team realized that they could mount two Neuropixels 2.0 probes on one headstage, the board that sits outside the skull, providing a total of eight shanks with 10,240 recording electrodes. Imec

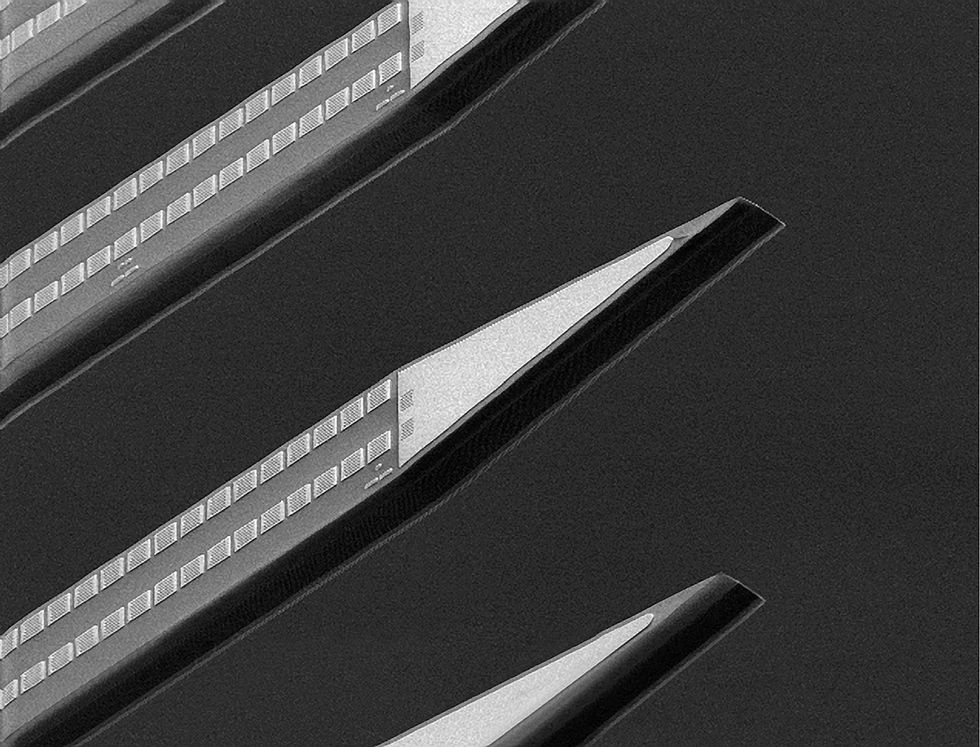

The most common neural recording device today is the Utah array [top image, at left], which has one electrode at the tip of each of its tines. In contrast, a Neuropixels probe [top image, at right] has hundreds of electrodes along each of its long shanks. An image taken by a scanning electron microscope [bottom] magnifies the tips of several Neuropixels shanks.

Author Barun Dutta [above] of Imec brought his semiconductor expertise to the field of neuroscience. Fred Loosen/Imec

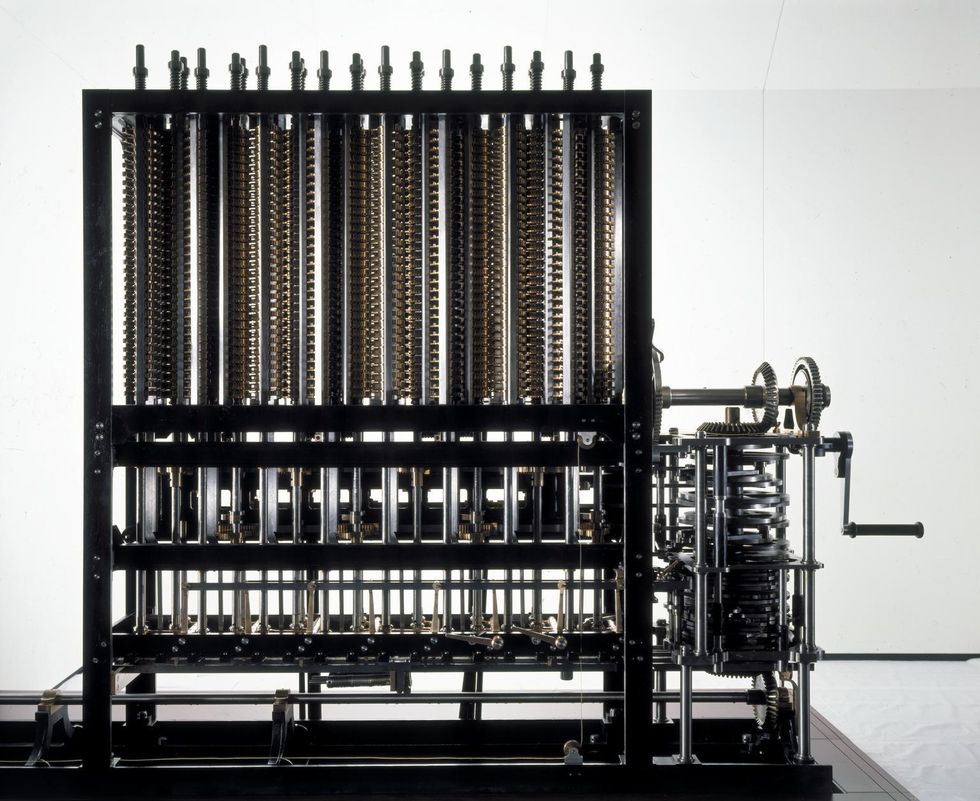
