Tuesday, January 31, 2023

Snap’s Growth Slows Further Amid Tech Downturn

IEEE Medal of Honor Goes to Vint Cerf

IEEE Life Fellow Vinton “Vint” Cerf, widely known as the “Father of the Internet,” is the recipient of the 2023 IEEE Medal of Honor. He is being recognized “for co-creating the Internet architecture and providing sustained leadership in its phenomenal growth in becoming society’s critical infrastructure.”

The IEEE Foundation sponsors the annual award.

In 1974, Cerf and IEEE Life Fellow Robert Kahn, then a program manager at the U.S. Defense Advanced Research Projects Agency (DARPA) Information Processing Techniques Office, designed the Transmission Control Protocol and the Internet Protocol. TCP manages data packets sent over the Internet, making sure they don’t get lost, are received in the proper order, and are reassembled correctly at their destination. IP manages the addressing and forwarding of data to and from its proper destinations. Together they make up the Internet’s core architecture and enable computers to connect and exchange traffic.
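TCP’s job of putting segments back in order can be illustrated with a toy receiver. This is a deliberately simplified sketch, not real TCP: it assumes each segment carries the byte offset of its first payload byte, and it ignores acknowledgments, retransmission timers, checksums, and sequence-number wraparound. The `Segment` type and `reassemble` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    seq: int        # byte offset of the first payload byte (simplified, hypothetical header)
    payload: bytes


def reassemble(segments, initial_seq=0):
    """Rebuild a byte stream from segments that may arrive out of order or duplicated.

    Returns only the contiguous bytes available so far, stopping at the first gap,
    as a receiver would while it waits for a missing segment to be resent.
    """
    buffered = {}
    for seg in segments:
        buffered.setdefault(seg.seq, seg.payload)  # keep the first copy, drop duplicates
    stream = bytearray()
    next_seq = initial_seq
    while next_seq in buffered:
        chunk = buffered.pop(next_seq)
        stream += chunk
        next_seq += len(chunk)
    return bytes(stream)
```

Buffering out-of-order segments keyed by offset and delivering only the contiguous prefix captures the idea of in-order delivery; a real TCP receiver would also acknowledge what it has received so the sender can retransmit the gap.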

“Cerf’s tireless commitment to the Internet’s evolution, improvement, oversight, and evangelism throughout its history has made an indelible impact on the world,” said one of the endorsers of the award. “It is largely due to his efforts that we even have the Internet, which has changed the way society lives.

“The Internet has enabled a large part of the world to receive instant access to news, brought us closer to friends and loved ones, and made it easier to purchase products online,” the endorser said. “It’s improved access to education and scientific discourse, made smartphones useful, and opened the door for social media, cloud computing, video conferencing, and streaming. Cerf also saw early on the importance of decentralized control, with no one company or government completely in charge.”

Since 2005, Cerf has been vice president and chief Internet evangelist at Google in Reston, Va., spreading the word about adopting the Internet in service of the public good. He is responsible for identifying new technologies and enabling policies that support the development of advanced, Internet-based products and services.

Enhancing the World Wide Web

Cerf left DARPA in 1982 to join Microwave Communications Inc. (MCI, now part of Verizon), headquartered in Washington, D.C., as vice president of its digital information services division. A year later, he led the development of MCI Mail, the first commercial email service on the Internet.

In 1986 he left the company to become vice president of the newly formed Corporation for National Research Initiatives, also in Reston. He worked alongside Kahn at the not-for-profit organization, developing digital libraries, gigabit-speed networks, and knowledge robots (mobile software agents used in computer networks).

He returned to MCI in 1994 and served as a senior vice president for 11 years before joining Google.

Together with Kahn, Cerf founded the nonprofit Internet Society in 1992. The organization helps set technical standards, develops Internet infrastructure, and helps lawmakers set policy.

Cerf served as its president from 1992 to 1995 and was chairman of the board of the Internet Corp. for Assigned Names and Numbers from 2000 to 2007. ICANN works to ensure a stable, secure, and interoperable Internet by managing the assignment of unique IP addresses and domain names. It also maintains tables of registered parameters needed for the protocol standards developed by the Internet Engineering Task Force.

Cerf has received many honors for his work, including the 2004 Turing Award from the Association for Computing Machinery, often called the Nobel Prize of computing. Together with Kahn, he was awarded a 2013 Queen Elizabeth Prize for Engineering, a 2005 U.S. Presidential Medal of Freedom, and a 1997 U.S. National Medal of Technology and Innovation.

Reference: https://ift.tt/NhpkSX6

Tesla’s Self-Driving Technology Comes Under Justice Dept. Scrutiny

Apple’s Secrecy Violated Workers Rights, NLRB Finds

Transparency Depends on Digital Breadcrumbs

From the moment we wake up and reach for our smartphones and throughout the day as we text each other, upload selfies to social media, shop, commute, work, work out, watch streaming media, pay bills, and travel, and even while we’re sleeping, we spew personal data like jets sketching contrails across the sky.

An astonishing amount of that data is recorded, stored, analyzed, and shared by media companies looking to pitch you content and ads, retailers aiming to sell you more of what you’ve already bought, and potential distant relatives hitting you up on genealogy sites. And sometimes, if you’re suspected of participating in illegal activities, that data can bring you under the scrutiny of law enforcement officials.

Contributing Editor Mark Harris spent months poring over court documents and other records to understand how the U.S. Federal Bureau of Investigation and other agencies exploited vast troves of data to conduct the largest criminal investigation in U.S. history: into the violent overtaking of the Capitol building on 6 January 2021.

The events of that day unfolded on live television watched by millions. But to identify suspects amid a mob of thousands, investigators had to cast a very wide net and seek the cooperation of tech giants like Google, Facebook, and Snap and of carriers like Verizon and T-Mobile. As Harris painstakingly documents in “How the Police Exploited the Capitol Rioters’ Digital Records,” some of the information the FBI used was intentionally shared by rioters on social media, while other information was gleaned from the kind of data we all heedlessly cast off during the course of the day, like the order for pizza that landed one group of rioters in hot water or the automated license-plate readings that were cited in 20 cases.

The ability to ingest multiple data streams and analyze them to trace rioters’ journeys to, through, and back from the Capitol has led to 950 arrests, with more than half leading to guilty pleas and 40 to guilty verdicts as of this writing. But as the privacy advocates Harris interviewed point out, while these tools helped law enforcement hold some people accountable for their actions that day, those same tools can be used by the state against law-abiding citizens, not just in the United States, but anywhere. And the data we make available (knowingly or not), often for the sake of convenience or as the price of admission, leaves us vulnerable to bad actors, be they governments, corporations, or individuals.

The writer David Brin foretold a version of our current panopticon in his 1998 book The Transparent Society. In it, he acknowledges the risks of surveillance technology but contends that the very ubiquity of that technology is in itself a safeguard against abuse, because it gives everyone the ability to shine a light on the dark corners of individual and institutional behavior. His stance jibes with Harris’s final observation: “In the eternal struggle between security and privacy, the best that digital-rights activists can hope for is to watch the investigators as closely as they are watching us.”

But as Brin points out, watching the watchers isn’t enough to guarantee a free and open society. As data-driven prosecutions for the 6 January insurrection continue, it’s worth considering the linkage Brin makes between liberty and accountability, which he says, “is the one fundamental ingredient on which liberty thrives. Without the accountability that derives from openness—enforceable upon even the mightiest individuals and institutions—how can freedom survive?”

Reference: https://ift.tt/BR9rbMX

Monday, January 30, 2023

RCA’s Lucite Phantom Teleceiver Introduced the Idea of TV

On 20 April 1939, David Sarnoff, president of the Radio Corporation of America, addressed a small crowd outside the RCA pavilion at the New York World’s Fair. “Today we are on the eve of launching a new industry, based on imagination, on scientific research and accomplishment,” he proclaimed. That industry was television.

RCA president David Sarnoff’s speech at the 1939 World’s Fair was broadcast live.

Sarnoff’s speech was notable because it was the first time a news event in the United States was broadcast live on television. Although television technology had been in development for decades, and the BBC had been airing live programs in the United Kingdom since 1929, competing technologies and licensing disputes had kept the U.S. television market from taking off. With the World’s Fair and its theme of the World of Tomorrow, Sarnoff aimed to change that. Ten days after Sarnoff’s speech, the National Broadcasting Company (NBC), a wholly owned subsidiary of RCA, launched a regular slate of television programming, starting with President Franklin Delano Roosevelt’s speech officially opening the fair.

RCA’s Phantom Teleceiver was the TV of tomorrow

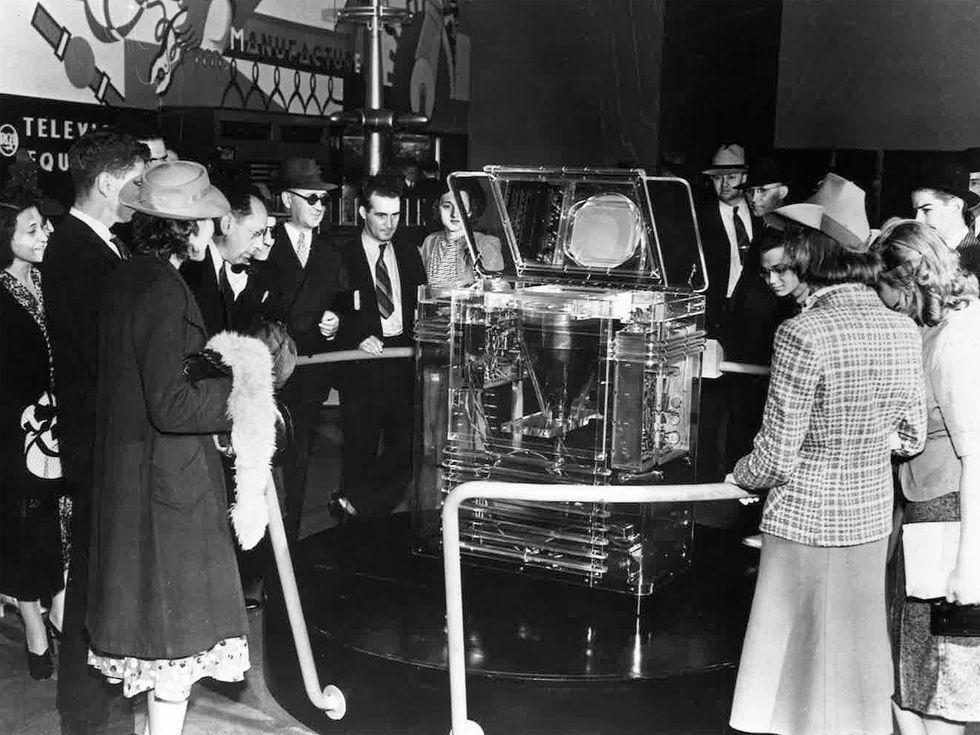

The architecture of RCA’s pavilion at the fair was a nod to the company’s history. Designed by Skidmore & Owings, it was shaped like a radio vacuum tube. But the inside held a vision of the future.

Entering the pavilion, fairgoers encountered the Phantom Teleceiver, RCA’s latest technological wonder. This special model of the TRK-12 television receiver, which today we would call a television set or simply a TV, was housed in a cabinet constructed from DuPont’s new clear plastic, Lucite. The transparent case allowed visitors to inspect the inner workings from all sides.

An unusual aspect of the TRK-12 was its vertically positioned cathode-ray tube, which projected the image upward onto a 30.5-centimeter (12-inch) mirror on the underside of the cabinet lid. Industrial designer John Vassos, who was responsible for creating the shape of RCA’s televisions, found the size of that era’s tubes to be a unique challenge. Had the CRT been positioned horizontally, the television cabinet would have pushed out almost a meter into the room. As it was, the set was a heavyweight, standing 102 cm tall and weighing more than 91 kilograms. The image in the mirror was the reverse of that projected by the CRT, but Vassos must have decided it wasn’t a deal breaker.

According to art historian Danielle Shapiro, the author of John Vassos: Industrial Designer for Modern Life, Vassos drew on the modernist principles of streamlining to design the cabinetry for the TRK-12. In addition to contending with the size of the tube, he had to find a way to dissipate its extreme heat. He chose to integrate vents throughout the cabinet, creating a louver as a design motif. Production sets (meaning all the ones not made out of Lucite for the fair) were crafted from different shades and patterns of walnut with stripes of walnut veneer, so the overall look was of an elegant wooden box.

The Lucite-encased TRK-12 was introduced at the 1939 World’s Fair. RCA

(If you want to see the original World’s Fair TV, it now resides at the MZTV Museum of Television, in Toronto. A clever replica, built by the Early Television Museum with an LCD screen instead of a vintage cathode-ray tube, is at the ACMI in Melbourne, Australia.)

The TRK-12 wasn’t just a TV. It was the first multimedia center. The cabinet housed the television as well as a three-band, all-wave radio and a Victrola switch to attach an optional phonograph, the sound from which would play through the radio speaker. A fidelity selector knob allowed users to switch easily among the different entertainment options, and a single knob controlled the power and volume for all settings. On the left-hand side of the console were two radio knobs (range selector and tuning control), and on the right were three dual-control knobs for the television (vertical and horizontal hold; station selection and fine tuning; and contrast and brightness).

Although the home user could select any of five different television stations and fiddle with the picture quality, a bold-faced warning in the owner’s manual cautioned that only a competent television technician should install the receiver because it had the ability to produce high voltages and electrical shocks. TV was then so novel that the manual devoted a section to explaining “How You Receive Television Pictures”: “Television reception follows the laws governing high frequency wave transmission and reception. Television waves act in many respects like light waves.” So long as you knew how light waves behaved, you were good.

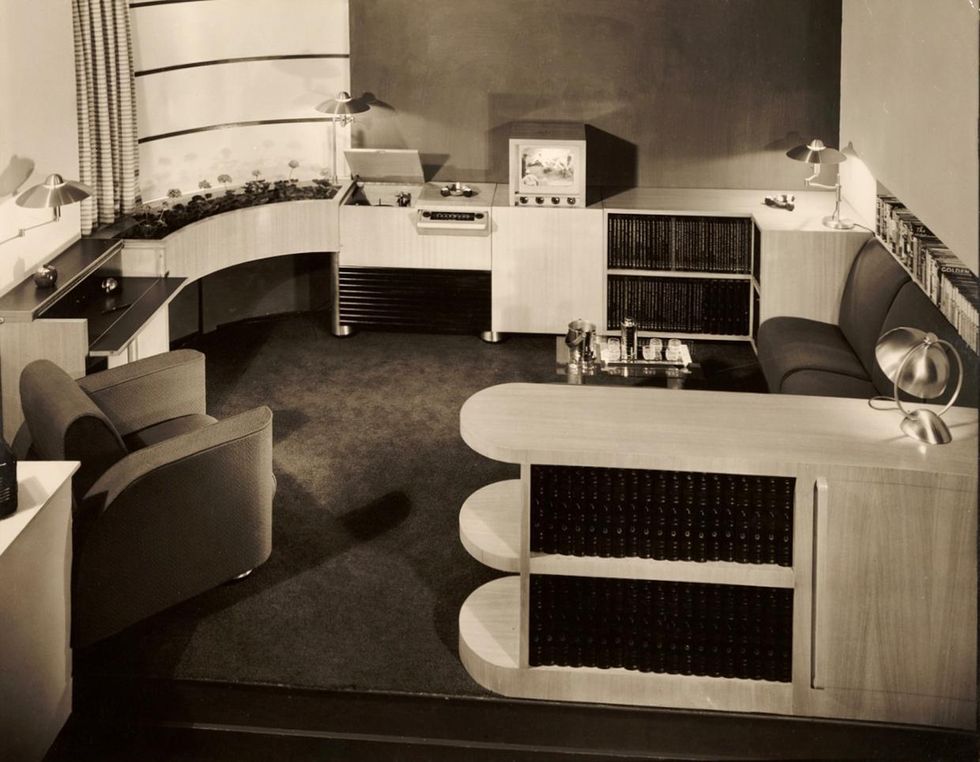

In addition to designing the television sets for the fair, Vassos created two exhibits to help new users envision how these machines could fit into their homes. When David Sarnoff gave his dedication speech, for example, only a few hundred people were able to watch it live simply because so few people owned TV sets. Shapiro argues that Vassos was one of the earliest modern designers to focus on the user experience and try to alleviate the anxiety and frenzy caused by the urban environment. His design for the Radio Living Room of Today blended the latest RCA technology, including a facsimile machine, with contemporary furnishings.

In 1940, Vassos added the Radio Living Room of Tomorrow. This exhibit, dubbed the Musicorner, included dimmable fluorescent lights to allow for ideal television-watching conditions. Foreshadowing cassette recorders and CD burners was a device for recording and producing phonograph records. Tasteful modular cabinets concealed the television and radio receivers, not unlike some style trends today.

RCA designer John Vassos’s stylish Musicorner room incorporated cutting-edge technology for watching TV and recording phonographs. Archives of American Art

Each day, thousands of visitors to the RCA pavilion encountered television, often for the first time, and watched programming on 13 TRK-12 receivers. But if television really was going to be the future, RCA had to convince consumers to buy sets. Throughout the fair’s 18-month run, the company arranged to have four models of television receivers, all designed by Vassos, available for sale at various department stores in the New York metropolitan region.

The smallest of these was the TT-5 tabletop television, which only provided a picture. It plugged into an existing radio to receive sound. The TT-5 was considered the “everyman’s version” and had a starting price of $199 ($4,300 today). Next biggest was the TRK-5, then the TRK-9, and finally the TRK-12, which sold for $600 (nearly $13,000 today). Considering that the list price of a modest new automobile in 1939 was $700 and the average annual income was $1,368, even the everyman’s television remained beyond the reach of most families.
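The affordability comparison above is simple arithmetic on the 1939 figures the article quotes. The sketch below just expresses each set’s price as a share of the average annual income and of a modest car’s list price; the constant and function names are illustrative.

```python
# 1939 figures quoted in the article, in U.S. dollars.
AVERAGE_ANNUAL_INCOME = 1_368
MODEST_CAR_PRICE = 700
TV_PRICES = {"TT-5": 199, "TRK-12": 600}


def price_shares(price, income=AVERAGE_ANNUAL_INCOME, car=MODEST_CAR_PRICE):
    """Return a set's price as a fraction of annual income and of a modest car's price."""
    return price / income, price / car


for model, price in TV_PRICES.items():
    income_share, car_share = price_shares(price)
    print(f"{model}: {income_share:.1%} of a year's income, {car_share:.0%} of a car")
```

By this measure the “everyman’s” TT-5 cost roughly 15 percent of a year’s average income, and the TRK-12 roughly 44 percent, or most of the price of a modest car.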

Part of a continuing series looking at historical artifacts that embrace the boundless potential of technology.

An abridged version of this article appears in the February 2023 print issue as “Yesterday’s TV of Tomorrow.”

Reference: https://ift.tt/SxIzaAd

Massive Yandex code leak reveals Russian search engine’s ranking factors

The Russian logo of Yandex, the country's largest search engine and a tech company with many divisions, inside the company's headquarters. (credit: SOPA Images / Getty Images)

Nearly 45GB of source code files, allegedly stolen by a former employee, have revealed the underpinnings of Russian tech giant Yandex's many apps and services. They also revealed key ranking factors for Yandex's search engine, the kind almost never made public.

The "Yandex git sources" were posted as a torrent file on January 25 and show files seemingly taken in July 2022 and dating back to February 2022. Software engineer Arseniy Shestakov claims that he verified with current and former Yandex employees that some archives "for sure contain modern source code for company services." Yandex told security blog BleepingComputer that "Yandex was not hacked" and that the leak came from a former employee. Yandex stated that it did not "see any threat to user data or platform performance."

The files notably date to February 2022, when Russia began a full-scale invasion of Ukraine. A former executive at Yandex told BleepingComputer that the leak was "political" and noted that the former employee had not tried to sell the code to Yandex competitors. Anti-spam code was also not leaked.

Ford Follows Tesla in Cutting Electric Vehicle Prices

Evolution and Impact of Wi-Fi Technology

Wi-Fi remains the most popular carrier of wireless IP traffic in the emerging era of IoT and 5G, and Wi-Fi standards continue to evolve to support next-generation applications such as positioning, human-computer interfacing, motion and gesture detection, and authentication and security. Wi-Fi is a go-to connectivity technology that is stable, proven, and easy to deploy, and it is in demand across a host of diverse vertical markets, including medical, public safety, offender tracking, industrial, PDA, security monitoring, and others.

Register now for this free webinar!

- The evolution of Wi-Fi technology, standards, and applications

- Key aspects/features of Wi-Fi 6 and Wi-Fi 7

- Wi-Fi market trends and outlook

- Solutions that will help build robust Wi-Fi 6 and Wi-Fi 7 networks

This lecture is based on the speaker’s recent paper: Pahlavan, K. and Krishnamurthy, P., 2021. Evolution and impact of Wi-Fi technology and applications: a historical perspective. International Journal of Wireless Information Networks, 28(1), pp.3-19.

Reference: https://ift.tt/iRXIfmL

‘Recession Resilient’ Climate Start-Ups Shine in Tech Downturn

Sunday, January 29, 2023

Roboticists Want to Give You a Third Arm

What could you do with an extra limb? Consider a surgeon performing a delicate operation, one that needs her expertise and steady hands: all three of them. As her two biological hands manipulate surgical instruments, a third robotic limb that’s attached to her torso plays a supporting role. Or picture a construction worker who is thankful for his extra robotic hand as it braces the heavy beam he’s fastening into place with his other two hands. Imagine wearing an exoskeleton that would let you handle multiple objects simultaneously, like Spider-Man’s nemesis Dr. Octopus. Or contemplate the out-there music a composer could write for a pianist who has 12 fingers to spread across the keyboard.

Such scenarios may seem like science fiction, but recent progress in robotics and neuroscience makes extra robotic limbs conceivable with today’s technology. Our research groups at Imperial College London and the University of Freiburg, in Germany, together with partners in the European project NIMA, are now working to figure out whether such augmentation can be realized in practice to extend human abilities. The main questions we’re tackling involve both neuroscience and neurotechnology: Is the human brain capable of controlling additional body parts as effectively as it controls biological parts? And if so, what neural signals can be used for this control?

We think that extra robotic limbs could be a new form of human augmentation, improving people’s abilities on tasks they can already perform as well as expanding their ability to do things they simply cannot do with their natural human bodies. If humans could easily add and control a third arm, or a third leg, or a few more fingers, they would likely use them in tasks and performances that went beyond the scenarios mentioned here, discovering new behaviors that we can’t yet even imagine.

Levels of human augmentation

Robotic limbs have come a long way in recent decades, and some are already used by people to enhance their abilities. Most are operated via a joystick or other hand controls. For example, that’s how workers on manufacturing lines wield mechanical limbs that hold and manipulate components of a product. Similarly, surgeons who perform robotic surgery sit at a console across the room from the patient. While the surgical robot may have four arms tipped with different tools, the surgeon’s hands can control only two of them at a time. Could we give these surgeons the ability to control four tools simultaneously?

Robotic limbs are also used by people who have amputations or paralysis. That includes people in powered wheelchairs controlling a robotic arm with the chair’s joystick and those who are missing limbs controlling a prosthetic by the actions of their remaining muscles. But a truly mind-controlled prosthesis is a rarity.

The pioneers in brain-controlled prosthetics are people with tetraplegia, who are often paralyzed from the neck down. Some of these people have boldly volunteered for clinical trials of brain implants that enable them to control a robotic limb by thought alone, issuing mental commands that cause a robot arm to lift a drink to their lips or help with other tasks of daily life. These systems fall under the category of brain-machine interfaces (BMI). Other volunteers have used BMI technologies to control computer cursors, enabling them to type out messages, browse the Internet, and more. But most of these BMI systems require brain surgery to insert the neural implant and include hardware that protrudes from the skull, making them suitable only for use in the lab.

Augmentation of the human body can be thought of as having three levels. The first level increases an existing characteristic, in the way that, say, a powered exoskeleton can give the wearer super strength. The second level gives a person a new degree of freedom, such as the ability to move a third arm or a sixth finger, but at a cost—if the extra appendage is controlled by a foot pedal, for example, the user sacrifices normal mobility of the foot to operate the control system. The third level of augmentation, and the least mature technologically, gives a user an extra degree of freedom without taking mobility away from any other body part. Such a system would allow people to use their bodies normally by harnessing some unused neural signals to control the robotic limb. That’s the level that we’re exploring in our research.

Deciphering electrical signals from muscles

Third-level human augmentation can be achieved with invasive BMI implants, but for everyday use, we need a noninvasive way to pick up brain commands from outside the skull. For many research groups, that means relying on tried-and-true electroencephalography (EEG) technology, which uses scalp electrodes to pick up brain signals. Our groups are working on that approach, but we are also exploring another method: using electromyography (EMG) signals produced by muscles. We’ve spent more than a decade investigating how EMG electrodes on the skin’s surface can detect electrical signals from the muscles that we can then decode to reveal the commands sent by spinal neurons.

Electrical signals are the language of the nervous system. Throughout the brain and the peripheral nerves, a neuron “fires” when a certain voltage—some tens of millivolts—builds up within the cell and causes an action potential to travel down its axon, releasing neurotransmitters at junctions, or synapses, with other neurons, and potentially triggering those neurons to fire in turn. When such electrical pulses are generated by a motor neuron in the spinal cord, they travel along an axon that reaches all the way to the target muscle, where they cross special synapses to individual muscle fibers and cause them to contract. We can record these electrical signals, which encode the user’s intentions, and use them for a variety of control purposes.
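The threshold behavior described above is often illustrated with a leaky integrate-and-fire model, a textbook simplification that is not part of the authors’ system. In this sketch the membrane voltage integrates an injected current, and each time it climbs about 20 millivolts above rest to a threshold, the model records a spike and resets. All parameter values and the function name are illustrative assumptions.

```python
import numpy as np


def lif_spike_times(current, dt=1e-4, tau=0.02, v_rest=-0.070, v_threshold=-0.050,
                    v_reset=-0.070, resistance=1e7):
    """Simulate a leaky integrate-and-fire neuron and return spike times in seconds.

    current: 1-D array of injected current (amperes), one value per time step dt.
    """
    v = v_rest
    spikes = []
    for step, i_in in enumerate(current):
        # Euler step of tau * dv/dt = -(v - v_rest) + R * I (the voltage leaks back toward rest)
        v += dt / tau * (-(v - v_rest) + resistance * i_in)
        if v >= v_threshold:   # enough voltage has built up: the neuron fires
            spikes.append(step * dt)
            v = v_reset        # reset after the action potential
    return np.array(spikes)
```

A weak input that never lifts the voltage to threshold produces no spikes, while a stronger input makes the model fire more often, which is how a firing rate can encode the strength of a command.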

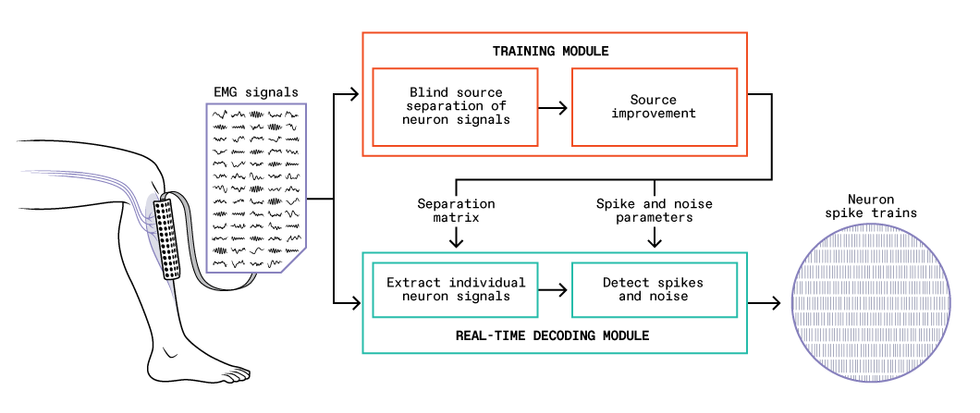

How the Neural Signals Are Decoded

A training module [orange] takes an initial batch of EMG signals read by the electrode array [left], determines how to extract signals of individual neurons, and summarizes the process mathematically as a separation matrix and other parameters. With these tools, the real-time decoding module [green] can efficiently extract individual neurons’ sequences of spikes, or “spike trains” [right], from an ongoing stream of EMG signals. Chris Philpot

Deciphering the individual neural signals based on what can be read by surface EMG, however, is not a simple task. A typical muscle receives signals from hundreds of spinal neurons. Moreover, each axon branches at the muscle and may connect with a hundred or more individual muscle fibers distributed throughout the muscle. A surface EMG electrode picks up a sampling of this cacophony of pulses.
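The mixing problem can be made concrete with a simplified forward model, an assumption for illustration rather than the authors’ model: each motor neuron’s spike train is convolved with a short action-potential waveform whose shape differs from electrode to electrode, and each electrode records the sum over all motor units plus noise. The waveform shapes, firing rate, sizes, and function name below are made up.

```python
import numpy as np


def simulate_surface_emg(n_units=20, n_channels=64, duration_s=2.0, fs=2048,
                         rate_hz=10.0, waveform_len=20, noise_std=0.05, seed=0):
    """Return (emg, spikes): emg is (n_channels, n_samples), spikes is (n_units, n_samples)."""
    rng = np.random.default_rng(seed)
    n_samples = int(duration_s * fs)
    # Each motor neuron fires at random at about rate_hz (Bernoulli approximation of Poisson).
    spikes = (rng.random((n_units, n_samples)) < rate_hz / fs).astype(float)
    emg = np.zeros((n_channels, n_samples))
    for unit in range(n_units):
        for ch in range(n_channels):
            # Hypothetical action-potential shape seen by this electrode for this unit.
            waveform = rng.normal(size=waveform_len) * np.hanning(waveform_len)
            emg[ch] += np.convolve(spikes[unit], waveform)[:n_samples]
    emg += noise_std * rng.normal(size=emg.shape)
    return emg, spikes
```

Every channel ends up as a sum of overlapping waveforms from all units, which is the tangle a decomposition has to undo.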

A breakthrough in noninvasive neural interfaces came with the discovery in 2010 that the signals picked up by high-density EMG, in which tens to hundreds of electrodes are fastened to the skin, can be disentangled, providing information about the commands sent by individual motor neurons in the spine. Such information had previously been obtained only with invasive electrodes in muscles or nerves. Our high-density surface electrodes provide good sampling over multiple locations, enabling us to identify and decode the activity of a relatively large proportion of the spinal motor neurons involved in a task. And we can now do it in real time, which suggests that we can develop noninvasive BMI systems based on signals from the spinal cord.

The current version of our system consists of two parts: a training module and a real-time decoding module. To begin, with the EMG electrode grid attached to their skin, the user performs gentle muscle contractions, and we feed the recorded EMG signals into the training module. This module performs the difficult task of identifying the individual motor neuron pulses (also called spikes) that make up the EMG signals. The module analyzes how the EMG signals and the inferred neural spikes are related, which it summarizes in a set of parameters that can then be used with a much simpler mathematical prescription to translate the EMG signals into sequences of spikes from individual neurons.
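The training step can be sketched as blind source separation. This is a simplification, not the authors’ algorithm: real EMG is better described as a convolutive mixture (each spike produces a waveform spread over time), which published decomposition methods typically handle by also using time-delayed copies of each channel. The sketch assumes an instantaneous linear mixture and estimates a separation matrix from a training batch by whitening followed by FastICA with a cubic nonlinearity. The function name and the choice of FastICA are assumptions.

```python
import numpy as np


def train_separation(emg, n_sources, n_iter=200, tol=1e-8, seed=0):
    """Estimate parameters that map EMG samples to source (spike-train) estimates.

    emg: (n_channels, n_samples) training batch.
    Returns (mean, separation) such that sources ~= separation @ (emg - mean).
    """
    rng = np.random.default_rng(seed)
    mean = emg.mean(axis=1, keepdims=True)
    x = emg - mean
    # Whitening: keep the top principal components and scale them to unit variance.
    eigvals, eigvecs = np.linalg.eigh(np.cov(x))
    top = np.argsort(eigvals)[::-1][:n_sources]
    whitening = (eigvecs[:, top] / np.sqrt(eigvals[top])).T
    z = whitening @ x
    # FastICA with deflation: find one maximally non-Gaussian direction at a time.
    w_all = np.zeros((n_sources, n_sources))
    for k in range(n_sources):
        w = rng.normal(size=n_sources)
        w /= np.linalg.norm(w)
        for _ in range(n_iter):
            u = w @ z
            w_new = (z * u**3).mean(axis=1) - 3 * w
            w_new -= w_all[:k].T @ (w_all[:k] @ w_new)  # stay orthogonal to earlier sources
            w_new /= np.linalg.norm(w_new)
            converged = abs(abs(w_new @ w) - 1) < tol
            w = w_new
            if converged:
                break
        w_all[k] = w
    separation = w_all @ whitening
    # Resolve the sign ambiguity so that spikes appear as positive peaks (positive skew).
    estimates = separation @ x
    centered = estimates - estimates.mean(axis=1, keepdims=True)
    signs = np.sign((centered**3).mean(axis=1))
    return mean, separation * signs[:, None]
```

The expensive, iterative part runs once per training batch; what it hands on is just a mean vector and a matrix.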

With these parameters in hand, the decoding module can take new EMG signals and extract the individual motor neuron activity in real time. The training module requires a lot of computation and would be too slow to perform real-time control itself, but it usually has to be run only once each time the EMG electrode grid is fixed in place on a user. By contrast, the decoding algorithm is very efficient, with latencies as low as a few milliseconds, which bodes well for possible self-contained wearable BMI systems. We validated the accuracy of our system by comparing its results with signals obtained concurrently by two invasive EMG electrodes inserted into the user’s muscle.
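Once the parameters exist, decoding each incoming chunk reduces to a matrix multiplication and a threshold, which is why it can run with low latency. The sketch below keeps the instantaneous-mixture simplification and assumes training produced a `mean` vector, a `separation` matrix, and per-neuron thresholds, with a spike declared wherever a source estimate exceeds its threshold. The class and parameter names are hypothetical.

```python
import numpy as np


class SpikeDecoder:
    """Apply stored training parameters to streaming EMG chunks."""

    def __init__(self, mean, separation, thresholds):
        self.mean = np.asarray(mean, dtype=float).reshape(-1, 1)       # (n_channels, 1)
        self.separation = np.asarray(separation, dtype=float)          # (n_neurons, n_channels)
        self.thresholds = np.asarray(thresholds, dtype=float).reshape(-1, 1)

    def decode(self, chunk):
        """chunk: (n_channels, n_samples) -> boolean spike raster (n_neurons, n_samples)."""
        sources = self.separation @ (chunk - self.mean)
        return sources > self.thresholds
```

For a quick check, the pseudoinverse of a known synthetic mixing matrix can stand in for a trained separation matrix. A fuller decoder would also keep only local peaks, so that one discharge spread over several samples is not counted more than once.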

Exploiting extra bandwidth in neural signals

Developing this real-time method to extract signals from spinal motor neurons was the key to our present work on controlling extra robotic limbs. While studying these neural signals, we noticed that they have, essentially, extra bandwidth. The low-frequency part of the signal (below about 7 hertz) is converted into muscular force, but the signal also has components at higher frequencies, such as those in the beta band at 13 to 30 Hz, which are too high to control a muscle and seem to go unused. We don’t know why the spinal neurons send these higher-frequency signals; perhaps the redundancy is a buffer in case of new conditions that require adaptation. Whatever the reason, humans evolved a nervous system in which the signal that comes out of the spinal cord has much richer information than is needed to command a muscle.
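The frequency split described here can be illustrated by measuring how much of a neural-drive signal’s power falls below about 7 Hz and how much falls in the 13 to 30 Hz beta band. This sketch assumes the drive is available as a uniformly sampled signal (for example, a smoothed sum of decoded spike trains) and uses a plain FFT periodogram; the function name and test signal are illustrative.

```python
import numpy as np


def band_powers(signal, fs, bands):
    """Return the fraction of total (non-DC) power in each frequency band.

    signal: 1-D array sampled at fs Hz; bands: dict mapping name -> (low_hz, high_hz).
    """
    signal = np.asarray(signal, dtype=float)
    signal = signal - signal.mean()
    spectrum = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(signal.size, d=1 / fs)
    total = spectrum[1:].sum()
    return {name: spectrum[(freqs >= lo) & (freqs <= hi)].sum() / total
            for name, (lo, hi) in bands.items()}


BANDS = {"force (below ~7 Hz)": (0.0, 7.0), "beta (13 to 30 Hz)": (13.0, 30.0)}
```

A signal made of a 3 Hz component and a weaker 20 Hz component splits cleanly between the two bands, which is the kind of separation the authors exploit.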

That discovery set us thinking about what could be done with the spare frequencies. In particular, we wondered if we could take that extraneous neural information and use it to control a robotic limb. But we didn’t know if people would be able to voluntarily control this part of the signal separately from the part they used to control their muscles. So we designed an experiment to find out.

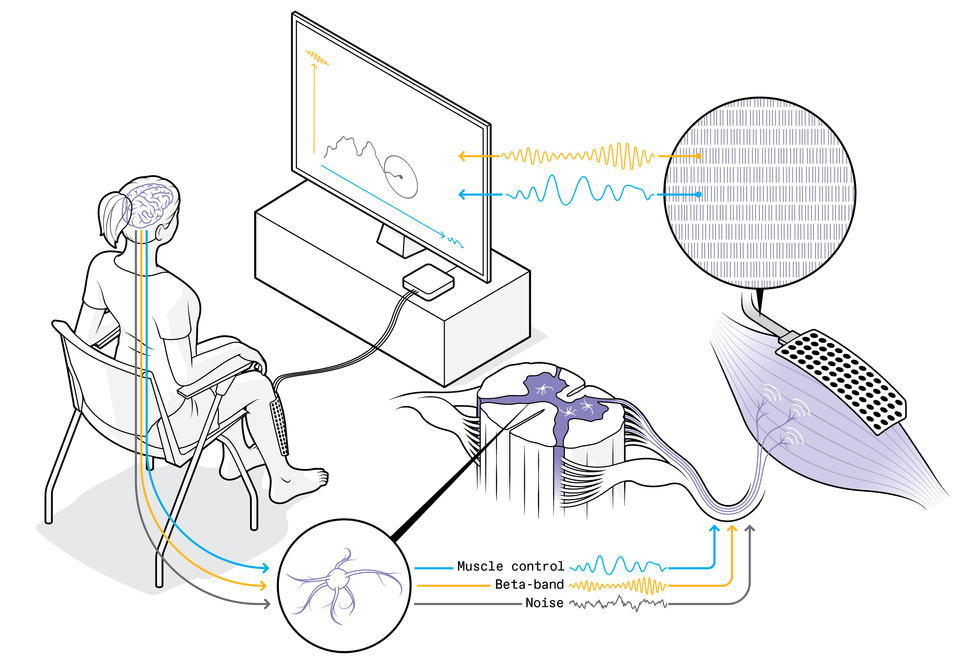

Neural Control Demonstrated

A volunteer exploits unused neural bandwidth to direct the motion of a cursor on the screen in front of her. Neural signals pass from her brain, through spinal neurons, to the muscle in her shin, where they are read by an electromyography (EMG) electrode array on her leg and deciphered in real time. These signals include low-frequency components [blue] that control muscle contractions, higher frequencies [beta band, yellow] with no known biological purpose, and noise [gray]. Chris Philpot; Source: M. Bräcklein et al., Journal of Neural Engineering

In our first proof-of-concept experiment, volunteers tried to use their spare neural capacity to control computer cursors. The setup was simple, though the neural mechanism and the algorithms involved were sophisticated. Each volunteer sat in front of a screen, and we placed an EMG system on their leg, with 64 electrodes in a 4-by-10-centimeter patch stuck to their shin over the tibialis anterior muscle, which flexes the foot upward when it contracts. The tibialis has been a workhorse for our experiments: It occupies a large area close to the skin, and its muscle fibers are oriented along the leg, which together make it ideal for decoding the activity of spinal motor neurons that innervate it.

These are some results from the experiment in which low- and high-frequency neural signals, respectively, controlled horizontal and vertical motion of a computer cursor. Colored ellipses (with plus signs at centers) show the target areas. The top three diagrams show the trajectories (each one starting at the lower left) achieved for each target across three trials by one user. At bottom, dots indicate the positions achieved across many trials and users. Colored crosses mark the mean positions and the range of results for each target. Source: M. Bräcklein et al., Journal of Neural Engineering

We asked our volunteers to steadily contract the tibialis, essentially holding it tense, and throughout the experiment we looked at the variations within the extracted neural signals. We separated these signals into the low frequencies that controlled the muscle contraction and spare frequencies at about 20 Hz in the beta band, and we linked these two components respectively to the horizontal and vertical control of a cursor on a computer screen. We asked the volunteers to try to move the cursor around the screen, reaching all parts of the space, but we didn’t, and indeed couldn’t, explain to them how to do that. They had to rely on the visual feedback of the cursor’s position and let their brains figure out how to make it move.
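The article does not describe the decoding pipeline in detail. The sketch below only illustrates the idea of splitting one signal into two control channels. It assumes a single, already-estimated neural drive time series; in the real setup that series is decoded from the 64-electrode EMG array. The window's mean level stands in for the slow, contraction-related component and drives x. The share of power in a 15 to 30 Hz band drives y, and those band edges are an assumption bracketing the roughly 20 Hz beta activity mentioned above. The function names, the sampling rate, and the `max_drive` scale factor are all hypothetical.

```python
import math


def dft_power(signal, k):
    """Squared magnitude of the k-th DFT coefficient, evaluated naively in O(n)."""
    n = len(signal)
    re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(signal))
    return re * re + im * im


def band_power(signal, fs, f_lo, f_hi):
    """Total DFT power in bins with f_lo <= frequency < f_hi (the DC bin is skipped)."""
    n = len(signal)
    return sum(
        dft_power(signal, k)
        for k in range(1, n // 2 + 1)
        if f_lo <= k * fs / n < f_hi
    )


def cursor_position(drive, fs, max_drive, beta_band=(15.0, 30.0)):
    """Map one window of neural drive to hypothetical (x, y) cursor coordinates.

    x: mean drive over the window (the slow, contraction-related part), scaled by max_drive.
    y: fraction of the non-DC power that falls inside beta_band.
    """
    x = (sum(drive) / len(drive)) / max_drive
    total = band_power(drive, fs, 0.0, fs / 2 + 1e-9)  # all bins up to Nyquist
    beta = band_power(drive, fs, *beta_band)
    energy = len(drive) * sum(v * v for v in drive)  # Parseval: power summed over all bins
    # A flat window has (numerically) no oscillation, so report zero beta share.
    y = beta / total if total > 1e-12 * energy else 0.0
    return x, y


# Hypothetical 1-second window sampled at 200 Hz: a steady contraction level plus
# a slow 2 Hz fluctuation and a smaller 20 Hz (beta-band) oscillation.
fs = 200
drive = [
    10 + math.sin(2 * math.pi * 2 * i / fs) + 0.5 * math.sin(2 * math.pi * 20 * i / fs)
    for i in range(fs)
]
x, y = cursor_position(drive, fs, max_drive=20.0)
print(f"x = {x:.2f}, y = {y:.2f}")  # x = 0.50, y = 0.20
```

The naive DFT keeps the sketch free of dependencies. A real-time system would more likely use an FFT or band-pass filters over a sliding window. In both cases the point is the same: one signal yields two quantities that a user could, in principle, learn to vary independently.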

Remarkably, without knowing exactly what they were doing, these volunteers mastered the task within minutes, zipping the cursor around the screen, albeit shakily. Beginning with one neural command signal—contract the tibialis anterior muscle—they were learning to develop a second signal to control the computer cursor’s vertical motion, independently from the muscle control (which directed the cursor’s horizontal motion). We were surprised and excited by how easily they achieved this big first step toward finding a neural control channel separate from natural motor tasks. But we also saw that the control was not accurate enough for practical use. Our next step will be to see if more accurate signals can be obtained and if people can use them to control a robotic limb while also performing independent natural movements.

We are also interested in understanding more about how the brain performs feats like the cursor control. In a recent study using a variation of the cursor task, we concurrently used EEG to see what was happening in the user’s brain, particularly in the area associated with the voluntary control of movements. We were excited to discover that the changes happening to the extra beta-band neural signals arriving at the muscles were tightly related to similar changes at the brain level. As mentioned, the beta neural signals remain something of a mystery since they play no known role in controlling muscles, and it isn’t even clear where they originate. Our result suggests that our volunteers were learning to modulate brain activity that was sent down to the muscles as beta signals. This important finding is helping us unravel the potential mechanisms behind these beta signals.

Meanwhile, at Imperial College London we have set up a system for testing these new technologies with extra robotic limbs, which we call the MUlti-limb Virtual Environment, or MUVE. Among other capabilities, MUVE will enable users to work with as many as four lightweight wearable robotic arms in scenarios simulated by virtual reality. We plan to make the system open for use by other researchers worldwide.

Next steps in human augmentation

Connecting our control technology to a robotic arm or other external device is a natural next step, and we’re actively pursuing that goal. The real challenge, however, will not be attaching the hardware, but rather identifying multiple sources of control that are accurate enough to perform complex and precise actions with the robotic body parts.

We are also investigating how the technology will affect the neural processes of the people who use it. For example, what will happen after someone has six months of experience using an extra robotic arm? Would the natural plasticity of the brain enable them to adapt and gain a more intuitive kind of control? A person born with six-fingered hands can have fully developed brain regions dedicated to controlling the extra digits, leading to exceptional abilities of manipulation. Could a user of our system develop comparable dexterity over time? We’re also wondering how much cognitive load will be involved in controlling an extra limb. If people can direct such a limb only when they’re focusing intently on it in a lab setting, this technology may not be useful. However, if a user can casually employ an extra hand while doing an everyday task like making a sandwich, then that would mean the technology is suited for routine use.

Whatever the reason, humans evolved a nervous system in which the signal that comes out of the spinal cord has much richer information than is needed to command a muscle.

Other research groups are pursuing the same neuroscience questions. Some are experimenting with control mechanisms involving either scalp-based EEG or neural implants, while others are working on muscle signals. It is early days for movement augmentation, and researchers around the world have just begun to address the most fundamental questions of this emerging field.

Two practical questions stand out: Can we achieve neural control of extra robotic limbs concurrently with natural movement, and can the system work without the user’s exclusive concentration? If the answer to either of these questions is no, we won’t have a practical technology, but we’ll still have an interesting new tool for research into the neuroscience of motor control. If the answer to both questions is yes, we may be ready to enter a new era of human augmentation. For now, our (biological) fingers are crossed.

Reference: https://ift.tt/KIS1kEp

Saturday, January 28, 2023

Why EVs Aren't a Climate Change Panacea

“Electric cars will not save the climate. It is completely wrong,” Fatih Birol, Executive Director of the International Energy Agency (IEA), has stated.

If Birol were from Maine, he might have simply observed, “You can’t get there from here.”

This is not to imply in any way that electric vehicles are worthless. Analysis by the International Council on Clean Transportation (ICCT) argues that EVs are the quickest means to decarbonize motorized transport. However, EVs by themselves will not achieve the goal of net zero by 2050.

There are two major reasons for this. First, EVs are not going to reach the numbers required by 2050 to make their needed contribution to net zero goals. Second, even if they did, a host of other personal, social, and economic activities must be modified to reach the total net zero mark.

For instance, research by Alexandre Milovanoff at the University of Toronto and his colleagues (described in depth in a recent Spectrum article) demonstrates that the U.S. must have 90 percent of its vehicles, or some 350 million EVs, on the road by 2050 in order to hit its emission targets. The likelihood of this occurring is infinitesimal. Some estimates indicate that about 40 percent of vehicles on U.S. roads will still be ICE vehicles in 2050, while others put the share at less than half that figure.
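Some back-of-the-envelope arithmetic shows how far apart the target and the projections are. The sketch rests on two simplifying assumptions: the total 2050 fleet is the same in both scenarios, and every vehicle that is not an ICE vehicle is an EV. `fleet_gap` is an illustrative helper and is not part of the cited research.

```python
def fleet_gap(evs_needed, required_share, projected_ice_share):
    """Compare the EVs a target requires with the EVs a projected ICE share leaves room for.

    Simplifying assumptions (hypothetical): the total fleet is the same in both
    scenarios, and every vehicle that is not ICE is an EV.
    """
    total_fleet = evs_needed / required_share  # implied vehicles on the road
    projected_evs = total_fleet * (1 - projected_ice_share)
    shortfall = evs_needed - projected_evs
    return total_fleet, projected_evs, shortfall


total, projected, shortfall = fleet_gap(350e6, 0.90, 0.40)
print(f"fleet ~{total / 1e6:.0f}M, EVs ~{projected / 1e6:.0f}M, short ~{shortfall / 1e6:.0f}M")
# fleet ~389M, EVs ~233M, short ~117M
```

If 350 million EVs make up 90 percent of the fleet, the implied fleet is about 389 million vehicles. If 40 percent of that fleet were still ICE vehicles, only about 233 million would be EVs, roughly 117 million short of the target.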

For the U.S. to hit the 90 percent EV target, sales of all new ICE vehicles across the U.S. must cease by 2038 at the latest, according to research company BloombergNEF (BNEF). Greenpeace, on the other hand, argues that sales of all diesel and petrol vehicles, including hybrids, must end by 2030 to meet such a target. However, achieving either goal would likely require governments to offer hundreds of billions of dollars, if not trillions, in EV subsidies to ICE owners over the next decade, not to mention significant investments in EV charging infrastructure and the electrical grid. ICE vehicle households would also have to be convinced that they would not be giving up activities by becoming EV-only households.

As a reality check, current estimates for the number of ICE vehicles still on the road worldwide in 2050 range from a low of 1.25 billion to more than 2 billion.

Even assuming that the required EV targets were met in the U.S. and elsewhere, it still would not be sufficient to meet net zero 2050 emission targets. Transportation accounts for only 27 percent of greenhouse gas (GHG) emissions in the U.S.; the sources of the other 73 percent of GHG emissions must be reduced as well. Even within the transportation sector, more than 15 percent of GHG emissions come from air travel, rail travel, and shipping. These will also have to be decarbonized.

Moreover, for EVs themselves to become true zero-emission vehicles, everything in their supply chain, from mining to electricity production, must be nearly net-zero emission as well. Today, depending on the EV model and where it charges, and assuming it is a battery electric vehicle rather than a hybrid, it may need to be driven anywhere from 8,400 to 13,500 miles (or, by some controversial estimates, significantly more) before its total GHG emissions fall below those of an ICE vehicle. This is because manufacturing an EV creates 30 to 40 percent more emissions than manufacturing an ICE vehicle, mainly from battery production.

In states (or countries) with a high proportion of coal-generated electricity, the miles needed to break even climb higher. In Poland and China, for example, an EV would need to be driven 78,700 miles to break even. Accounting only for emissions from miles driven, however, BEV cars and trucks appear cleaner than ICE equivalents nearly everywhere in the U.S. today. As electricity increasingly comes from renewables, total electric vehicle GHG emissions will continue downward, but that will take at least a decade to happen everywhere across the U.S. (assuming policy roadblocks disappear), and even longer elsewhere.
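The break-even distance follows from simple accounting. An EV starts with a manufacturing emissions deficit and pays it back through lower per-mile emissions, so the break-even point is the extra manufacturing emissions divided by the per-mile saving. The sketch below uses made-up inputs chosen only to show the mechanics: 3,000 kg of extra CO2e from manufacturing, 0.375 kg/mile for the ICE vehicle, and 0.125 kg/mile for the EV. It ignores battery replacement, maintenance, and end-of-life emissions. The 8,400 to 13,500-mile and 78,700-mile figures above come from the cited analyses, not from this code. `break_even_miles` is a hypothetical helper.

```python
import math


def break_even_miles(extra_manufacturing_kg, ice_kg_per_mile, ev_kg_per_mile):
    """Miles an EV must be driven before its cumulative emissions fall below an ICE vehicle's.

    extra_manufacturing_kg: additional CO2e emitted building the EV (mostly the battery).
    ice_kg_per_mile, ev_kg_per_mile: per-mile CO2e from fuel and from grid electricity.
    Returns math.inf when the EV's per-mile emissions are not lower, i.e., it never catches up.
    """
    saving_per_mile = ice_kg_per_mile - ev_kg_per_mile
    if saving_per_mile <= 0:
        return math.inf
    return extra_manufacturing_kg / saving_per_mile


# Hypothetical inputs, chosen only to show the mechanics.
print(break_even_miles(3000, 0.375, 0.125))   # 12000.0 (cleaner grid)
print(break_even_miles(3000, 0.375, 0.3125))  # 48000.0 (coal-heavy grid)
```

Raising the EV's per-mile figure to reflect a coal-heavy grid shrinks the per-mile saving, which quadruples the break-even distance in this toy case. That is the effect behind the Poland and China example. If the grid were dirty enough that the EV's per-mile emissions matched or exceeded the ICE vehicle's, the EV would never break even.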

If EVs aren’t enough, what else is needed?

Given that EVs, let alone the rest of the transportation sector, likely won’t hit net zero 2050 targets, what additional actions are being advanced to reduce GHG emissions?

A high priority, says IEA’s Birol, is investment in energy-related technology research and development across the board, and in putting those technologies into practice. According to Birol, “IEA analysis shows that about half the reductions to get to net zero emissions in 2050 will need to come from technologies that are not yet ready for market.”

Many of these new technologies will be aimed at improving the efficient use of fossil fuels, which will not be disappearing anytime soon. The IEA expects that energy efficiency improvement, such as the increased use of variable speed electric motors, will lead to a 40 percent reduction in energy-related GHG emissions over the next twenty years.

But even if these hoped-for technological improvements arrive, and most certainly if they do not, the public and businesses are expected to make more energy-conscious decisions to close what the United Nations says is the expected 2050 “emissions gap.” Environmental groups foresee the public needing to use electrified mass transit, reduce long-haul flights (for business as well as pleasure), increase telework, walk and cycle to work or stores, change their diet to eat more vegetables, or, if absolutely needed, drive only small EVs. Another expectation is that homeowners and businesses will become “fully electrified” by replacing oil, propane, and gas furnaces with heat pumps, replacing gas-fired stoves, and installing solar power and battery systems.

Dronning Louise’s Bro (Queen Louise’s Bridge) connects inner Copenhagen and Nørrebro and is frequented by many cyclists and pedestrians every day. Frédéric Soltan/Corbis/Getty Images


Underpinning the behavioral changes being urged (or encouraged by legislation) is the notion of rejecting the current car-centric culture and completely rethinking what personal mobility means. For example, researchers at the University of Oxford in the U.K. argue that “Focusing solely on electric vehicles is slowing down the race to zero emissions.” Their study found “emissions from cycling can be more than 30 times lower for each trip than driving a fossil fuel car, and about ten times lower than driving an electric one.” If just one out of five urban residents in Europe permanently changed from driving to cycling, emissions from automobiles would be cut by 8 percent, the study reports.

Even then, Oxford researchers concede, breaking the car’s mental grip on people is not going to be easy, given the generally poor state of public transportation across much of the globe.

Behavioral change is hard

How willing are people to break their car dependency and change other energy-related behaviors to address climate change? The answer is perhaps somewhat, but maybe not much. A Pew Research Center survey of seventeen countries with advanced economies, taken in late 2021, indicated that 80 percent of those surveyed were willing to alter how they live and work to combat climate change.

However, a Kantar Public survey of ten of the same countries, taken at about the same time, gives a less positive view, with only 51 percent of those polled stating they would alter their lifestyles. In fact, some 74 percent of those polled indicated they were already “proud of what [they are] currently doing” to combat climate change.

What both polls failed to explore is which specific behaviors respondents would be willing to permanently change or give up in their lives to combat climate change.

For instance, how many urban dwellers, if told that they must forever give up their cars and instead walk, cycle, or take public transportation, would willingly agree to do so? And how many of those who agreed would also consent to go vegetarian, telework, and forsake trips abroad for vacation?

It is one thing to answer a poll indicating a willingness to change, and quite another to “walk the talk,” especially if there are personal, social, or economic inconveniences or costs involved. For instance, recent U.S. survey information shows that while 22 percent of new car buyers expressed interest in a battery electric vehicle (BEV), only 5 percent actually bought one.

Granted, there are several cities where living without a vehicle is doable, like Utrecht in the Netherlands, where in 2019, 48 percent of residents’ trips were made by cycling, or London, where nearly two-thirds of all trips taken that same year were made by walking, cycling, or public transportation. Even in a few U.S. cities, it might be possible to live without a car.

The world’s largest bike parking facility, Stationsplein Bicycle Parking near Utrecht Central Station in Utrecht, Netherlands, has 12,500 parking places. Abdullah Asiran/Anadolu Agency/Getty Images


However, in countless other urban areas, especially across most of the U.S., even those wishing to forsake owning a car would find it very difficult to do so without a massive influx of investment into all forms of public transport and personal mobility to eliminate the scores of US transit deserts.

As Tony Dutzik of the environmental advocacy group Frontier Group has written, in the U.S. “the price of admission to jobs, education and recreation is owning a car.” That’s especially true if you are a poor urbanite. Owning a reliable automobile has long been one of the few successful means of getting out of poverty.

Massive investment in new public transportation in the U.S. is unlikely, given its unpopularity with politicians and the public alike. This unpopularity has translated into aging and poorly maintained bus, train, and transit systems that few look forward to using. The American Society of Civil Engineers gives the current state of American public transportation a grade of D- and says today’s $176 billion investment backlog is expected to grow to $250 billion through 2029.

While the $89 billion targeted to public transportation in the recently passed Infrastructure Investment and Jobs Act will help, it also contains more than $351 billion for highways over the next five years. Hundreds of billions in annual investment are needed not only to fix the current public transport system but to build new ones to significantly reduce car dependency in America. Doing so would still take decades to complete.

Yet even if such an investment were made in public transportation, unless its service is competitive with an EV or ICE vehicle in terms of cost, reliability, and convenience, it will not be used. With EVs costing less to operate than ICE vehicles, that competitive hurdle will rise, despite moves to offer free transit rides. Then there is the social stigma attached to riding public transportation, which needs to be overcome as well.

A few experts proclaim that ride-sharing using autonomous vehicles will separate people from their cars. Some even claim that such AV sharing signals both the end of individual car ownership and the end of the need to invest in public transportation. Both outcomes are far from likely.

Other suggestions include redesigning cities to be more compact and more electrified, which would eliminate most of the need for personal vehicles to meet basic transportation needs. Again, doing so at the scale needed would take decades and untold billions of dollars. The San Diego, California, region has decided to spend $160 billion, as a way to meet California’s net zero objectives, to create “a collection of walkable villages serviced by bustling (fee-free) train stations and on-demand shuttles” by 2050. However, there has been public pushback over how to pay for the plan and its push to decrease personal driving by imposing a mileage tax.

According to University of Michigan public policy expert John Leslie King, the challenge of getting to net zero by 2050 is that each decarbonization proposal being made is only part of the overall solution. He notes, “You must achieve all the goals, or you don’t win. The cost of doing each is daunting, and the total cost goes up as you concatenate them.”

Concatenated costs also include changing multiple personal behaviors. It is unlikely that automakers, having committed more than a trillion dollars so far to EVs and charging infrastructure, are going to support depriving the public of the activities it enjoys today as the price of shifting to EVs. A war on EVs will be hard fought.

Should Policies Nudge or Shove?

The cost concatenation problem arises not only at a national level, but at countless local levels as well. Massachusetts’ new governor Maura Healey, for example, has set ambitious goals of having at least 1 million EVs on the road, converting 1 million fossil-fuel-burning furnaces in homes and buildings to heat-pump systems, and achieving a 100 percent clean electricity supply in the state by 2030.

The number of Massachusetts households that can afford or are willing to buy an EV or convert their homes to a heat pump system in the next eight years, even with a current state median household income of $89,000 and subsidies, is likely significantly smaller than the targets set. So what happens if, by 2030, the numbers are well below target, not only in Massachusetts but in other states like California, New York, or Illinois that also have aggressive GHG emission reduction targets?

Will governments keep encouraging behavioral changes to combat climate change or, in frustration or desperation, begin mandating them? And if they do mandate them, will there be a tipping point that spurs massive social resistance?

For example, dairy farmers in the Netherlands have been protesting government plans to force them to cut their nitrogen emissions. This will require dairy farms to reduce their livestock, which will make it difficult or impossible for many to stay in business. The Dutch government estimates that 11,200 farms must close and another 17,600 must reduce their livestock numbers. The government says farmers who do not comply will have their farms taken away through forced buyouts starting in 2023.

California admits that getting to a zero-carbon transportation system by 2045 means car owners must travel 25 percent below 1990 levels by 2030 and even more by 2045. If drivers fail to do so, will California impose weekly or monthly driving quotas or punitive per-mile driving taxes, along with mandating mileage data from vehicles that are ever more connected to the Internet? The San Diego backlash over a mileage tax may be just the beginning.

“EVs,” notes King, “pull an invisible trailer filled with required major lifestyle changes that the public is not yet aware of.”

When it does, do not expect the public to acquiesce quietly.

In the final article of the series, we explore potential unanticipated consequences of transitioning to EVs at scale.

Reference: https://ift.tt/dXnVMze

Here’s How Apptronik Is Making Their Humanoid Robot

Apptronik, a Texas-based robotics company with its roots in the Human Centered Robotics Lab at University of Texas at Austin, has spent the last few years working towards a practical, general purpose humanoid robot. By designing their robot (called Apollo) completely from the ground up, including electronics and actuators, Apptronik is hoping that they’ll be able to deliver something affordable, reliable, and broadly useful. But at the moment, the most successful robots are not generalized systems—they’re uni-taskers, robots that can do one specific task very well but more or less nothing else. A general purpose robot, especially one in a human form factor, would have enormous potential. But the challenge is enormous, too.

So why does Apptronik believe that they have the answer to general purpose humanoid robots with Apollo? To find out, we spoke with Apptronik’s founders, CEO Jeff Cardenas and CTO Nick Paine.

IEEE Spectrum: Why are you developing a general purpose robot when the most successful robots in the supply chain focus on specific tasks?

Nick Paine: It’s about our level of ambition. A specialized tool is always going to beat a general tool at one task, but if you’re trying to solve ten tasks, or 100 tasks, or 1000 tasks, it’s more logical to put your effort into a single versatile hardware platform with specialized software that solves a myriad of different problems.

How do you know that you’ve reached an inflection point where building a general purpose commercial humanoid is now realistic, when it wasn’t before?

Paine: There are a number of different things. For one, Moore’s Law has slowed down, but computers are evolving in a way that has helped advance the complexity of algorithms that can be deployed on mobile systems. Also, there are new algorithms that have been developed recently that have enabled advancements in legged locomotion, machine vision, and manipulation. And along with algorithmic improvements, there have been sensing improvements. All of this has influenced the ability to design these types of legged systems for unstructured environments.

Jeff Cardenas: I think it’s taken decades for it to be the right time. After many, many iterations as a company, we’ve gotten to the point where we’ve said, “Okay, we see all the pieces to where we believe we can build a robust, capable, affordable system that can really go out and do work.” It’s still the beginning, but we’re now at an inflection point where there’s demand from the market, and we can get these out into the world.

The reason that I got into robotics is that I was sick of seeing robots just dancing all the time. I really wanted to make robots that could be useful in the world.

—Nick Paine, CTO Apptronik

Why did you need to develop and test 30 different actuators for Apollo, and how did you know that the 30th actuator was the right one?

Paine: The reason for the variety was that we take a first-principles approach to designing robotic systems. The way you control the system really impacts how you design the system, and that goes all the way down to the actuators. A certain type of actuator is not always the silver bullet: every actuator has its strengths and weaknesses, and we’ve explored that space to understand the limitations of physics to guide us toward the right solutions.

With your focus on making a system that’s affordable, how much are you relying on software to help you minimize hardware costs?

Paine: Some groups have tried masking the deficiencies of cheap, low-quality hardware with software. That’s not at all the approach we’re taking. We are leaning on our experience building these kinds of systems over the years from a first-principles approach. Building from the core requirements for this type of system, we’ve found a solution that hits our performance targets while also being far more mass producible compared to anything we’ve seen in this space previously. We’re really excited about the solution that we’ve found.

How much effort are you putting into software at this stage? How will you teach Apollo to do useful things?

Paine: There are some basic applications that we need to solve for Apollo to be fundamentally useful. It needs to be able to walk around, to use its upper body and its arms to interact with the environment. Those are the core capabilities that we’re working on, and once those are at a certain level of maturity, that’s where we can open up the platform for third-party application developers to build on top of that.

Cardenas: If you look at Willow Garage with the PR2, they had a similar approach, which was to build a solid hardware platform, create a powerful API, and then let others build applications on it. But then you’re really putting your destiny in the hands of other developers. One of the things that we learned from that is if you want to enable that future, you have to prove that initial utility. So what we’re doing is handling the full stack development on the initial applications, which will be targeting supply chain and logistics.

NASA officials have expressed their interest in Apptronik developing “technology and talent that will sustain us through the Artemis program and looking forward to Mars.”


“In robotics, seeing is believing. You can say whatever you want, but you really have to prove what you can do, and that’s been our focus. We want to show versus tell.”

—Jeff Cardenas, CEO Apptronik

Apptronik plans for the alpha version of Apollo to be ready in March, in time for a sneak peek for a small audience at SXSW. From there, the alpha Apollos will go through pilots as Apptronik collects feedback to develop a beta version that will begin larger deployments. The company expects these programs to lead to a gamma version and full production runs by the end of 2024.

Reference: https://ift.tt/CUQADg2

Most criminal cryptocurrency is funneled through just 5 exchanges

(credit: Eugene Mymrin/Getty Images)

For years, the cryptocurrency economy has been rife with black market sales, theft, ransomware, and money laundering—despite the strange fact that in that economy, practically every transaction is written into a blockchain’s permanent, unchangeable ledger. But new evidence suggests that years of advancements in blockchain tracing and crackdowns on that illicit underworld may be having an effect—if not reducing the overall volume of crime, then at least cutting down on the number of laundering outlets, leaving the crypto black market with fewer options to cash out its proceeds than it’s had in a decade.

In a portion of its annual crime report focused on money laundering that was published today, cryptocurrency-tracing firm Chainalysis points to a new consolidation in crypto criminal cash-out services over the past year. It counted just 915 of those services used in 2022, the fewest it’s seen since 2012 and the latest sign of a steady drop-off in the number of those services since 2018. Chainalysis says an even smaller number of exchanges now enable the money-laundering trade of cryptocurrency for actual dollars, euros, and yen: It found that just five cryptocurrency exchanges now handle nearly 68 percent of all black market cash-outs.

Friday, January 27, 2023

The Costly Impact of Non-Strategic Patents

The five largest auto manufacturers will face massive U.S. patent fees within the next five years. This report examines auto industry lapse trends and how a company’s decisions on keeping, selling or pruning patents can greatly impact its cost savings and revenue generation opportunities.

Patent lapse strategies can help companies in any industry out-maneuver the competition. Volume 2 of the U.S. Patent Lapse Series highlights how such decisions, especially during uncertain economic times, can impact the bottom line exponentially within a few years.

Download the report to find out:

- What is the patent lapse strategy leading Honda to save millions each year?

- How has Toyota’s patent lapse rate changed in the last 10 years?

- How do the patent lapse rate and associated costs vary between top OEMs?

- Where are the opportunities for portfolio optimization during uncertain economic times?


Reference: https://ift.tt/OwgnrIm

Pivot to ChatGPT? BuzzFeed preps for AI-written content while CNET fumbles

An AI-generated image of a robot typewriter-journalist hard at work. (credit: Ars Technica)

On Thursday, an internal memo obtained by The Wall Street Journal revealed that BuzzFeed is planning to use ChatGPT-style text synthesis technology from OpenAI to create individualized quizzes and potentially other content in the future. After the news hit, BuzzFeed's stock rose 200 percent. On Friday, BuzzFeed formally announced the move in a post on its site.

"In 2023, you'll see AI inspired content move from an R&D stage to part of our core business, enhancing the quiz experience, informing our brainstorming, and personalizing our content for our audience," BuzzFeed CEO Jonah Peretti wrote in a memo to employees, according to Reuters. A similar statement appeared on the BuzzFeed site.

The move comes as the buzz around OpenAI's ChatGPT language model reaches a fever pitch in the tech sector, inspiring more investment from Microsoft and reactive moves from Google. ChatGPT's underlying model, GPT-3, uses its statistical "knowledge" of millions of books and articles to generate coherent text in numerous styles, with results that read very close to human writing, depending on the topic. GPT-3 works by attempting to predict the most likely next words in a sequence (called a "prompt") provided by the user.
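Here is a deliberately tiny sketch of the "predict the next word" loop. It uses a bigram model: it counts which word follows which in a toy corpus, then greedily appends the most frequent follower to a prompt. This is not how GPT-3 is built. GPT-3 is a large transformer neural network that works on subword tokens, and its output can be sampled rather than always taking the single most likely continuation. The corpus and the functions `train_bigrams`, `next_word_probs`, and `generate` are hypothetical and serve only as an illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    words = text.split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def next_word_probs(follows, word):
    """Relative frequency of each word observed right after `word`."""
    counts = follows.get(word, Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def generate(follows, prompt, n_words):
    """Extend the prompt by greedily appending the most likely next word."""
    words = prompt.split()
    for _ in range(n_words):
        counts = follows.get(words[-1]) if words else None
        if not counts:
            break  # no continuation was ever observed for this word
        # Highest count wins; ties are broken alphabetically so output is deterministic.
        best = min(counts.items(), key=lambda kv: (-kv[1], kv[0]))[0]
        words.append(best)
    return " ".join(words)


corpus = "the robot writes a quiz . the robot writes a story . a quiz is fun ."
model = train_bigrams(corpus)
print(next_word_probs(model, "a"))      # {'quiz': 0.6666666666666666, 'story': 0.3333333333333333}
print(generate(model, "the robot", 3))  # the robot writes a quiz
```

A model like GPT-3 replaces the lookup table with learned probabilities conditioned on the whole preceding prompt, not just the last word. That is why its continuations stay coherent over far longer stretches than this toy can manage.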

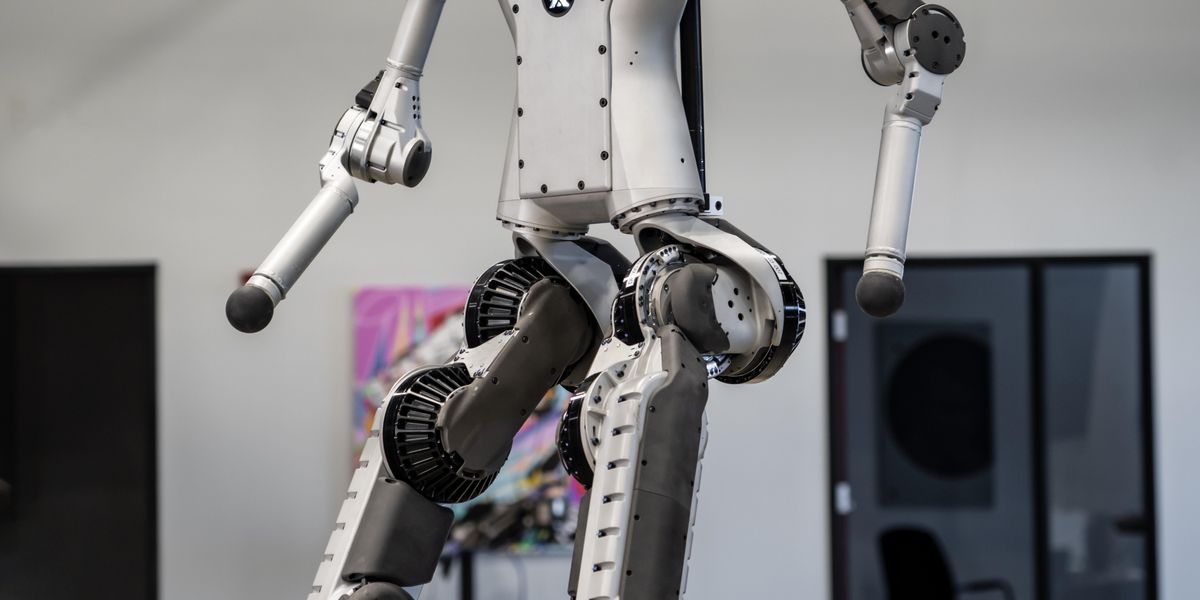

Apptronik Developing General-Purpose Humanoid Robot

There’s a handful of robotics companies currently working on what could be called general-purpose humanoid robots. That is, human-size, human-shaped robots with legs for mobility and arms for manipulation that can (or, may one day be able to) perform useful tasks in environments designed primarily for humans. The value proposition is obvious—drop-in replacement of humans for dull, dirty, or dangerous tasks. This sounds a little ominous, but the fact is that people don’t want to be doing the jobs that these robots are intended to do in the short term, and there just aren’t enough people to do these jobs as it is.

We tend to look at claims of commercializable general-purpose humanoid robots with some skepticism, because humanoids are really, really hard. They’re still really hard in a research context, which is usually where things have to get easier before anyone starts thinking about commercialization. There are certainly companies out there doing some amazing work toward practical legged systems, but at this point, “practical” is more about not falling over than it is about performance or cost effectiveness. The overall approach toward solving humanoids in this way tends to be to build something complex and expensive that does what you want, with the goal of cost reduction over time to get it to a point where it’s affordable enough to be a practical solution to a real problem.

Apptronik, based in Austin, Texas, is the latest company to attempt to figure out how to make a practical general-purpose robot. Its approach is to focus on things like cost and reliability from the start, developing (for example) its own actuators from scratch in a way that it can be sure will be cost effective and supply-chain friendly. Apptronik’s goal is to develop a platform that costs well under US $100,000, and it hopes to be able to deliver a million of them by 2030, with a prototype demonstration planned for early this year. Based on what we’ve seen of commercial humanoid robots recently, this seems like a huge challenge. And in part two of this story (to be posted tomorrow), we will be talking in depth to Apptronik’s cofounders to learn more about how they’re going to make general-purpose humanoids happen.

First, though, some company history. Apptronik spun out from the Human Centered Robotics Lab at the University of Texas at Austin in 2016, but the company traces its robotics history back a little farther, to 2015’s DARPA Robotics Challenge. Apptronik’s CTO and cofounder, Nick Paine, was on the NASA-JSC Valkyrie DRC team, and Apptronik’s first contract was to work on next-gen actuation and controls for NASA. Since then, the company has been working on robotics projects for a variety of large companies. In particular, Apptronik developed Astra, a humanoid upper body for dexterous bimanual manipulation that’s currently being tested for supply-chain use.

But Apptronik has by no means abandoned its NASA roots. In 2019, NASA had plans for what was essentially going to be a Valkyrie 2, a ground-up redesign of the Valkyrie platform. As with many of the coolest NASA projects, the potential new humanoid didn’t survive budget prioritization for very long, but even at the time it wasn’t clear to us why NASA wanted to build its own humanoid rather than asking someone else to build one for it, considering how much progress we’ve seen with humanoid robots over the last decade. Ultimately, NASA decided to move forward with more of a partnership model, which is where Apptronik fits in: a partnership between Apptronik and NASA will help accelerate commercialization of Apollo, Apptronik’s upcoming general-purpose humanoid.

“We recognize that Apptronik is building a production robot that’s designed for terrestrial use,” says NASA’s Shaun Azimi, who leads the Dexterous Robotics Team at NASA’s Johnson Space Center. “From NASA’s perspective, what we’re aiming to do with this partnership is to encourage the development of technology and talent that will sustain us through the Artemis program and looking forward to Mars.”

“Apollo is the robot that we always wanted to build,” says Jeff Cardenas, Apptronik cofounder and CEO. This new humanoid is the culmination of an astonishing amount of R&D, all the way down to the actuator level. “As a company, we’ve built more than 30 unique electric actuators,” Cardenas explains. “You name it, we’ve tried it. Liquid cooling, cable driven, series elastic, parallel elastic, quasi-direct drive…. And we’ve now honed our approach and are applying it to commercial humanoids.”

Apptronik’s emphasis on commercialization gives it a much different perspective on robotics development than you get when focusing on pure research the way that NASA does. To build a commercial product rather than a handful of totally cool but extremely complex bespoke humanoids, you need to consider things like minimizing part count, maximizing maintainability and robustness, and keeping the overall cost manageable. “Our starting point was figuring out what the minimum viable humanoid robot looked like,” explains Apptronik CTO Nick Paine. “Iteration is then necessary to add complexity as needed to solve particular problems.”

Apptronik’s first product is Astra. It’s only an upper body, and since it has no legs, it’s designed for manipulation rather than dynamic locomotion. Astra is force controlled, with series-elastic torque-controlled actuators, giving it the compliance necessary to work in dynamic environments (and particularly around humans). “Astra is pretty unique,” says Paine. “What we were trying to do with the system is to approach and achieve human-level capability in terms of manipulation workspace and payload. This robot taught us a lot about manipulation and actually doing useful work in the world, so that’s why it’s where we wanted to start.”

While Astra is currently out in the world doing pilot projects with clients (mostly in the logistics space), internally Apptronik has moved on to robots with legs. A video that Apptronik is sharing publicly for the first time shows a robot that the company is calling its Quick Development Humanoid, or QDH.

QDH builds on Astra by adding legs, along with a few extra degrees of freedom in the upper body to help with mobility and balance while simplifying the upper body for more basic manipulation capability. It uses only three different types of actuators, and everything (from structure to actuators to electronics to software) has been designed and built by Apptronik. “With QDH, we’re approaching minimum viable product from a usefulness standpoint,” says Paine, “and this is really what’s driving our development, both in software and hardware.”

“What people have done in humanoid robotics is to basically take the same sort of architectures that have been used in industrial robotics and apply those to building what is in essence a multi-degree-of-freedom industrial robot,” adds Cardenas. “We’re thinking of new ways to build these systems, leveraging mass manufacturing techniques to allow us to develop a high-degree-of-freedom robot that’s as affordable as many industrial robots that are out there today.”

Cardenas explains that a major driver for the cost of humanoid robots is the number of different parts, the precision machining of some specific parts, and the resulting time and effort it then takes to put these robots together. As an internal-controls test bed, QDH has helped Apptronik to explore how it can switch to less complex parts and lower the total part count. The plan for Apollo is to not use any high-precision or proprietary components at all, which mitigates many supply-chain issues and will help Apptronik reach its target price point for the robot.

Apollo will be a completely new robot, based around the lessons Apptronik has learned from QDH. It’ll be average human size: about 1.75 meters tall, weighing around 75 kilograms, with the ability to lift 25 kg. It’s designed to operate untethered, either indoors or outdoors. Broadly, Apptronik is positioning Apollo as a high-performance, easy-to-use, and versatile robot that can do a bunch of different things. It is imagining an “iPhone of robots,” where apps can be created for the robot to perform specific tasks. To extend the iPhone metaphor, Apptronik itself will make sure that Apollo can do all of the basics (such as locomotion and manipulation) so that it has fundamental value, but the company sees versatility as the way to get to large-scale deployments and the cost savings that come with them.

“I see the Apollo robot as a spiritual successor to Valkyrie. It’s not Valkyrie 2—Apollo is its own platform, but we’re working with Apptronik to adapt it as much as we can to space use cases.”

—Shaun Azimi, NASA Johnson Space Center

The challenge with this app approach is that there’s a critical mass that’s required to get it to work—after all, the primary motivation to develop an iPhone app is that there are a bajillion iPhones out there already. Apptronik is hoping that there are enough basic manipulation tasks in the supply-chain space that Apollo can leverage to scale to that critical-mass point. “This is a huge opportunity where the tasks that you need a robot to do are pretty straightforward,” Cardenas tells us. “Picking single items, moving things with two hands, and other manipulation tasks where industrial automation only gets you to a certain point. These companies have a huge labor challenge—they’re missing labor across every part of their business.”

While Apptronik’s goal is for Apollo to be autonomous, in the short to medium term its approach will be hybrid autonomy: a human will oversee first a few Apollos and eventually many, with the ability to step in and provide direct guidance through teleoperation when necessary. “That’s really where there’s a lot of business opportunity,” says Paine. Cardenas agrees. “I came into this thinking that we’d need to make Rosie the robot before we could have a successful commercial product. But I think the bar is much lower than that. There are fairly simple tasks that we can enter the market with, and then as we mature our controls and software, we can graduate to more complicated tasks.”

Apptronik is still keeping details about Apollo’s design under wraps, for now. We were shown renderings of the robot, but Apptronik is understandably hesitant to make those public, since the design of the robot may change. It does have a firm date for unveiling Apollo for the first time: SXSW, which takes place in Austin in March.

Reference: https://ift.tt/ObCaLce