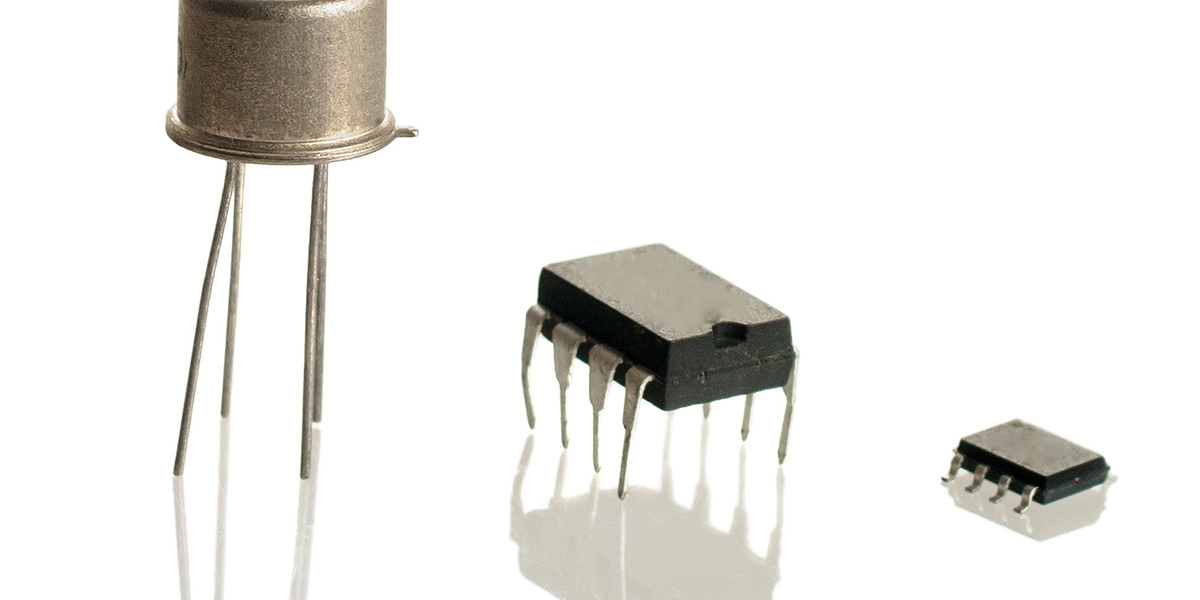

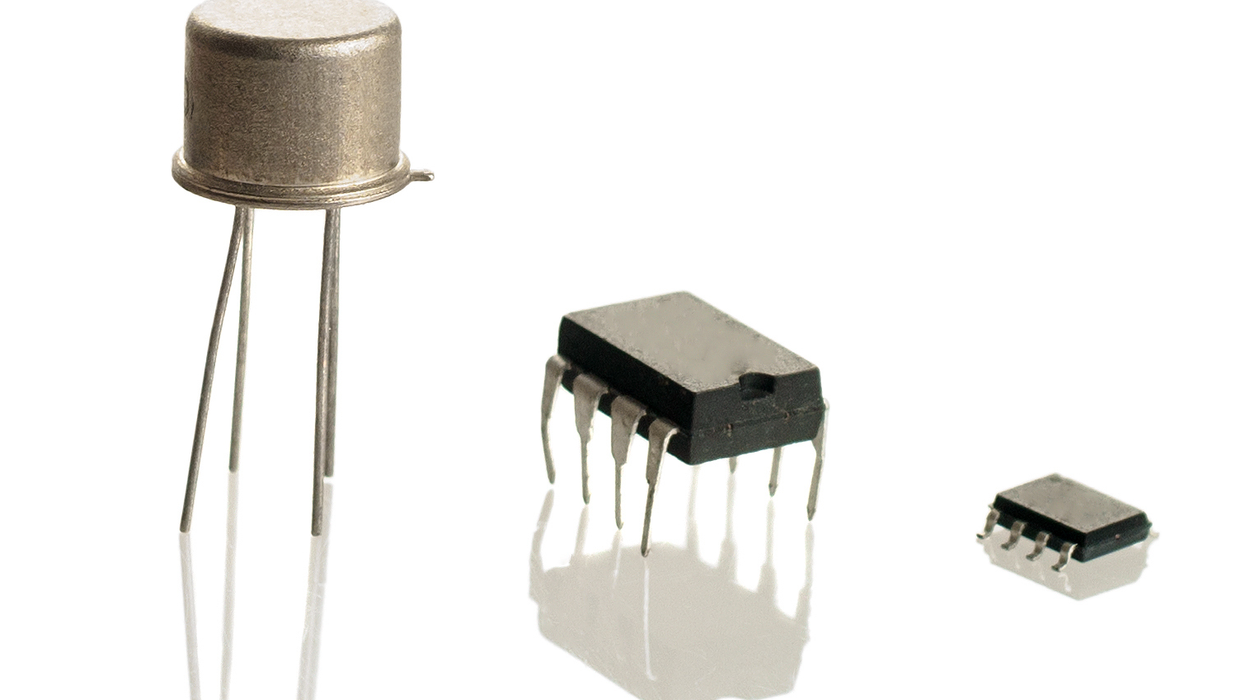

On 16 December 1947, after months of work and refinement, the Bell Labs physicists John Bardeen and Walter Brattain completed their critical experiment proving the effectiveness of the point-contact transistor. Six months later, Bell Labs gave a demonstration to officials from the U.S. military, who chose not to classify the technology because of its potentially broad applications. The following week, news of the transistor was released to the press. The New York Herald Tribune predicted that it would cause a revolution in the electronics industry. It did.

How John Bardeen got his music box

In 1949 an engineer at Bell Labs built three music boxes to show off the new transistors. Each Transistor Oscillator-Amplifier Box contained an oscillator-amplifier circuit and two point-contact transistors powered by a B-type battery. It electronically produced five distinct tones, although the sounds were not exactly melodious delights to the ear. The box’s design was a simple LC circuit, consisting of a capacitor and an inductor. The capacitance was selectable using the switch bank, which Bardeen “played” when he demonstrated the box.
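For readers who want to see why switching capacitors changes the pitch, here is a minimal sketch of the ideal LC resonance formula, f = 1/(2π√(LC)). The inductance and the five capacitor values are hypothetical placeholders chosen for illustration; they are not the components in Bardeen's box, and the formula ignores the loading that a real transistor oscillator adds.

```python
import math


def lc_resonant_frequency(inductance_h, capacitance_f):
    """Return the ideal LC resonant frequency in hertz: 1 / (2*pi*sqrt(L*C))."""
    if inductance_h <= 0 or capacitance_f <= 0:
        raise ValueError("inductance and capacitance must be positive")
    return 1.0 / (2.0 * math.pi * math.sqrt(inductance_h * capacitance_f))


# Hypothetical values for illustration only (not measured from the music box).
INDUCTANCE_H = 0.1  # one fixed inductor, in henries
SWITCH_BANK_F = [1.0e-6, 0.68e-6, 0.47e-6, 0.33e-6, 0.22e-6]  # farads, one per switch

# Each switch position selects a different capacitor and therefore a different tone.
tones_hz = [lc_resonant_frequency(INDUCTANCE_H, c) for c in SWITCH_BANK_F]

for position, (cap, freq) in enumerate(zip(SWITCH_BANK_F, tones_hz), start=1):
    print(f"switch {position}: C = {cap * 1e6:.2f} uF -> {freq:.0f} Hz")
```

Because frequency scales with the inverse square root of capacitance, a smaller capacitor gives a higher tone, and cutting the capacitance to one-quarter raises the pitch by an octave.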

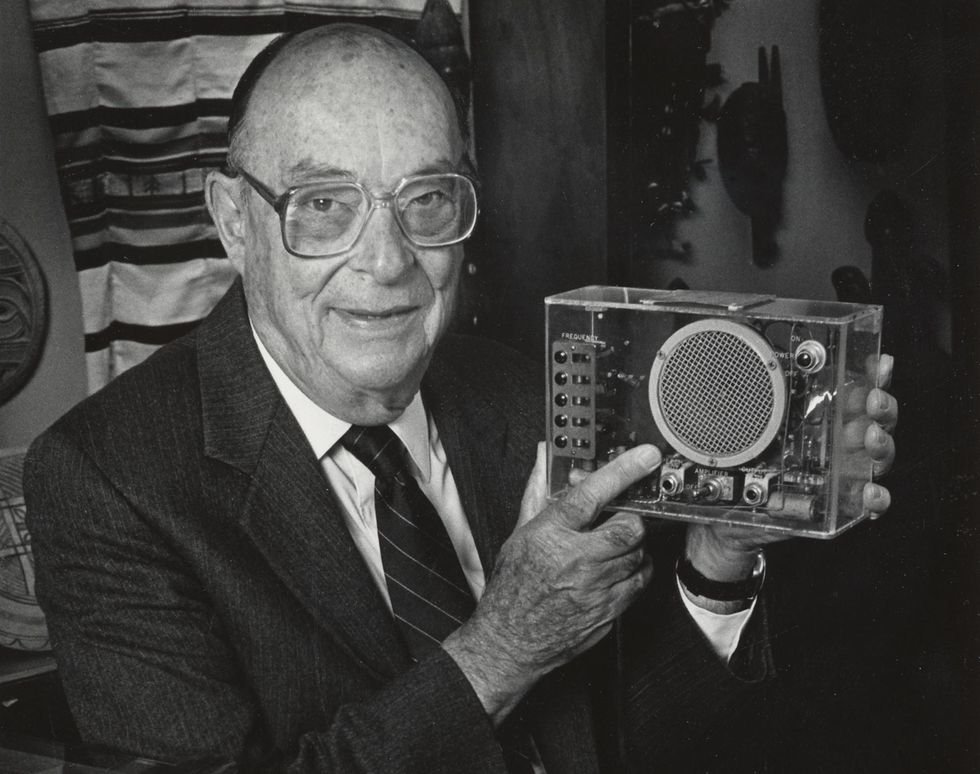

John Bardeen, co-inventor of the point-contact transistor, liked to play the tune “How Dry I Am” on his music box. The Spurlock Museum/University of Illinois at Urbana-Champaign


Bell Labs used one of the boxes to demonstrate the transistor’s portability. In early demonstrations, the instantaneous response of the circuits wowed witnesses, who were accustomed to having to wait for vacuum tubes to warm up. The other two music boxes went to Bardeen and Brattain. Only Bardeen’s survives.

Bardeen brought his box to the University of Illinois at Urbana-Champaign when he joined the faculty in 1951. Despite his groundbreaking work at Bell Labs, he was relieved to move. Shortly after the invention of the transistor, Bardeen’s work environment began to deteriorate. William Shockley, Bardeen’s notoriously difficult boss, prevented him from further involvement in transistors, and Bell Labs refused to allow Bardeen to set up another research group that focused on theory.

Frederick Seitz recruited Bardeen to Illinois with a joint appointment in electrical engineering and physics, and he spent the rest of his career there. Although Bardeen earned a reputation as an unexceptional instructor—an opinion his student Nick Holonyak Jr. would argue was unwarranted—he often got a laugh from students when he used the music box to play the Prohibition-era song “How Dry I Am.” He had a key to the sequence of notes taped to the top of the box.

In 1956, Bardeen, Brattain, and Shockley shared the Nobel Prize in Physics for their “research on semiconductors and their discovery of the transistor effect.” That same year, Bardeen collaborated with postdoc Leon Cooper and grad student J. Robert Schrieffer on the work that led to their April 1957 publication in Physical Review of “Microscopic Theory of Superconductivity.” The trio won a Nobel Prize in 1972 for the development of the BCS model of superconductivity (named after their initials). Bardeen was the first person to win two Nobels in the same field and remains the only double laureate in physics. He died in 1991.

Overcoming the “inherent vice” of Bardeen’s music box

Curators at the Smithsonian Institution expressed interest in the box, but Bardeen instead offered it on a long-term loan to the World Heritage Museum (predecessor to the Spurlock Museum) at the University of Illinois. That way he could still occasionally borrow it for use in a demonstration.

In general, though, museums frown upon allowing donors—or really anyone—to operate objects in their collections. It’s a sensible policy. After all, the purpose of preserving objects in a museum is so that future generations have access to them, and any additional use can cause deterioration or damage. (Rest assured, once the music box became part of the accessioned collections after Bardeen’s death, few people were allowed to handle it other than for approved research.) But musical instruments, and by extension music boxes, are functional objects: Much of their value comes from the sound they produce. So curators have to strike a balance between use and preservation.

As it happens, Bardeen’s music box worked up until the 1990s. That’s when “inherent vice” set in. In the lexicon of museum practice, inherent vice refers to the natural tendency for certain materials to decay despite preservation specialists’ best attempts to store the items at the ideal temperature, humidity, and light levels. Nitrate film, highly acidic paper, and natural rubber are classic examples. Some objects decay quickly because the mixture of materials in them creates unstable chemical reactions. Inherent vice is a headache for any curator trying to keep electronics in working order.

The museum asked John Dallesasse, a professor of electrical engineering at Illinois, to take a look at the box, hoping that it just needed a new battery. Dallesasse’s mentor at Illinois was Holonyak, whose mentor was Bardeen. So Dallesasse considered himself Bardeen’s academic grandson.

It soon became clear that one of the original point-contact transistors had failed, and several of the wax capacitors had degraded, Dallesasse told me recently. But returning the music box to operable status was not as simple as replacing those parts. Most professional conservators abide by a code of ethics that limits their intervention; they make only changes that can be easily reversed.

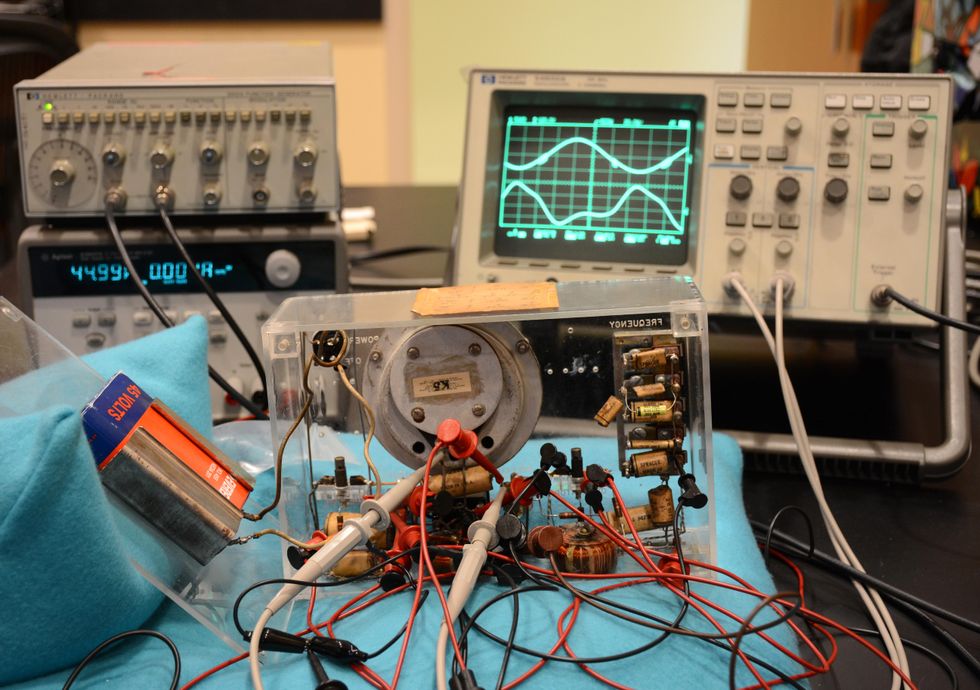

In 2019, University of Illinois professor John Dallesasse carefully restored Bardeen’s music box. The Spurlock Museum/University of Illinois at Urbana-Champaign


The museum was lucky in one respect: The point-contact transistor had failed as an open circuit instead of a short. This allowed Dallesasse to jumper in replacement parts, running wires from the music box to an external breadboard to bypass the failed components, instead of undoing any of the original soldering. He made sure to use period-appropriate parts, including a working point-contact transistor borrowed from Bardeen’s son Bill, even though that technology had been superseded by bipolar junction transistors.

Despite Dallesasse’s best efforts, the rewired box emitted a slight hum at about 30 kilohertz that wasn’t present in the original. He concluded that it was likely due to the extra wiring. He adjusted some of the capacitor values to tune the tones closer to the box’s original sounds. Dallesasse and others recalled that the first tone had been lower. Unfortunately, the frequency could not be reduced any further because it was at the edge of performance for the oscillator.

Video: “Restoring the Bardeen Music Box” (YouTube)

From a preservation perspective, one of the most important things Dallesasse did was to document the restoration process. Bardeen had received the box as a gift without any documentation from the original designer, so Dallesasse mapped out the circuit, which helped him with the troubleshooting. Also, documentary filmmaker Amy Young and multimedia producer Jack Brighton recorded a short video of Dallesasse explaining his approach and technique. Now future historians have resources about the second life of the music box, and we can all hear a transistor-generated rendition of “How Dry I Am.”

Part of a continuing series looking at historical artifacts that embrace the boundless potential of technology.

An abridged version of this article appears in the December 2022 print issue as “John Bardeen’s Marvelous Music Box.”

Reference: https://ift.tt/8gDZ9BE

