Optimization problems—such as scheduling the hundreds of National Football League (NFL) games while attempting to abide by the league’s many, many rules—can take huge computing resources. Some such problems are impractical even for today’s supercomputers. Inspired by quantum phenomena and other physics-based ways of computing, researchers have been trying to develop dedicated computers that can solve these thorny problems faster and more efficiently.

In the latest such effort, engineers at University of Minnesota have come up with way to encode these problems onto a chip built using standard CMOS circuits. Like other so-called Ising machines, it models an interconnected network of magnetic spins. But unlike others, it manages to connect all 48 spins to each other. In the past few years, such all-to-all connections have proven to be key to quickly tackling many problems.

“48 all-to-all connections is a non-trivial milestone.”

—Peter McMahon, Cornell University

Ising models turn optimization problems into a collection of interconnected magnetic moments, or spins, which can be either “up” or “down”. These spins are connected to each other, and neighboring spins want to have opposite directions. The optimization problem is mapped to the strength and polarity of these connections. The whole collection is then allowed to relax into a state where it gets as close as it can to all the spins getting what they want; the total energy of the system is minimized, and that’s the answer to the optimization problem.

Doing this in software or even in digital hardware designed to speed Ising algorithms has had some success, but it’s been limited. The new approach “uses nature to solve the problem,” says Chris Kim, the University of Minnesota professor of electrical engineer who led the research. “Nature wants to settle down to a lower energy state.”

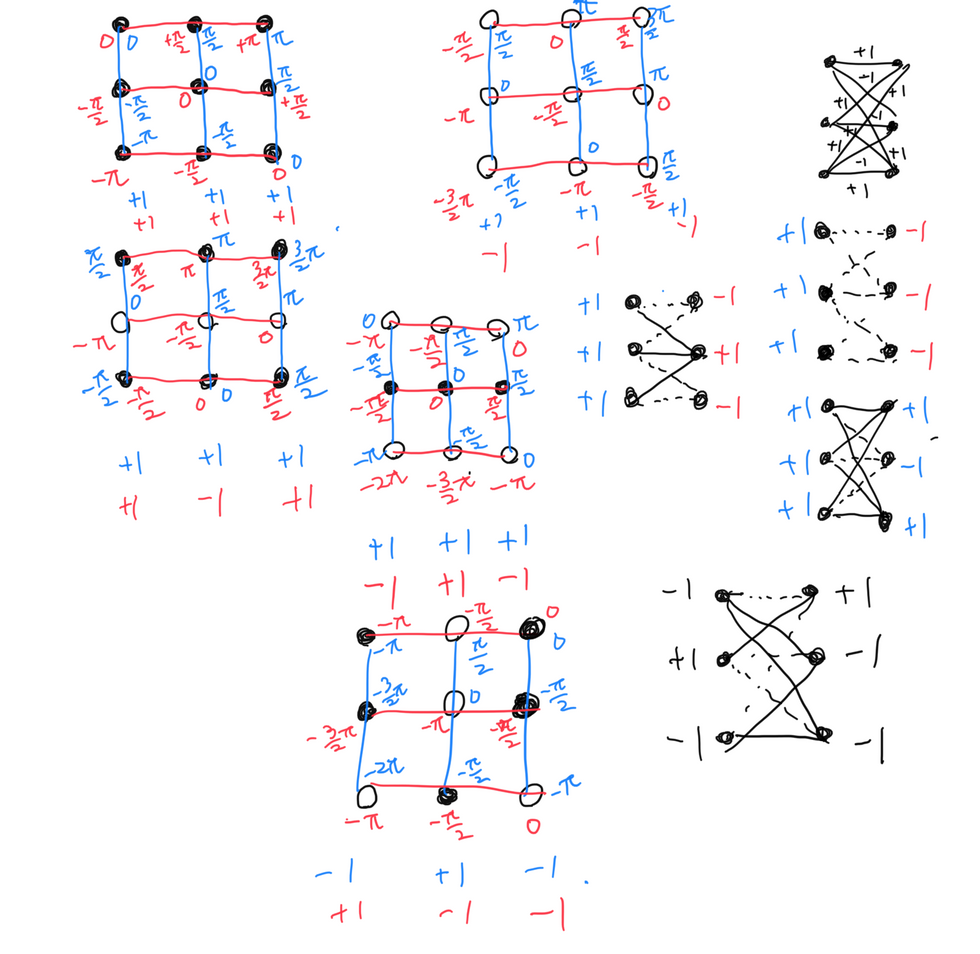

The heart of the chip is an array of interconnected inverter circuits. Chaining one inverter after another produces an oscillator circuit. The array is basically 48 oscillators in both the horizontal and vertical directions. Where each horizontal and vertical oscillator meet is a weighted connection representing the strength of the link between two spins. In that way every spin is connected to every other.

The oscillations interact in a way that mimics an Ising model moving to a lower energy state. After a few microseconds, a circuit reads the phase of the oscillations at different points, delivering the answer.

The first chip was made in a 65-nm process, which uses planar transistors. Kim hopes to make a version in a more advanced technology that uses FinFETs to prove that it works even when scaled down.

His team also plans to develop a block of circuits that would rapidly check the quality of the solution that the Ising circuits come up with. Optimization accelerators can get stuck at a solution that works but isn’t the best one possible. To get it unstuck, the quality checker would perturb the solution, run the model again, compare the answers, and possibly loop through the process again. These little nudges can eventually deliver the optimal answer.

The research, published in Nature Electronics last month, was the first to emerge from $6.8 million grant from the DARPA Quantum-Inspired Classical Computing (QuICC) program. The goal is to point a path to a 500-fold performance improvement in the amount of energy needed to solve big optimization problems relevant to the U.S. Department of Defense. Kim’s test chip consumed 105 milliwatts for the most densely connected problem it solved, but problems with sparse connections took as little as 16 milliwatts. The Minnesota group collaborated with researchers at Intel on the testing.

The size of optimization problems

The biggest stumbling block toward the Ising chip’s having a big impact, according to Kim, is that it’s unlikely that this technology can deliver the much larger all-to-all connections needed for industrially relevant problems. Researchers will have to find a way to leverage hundreds or even thousands of these arrays to solve large problems, in the way that many GPUs are used to train large AIs.

Nevertheless, even getting to 48 is an accomplishment.

“48 all-to-all connections is a non-trivial milestone,” says Peter McMahon, an assistant professor of applied engineering and physics at Cornell University who is part of a competing team in DARPA’s quest for Ising technology. “The headline result sounds really impressive, and there’s really some novelty in the way they achieved this.”

McMahon is a pioneer in optical Ising machines, which rely on pulses of light, a technology Microsoft Research has been developing. But in the DARPA program, he’s part of a team working on an Ising chip based on superconducting circuits.

McMahon agrees with Kim that a big problem facing these technologies is that few interesting problems fit into 48-spins that can’t already be solved efficiently on a CPU.

The new chip started as a hand-drawn sketch.Chris Kim

The new chip started as a hand-drawn sketch.Chris Kim

But researchers at Princeton University have found one problem that does. 5G and future 6G wireless relies on the use of so-called massive multiple input multiple output (MIMO) antenna systems. Such systems send and receive signals on multiple antennas at once to increase the data rate. However, interference is inevitable with so many antennas active at once. There are algorithms to untangle the signals, but they are currently too cumbersome for base station computers to complete in the few milliseconds they have.

The solution today is to have way, way more antennas available at the base station than there are cellular users in the area, which is inefficient to say the least. The Princeton team, which includes McMahon and is led by Kyle Jamieson, came up with an Ising model solution that doubles throughput compared to the industry standard and could fit in the chip-scale systems DARPA is developing. Kim’s group has begun a collaborating with the Jamieson’s team.

Reference: https://ift.tt/8lUDeic

No comments:

Post a Comment