There's a general consensus that we won't be able to consistently perform sophisticated quantum calculations without the development of error-corrected quantum computing, which is unlikely to arrive until the end of the decade. It's still an open question, however, whether we could perform limited but useful calculations at an earlier point. IBM is one of the companies that's betting the answer is yes, and on Wednesday, it announced a series of developments aimed at making that possible.

On their own, none of the changes being announced are revolutionary. But collectively, changes across the hardware and software stacks have produced much more efficient and less error-prone operations. The net result is a system that supports the most complicated calculations yet on IBM's hardware, leaving the company optimistic that its users will find some calculations where quantum hardware provides an advantage.

Better hardware and software

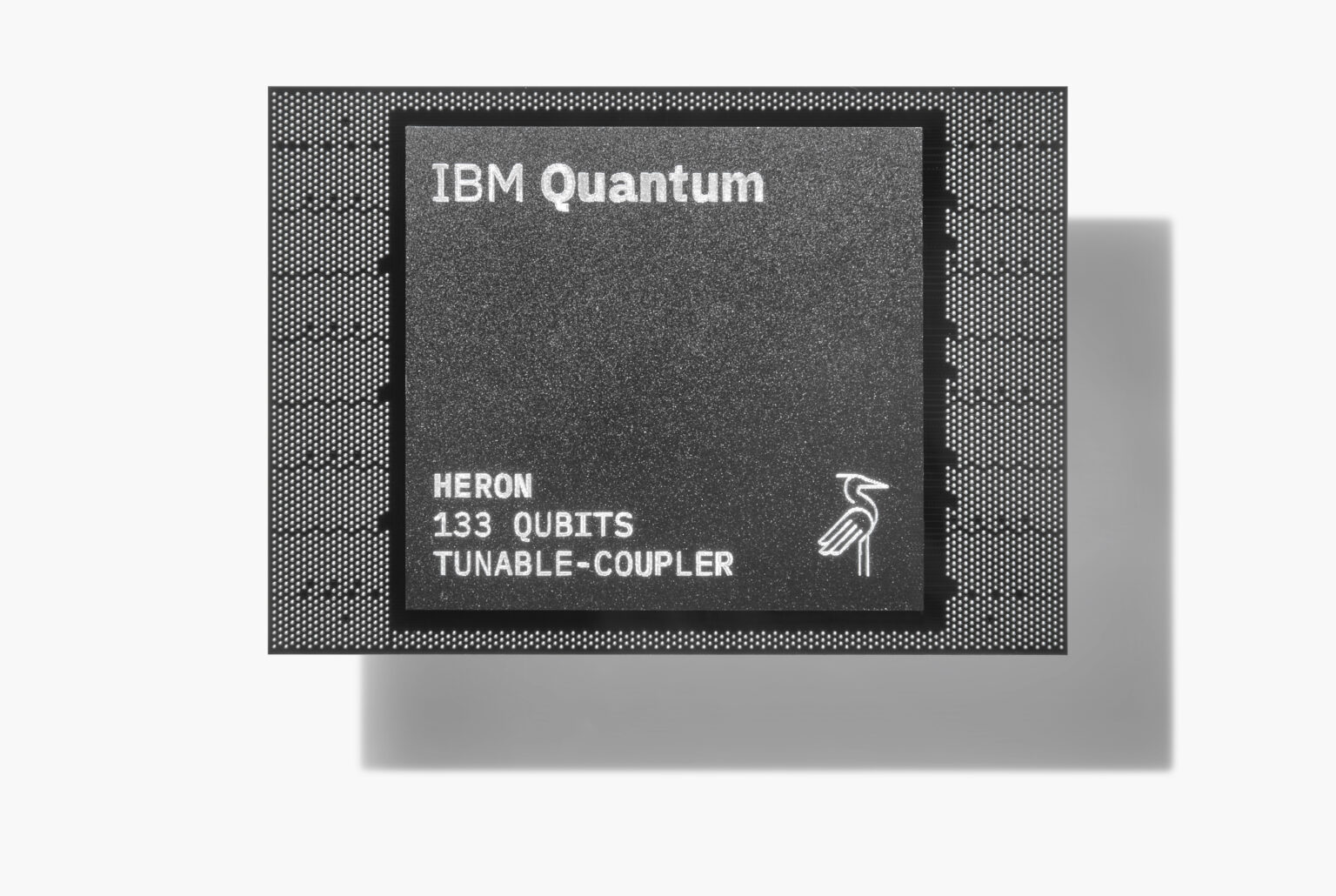

IBM's early efforts in quantum computing saw it ramp up the qubit count rapidly, and it was one of the first companies to reach the 1,000-qubit mark. However, each of those qubits had an error rate high enough that any algorithm that tried to use all of them in a single calculation would inevitably encounter an error. Since then, the company's focus has been on improving the performance of smaller processors. Wednesday's announcement was based on the introduction of the second version of its Heron processor, which has 133 qubits. That's still beyond the capability of simulations on classical computers, provided the processor can operate with sufficiently low error rates.
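To make the two scaling arguments in that paragraph concrete, here is a rough back-of-the-envelope sketch. It is not IBM's analysis: the function names are hypothetical, the error rate used in the example is an illustrative placeholder rather than a published figure, errors are assumed to be independent, and "simulation" is taken to mean storing a full state vector with 16-byte complex amplitudes, which is only one of several classical approaches.

```python
def success_probability(error_rate: float, operations: int) -> float:
    """Chance that every one of `operations` independent steps succeeds.

    Assumes each step fails independently with probability `error_rate`
    (a simplification; real hardware errors can be correlated).
    """
    return (1.0 - error_rate) ** operations


def statevector_bytes(qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to store a full state vector for `qubits` qubits.

    A state vector holds 2**qubits complex amplitudes; 16 bytes per
    amplitude corresponds to two 64-bit floats (an assumed precision).
    """
    return (2 ** qubits) * bytes_per_amplitude


if __name__ == "__main__":
    # Hypothetical error rate of 0.1% per operation, chosen for illustration.
    for ops in (100, 1_000, 10_000):
        print(ops, "operations:", success_probability(0.001, ops))

    # Memory for a brute-force simulation at 30 qubits versus 133 qubits.
    for n in (30, 133):
        print(n, "qubits:", statevector_bytes(n), "bytes")
```

Under these assumptions, the chance of an error-free run shrinks exponentially as operations are added, while the memory for a brute-force simulation doubles with every additional qubit. That is why a 133-qubit processor is only interesting if its error rates are low enough to let a calculation finish.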

Reference: https://ift.tt/lWYr6hm
