Editor’s note: This article is adapted from the author’s book War Virtually: The Quest to Automate Conflict, Militarize Data, and Predict the Future (University of California Press, published in paperback April 2024).

The blistering late-afternoon wind ripped across Camp Taji, a sprawling U.S. military base just north of Baghdad. In a desolate corner of the outpost, where the feared Iraqi Republican Guard had once manufactured mustard gas, nerve agents, and other chemical weapons, a group of American soldiers and Marines were solemnly gathered around an open grave, dripping sweat in the 114-degree heat. They were paying their final respects to Boomer, a fallen comrade who had been an indispensable part of their team for years. Just days earlier, he had been blown apart by a roadside bomb.

As a bugle mournfully sounded the last few notes of “Taps,” a soldier raised his rifle and fired a long series of volleys—a 21-gun salute. The troops, which included members of an elite army unit specializing in explosive ordnance disposal (EOD), had decorated Boomer posthumously with a Bronze Star and a Purple Heart. With the help of human operators, the diminutive remote-controlled robot had protected American military personnel from harm by finding and disarming hidden explosives.

Boomer was a Multi-function Agile Remote-Controlled robot, or MARCbot, manufactured by a Silicon Valley company called Exponent. Weighing in at just over 30 pounds, MARCbots look like a cross between a Hollywood camera dolly and an oversized Tonka truck. Despite their toylike appearance, the devices often leave a lasting impression on those who work with them. In an online discussion about EOD support robots, one soldier wrote, “Those little bastards can develop a personality, and they save so many lives.” An infantryman responded by admitting, “We liked those EOD robots. I can’t blame you for giving your guy a proper burial, he helped keep a lot of people safe and did a job that most people wouldn’t want to do.”

A Navy unit used a remote-controlled vehicle with a mounted video camera in 2009 to investigate suspicious areas in southern Afghanistan. Mass Communication Specialist 2nd Class Patrick W. Mullen III/U.S. Navy

But while some EOD teams established warm emotional bonds with their robots, others loathed the machines, especially when they malfunctioned. Take, for example, this case described by a Marine who served in Iraq:

My team once had a robot that was obnoxious. It would frequently accelerate for no reason, steer whichever way it wanted, stop, etc. This often resulted in this stupid thing driving itself into a ditch right next to a suspected IED. So of course then we had to call EOD [personnel] out and waste their time and ours all because of this stupid little robot. Every time it beached itself next to a bomb, which was at least two or three times a week, we had to do this. Then one day we saw yet another IED. We drove him straight over the pressure plate, and blew the stupid little sh*thead of a robot to pieces. All in all a good day.

Some battle-hardened warriors treat remote-controlled devices like brave, loyal, intelligent pets, while others describe them as clumsy, stubborn clods. Either way, observers have interpreted these accounts as unsettling glimpses of a future in which men and women ascribe personalities to artificially intelligent war machines.

From this perspective, what makes robot funerals unnerving is the idea of an emotional slippery slope. If soldiers are bonding with clunky pieces of remote-controlled hardware, what are the prospects of humans forming emotional attachments with machines once they’re more autonomous in nature, nuanced in behavior, and anthropoid in form? And a more troubling question arises: On the battlefield, will Homo sapiens be capable of dehumanizing members of its own species (as it has for centuries), even as it simultaneously humanizes the robots sent to kill them?

As I’ll explain, the Pentagon has a vision of a warfighting force in which humans and robots work together in tight collaborative units. But to achieve that vision, it has called in reinforcements: “trust engineers” who are diligently helping the Department of Defense (DOD) find ways of rewiring human attitudes toward machines. You could say that they want more soldiers to play “Taps” for their robot helpers and fewer to delight in blowing them up.

The Pentagon’s Push for Robotics

For the better part of a decade, several influential Pentagon officials have relentlessly promoted robotic technologies, promising a future in which “humans will form integrated teams with nearly fully autonomous unmanned systems, capable of carrying out operations in contested environments.”

Soldiers test a vertical take-off-and-landing drone at Fort Campbell, Ky., in 2020. U.S. Army

As The New York Times reported in 2016: “Almost unnoticed outside defense circles, the Pentagon has put artificial intelligence at the center of its strategy to maintain the United States’ position as the world’s dominant military power.” The U.S. government is spending staggering sums to advance these technologies: For fiscal year 2019, the U.S. Congress was projected to provide the DOD with US $9.6 billion to fund uncrewed and robotic systems—significantly more than the annual budget of the entire National Science Foundation.

Arguments supporting the expansion of autonomous systems are consistent and predictable: The machines will keep our troops safe because they can perform dull, dirty, dangerous tasks; they will result in fewer civilian casualties, since robots will be able to identify enemies with greater precision than humans can; they will be cost-effective and efficient, allowing more to get done with less; and the devices will allow us to stay ahead of China, which, according to some experts, will soon surpass America’s technological capabilities.

Former U.S. deputy defense secretary Robert O. Work has argued for more automation within the military. Center for a New American Security

Among the most outspoken advocates of a roboticized military is Robert O. Work, who was nominated by President Barack Obama in 2014 to serve as deputy defense secretary. Speaking at a 2015 defense forum, Work, a barrel-chested retired Marine Corps colonel with the slight hint of a drawl, described a future in which “human-machine collaboration” would win wars using big-data analytics. He used the example of Lockheed Martin’s newest stealth fighter to illustrate his point: “The F-35 is not a fighter plane, it is a flying sensor computer that sucks in an enormous amount of data, correlates it, analyzes it, and displays it to the pilot on his helmet.”

The beginning of Work’s speech was measured and technical, but by the end it was full of swagger. To drive home his point, he described a ground combat scenario. “I’m telling you right now,” Work told the rapt audience, “10 years from now if the first person through a breach isn’t a friggin’ robot, shame on us.”

“The debate within the military is no longer about whether to build autonomous weapons but how much independence to give them,” said a 2016 New York Times article. The rhetoric surrounding robotic and autonomous weapon systems is remarkably similar to that of Silicon Valley, where charismatic CEOs, technology gurus, and sycophantic pundits have relentlessly hyped artificial intelligence.

For example, in 2016, the Defense Science Board—a group of appointed civilian scientists tasked with giving advice to the DOD on technical matters—released a report titled “Summer Study on Autonomy.” Significantly, the report wasn’t written to weigh the pros and cons of autonomous battlefield technologies; instead, the group assumed that such systems will inevitably be deployed. Among other things, the report included “focused recommendations to improve the future adoption and use of autonomous systems [and] example projects intended to demonstrate the range of benefits of autonomy for the warfighter.”

What Exactly Is a Robot Soldier?

The author’s book, War Virtually, is a critical look at how the U.S. military is weaponizing technology and data. University of California Press

Early in the 20th century, military and intelligence agencies began developing robotic systems, which were mostly devices remotely operated by human controllers. But microchips, portable computers, the Internet, smartphones, and other developments have supercharged the pace of innovation. So, too, has the ready availability of colossal amounts of data from electronic sources and sensors of all kinds. The Financial Times reports: “The advance of artificial intelligence brings with it the prospect of robot-soldiers battling alongside humans—and one day eclipsing them altogether.” These transformations aren’t inevitable, but they may become a self-fulfilling prophecy.

All of this raises the question: What exactly is a “robot-soldier”? Is it a remote-controlled, armor-clad box on wheels, entirely reliant on explicit, continuous human commands for direction? Is it a device that can be activated and left to operate semiautonomously, with a limited degree of human oversight or intervention? Is it a droid capable of selecting targets (using facial-recognition software or other forms of artificial intelligence) and initiating attacks without human involvement? There are hundreds, if not thousands, of possible technological configurations lying between remote control and full autonomy—and these differences affect ideas about who bears responsibility for a robot’s actions.

The U.S. military’s experimental and actual robotic and autonomous systems include a vast array of artifacts that rely on either remote control or artificial intelligence: aerial drones; ground vehicles of all kinds; sleek warships and submarines; automated missiles; and robots of various shapes and sizes—bipedal androids, quadrupedal gadgets that trot like dogs or mules, insectile swarming machines, and streamlined aquatic devices resembling fish, mollusks, or crustaceans, to name a few.

Members of a U.S. Air Force squadron test out an agile and rugged quadruped robot from Ghost Robotics in 2023. Airman First Class Isaiah Pedrazzini/U.S. Air Force

The transitions projected by military planners suggest that servicemen and servicewomen are in the midst of a three-phase evolutionary process, which begins with remote-controlled robots, in which humans are “in the loop,” then proceeds to semiautonomous and supervised autonomous systems, in which humans are “on the loop,” and then concludes with the adoption of fully autonomous systems, in which humans are “out of the loop.” At the moment, much of the debate in military circles has to do with the degree to which automated systems should allow—or require—human intervention.

In recent years, much of the hype has centered around that second stage: semiautonomous and supervised autonomous systems that DOD officials refer to as “human-machine teaming.” This idea suddenly appeared in Pentagon publications and official statements after the summer of 2015. The timing probably wasn’t accidental; it came at a time when global news outlets were focusing attention on a public backlash against lethal autonomous weapon systems. The Campaign to Stop Killer Robots was launched in April 2013 as a coalition of nonprofit and civil society organizations, including the International Committee for Robot Arms Control, Amnesty International, and Human Rights Watch. In July 2015, the campaign released an open letter warning of a robotic arms race and calling for a ban on the technologies. Cosigners included world-renowned physicist Stephen Hawking, Tesla founder Elon Musk, Apple cofounder Steve Wozniak, and thousands more.

In November 2015, Work gave a high-profile speech on the importance of human-machine teaming, perhaps hoping to defuse the growing criticism of “killer robots.” According to one account, Work’s vision was one in which “computers will fly the missiles, aim the lasers, jam the signals, read the sensors, and pull all the data together over a network, putting it into an intuitive interface humans can read, understand, and use to command the mission”—but humans would still be in the mix, “using the machine to make the human make better decisions.” From this point forward, the military branches accelerated their drive toward human-machine teaming.

The Doubt in the Machine

But there was a problem. Military experts loved the idea, touting it as a win-win: Paul Scharre, in his book Army of None: Autonomous Weapons and the Future of War, claimed that “we don’t need to give up the benefits of human judgment to get the advantages of automation, we can have our cake and eat it too.” However, personnel on the ground expressed—and continue to express—deep misgivings about the side effects of the Pentagon’s newest war machines.

The difficulty, it seems, is humans’ lack of trust. The engineering challenges of creating robotic weapon systems are relatively straightforward, but the social and psychological challenges of convincing humans to place their faith in the machines are bewilderingly complex. In high-stakes, high-pressure situations like military combat, human confidence in autonomous systems can quickly vanish. The Pentagon’s Defense Systems Information Analysis Center Journal noted that although the prospects for combined human-machine teams are promising, humans will need assurances:

[T]he battlefield is fluid, dynamic, and dangerous. As a result, warfighter demands become exceedingly complex, especially since the potential costs of failure are unacceptable. The prospect of lethal autonomy adds even greater complexity to the problem [in that] warfighters will have no prior experience with similar systems. Developers will be forced to build trust almost from scratch.

In a 2015 article, U.S. Navy Commander Greg Smith provided a candid assessment of aviators’ distrust in aerial drones. After describing how drones are often intentionally separated from crewed aircraft, Smith noted that operators sometimes lose communication with their drones and may inadvertently bring them perilously close to crewed airplanes, which “raises the hair on the back of an aviator’s neck.” He concluded:

[I]n 2010, one task force commander grounded his manned aircraft at a remote operating location until he was assured that the local control tower and UAV [unmanned aerial vehicle] operators located halfway around the world would improve procedural compliance. Anecdotes like these abound…. After nearly a decade of sharing the skies with UAVs, most naval aviators no longer believe that UAVs are trying to kill them, but one should not confuse this sentiment with trusting the platform, technology, or [drone] operators.

U.S. Marines [top] prepare to launch and operate an MQ-9A Reaper drone in 2021. The Reaper [bottom] is designed for both high-altitude surveillance and destroying targets. Top: Lance Cpl. Gabrielle Sanders/U.S. Marine Corps; Bottom: 1st Lt. John Coppola/U.S. Marine Corps

Yet Pentagon leaders place an almost superstitious trust in those systems, and seem firmly convinced that a lack of human confidence in autonomous systems can be overcome with engineered solutions. In a commentary, Courtney Soboleski, a data scientist employed by the military contractor Booz Allen Hamilton, makes the case for mobilizing social science as a tool for overcoming soldiers’ lack of trust in robotic systems.

The problem with adding a machine into military teaming arrangements is not doctrinal or numeric…it is psychological. It is rethinking the instinctual threshold required for trust to exist between the soldier and machine.… The real hurdle lies in surpassing the individual psychological and sociological barriers to assumption of risk presented by algorithmic warfare. To do so requires a rewiring of military culture across several mental and emotional domains.… AI [artificial intelligence] trainers should partner with traditional military subject matter experts to develop the psychological feelings of safety not inherently tangible in new technology. Through this exchange, soldiers will develop the same instinctual trust natural to the human-human war-fighting paradigm with machines.

The Military’s Trust Engineers Go to Work

Soon, the wary warfighter will likely be subjected to new forms of training that focus on building trust between robots and humans. Already, robots are being programmed to communicate in more human ways with their users for the explicit purpose of increasing trust. And projects are currently underway to help military robots report their deficiencies to humans in given situations, and to alter their functionality according to the user’s emotional state as perceived by the machine.

At the DEVCOM Army Research Laboratory, military psychologists have spent more than a decade on human experiments related to trust in machines. Among the most prolific is Jessie Chen, who joined the lab in 2003. Chen lives and breathes robotics—specifically “agent teaming” research, a field that examines how robots can be integrated into groups with humans. Her experiments test how humans’ lack of trust in robotic and autonomous systems can be overcome—or at least minimized.

For example, in one set of tests, Chen and her colleagues deployed a small ground robot called an Autonomous Squad Member that interacted and communicated with infantrymen. The researchers varied “situation-awareness-based agent transparency”—that is, the robot’s self-reported information about its plans, motivations, and predicted outcomes—and found that human trust in the robot increased when the autonomous “agent” was more transparent or honest about its intentions.

The Army isn’t the only branch of the armed services researching human trust in robots. The U.S. Air Force Research Laboratory recently had an entire group dedicated to the subject: the Human Trust and Interaction Branch, part of the lab’s 711th Human Performance Wing, located at Wright-Patterson Air Force Base, in Ohio.

In 2015, the Air Force began soliciting proposals for “research on how to harness the socio-emotional elements of interpersonal team/trust dynamics and inject them into human-robot teams.” Mark Draper, a principal engineering research psychologist at the Air Force lab, is optimistic about the prospects of human-machine teaming: “As autonomy becomes more trusted, as it becomes more capable, then the Airmen can start off-loading more decision-making capability on the autonomy, and autonomy can exercise increasingly important levels of decision-making.”

Air Force researchers are attempting to dissect the determinants of human trust. In one project, they examined the relationship between a person’s personality profile (measured using the so-called Big Five personality traits: openness, conscientiousness, extraversion, agreeableness, neuroticism) and his or her tendency to trust. In another experiment, entitled “Trusting Robocop: Gender-Based Effects on Trust of an Autonomous Robot,” Air Force scientists compared male and female research subjects’ levels of trust by showing them a video depicting a guard robot. The robot was armed with a Taser, interacted with people, and eventually used the Taser on one. Researchers designed the scenario to create uncertainty about whether the robot or the humans were to blame. By surveying research subjects, the scientists found that women reported higher levels of trust in “Robocop” than men.

The issue of trust in autonomous systems has even led the Air Force’s chief scientist to suggest ideas for increasing human confidence in the machines, ranging from better android manners to robots that look more like people, under the principle that

good HFE [human factors engineering] design should help support ease of interaction between humans and AS [autonomous systems]. For example, better “etiquette” often equates to better performance, causing a more seamless interaction. This occurs, for example, when an AS avoids interrupting its human teammate during a high workload situation or cues the human that it is about to interrupt—activities that, surprisingly, can improve performance independent of the actual reliability of the system. To an extent, anthropomorphism can also improve human-AS interaction, since people often trust agents endowed with more humanlike features…[but] anthropomorphism can also induce overtrust.

It’s impossible to know the degree to which the trust engineers will succeed in achieving their objectives. For decades, military trainers have trained and prepared newly enlisted men and women to kill other people. If specialists have developed simple psychological techniques to overcome the soldier’s deeply ingrained aversion to destroying human life, is it possible that someday, the warfighter might also be persuaded to unquestioningly place his or her trust in robots?

What began as a class of 28 students held in a restaurant in Kalispell, Mont., has grown into a statewide program run by Code Girls United. The nonprofit has taught coding and computer science to more than 1,000 elementary, middle, and high school students. Code Girls United

What began as a class of 28 students held in a restaurant in Kalispell, Mont., has grown into a statewide program run by Code Girls United. The nonprofit has taught coding and computer science to more than 1,000 elementary, middle, and high school students. Code Girls United

An autonomous vehicle drives across kilometers of desert with no prior map, no GPS, and no road.Field AI

An autonomous vehicle drives across kilometers of desert with no prior map, no GPS, and no road.Field AI Field AI

Field AI Same brain, lots of different robots: the Field AI team’s foundation models can be used on robots big, small, expensive, and somewhat less expensive.Field AI

Same brain, lots of different robots: the Field AI team’s foundation models can be used on robots big, small, expensive, and somewhat less expensive.Field AI

Chinese-language keyboards are often “pinyin keyboards,” which allow for thousands of characters to be typed using a QWERTY-style approach.Zamoeux/Wikimedia

Chinese-language keyboards are often “pinyin keyboards,” which allow for thousands of characters to be typed using a QWERTY-style approach.Zamoeux/Wikimedia

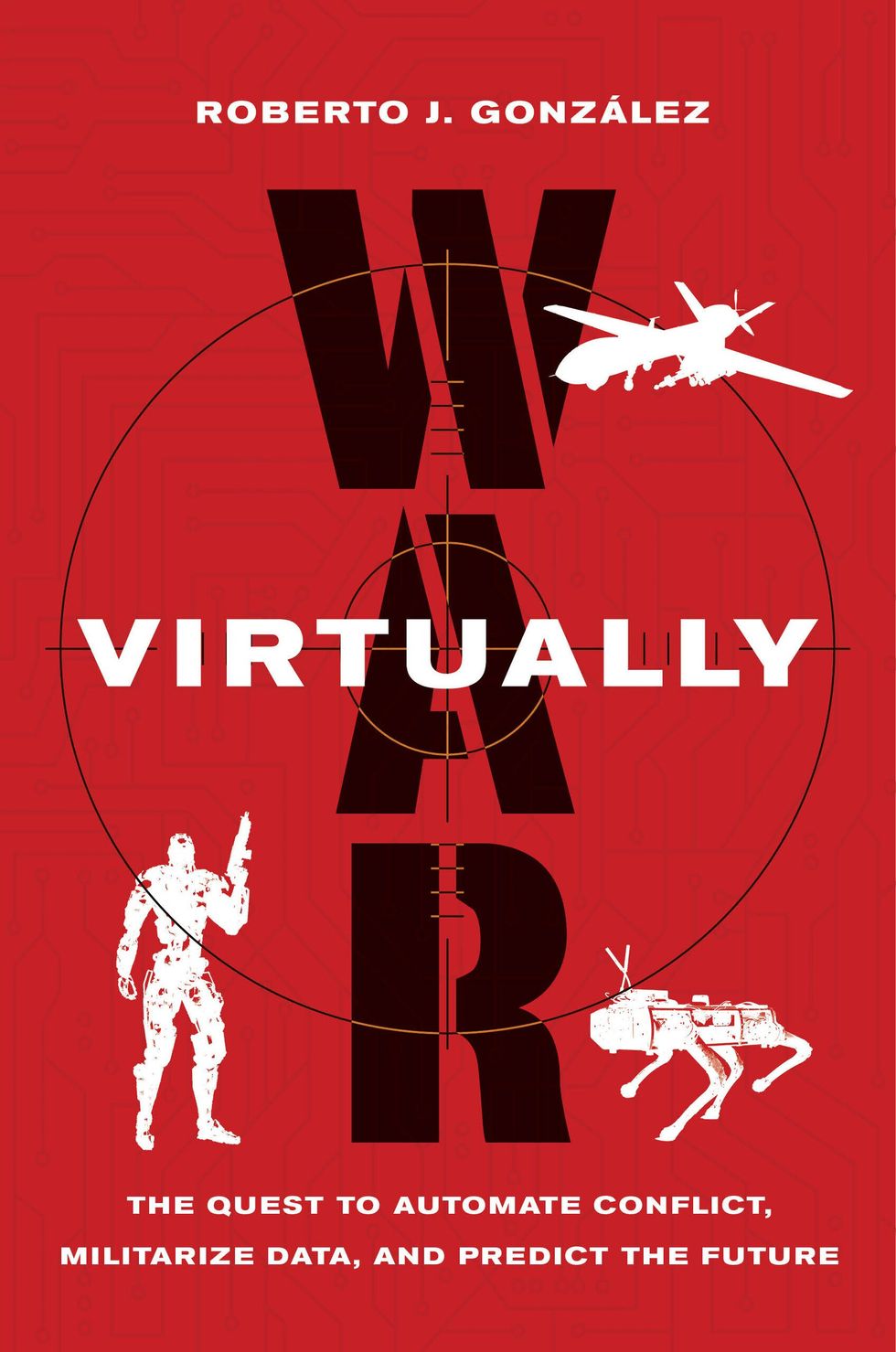

Electronically-assisted-astronomy photographs captured with my rig: the moon [top], the sun [middle], and the Orion Nebula [bottom] David Schneider

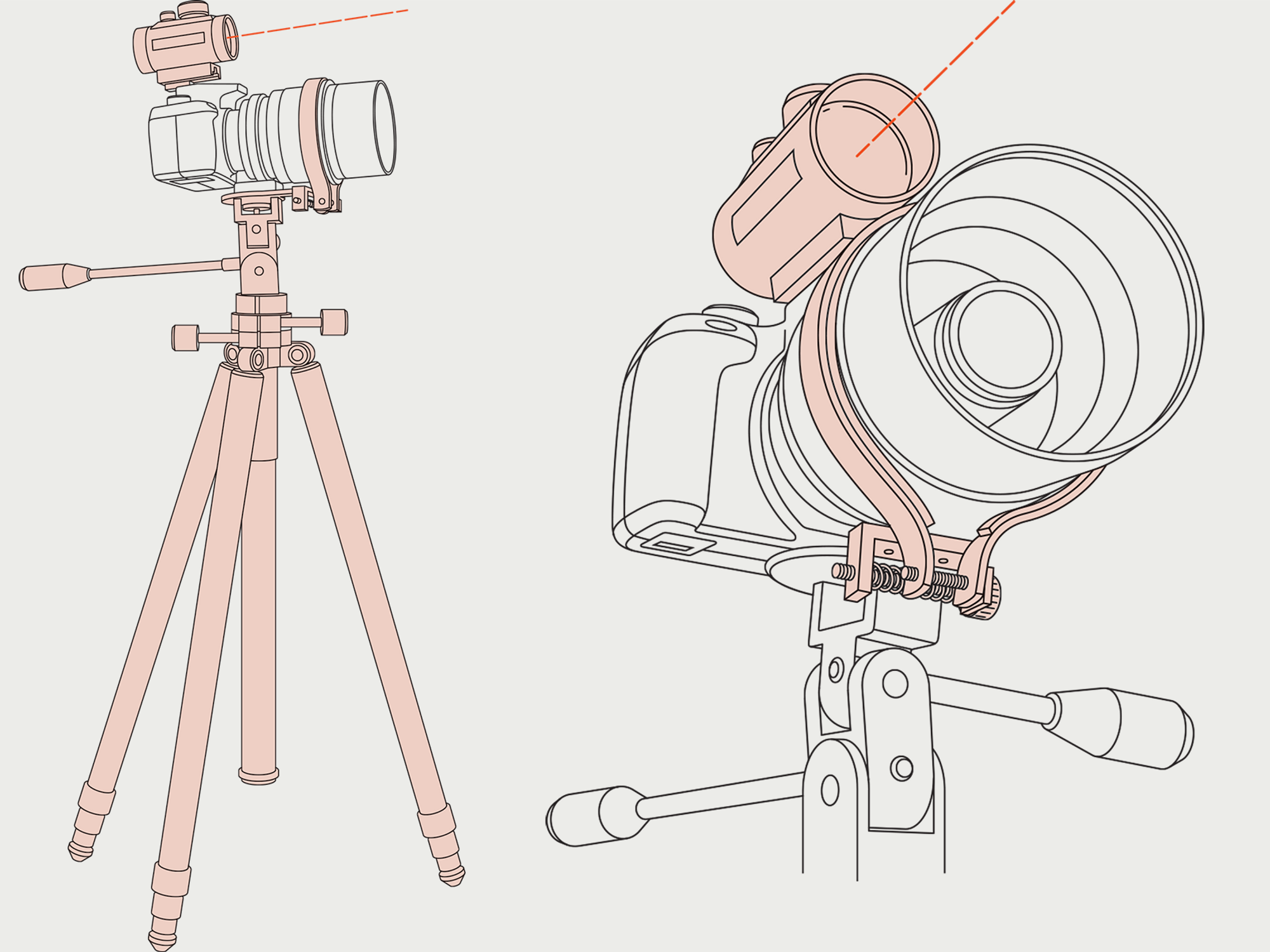

Electronically-assisted-astronomy photographs captured with my rig: the moon [top], the sun [middle], and the Orion Nebula [bottom] David Schneider Stacking software takes a series of images of the sky, compensates for the motion of the stars, and combines the images to simulate long exposures without blurring.

Stacking software takes a series of images of the sky, compensates for the motion of the stars, and combines the images to simulate long exposures without blurring.

A Navy unit used a remote-controlled vehicle with a mounted video camera in 2009 to investigate suspicious areas in southern Afghanistan.Mass Communication Specialist 2nd Class Patrick W. Mullen III/U.S. Navy

A Navy unit used a remote-controlled vehicle with a mounted video camera in 2009 to investigate suspicious areas in southern Afghanistan.Mass Communication Specialist 2nd Class Patrick W. Mullen III/U.S. Navy Soldiers test a vertical take-off-and-landing drone at Fort Campbell, Ky., in 2020.U.S. Army

Soldiers test a vertical take-off-and-landing drone at Fort Campbell, Ky., in 2020.U.S. Army Former U.S. deputy defense secretary Robert O. Work has argued for more automation within the military. Center for a New American Security

Former U.S. deputy defense secretary Robert O. Work has argued for more automation within the military. Center for a New American Security The author’s book,

The author’s book,  Members of a U.S. Air Force squadron test out an agile and rugged quadruped robot from Ghost Robotics in 2023.Airman First Class Isaiah Pedrazzini/U.S. Air Force

Members of a U.S. Air Force squadron test out an agile and rugged quadruped robot from Ghost Robotics in 2023.Airman First Class Isaiah Pedrazzini/U.S. Air Force U.S. Marines [top] prepare to launch and operate a MQ-9A Reaper drone in 2021. The Reaper [bottom] is designed for both high-altitude surveillance and destroying targets.Top: Lance Cpl. Gabrielle Sanders/U.S. Marine Corps; Bottom: 1st Lt. John Coppola/U.S. Marine Corps

U.S. Marines [top] prepare to launch and operate a MQ-9A Reaper drone in 2021. The Reaper [bottom] is designed for both high-altitude surveillance and destroying targets.Top: Lance Cpl. Gabrielle Sanders/U.S. Marine Corps; Bottom: 1st Lt. John Coppola/U.S. Marine Corps