In our last article, A How-To Guide on Acquiring AI Systems, we explained why the IEEE P3119 Standard for the Procurement of Artificial Intelligence (AI) and Automated Decision Systems (ADS) is needed.

In this article, we give further details about the draft standard and the use of regulatory “sandboxes” to test the developing standard against real-world AI procurement use cases.

Strengthening AI procurement practices

The IEEE P3119 draft standard is designed to help strengthen AI procurement approaches, using due diligence to ensure that agencies are critically evaluating the AI services and tools they acquire. The standard can give government agencies a method to ensure transparency from AI vendors about associated risks.

The standard is not meant to replace traditional procurement processes, but rather to optimize established practices. IEEE P3119’s risk-based approach to AI procurement follows the general principles in IEEE’s Ethically Aligned Design treatise, which prioritizes human well-being.

The draft guidance is written in accessible language and includes practical tools and rubrics. For example, it includes a scoring guide to help analyze the claims vendors make about their AI solutions.

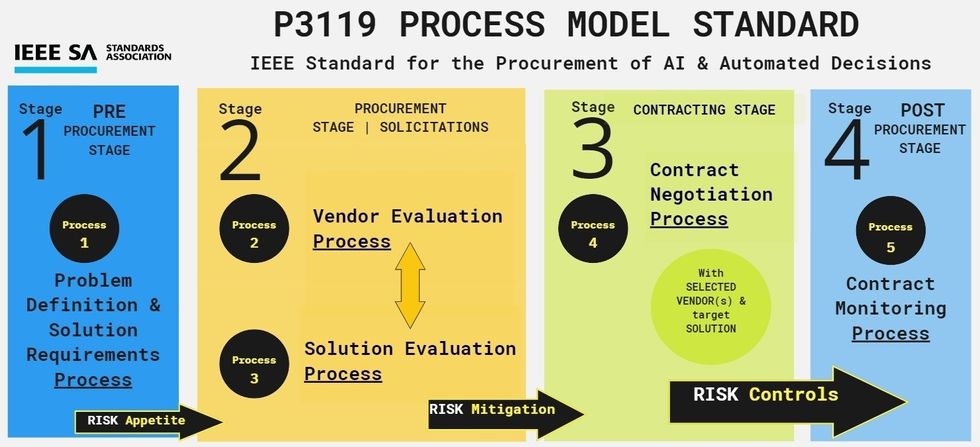

The IEEE P3119 standard is composed of five processes that will help users identify, mitigate, and monitor harms commonly associated with high-risk AI systems such as the automated decision systems found in education, health, employment, and many public sector areas.

An overview of the standard’s five processes is depicted below.

Gisele Waters

Steps for defining problems and business needs

The five processes are 1) defining the problem and solution requirements, 2) evaluating vendors, 3) evaluating solutions, 4) negotiating contracts, and 5) monitoring contracts. These occur across four stages: pre-procurement, procurement, contracting, and post-procurement. The processes will be integrated into what already happens in conventional global procurement cycles.

While the working group was developing the standard, it discovered that traditional procurement approaches often skip a pre-procurement stage of defining the problem or business need. Today, AI vendors offer solutions in search of problems instead of addressing problems that need solutions. That’s why the working group created tools to assist agencies with defining a problem and to assess the organization’s appetite for risk. These tools help agencies proactively plan procurements and outline appropriate solution requirements.

During the stage in which bids are solicited from vendors (often called the “request for proposals” or “invitation to tender” stage), the vendor evaluation and solution evaluation processes work in tandem to provide a deeper analysis. The vendor’s organizational AI governance practices and policies are assessed and scored, as are their solutions. With the standard, buyers will be required to get robust disclosure about the target AI systems to better understand what’s being sold. These AI transparency requirements are missing in existing procurement practices.

The contracting stage addresses gaps in existing software and information technology contract templates, which do not adequately account for the nuances and risks of AI systems. The standard offers reference contract language inspired by Amsterdam’s Contractual Terms for Algorithms, the European model contractual clauses, and clauses issued by the Society for Computers and Law AI Group.

Providers will be able to help control for the risks they identified in the earlier processes by aligning them with curated clauses in their contracts. This reference contract language can be indispensable to agencies negotiating with AI vendors. When technical knowledge of the product being procured is extremely limited, having curated clauses can help agencies negotiate with AI vendors and advocate to protect the public interest.

The post-procurement stage involves monitoring for the identified risks, as well as terms and conditions embedded into the contract. Key performance indicators and metrics are also continuously assessed.

The five processes offer a risk-based approach that most agencies can apply across a variety of AI procurement use cases.
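As a reading aid, the relationship between the five processes and the four stages described above can be sketched as a small lookup table. This is only an illustrative sketch in Python: the identifiers `PROCESS_TO_STAGE` and `processes_by_stage` are hypothetical and are not defined by the draft standard, and the assignment of each process to a stage is inferred from the descriptions in this article.

```python
from collections import defaultdict

# Hypothetical labels, inferred from the article's description of the
# five processes and four stages; not taken from the draft standard.
PROCESS_TO_STAGE = {
    "define problem and solution requirements": "pre-procurement",
    "evaluate vendors": "procurement",
    "evaluate solutions": "procurement",
    "negotiate contracts": "contracting",
    "monitor contracts": "post-procurement",
}


def processes_by_stage(mapping):
    """Group process names under the stage in which they occur."""
    grouped = defaultdict(list)
    for process, stage in mapping.items():
        grouped[stage].append(process)
    return dict(grouped)


if __name__ == "__main__":
    # Print each stage followed by the processes that run during it.
    for stage, processes in processes_by_stage(PROCESS_TO_STAGE).items():
        print(f"{stage}: {', '.join(processes)}")
```

Grouping by stage makes it visible that vendor evaluation and solution evaluation run side by side during the procurement stage, while each of the other stages hosts a single process.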

Sandboxes explore innovation and existing processes

In advance of the market deployment of AI systems, sandboxes are opportunities to explore and evaluate existing processes for the procurement of AI solutions.

Sandboxes are sometimes used in software development. They are isolated environments where new concepts and simulations can be tested. Harvard’s AI Sandbox, for example, enables university researchers to study security and privacy risks in generative AI.

Regulatory sandboxes are real-life testing environments for technologies and procedures that are not yet fully compliant with existing laws and regulations. They are typically enabled over a limited time period in a “safe space” where legal constraints are often “reduced” and agile exploration of innovation can occur. Regulatory sandboxes can contribute to evidence-based lawmaking and can provide feedback that allows agencies to identify possible challenges to new laws, standards, and technologies.

We sought a regulatory sandbox to test our assumptions and the components of the developing standard, aiming to explore how the standard would fare on real-world AI use cases.

In search of sandbox partners last year, we engaged with 12 government agencies representing local, regional, and transnational jurisdictions. The agencies all expressed interest in responsible AI procurement. Together, we advocated for a sandbox “proof of concept” collaboration in which the IEEE Standards Association, IEEE P3119 working group members, and our partners could test the standard’s guidance and tools against a retrospective or future AI procurement use case. During several months of meetings, we learned which agencies have personnel with both the authority and the bandwidth needed to partner with us.

Two entities in particular have shown promise as potential sandbox partners: an agency representing the European Union and a consortium of local government councils in the United Kingdom.

Our aspiration is to use a sandbox to assess the differences between current AI procurement procedures and what they could become if the draft standard were applied to them. For mutual gain, the sandbox would test for strengths and weaknesses in both existing procurement practices and the drafted IEEE P3119 components.

After conversations with government agencies, we faced the reality that a sandbox collaboration requires lengthy authorizations and considerations for both IEEE and the government entity. The European agency, for instance, navigates compliance with the EU AI Act, the General Data Protection Regulation, and its own acquisition regimes while managing procurement processes. Likewise, the U.K. councils bring requirements from their multilayered regulatory environment.

Those requirements, while not surprising, should be recognized as substantial technical and political challenges to getting sandboxes approved. The role of regulatory sandboxes, especially for AI-enabled public services in high-risk domains, is critical to informing innovation in procurement practices.

A regulatory sandbox can help us learn whether a voluntary consensus-based standard can make a difference in the procurement of AI solutions. Testing the standard in collaboration with sandbox partners would give it a better chance of successful adoption. We look forward to continuing our discussions and engagements with our potential partners.

The approved IEEE 3119 standard is expected to be published early next year, or possibly before the end of this year.

Reference: https://ift.tt/CKdIqTz
