In recent years, AI ethicists have had a tough job. The engineers developing generative AI tools have been racing ahead, competing with each other to create models of ever more breathtaking abilities, leaving both regulators and ethicists to comment on what's already been done.

One of the people working to shift this paradigm is Alice Xiang, global head of AI ethics at Sony. Xiang has worked to create an ethics-first process in AI development within Sony and in the larger AI community. She spoke to Spectrum about starting with the data and whether Sony, with half its business in content creation, could play a role in building a new kind of generative AI.

Alice Xiang on...

- Responsible data collection

- Her work at Sony

- The impact of new AI regulations

- Creator-centric generative AI

Responsible data collection

IEEE Spectrum: What’s the origin of your work on responsible data collection? And in that work, why have you focused specifically on computer vision?

Alice Xiang: In recent years, there has been a growing awareness of the importance of looking at AI development in terms of the entire life cycle, and not just thinking about AI ethics issues at the endpoint. And that's something we see in practice as well, when we're doing AI ethics evaluations within our company: Many AI ethics issues are really hard to address if you're just looking at things at the end. A lot of issues are rooted in the data collection process, issues like consent, privacy, fairness, and intellectual property. And a lot of AI researchers are not well equipped to think about these issues. It's not something that was necessarily in their curricula when they were in school.

In terms of generative AI, there is growing awareness that training data is not something you can just take off the shelf without thinking carefully about where it came from. And we really wanted to explore what practitioners should be doing and what the best practices are for data curation. Human-centric computer vision is arguably one of the most sensitive areas for this because you have biometric information.

Spectrum: The term “human-centric computer vision”: Does that mean computer vision systems that recognize human faces or human bodies?

Xiang: Since we’re focusing on the data layer, the way we typically define it is any sort of [computer vision] data that involves humans. So this ends up including a much wider range of AI. If you wanted to create a model that recognizes objects, for example—objects exist in a world that has humans, so you might want to have humans in your data even if that’s not the main focus. This kind of technology is very ubiquitous in both high- and low-risk contexts.

Spectrum: What were some of your findings about best practices in terms of privacy and fairness?

Xiang: The current baseline in the human-centric computer vision space is not great. This is definitely a field where researchers have been accustomed to using large web-scraped datasets that were assembled without any consideration of these ethical dimensions. So when we talk about, for example, privacy, we're focused on: Do people have any concept of their data being collected for this sort of use case? Are they informed of how the datasets are collected and used? And this work starts by asking: Are the researchers really thinking about the purpose of this data collection? This sounds very trivial, but it's something that usually doesn't happen. People often use datasets as available, rather than really trying to go out and source data in a thoughtful manner.

This also connects with issues of fairness. How broad is this data collection? When we look at this field, most of the major datasets are extremely U.S.-centric, and a lot of the biases we see are a result of that. For example, researchers have found that object-detection models tend to work far worse in lower-income countries than in higher-income countries, because most of the images are sourced from higher-income countries. Then on a human layer, that becomes even more problematic if the datasets are predominantly of Caucasian individuals and predominantly male individuals. A lot of these problems become very hard to fix once you're already using these [datasets].

So we start there, and then we go into much more detail as well: If you were to collect a dataset from scratch, what are some of the best practices? [Including] these purpose statements, the types of consent and best practices around human-subject research, considerations for vulnerable individuals, and thinking very carefully about the attributes and metadata that are collected.
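Xiang does not describe Sony's internal tooling, so what follows is only a minimal, hypothetical Python sketch of how the practices above might be encoded: each record carries the purposes its subject consented to, minors need an additional guardian-consent signal, revoked consent is honored, and capture-region metadata supports a check for the geographic skew described earlier. The `ImageRecord` schema, its field names, and the helpers `usable_for` and `region_shares` are illustrative assumptions, not Sony's method or any library's API.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ImageRecord:
    """One image in a human-centric vision dataset (hypothetical schema)."""
    image_id: str
    region: str  # where the image was captured, e.g. "South Asia"
    consented_purposes: frozenset[str] = frozenset()  # uses the subject agreed to
    subject_is_minor: bool = False
    guardian_consent: bool = False
    consent_revoked: bool = False


def usable_for(records: list[ImageRecord], purpose: str) -> list[ImageRecord]:
    """Keep only records whose consent covers the stated purpose."""
    usable = []
    for r in records:
        # Drop records whose consent was revoked or never covered this purpose.
        if r.consent_revoked or purpose not in r.consented_purposes:
            continue
        # Vulnerable individuals: require guardian consent for minors.
        if r.subject_is_minor and not r.guardian_consent:
            continue
        usable.append(r)
    return usable


def region_shares(records: list[ImageRecord]) -> dict[str, float]:
    """Fraction of records per capture region, to expose geographic skew."""
    counts = Counter(r.region for r in records)
    total = sum(counts.values())
    return {region: n / total for region, n in counts.items()} if total else {}


# Toy usage with made-up records.
records = [
    ImageRecord("a1", "North America", frozenset({"object_detection"})),
    ImageRecord("a2", "North America", frozenset({"object_detection"})),
    ImageRecord("a3", "South Asia", frozenset({"object_detection"}), subject_is_minor=True),
    ImageRecord("a4", "West Africa", frozenset({"face_analysis"})),
]
kept = usable_for(records, "object_detection")
print([r.image_id for r in kept])  # ['a1', 'a2']
print(region_shares(kept))  # {'North America': 1.0}
```

In this toy data, consent filtering alone leaves only North American images. That suggests running a composition audit on the data that actually survives curation, not just on the raw collection.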

Spectrum: I recently read Joy Buolamwini’s book Unmasking AI, in which she documents her painstaking process to put together a dataset that felt ethical. It was really impressive. Did you try to build a dataset that felt ethical in all the dimensions?

Xiang: Ethical data collection is an important area of focus for our research, and we have additional recent work on some of the challenges and opportunities for building more ethical datasets, such as the need for improved skin tone annotations and diversity in computer vision. As our own ethical data collection continues, we will have more to say on this subject in the coming months.

Her work at Sony

Spectrum: How does this work manifest within Sony? Are you working with internal teams who have been using these kinds of datasets? Are you saying they should stop using them?

Xiang: An important part of our ethics assessment process is asking folks about the datasets they use. The governance team that I lead spends a lot of time with the business units to talk through specific use cases. For particular datasets, we ask: What are the risks? How do we mitigate those risks? This is especially important for bespoke data collection. In the research and academic space, there's a primary corpus of datasets that people tend to draw from, but in industry, people are often creating their own bespoke datasets.

Spectrum: I know you’ve spoken about AI ethics by design. Is that something that’s in place already inside Sony? Are AI ethics talked about from the beginning stages of a product or a use case?

Xiang: Definitely. There are a bunch of different processes, but the one that’s probably the most concrete is our process for all our different electronics products. For that one, we have several checkpoints as part of the standard quality management system. This starts in the design and planning stage, and then goes to the development stage, and then the actual release of the product. As a result, we are talking about AI ethics issues from the very beginning, even before any sort of code has been written, when it’s just about the idea for the product.

The impact of new AI regulations

Spectrum: There’s been a lot of action recently on AI regulations and governance initiatives around the world. China already has AI regulations, the EU passed its AI Act, and here in the U.S. we had President Biden’s executive order. Have those changed either your practices or your thinking about product design cycles?

Xiang: Overall, it’s been very helpful in terms of increasing the relevance and visibility of AI ethics across the company. Sony’s a unique company in that we are simultaneously a major technology company, but also a major content company. A lot of our business is entertainment, including films, music, video games, and so forth. We’ve always been working very heavily with folks on the technology development side. Increasingly we’re spending time talking with folks on the content side, because now there’s a huge interest in AI in terms of the artists they represent, the content they’re disseminating, and how to protect rights.

Generative AI has also dramatically impacted that landscape. We've seen, for example, one of our executives at Sony Music making statements about the importance of consent, compensation, and credit for artists whose data is being used to train AI models. So [our work] has expanded beyond thinking about AI ethics for specific products to also include the broader landscape of rights: How do we protect our artists? How do we move AI in a direction that is more creator-centric? That's something that is quite unique about Sony, because most of the other companies that are very active in this AI space don't have much of an incentive in terms of protecting data rights.

Creator-centric generative AI

Spectrum: I’d love to see what more creator-centric AI would look like. Can you imagine it being one in which the people who make generative AI models get consent or compensate artists if they train on their material?

Xiang: It’s a very challenging question. I think this is one area where our work on ethical data curation can hopefully be a starting point, because we see the same problems in generative AI that we see for more classical AI models. Except they’re even more important, because it’s not only a matter of whether my image is being used to train a model, now [the model] might be able to generate new images of people who look like me, or if I’m the copyright holder, it might be able to generate new images in my style. So a lot of these things that we’re trying to push on—consent, fairness, IP and such—they become a lot more important when we’re thinking about [generative AI]. I hope that both our past research and future research projects will be able to really help.

Spectrum: Are you able to say whether Sony is developing generative AI models?

Xiang: I can’t speak for all of Sony, but certainly we believe that AI technology, including generative AI, has the potential to augment human creativity. In the context of my work, we think a lot about the need to respect the rights of stakeholders, including creators, through the building of AI systems that creators can use with peace of mind.

Spectrum: I've been thinking a lot lately about generative AI's problems with copyright and IP. Do you think it's something that can be patched with the generative AI systems we have now, or do you think we really need to start over with how we train these things? And this can be totally your opinion, not Sony's opinion.

Xiang: In my personal opinion, I think with everything AI ethics related, it’s going to be impossible to be purists. Even though we are pushing very strongly for these best practices, we also acknowledge in all our research papers just how insanely difficult this is. If you were to, for example, uphold the highest practices for obtaining consent, it’s difficult to imagine that you could have datasets of the magnitude that a lot of the models nowadays require. You’d have to maintain relationships with billions of people around the world in terms of informing them of how their data is being used and letting them revoke consent.

Part of the problem right now is when people say “go get consent,” we don’t have that debate or negotiation of what is reasonable. The tendency becomes either to throw the baby out with the bathwater and ignore this issue, or go to the other extreme, and not have the technology at all. I think the reality will always have to be somewhere in between.

So when it comes to these issues of reproduction of IP-infringing content, I think it’s great that there’s a lot of research now being done on this specific topic. There are a lot of patches and filters that people are proposing. That said, I think we also will need to think more carefully about the data layer as well. I don’t think we can just say, “Well, it’s way too hard for us to solve today, so we’re just going to try to filter the output at the end.”

We'll ultimately see what shakes out in the courts in terms of whether this is going to be okay from a legal perspective. But from an ethics perspective, I think we're at a point where there needs to be deep conversations on what is reasonable in terms of the relationships between companies that benefit from AI technologies and the people whose works were used to create it. My hope is that Sony can play a role in those conversations.

Reference: https://ift.tt/0jIt3ua

The Perseverance rover took this picture of Ingenuity on Aug. 2, 2023, just before flight 54. NASA/JPL-Caltech/ASU/MSSS

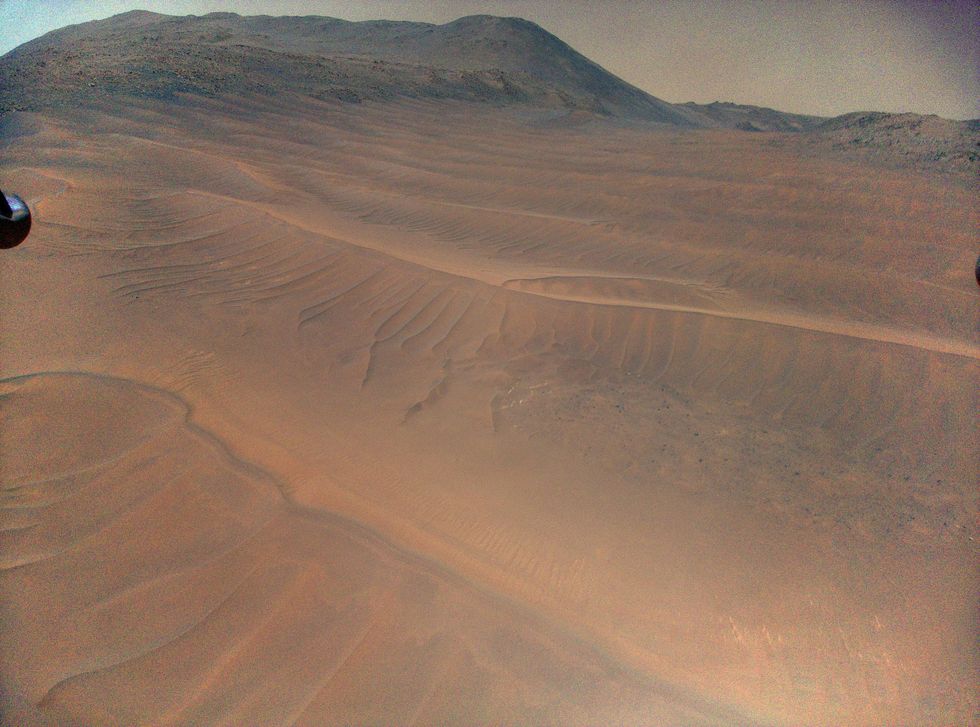

This view from Ingenuity's navigation camera during flight 70 (on December 22) shows areas of nearly featureless terrain that would cause problems during flights 71 and 72. NASA/JPL-Caltech

A closeup of the shadow of the damaged blade tip. NASA/JPL-Caltech

Perseverance watches Ingenuity take off on flight 47 on March 14, 2023. NASA/JPL-Caltech/ASU/MSSS