When Figure announced earlier this year that it was working on a general-purpose humanoid robot, our excitement was tempered somewhat by the fact that the company didn’t have much to show besides renders of the robot that it hoped to eventually build. Figure had a slick-looking vision, but without anything to back it up (besides a world-class robotics team, of course), it was unclear how fast they’d be able to progress.

As it turns out, they’ve progressed pretty darn fast, and today Figure is unveiling its Figure 01 robot, which has gone from nothing at all to dynamic walking in under a year.

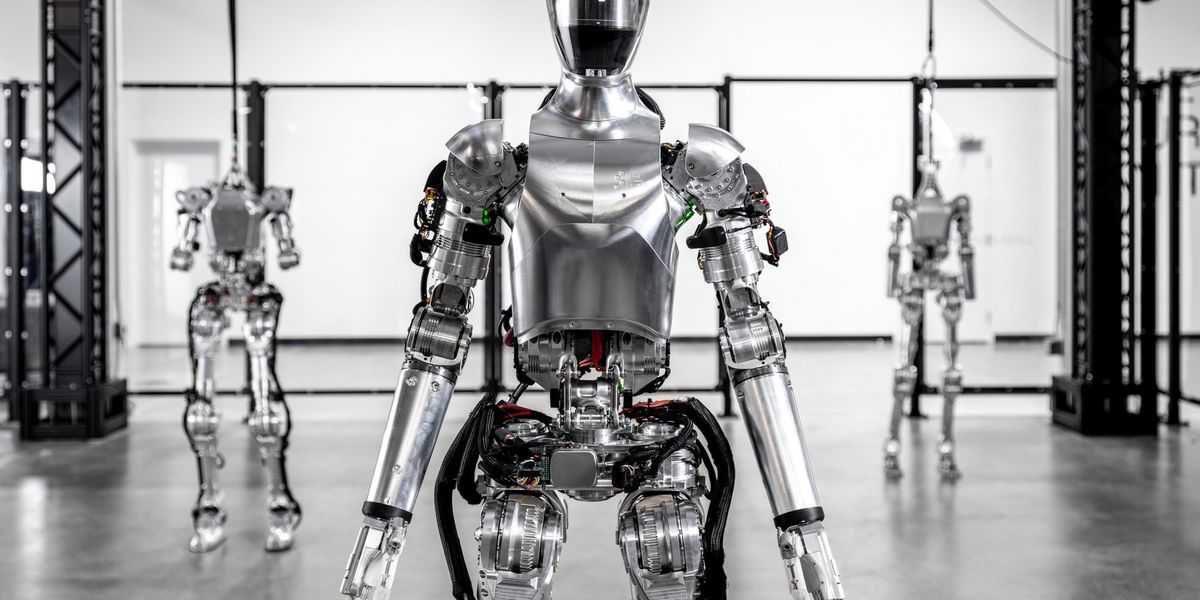

A couple of things to note about the video, once you tear your eyes away from that shiny metal skin and the enormous battery backpack: first, the robot is walking dynamically without a tether and there are no nervous-looking roboticists within easy grabbing distance. Impressive! Dynamic walking means that there are points during the robot’s gait cycle where abruptly stopping would cause the robot to fall over, since it’s depending on momentum to keep itself in motion. It’s the kind of walking that humans do, and is significantly more difficult than a more traditional “robotic” walk, in which a robot makes sure that its center of mass is always safely positioned above a solid ground contact. Dynamic walking is also where those gentle arm swings come from—they’re helping keep the robot’s motion smooth and balanced, again in a human-like way.
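
The difference between “robotic” static walking and dynamic walking can be made concrete with a small geometric check. The sketch below is a simplified illustration, not Figure’s controller. It assumes a flat floor, treats the foot contact area as a convex support polygon with vertices listed counter-clockwise, and tests whether the ground projection of the center of mass (CoM) lies inside it. A statically stable walker keeps this test true at every instant. A dynamic walker deliberately lets it fail for parts of the gait and relies on momentum instead. The function name and foot dimensions are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def com_inside_support_polygon(com_xy: Point, polygon: List[Point]) -> bool:
    """Return True if the CoM ground projection lies inside (or on the edge of)
    a convex support polygon whose vertices are listed counter-clockwise."""
    x, y = com_xy
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # 2D cross product of the edge vector and the vector from the edge start
        # to the CoM. A negative value means the CoM is to the right of this
        # counter-clockwise edge, i.e. outside the polygon.
        cross = (x2 - x1) * (y - y1) - (y2 - y1) * (x - x1)
        if cross < 0:
            return False
    return True


# Hypothetical single-foot contact, in metres: a 25 cm x 10 cm rectangle (CCW).
left_foot = [(0.0, 0.0), (0.25, 0.0), (0.25, 0.1), (0.0, 0.1)]
print(com_inside_support_polygon((0.10, 0.05), left_foot))  # True: statically stable
print(com_inside_support_polygon((0.35, 0.05), left_foot))  # False: CoM is ahead of the foot
```

In a dynamic gait, the second case is normal: the CoM moves ahead of the stance foot and the robot “catches” itself with the next step rather than stopping.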

The second thing that stands out is how skinny (and shiny!) this robot is, especially if you can look past the cable routing. Figure had initially shown renderings of a robot with the form factor of a slim human, but there’s usually a pretty big difference between an initial fancy render and real hardware that shows up months later. It now looks like Figure actually has a shot at keeping to that slim design, which has multiple benefits—there are human-robot interaction considerations, where a smaller form factor is likely to be less intimidating, but more importantly, the mass you save by slimming down as much as possible leads to a robot that’s more efficient, cheaper, and safer.

Obviously, there’s a lot more going on here than Figure could squeeze into its press release, so for more details, we spoke with Jenna Reher, a Senior Robotics/AI Engineer at Figure, and Jerry Pratt, Figure’s CTO.

What was the process like for you to teach this robot how to walk? How difficult was it to do that in a year?

Jenna Reher: We’ve been really focused on making sure that we’re validating a lot of the hardware as it’s built. With the robot that’s shown in the video, earlier this year it was just the pelvis bolted onto a test fixture. Then we added the spine joints and all the joints connected to that pelvis, and literally built the robot out from that pelvis. We added the legs and had those swinging in the air, and then built up the torso on top of that. At each of those stages, we were making sure to have a good process for validating that those low-level pieces of this overall system were really well tuned. I think that once you get to something as complex as a whole humanoid, all that validation really saves you a lot of time on the other end, since you have a lot more confidence in the lower-level systems as you start working on higher-level behaviors like locomotion.

We also have a lot of people at the company that have experience on prior legged robotic platforms, so there’s a well of knowledge that we can draw from there. And there’s a large pool of literature that’s been published by people in the locomotion community that roboticists can now pull from. With our locomotion controller, it’s not like we’re trying to reinvent stable locomotion, so being able to implement things that we know already work is a big help.

Jerry Pratt: The walking algorithm we’re using has a lot of similarities to the ones that were developed during the DARPA Robotics Challenge. We’re doing a lot of machine learning on the perception side, but we’re not really doing any machine learning for control right now. For the walking algorithm, it’s pretty much robotics controllers 101.

And Jenna mentioned the step-by-step hardware bring-up. While that’s happening, we’re doing a lot of development on the controller in simulation to get to the point where the robot is walking in simulation pretty well, which means that we have a good chance of the controller working on the real robot once it comes online. I think as a company, we’ve done a good job coordinating all the pieces, and a lot of that has come from having people with the experience of having done this several times before.

More broadly, eight years after the DARPA Robotics Challenge, how hard is it to get a human-sized bipedal robot to walk?

Pratt: Theoretically, we understand walking pretty well now. There are a lot of different simple models, different toolboxes that are available, and about a dozen different approaches that you can take. A lot of it depends on having good hardware—it can be really difficult if you don’t have that. But for okay-ish walking on flat ground, it’s become easier and easier now with all of the prior work that’s been done.
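
As one example of the kind of simple model Pratt mentions, the linear inverted pendulum treats the robot as a point mass held at a constant height above flat ground and balanced on a massless leg. A widely used quantity under that model is the instantaneous capture point: the spot on the ground where the robot would need to step to come to rest. The sketch below computes it in one dimension. It illustrates a textbook model only; it is not a description of Figure’s walking controller, and the CoM height and velocities are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def capture_point(com_x: float, com_vx: float, com_height: float, g: float = G) -> float:
    """Instantaneous capture point of a 1D linear inverted pendulum.

    Assumes a point-mass center of mass (CoM) kept at a constant height
    `com_height` above flat ground. Under this model, placing the foot at the
    returned position brings the CoM asymptotically to rest above that foot.
    """
    omega = math.sqrt(g / com_height)  # pendulum natural frequency, 1/s
    return com_x + com_vx / omega


# Hypothetical numbers: CoM 0.9 m high, currently directly above x = 0.
print(f"{capture_point(0.0, 0.5, 0.9):.3f}")  # 0.151 (metres ahead at 0.5 m/s)
print(f"{capture_point(0.0, 1.0, 0.9):.3f}")  # 0.303 (metres ahead at 1.0 m/s)
```

Under this model the required step grows linearly with CoM velocity, which is one way to see why a hard push can be unrecoverable: if the capture point lands farther out than the leg can reach, or the push arrives when no new step can be placed in time, the robot cannot catch itself.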

There are still a lot of challenges for walking naturally, though. We really want to get to the point where our robot looks like a human when it walks. There are some robots that have gotten close, but none that I would say have passed the Turing test for walking, where if you looked at a silhouette of it, you’d think it was a human. Although, there’s not a good business case for doing that, except that it should be more efficient.

Reher: Walking is becoming more and more understood, and also accessible to roboticists if you have the hardware for it, but there are still a lot of challenges to be able to walk while doing something useful at the same time: interacting with your environment while moving, manipulating things while moving. These are still challenging problems.

What are some important things to look for when you see a bipedal robot walk to get a sense of how capable it might be?

Reher: I think we as humans have pretty good intuition for judging how well something is locomoting—we’re kind of hardwired to do it. So if you see buzzing oscillations, or a very stiff upper body, those may be indications that a robot’s low-level controls are not quite there. A lot of success in bipedal walking comes down to making sure that a very complex systems engineering architecture is all playing nice together.

Pratt: There have been some recent efforts to come up with performance metrics for walking. Some are kind of obvious, like walking speed. Some are harder to measure, like robustness to disturbances, because it matters what phase of gait the robot is at when it gets pushed—if you push it at just the right time, it’s much harder for it to recover. But I think the person pushing the robot test is a pretty good one. While we haven’t done pushes yet, we probably will in an upcoming video.

How important is it for your robot to be able to fall safely, and at what point do you start designing for that?

Pratt: I think it’s critical to fall safely, to survive a fall, and be able to get back up. People fall (not very often, but they do), and they get back up. And there will be times in almost any application where the robot falls for one reason or another and we’re going to have to just accept that. I often tell people working on humanoids to build in a fall behavior. If the robot’s not falling, make it fall! Because if you’re trying to make the robot so that it can never fall, it’s just too hard of a problem, and it’s going to fall anyway, and then it’ll be dangerous.

I think falling can be done safely. As long as computers are still in control of the hardware, you can do very graceful, judo-style falls. You should be able to detect where people are if you are falling, and fall away from them. So, I think we can make these robots relatively safe. The hardest part of falling, I think, is protecting your hands so they don’t break as you’re falling. But it’s definitely not an insurmountable problem.

Industrial design is a focus of Figure. (Image: Figure)

You have a very slim and shiny robot. Did the design require any engineering compromises?

Pratt: It’s actually a really healthy collaboration. We’re trying to fit inside a medium-size female body shape, and so the industrial design team will make these really sleek looking robot silhouettes and say, “okay mechanical team, everything needs to fit in there.” And the mechanical team will be like, “we can’t fit that motor in, we need a couple more millimeters.” It’s kind of fun watching the discussions, and sometimes there will be arguments and stuff, but it almost always leads to a better design. Even if it’s simply because it causes us to look at the problem a couple of extra times.

Reher: From my perspective, the kind of interaction with the mechanical engineers that led to the robot that we have now has been very beneficial for the controls side. We have a sleeker design with lower inertia legs, which means that we’re not trying to move a lot of mass around. That ends up helping us down the line for designing control algorithms that we can execute on the hardware.
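
A rough, idealized way to see why lower-inertia legs help: treat the swinging leg as a uniform rod of mass $m$ and length $L$ pivoting at the hip, and ignore gravity and where the motors actually sit. The hip torque needed for a given angular acceleration $\ddot{\theta}$ is then

$$
\tau_{\text{hip}} = I\,\ddot{\theta}, \qquad I = \tfrac{1}{3}\,m L^{2}
$$

so, for the same swing motion, the required torque scales linearly with leg mass and with the square of leg length. More generally, a point mass at distance $r$ from the hip contributes $m r^{2}$ to $I$, so mass far from the hip costs much more torque than the same mass close to it.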

Pratt: That’s right. And keeping the legs slim allows you to do things like crossover steps: you get more range of motion because you don’t have parts of the robot bumping into each other. Self-collisions are something that you always have to worry about with a robot, so having fewer protruding cables or bumps is pretty important.

Your CEO posted a picture of some compact custom actuators that your robot is using. Do you feel like your actuator design (or something else) gives your robot some kind of secret sauce that will help it be successful?

Figure’s custom actuator (left) vs. off-the-shelf actuator (right) with equal torque. (Image: Figure)

Pratt: At this point, it’s mostly really amazing engineering and software development and super talented people. About half of our team have worked on humanoids before, and half of our team have worked in some related field. That’s important: things like making batteries for cars, making electric motors for cars, software and management systems for electric airplanes. There are a few things we’ve learned along the way that we hadn’t learned before. Maybe they’re not super secret things that other people don’t know, but there’s a handful of tricks that we’ve picked up from bashing our heads against some problem over and over. But there’s not a lot of new technology going into the robot, let’s put it that way.

Are there opportunities in the humanoid robot space for someone to develop a new technology that would significantly change the industry?

Pratt: I think it’s getting to whatever it takes to open up new application areas, and doing it relatively quickly. We’re interested in things like using large language models to plan general-purpose tasks, but they’re not quite there yet. A lot of the examples that you see are at the research-y stage, where they might work until you change up what’s going on; they’re not robust. But if someone cracks that open, that’s a huge advantage.

And then hand designs. If somebody can come up with a super-robust hand with a large number of degrees of freedom and both force sensing and tactile sensing, that would be huge too.

The robot is designed to fit inside a medium-size female body shape. (Image: Figure)

This is a lot of progress from Figure in a very short time, but they’re certainly not alone in their goal of developing a commercial bipedal robot, and relative to other companies who’ve had operational hardware for longer, Figure may have some catching up to do. Or they may not—until we start seeing robots doing practical tasks outside of carefully controlled environments, it’s hard to know for sure.

Reference: https://ift.tt/nHyLVKY