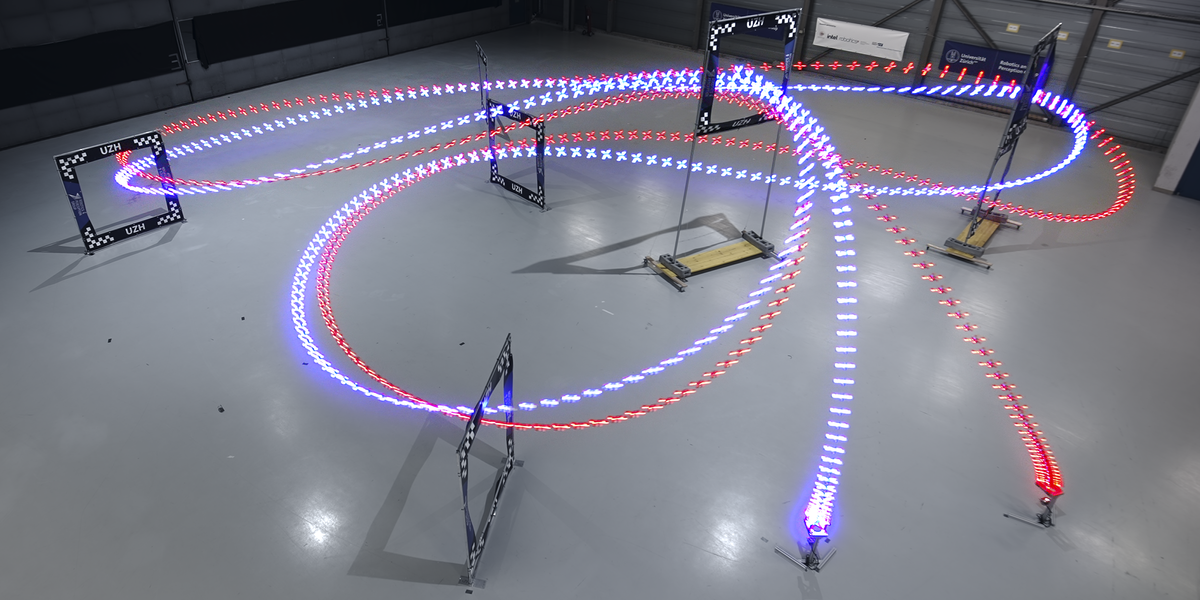

The drone screams. It’s flying so fast that following it with my camera is hopeless, so I give up and watch in disbelief. The shrieking whine from the four motors of the racing quadrotor Dopplers up and down as the drone twists, turns, and backflips its way through the square plastic gates of the course at a speed that is literally superhuman. I’m cowering behind a safety net, inside a hangar at an airfield just outside of Zurich, along with the drone’s creators from the Robotics and Perception Group at the University of Zurich.

“I don’t even know what I just watched,” says Alex Vanover, as the drone comes to a hovering halt after completing the 75-meter course in 5.3 seconds. “That was beautiful,” Thomas Bitmatta adds. “One day, my dream is to be able to achieve that.” Vanover and Bitmatta are arguably the world’s best drone-racing pilots, multiyear champions of highly competitive international drone-racing circuits. And they’re here to prove that human pilots have not been bested by robots. Yet.

AI Racing FPV Drone Full Send! - University of Zurich youtu.be

Comparing these high-performance quadrotors to the kind of drones that hobbyists use for photography is like comparing a jet fighter to a light aircraft: Racing quadrotors are heavily optimized for speed and agility. A typical racing quadrotor can produce 35 newtons (about 7.9 pounds-force) of thrust, with four motors spinning tribladed propellers at 30,000 rpm. The drone weighs just 870 grams, including a 1,800-milliampere-hour battery that lasts a mere 2 minutes. This extreme power-to-weight ratio allows the drone to accelerate at 4.5 gs, reaching 100 kilometers per hour in less than a second.
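
As a rough check on that last figure, here is a minimal sketch that assumes constant acceleration from rest and ignores drag. The function name and the rounded value of standard gravity are illustrative choices, not something from the Zurich team.

```python
G = 9.81  # standard gravity in m/s^2 (rounded; an assumption of this sketch)


def time_to_speed(target_kmh: float, accel_g: float) -> float:
    """Seconds needed to reach target_kmh from rest at a constant accel_g (in g)."""
    target_ms = target_kmh / 3.6          # km/h -> m/s
    return target_ms / (accel_g * G)      # t = v / a


t = time_to_speed(100, 4.5)
print(f"0 to 100 km/h at 4.5 g: about {t:.2f} s")
```

Under these assumptions the result lands well under one second, which is consistent with the claim above.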

The autonomous racing quadrotors have similar specs, but the one we just saw fly doesn’t have a camera because it doesn’t need one. Instead, the hangar has been equipped with a 36-camera infrared tracking system that can localize the drone within millimeters, 400 times every second. By combining the location data with a map of the course, an off-board computer can steer the drone along an optimal trajectory, which would be difficult, if not impossible, for even the best human pilot to match.

These autonomous drones are, in a sense, cheating. The human pilots have access only to the single view from a camera mounted on the drone, along with their knowledge of the course and flying experience. So, it’s really no surprise that US $400,000 worth of sensors and computers can outperform a human pilot. But these professional drone pilots came to Zurich to see how they would do in a competition that’s actually fair.

A human-piloted racing drone [red] chases an autonomous vision-based drone [blue] through a gate at over 13 meters per second. Leonard Bauersfeld

Solving Drone Racing

By the Numbers: Autonomous Racing Drones

| Specification | Value |
| --- | --- |
| Frame size | 215 millimeters |
| Weight | 870 grams |
| Maximum thrust | 35 newtons (about 7.9 pounds-force) |
| Flight duration | 2 minutes |
| Acceleration | 4.5 gs |
| Top speed | 130+ kilometers per hour |
| Onboard sensing | Intel RealSense T265 tracking camera |
| Onboard computing | Nvidia Jetson TX2 |

“We’re trying to make history,” says Davide Scaramuzza, who leads the Robotics and Perception Group at the University of Zurich (UZH). “We want to demonstrate that an AI-powered, vision-based drone can achieve human-level, and maybe even superhuman-level, performance in a drone race.” Using vision is the key here: Scaramuzza has been working on drones that sense the way most people do, relying on cameras to perceive the world around them and making decisions based primarily on that visual data. This is what will make the race fair—human eyes and a human brain versus robotic eyes and a robotic brain, each competitor flying the same racing quadrotors as fast as possible around the same course.

“Drone racing [against humans] is an ideal framework for evaluating the progress of autonomous vision-based robotics,” Scaramuzza explains. “And when you solve drone racing, the applications go much further because this problem can be generalized to other robotics applications, like inspection, delivery, or search and rescue.”

While there are already drones doing these tasks, they tend to fly slowly and carefully. According to Scaramuzza, being able to fly faster can make drones more efficient, improving their flight duration and range and thus their utility. “If you want drones to replace humans at dull, difficult, or dangerous tasks, the drones will have to do things faster or more efficiently than humans. That is what we are working toward—that’s our ambition,” Scaramuzza explains. “There are many hard challenges in robotics. Fast, agile, autonomous flight is one of them.”

Autonomous Navigation

Scaramuzza’s autonomous-drone system, called Swift, starts with a three-dimensional map of the course. The human pilots have access to this map as well, so that they can practice in simulation. The goal of both human and robot-drone pilots is to fly through each gate as quickly as possible, and the best way of doing this is via what’s called a time-optimal trajectory.

Robots have an advantage here because it’s possible (in simulation) to calculate this trajectory for a given course in a way that is provably optimal. But knowing the optimal trajectory gets you only so far. Scaramuzza explains that simulations are never completely accurate, and things that are especially hard to model—including the turbulent aerodynamics of a drone flying through a gate and the flexibility of the drone itself—make it difficult to stick to that optimal trajectory.

While the human-piloted drones [red] are each equipped with an FPV camera, each of the autonomous drones [blue] has an Intel RealSense vision system powered by an Nvidia Jetson TX2 onboard computer. Both sets of drones are also equipped with reflective markers that are tracked by an external camera system. Evan Ackerman

The solution, says Scaramuzza, is to use deep-reinforcement learning. You’re still training your system in simulation, but you’re also tasking your reinforcement-learning algorithm with making continuous adjustments, tuning the system to a specific track in a real-world environment. Some real-world data is collected on the track and added to the simulation, allowing the algorithm to incorporate realistically “noisy” data to better prepare it for flying the actual course. The drone will never fly the most mathematically optimal trajectory this way, but it will fly much faster than it would using a trajectory designed in an entirely simulated environment.
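
To make the idea of feeding realistically noisy data into a simulator concrete, here is a deliberately simple sketch. It is not the method the Zurich team used: it only assumes that position errors measured on the real track have been recorded, and it adds one of them at random to each simulated position. The function name, the residual values, and the seed are all hypothetical.

```python
import random


def augment_with_real_residuals(sim_position, recorded_residuals, rng):
    """Return sim_position shifted by one randomly chosen recorded error."""
    dx, dy, dz = rng.choice(recorded_residuals)
    x, y, z = sim_position
    return (x + dx, y + dy, z + dz)


# Hypothetical residuals in meters: (real-world estimate) minus (ground truth).
recorded_residuals = [
    (0.02, -0.01, 0.00),
    (-0.03, 0.02, 0.01),
    (0.01, 0.00, -0.02),
]

rng = random.Random(0)            # fixed seed so runs are repeatable
sim_position = (1.0, 2.0, 1.5)    # a clean position from the simulator
noisy = augment_with_real_residuals(sim_position, recorded_residuals, rng)
print(noisy)
```

A policy trained on positions like `noisy` rather than `sim_position` sees errors shaped like the real ones, which is the intuition behind the approach described above.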

From there, the only thing that remains is to determine how far to push Swift. One of the lead researchers, Elia Kaufmann, quotes Mario Andretti: “If everything seems under control, you’re just not going fast enough.” Finding that edge of control is the only way the autonomous vision-based quadrotors will be able to fly faster than those controlled by humans. “If we had a successful run, we just cranked up the speed again,” Kaufmann says. “And we’d keep doing that until we crashed. Very often, our conditions for going home at the end of the day are either everything has worked, which never happens, or that all the drones are broken.”

Although the autonomous vision-based drones were fast, they were also less robust. Even small errors could lead to crashes from which the autonomous drones could not recover. Regina Sablotny

How the Robots Fly

Once Swift has determined its desired trajectory, it needs to navigate the drone along that trajectory. Whether you’re flying a drone or driving a car, navigation involves two fundamental things: knowing where you are and knowing how to get where you want to go. The autonomous drones have calculated the time-optimal route in advance, but to fly that route, they need a reliable way to determine their own location as well as their velocity and orientation.

To that end, the quadrotor uses an Intel RealSense vision system to identify the corners of the racing gates and other visual features to localize itself on the course. An Nvidia Jetson TX2 module, which includes a GPU, a CPU, and associated hardware, manages all of the image processing and control on board.

Using only vision imposes significant constraints on how the drone flies. For example, while quadrotors are equally capable of flying in any direction, Swift’s camera needs to point forward most of the time. There’s also the issue of motion blur, which occurs when the exposure length of a single frame in the drone’s camera feed is long enough that the drone’s own motion over that time becomes significant. Motion blur is especially problematic when the drone is turning: The high angular velocity results in blurring that essentially renders the drone blind. The roboticists have to plan their flight paths to minimize motion blur, finding a compromise between a time-optimal flight path and one that the drone can fly without crashing.
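
The effect of turning on motion blur can be estimated with a small-angle approximation: the image smears by roughly the angle swept during the exposure multiplied by the focal length in pixels. The sketch below uses that approximation; the turn rate, exposure time, and focal length are hypothetical values chosen for illustration, not measurements from Swift's camera.

```python
import math


def rotational_blur_px(turn_rate_deg_s: float, exposure_s: float,
                       focal_length_px: float) -> float:
    """Approximate blur in pixels caused by camera rotation during one exposure."""
    swept_angle_rad = math.radians(turn_rate_deg_s) * exposure_s
    return focal_length_px * swept_angle_rad   # small-angle approximation


# Hypothetical numbers: 600 deg/s turn, 5 ms exposure, 300 px focal length.
blur = rotational_blur_px(600.0, 0.005, 300.0)
print(f"approximate blur: {blur:.1f} px")
```

Because the blur grows linearly with turn rate in this model, halving the angular velocity through a corner halves the smear, which is one way to see why the planned path trades some speed for sharper images.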

Davide Scaramuzza [far left], Elia Kaufmann [far right], and other roboticists from the University of Zurich watch a close race. Regina Sablotny

How the Humans Fly

For the human pilots, the challenges are similar. The quadrotors are capable of far better performance than pilots normally take advantage of. Bitmatta estimates that he flies his drone at about 60 percent of its maximum performance. But the biggest limiting factor for the human pilots is the video feed.

People race drones in what’s called first-person view (FPV), using video goggles that display a real-time feed from a camera mounted on the front of the drone. The FPV video systems that the pilots used in Zurich can transmit at 60 interlaced frames per second in relatively poor analog VGA quality. In simulation, drone pilots practice in HD at over 200 frames per second, which makes a substantial difference. “Some of the decisions that we make are based on just four frames of data,” explains Bitmatta. “Higher-quality video, with better frame rates and lower latency, would give us a lot more data to use.” Still, one of the things that impresses the roboticists the most is just how well people perform with the video quality available. It suggests that these pilots develop the ability to perform the equivalent of the robot’s localization and state-estimation algorithms.
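
To put "four frames of data" in perspective, the following sketch converts a frame count into elapsed time and distance flown. It assumes the feed delivers 60 distinct updates per second and borrows the 13-meters-per-second figure from the photo caption earlier in the article; the function name is illustrative.

```python
def frames_to_time_and_distance(frames: int, fps: float, speed_ms: float):
    """Return (seconds spanned by the frames, meters flown in that time)."""
    seconds = frames / fps
    return seconds, seconds * speed_ms


# Four frames on the 60 fps analog feed versus a 200 fps simulator, at 13 m/s.
t_fpv, d_fpv = frames_to_time_and_distance(4, 60, 13.0)
t_sim, d_sim = frames_to_time_and_distance(4, 200, 13.0)
print(f"FPV feed:  {t_fpv * 1000:.0f} ms, {d_fpv:.2f} m")
print(f"Simulator: {t_sim * 1000:.0f} ms, {d_sim:.2f} m")
```

Under these assumptions, four frames on the race-day feed span more than three times as long as four frames in the simulator, so the drone covers correspondingly more ground between the pilot's decisions.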

It seems as though the human pilots are also attempting to calculate a time-optimal trajectory, Scaramuzza says. “Some pilots have told us that they try to imagine an imaginary line through a course, after several hours of rehearsal. So we speculate that they are actually building a mental map of the environment, and learning to compute an optimal trajectory to follow. It’s very interesting—it seems that both the humans and the machines are reasoning in the same way.”

But in his effort to fly faster, Bitmatta tries to avoid following a predefined trajectory. “With predictive flying, I’m trying to fly to the plan that I have in my head. With reactive flying, I’m looking at what’s in front of me and constantly reacting to my environment.” Predictive flying can be fast in a controlled environment, but if anything unpredictable happens, or if Bitmatta has even a brief lapse in concentration, the drone will have traveled tens of meters before he can react. “Flying reactively from the start can help you to recover from the unexpected,” he says.

Will Humans Have an Edge?

“Human pilots are much more able to generalize, to make decisions on the fly, and to learn from experiences than are the autonomous systems that we currently have,” explains Christian Pfeiffer, a neuroscientist turned roboticist at UZH who studies how human drone pilots do what they do. “Humans have adapted to plan into the future—robots don’t have that long-term vision. I see that as one of the main differences between humans and autonomous systems right now.”

Scaramuzza agrees. “Humans have much more experience, accumulated through years of interacting with the world,” he says. “Their knowledge is so much broader because they’ve been trained across many different situations. At the moment, the problem that we face in the robotics community is that we always need to train an algorithm for each specific task. Humans are still better than any machine because humans can make better decisions in very complex situations and in the presence of imperfect data.”

This understanding that humans are still far better generalists has placed some significant constraints on the race. The “fairness” is heavily biased in favor of the robots in that the race, while designed to be as equal as possible, is taking place in the only environment in which Swift is likely to have a chance. The roboticists have done their best to minimize unpredictability—there’s no wind inside of the hangar, for example, and the illumination is tightly controlled. “We are using state-of-the-art perception algorithms,” Scaramuzza explains, “but even the best algorithms still have a lot of failure modes because of illumination changes.”

To ensure consistent lighting, almost all of the data for Swift’s training was collected at night, says Kaufmann. “The nice thing about night is that you can control the illumination; you can switch on the lights and you have the same conditions every time. If you fly in the morning, when the sunlight is entering the hangar, all that backlight makes it difficult for the camera to see the gates. We can handle these conditions, but we have to fly at slower speeds. When we push the system to its absolute limits, we sacrifice robustness.”

Race Day

The race starts on a Saturday morning. Sunlight streams through the hangar’s skylights and open doors, and as the human pilots and autonomous drones start to fly test laps around the track, it’s immediately obvious that the vision-based drones are not performing as well as they did the night before. They’re regularly clipping the sides of the gates and spinning out of control, a telltale sign that the vision-based state estimation is being thrown off. The roboticists seem frustrated. The human pilots seem cautiously optimistic.

The winner of the competition will fly the three fastest consecutive laps without crashing. The humans and the robots pursue that goal in essentially the same way, by adjusting the parameters of their flight to find the point at which they’re barely in control. Quadrotors tumble into gates, walls, floors, and ceilings, as the racers push their limits. This is a normal part of drone racing, and there are dozens of replacement drones and staff to fix them when they break.
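
The scoring rule, the fastest three consecutive laps without a crash, amounts to a sliding-window minimum. A minimal sketch follows, assuming lap times are listed in order and a crashed lap is recorded as `None`; the sample lap times are made up for illustration.

```python
def best_consecutive_laps(lap_times, n=3):
    """Fastest total over n consecutive clean laps, or None if no such run exists.

    lap_times is an ordered list of seconds per lap; None marks a crashed lap.
    """
    best = None
    for start in range(len(lap_times) - n + 1):
        window = lap_times[start:start + n]
        if any(t is None for t in window):
            continue                      # a crash breaks the streak
        total = sum(window)
        if best is None or total < best:
            best = total
    return best


# Hypothetical session: two clean laps, a crash, then three clean laps.
laps = [6.5, 6.1, None, 5.9, 6.0, 5.8]
result = best_consecutive_laps(laps)
print(result)
```

A racer with quicker individual laps but frequent crashes can therefore end up with no valid score at all, which is why consistency matters as much as raw speed under this rule.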

Professional drone pilot Thomas Bitmatta [left] examines flight paths recorded by the external tracking system. The human pilots felt they could fly better by studying the robots. Evan Ackerman

There will be several different metrics by which to decide whether the humans or the robots are faster. The external localization system used to actively control the autonomous drone last night is being used today for passive tracking, recording times for each segment of the course, each lap, and each three-lap multidrone race.

As the human pilots get comfortable with the course, their lap times decrease. Ten seconds per lap. Then 8 seconds. Then 6.5 seconds. Hidden behind their FPV headsets, the pilots are concentrating intensely as their shrieking quadrotors whirl through the gates. Swift, meanwhile, is much more consistent, typically clocking lap times below 6 seconds but frequently unable to complete three consecutive laps without crashing. Seeing Swift’s lap times, the human pilots push themselves, and their lap times decrease further. It’s going to be very close.

Zurich Drone Racing: AI vs Human https://rpg.ifi.uzh.ch/

The head-to-head races start, with Swift and a human pilot launching side-by-side at the sound of the starting horn. The human is immediately at a disadvantage, because a person’s reaction time is slow compared to that of a robot: Swift can launch in less than 100 milliseconds, while a human takes about 220 ms to hear a noise and react to it.

UZH’s Elia Kaufmann prepares an autonomous vision-based drone for a race. Since landing gear would only slow racing drones down, they take off from stands, which allows them to launch directly toward the first gate. Evan Ackerman

On the course, the human pilots can almost keep up with Swift: The robot’s best three-lap time is 17.465 seconds, while Bitmatta’s is 18.746 seconds and Vanover manages 17.956 seconds. But in nine head-to-head races with Swift, Vanover wins four, and in seven races, Bitmatta wins three. That’s because Swift doesn’t finish the majority of the time, colliding either with a gate or with its opponent. The human pilots can recover from collisions, even relaunching from the ground if necessary. Swift doesn’t have those skills. The robot is faster, but it’s also less robust.

Zurich Drone Racing: Onboard View https://rpg.ifi.uzh.ch/

Getting Even Faster

Thomas Bitmatta, two-time MultiGP International Open World Cup champion, pilots his drone through the course in FPV (first-person view). Regina Sablotny

In drone racing, crashing is part of the process. Both Swift and the human pilots crashed dozens of drones, which were constantly being repaired. Regina Sablotny

“The absolute performance of the robot—when it’s working, it’s brilliant,” says Bitmatta, when I speak to him at the end of race day. “It’s a little further ahead of us than I thought it would be. It’s still achievable for humans to match it, but the good thing for us at the moment is that it doesn’t look like it’s very adaptable.”

UZH’s Kaufmann doesn’t disagree. “Before the race, we had assumed that consistency was going to be our strength. It turned out not to be.” Making the drone more robust so that it can adapt to different lighting conditions, Kaufmann adds, is mostly a matter of collecting more data. “We can address this by retraining the perception system, and I’m sure we can substantially improve.”

Kaufmann believes that under controlled conditions, the potential performance of the autonomous vision-based drones is already well beyond what the human pilots are capable of. Even if this wasn’t conclusively proved through the competition, bringing the human pilots to Zurich and collecting data about how they fly made Kaufmann even more confident in what Swift can do. “We had overestimated the human pilots,” he says. “We were measuring their performance as they were training, and we slowed down a bit to increase our success rate, because we had seen that we could fly slower and still win. Our fastest strategies accelerate the quadrotor at 4.5 gs, but we saw that if we accelerate at only 3.8 gs, we can still achieve a safe win.”

Bitmatta feels that the humans have a lot more potential, too. “The kind of flying we were doing last year was nothing compared with what we’re doing now. Our rate of progress is really fast. And I think there’s a lot that we as humans can learn from how these robots fly.”

Useful Flying Robots

As far as Scaramuzza is aware, the event in Zurich, which was held last summer, was the first time that a fully autonomous mobile robot achieved world-champion performance in a real-world competitive sport. But, he points out, “this is still a research experiment. It’s not a product. We are very far from making something that can work in any environment and any condition.”

Besides making the drones more adaptable to different lighting conditions, the roboticists are teaching Swift to generalize from a known course to a new one, as humans do, and to safely fly around other drones. All of these skills are transferable and will eventually lead to practical applications. “Drone racing is pushing an autonomous system to its absolute limits,” roboticist Christian Pfeiffer says. “It’s not the ultimate goal—it’s a stepping-stone toward building better and more capable autonomous robots.” When one of those robots flies through your window and drops off a package on your coffee table before zipping right out again, these researchers will have earned your thanks.

Scaramuzza is confident that his drones will one day be the champions of the air—not just inside a carefully controlled hangar in Zurich but wherever they can be useful to humanity. “I think ultimately, a machine will be better than any human pilot, especially when consistency and precision are important,” he says. “I don’t think this is controversial. The question is, when? I don’t think it will happen in the next few decades. At the moment, humans are much better with bad data. But this is just a perception problem, and computer vision is making giant steps forward. Eventually, robotics won’t just catch up with humans, it will outperform them.”

Meanwhile, the human pilots are taking this in stride. “Seeing people use racing as a way of learning—I appreciate that,” Bitmatta says. “Part of me is a racer who doesn’t want anything to be faster than I am. And part of me is really excited for where this technology can lead. The possibilities are endless, and this is the start of something that could change the whole world.”

This article appears in the September 2023 print issue as “Superhuman Speed: AI Drones for the Win.”

Reference: https://ift.tt/GUkFIQL
