Rapid and pivotal advances in technology have a way of unsettling people, because they can reverberate mercilessly, sometimes, through business, employment, and cultural spheres. And so it is with the current shock and awe over large language models, such as GPT-4 from OpenAI.

It’s a textbook example of the mixture of amazement and, especially, anxiety that often accompanies a tech triumph. And we’ve been here many times, says Rodney Brooks. Best known as a robotics researcher, academic, and entrepreneur, Brooks is also an authority on AI: he directed the Computer Science and Artificial Intelligence Laboratory at MIT until 2007, and held faculty positions at Carnegie Mellon and Stanford before that. Brooks, who is now working on his third robotics startup, Robust.AI, has written hundreds of articles and half a dozen books and was featured in the motion picture Fast, Cheap & Out of Control. He is a rare technical leader who has had a stellar career in business and in academia and has still found time to engage with the popular culture through books, popular articles, TED Talks, and other venues.

“It gives an answer with complete confidence, and I sort of believe it. And half the time, it’s completely wrong.”

—Rodney Brooks, Robust.AI

IEEE Spectrum caught up with Brooks at the recent Vision, Innovation, and Challenges Summit, where he was being honored with the 2023 IEEE Founders Medal. He spoke about this moment in AI, which he doesn’t regard with as much apprehension as some of his peers, and about his latest startup, which is working on robots for medium-sized warehouses.

Rodney Brooks on…

- Will GPT-4 and other large language models lead to an artificial general intelligence in the foreseeable future?

- Will companies marketing large language models ever justify the enormous valuations some of these companies are now enjoying?

- When are we going to have full (level-5) self-driving cars?

- What are the most attractive opportunities now in warehouse robotics?

You wrote a famous article in 2017, “The Seven Deadly Sins of AI Prediction.” You said then that you wanted an artificial general intelligence to exist. In fact, you said it had always been your personal motivation for working in robotics and AI. But you also said that AGI research wasn’t doing very well at that time at solving the basic problems that had remained intractable for 50 years. My impression now is that you do not think the emergence of GPT-4 and other large language models means that an AGI will be possible within a decade or so.

Rodney Brooks: You’re exactly right. And by the way, GPT-3.5 guessed right—I asked it about me, and it said I was a skeptic about it. But that doesn’t make it an AGI.

The large language models are a little surprising. I’ll give you that. And I think what they say, interestingly, is how much of our language is very much rote, R-O-T-E, rather than generated directly, because it can be collapsed down to this set of parameters. But in that Seven Deadly Sins article, I said that one of the deadly sins was how we humans mistake performance for competence.

If I can just expand on that a little. When we see a person with some level of performance at some intellectual thing, like describing what’s in a picture, for instance, from that performance, we can generalize about their competence in the area they’re talking about. And we’re really good at that. Evolutionarily, it’s something that we ought to be able to do. We see a person do something, and we know what else they can do, and we can make a judgment quickly. But our models for generalizing from a performance to a competence don’t apply to AI systems.

The example I used at the time was, I think it was a Google program labeling an image of people playing Frisbee in the park. And if a person says, “Oh, that’s a person playing Frisbee in the park,” you would assume you could ask them a question, like, “Can you eat a Frisbee?” And they would know, of course not; it’s made of plastic. You’d just expect they’d have that competence. That they would know the answer to the question, “Can you play Frisbee in a snowstorm?” Or, “How far can a person throw a Frisbee? Can they throw it 10 miles? Can they only throw it 10 centimeters?” You’d expect all that competence from that one piece of performance: a person saying, “That’s a picture of people playing Frisbee in the park.”

“What the large language models are good at is saying what an answer should sound like, which is different from what an answer should be.”

—Rodney Brooks, Robust.AI

We don’t get that same level of competence from the performance of a large language model. When you poke it, you find that it doesn’t have the logical inference that it may have seemed to have in its first answer.

I’ve been using large language models for the last few weeks to help me with the really arcane coding that I do, and they’re much better than a search engine. And no doubt, that’s because it’s 4000 parameters or tokens. Or 60,000 tokens. So it’s a lot better than just a 10-word Google search. More context. So when I’m doing something very arcane, it gives me stuff.

But what I keep having to do, and I keep making this mistake—it answers with such confidence any question I ask. It gives an answer with complete confidence, and I sort of believe it. And half the time, it’s completely wrong. And I spend two or three hours using that hint, and then I say, “That didn’t work,” and it just does this other thing. Now, that’s not the same as intelligence. It’s not the same as interacting. It’s looking it up.

It sounds like you don’t think GPT-5 or GPT-6 is going to make a lot of progress on these issues.

Brooks: No, because it doesn’t have any underlying model of the world. It doesn’t have any connection to the world. It is correlation between language.

By the way, I recommend a long blog post by Stephen Wolfram. He’s also turned it into a book.

I’ve read it. It’s superb.

Brooks: It gives a really good technical understanding. What the large language models are good at is saying what an answer should sound like, which is different from what an answer should be.

Not long after ChatGPT and GPT-3.5 went viral last January, OpenAI was reportedly considering a tender offer that valued the company at almost $30 billion. Indeed, Microsoft invested an amount that has been reported as $10 billion. Do you think we’re ever going to see anything come out of this application that will justify these kinds of numbers?

Brooks: Probably not. My understanding is that Microsoft’s initial investment was in time on the cloud computing rather than hard, cold cash. OpenAI certainly needed [cloud computing time] to build these models because they’re enormously expensive in terms of the computing needed. I think what we’re going to see—and I’ve seen a bunch of papers recently about boxing in large language models—is much smoother language interfaces, input and output. But you have to box things in carefully so that the craziness doesn’t come out, and the making stuff up doesn’t come out.

“I think they’re going to be better than the Watson Jeopardy! program, which IBM said, ‘It’s going to solve medicine.’ Didn’t at all. It was a total flop. I think it’s going to be better than that.”

—Rodney Brooks, Robust.AI

So you’ve got to box things in because it’s not a database. It just makes up stuff that sounds good. But if you box it in, you can get much better language than we’ve had before.

So when the smoke clears, do you think we’ll have major applications? I mean, putting aside the question of whether they justify the investments or the valuations, is it going to still make a mark?

Brooks: I think it’s going to be another thing that’s useful. It’s going to be better language input and output. Because of the large numbers of tokens that get buffered up, you get much better context. But you have to box it so much… I am starting to see papers, how to put this other stuff on top of the language model. And sometimes it’s traditional AI methods, which everyone had sort of forgotten about, but now they’re coming back as a way of boxing it in.

I wrote a list of about 30 or 40 events like this over the last 50 years where it was going to be the next big thing. And many of them have turned out to be utter duds. They’re useful, like the chess-playing programs in the ’90s. That was supposed to be the end of humans playing chess. No, it wasn’t the end of humans playing chess. Chess is a different game now and that’s interesting.

But just to articulate where I think the large language models come in: I think they’re going to be better than the Watson Jeopardy! program, which IBM said, “It’s going to solve medicine.” Didn’t at all. It was a total flop. I think it’s going to be better than that. But not AGI.

“A very famous senior person said, ‘Radiologists will be out of business before long.’ And people stopped enrolling in radiology specialties, and now there’s a shortage of them.”

—Rodney Brooks, Robust.AI

So what about these predictions that entire classes of employment will go away, paralegals, and so on? Is that a legitimate concern?

Brooks: You certainly hear these things. I was reviewing a government report a few weeks ago, and it said, “Lawyers are going to disappear in 10 years.” So I tracked it down and it was one barrister in England, who knew nothing about AI. He said, “Surely, if it’s this good, it’s going to get so much better that we’ll be out of jobs in 10 years.” There’s a lot of disaster hype. Someone suggests something and it gets amplified.

We saw that with radiologists. A very famous senior person said, “Radiologists will be out of business before long.” And people stopped enrolling in radiology specialties and now there’s a shortage of them. Same with truck driving. There are so many ads from all these companies recruiting truck drivers because there aren’t enough truck drivers, because three or four years ago, people were saying, “Truck driving is going to go away.”

In fact, six or seven years ago, there were predictions that we would have fully self-driving cars by now.

Brooks: Lots of predictions. CEOs of major auto companies were all saying by 2020 or 2021 or 2022, roughly.

Full self-driving, or level 5, still seems really far away. Or am I missing something?

Brooks: No. It is far away. I think the level 2 and level 3 stuff in cars is amazingly good now. If you get a brand-new car and pay good money for it, it’s pretty amazingly good. The level 5, or even level 4, not so much. I live in the city of San Francisco, and for almost a year now, I’ve been able to take rides after 10:30 PM and before 5:00 AM, if it’s not a foggy day. I’ve been able to take rides in a Cruise vehicle with no driver. Just in the last few weeks, Cruise and Waymo got an agreement with the city, and now every day, as I’m driving during the day, I see cars with no driver in them.

GM supposedly lost $561 million on Cruise in just the first three months of this year.

Brooks: That’s how much it cost them to run that effort. Yeah. It’s a long way from break-even. A long, long way from break-even.

So I mean, I guess the question is, can even a company like GM get from here to there, where it’s throwing off huge profits?

Brooks: I wonder about that. We’ve seen a lot of the efforts shut down. It sort of didn’t make sense that there were so many different companies all trying to do it. Maybe now that we’re merged down to one or two efforts, we’ll gradually get there. But here’s another case where the hype, I think, has slowed us down. In the ’90s, there was a lot of research, especially at Berkeley, about what sensors you could embed in freeways which would help cars drive without a driver paying attention. So by putting in sensors, changing the infrastructure, and changing the cars so they used that new infrastructure, you would get attentionless driving.

“One of the standard processors has four teraflops, four million million floating point operations a second, on a piece of silicon that costs 5 bucks. It’s just mind-blowing, the amount of computation.”

—Rodney Brooks, Robust.AI

But then the hype came: “Oh no, we don’t even need that. It’s just going to be a few years and the cars will drive themselves. You don’t need to change infrastructure.” So we stopped changing infrastructure. And I think that slowed down the whole effort on autonomous vehicles for commuting by at least 10, maybe 20 years. There are a few companies starting to do it again now.

It takes a long time to make these things real.

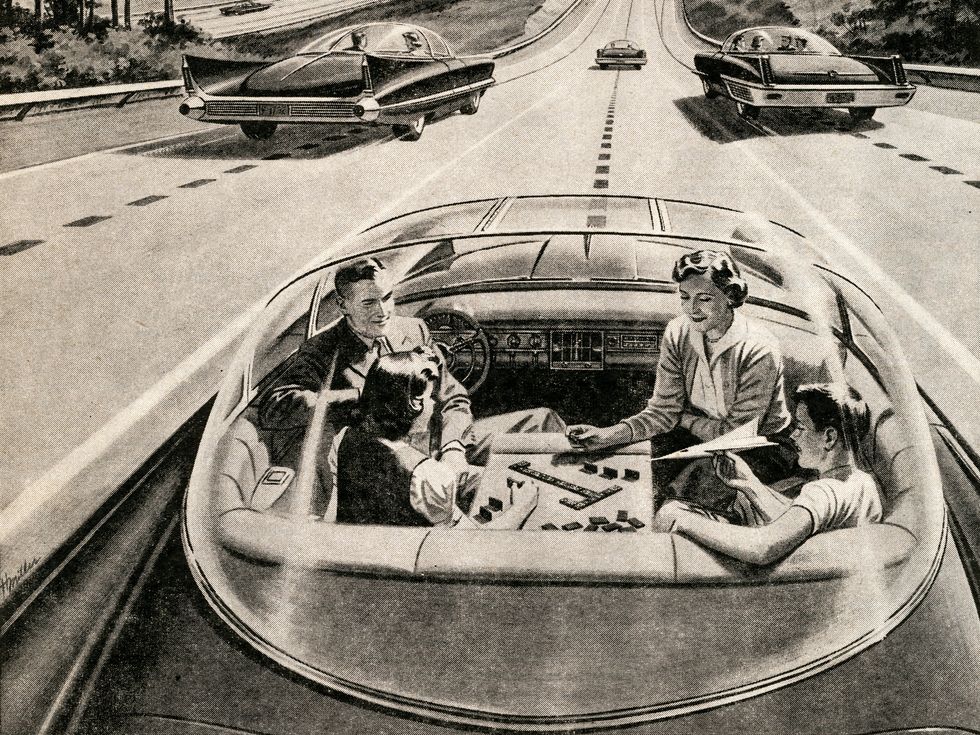

I don’t really enjoy driving, so when I see these pictures from popular magazines in the 1950s of people sitting in bubble-dome cars, facing each other, four people enjoying themselves playing cards on the highway, count me in.

Brooks: Absolutely. And as a species, humanity, we have changed up our mobility infrastructure multiple times. In the early 1800s, it was steam trains. We had to do enormous changes to our infrastructure. We had to put flat rails right across countries. When we started adopting automobiles around the turn from the 19th to the 20th century, we changed the roads. We changed the laws. People could no longer walk in the middle of the road like they used to.

Hulton Archive/Getty Images


We changed the infrastructure. When you go from trains that are driven by a person to self-driving trains, such as we see in airports and a few other places, there’s a whole change in infrastructure so that you can’t possibly have a person walking on the tracks. We’ve tried to make this transition [to self-driving cars] without changing infrastructure. You always need to change infrastructure if you’re going to do a major change.

You recently wrote that there will be no viable robotics applications that will harness the serious power of GPTs in any meaningful way. But given that, is there some other avenue of AI development now that will prove more beneficial for robotics, or more transformative? Or alternatively, will AI and robotics kind of diverge for a while, while enormous resources are put on large language models?

Brooks: Well, let me give a very positive spin. There has been a transformation. It’s just taking a little longer to get there. Convolutional neural networks are able to label regions of an image. It’s not perceiving in the same way a person perceives, but we can label what’s there. Along with that, the end of Moore’s Law and Dennard scaling is allowing silicon designers to get outside of the idea of just a faster PC. And so now, we’re seeing very cheap pieces of very effective silicon that you put right with a camera. Instead of getting an image out, you now get labels out, labels of what’s there. And it’s pretty damn good. And it’s really cheap. So one of the standard processors has four teraflops, four million million floating point operations a second, on a piece of silicon that costs 5 bucks. It’s just mind-blowing, the amount of computation.

It’s narrow floating point, 16-bit floating point, being applied to this labeling. We’re not seeing that yet in many deployed robots, but a lot of people are using it, building, experimenting, and getting toward product. So there’s a case where AI, convolutional neural networks (which, by the way, applied to vision, are about 10 years old), is going to make a difference.

“Amazon really made life difficult for other suppliers by doing [robotics in the warehouse]. But 80 percent of warehouses in the US have zero automation; only 5 percent are heavily automated.”

—Rodney Brooks, Robust.AI

And here’s one of my other Seven Deadly Sins of AI Prediction. It was how fast people think new stuff is going to be deployed. It takes a while to deploy it, especially when hardware is involved, because that’s just lots of stuff that all has to be balanced out. It takes time. Like the self-driving cars.

So of the major categories of robotics—warehouse robots, collaborative robots, manufacturing robots, autonomous vehicles—which are the most exciting right now to you or which of these sub-disciplines has experienced the most rapid and interesting growth?

Brooks: Well, I’m personally working in warehouse robots for logistics.

And your last company did collaborative robots.

Brooks: Did collaborative robots in factories. That company was a beautiful artistic success, a financial failure, but--

This is Rethink Robotics.

Brooks: Rethink Robotics, but going on from that now, we were too early, and I made some dumb errors. I take responsibility for that. Some dumb errors in how we approached the market. But that whole thing is now—that’s going along. It’s going to take another 10 or 15 years.

Collaborative robots will.

Brooks: Collaborative robots, but that’s what people expect now. Robots don’t need to be in cages anymore. They can be out with humans. In warehouses, we’ve had more and more. You buy stuff at home, expect it to be delivered to your home. COVID accelerated that.

People expect it the same day now in some places.

Brooks: Well, Amazon really made life difficult for other suppliers by doing it. But 80 percent of warehouses in the US have zero automation; only 5 percent are heavily automated.

And those are probably the largest.

Brooks: Yeah, they’re the big ones. Amazon has enormous numbers of those, for instance. But there’s a large number of warehouses which don’t have automation.

So these are medium-sized warehouses?

Brooks: Yeah. 100,000 square feet, something of that sort, whereas the Amazon ones tend to be over a million square feet and you completely rebuild it [around the automation]. But these 80 percent are not going to get rebuilt. They have to adopt automation into an existing workflow and modify it over time. And there are a few companies that have been successful, and I think there’s a lot of room for other companies and other workflows.

“[Warehouse workers] are not subject to the whims of the automation. They get to take over. When the robot’s clearly doing something dumb, they can just grab it and move it, and it repairs.”

—Rodney Brooks, Robust.AI

So for your current company, Robust.AI, this is your target.

Brooks: That’s what we’re doing. Yeah.

So what is your vision? So you have a program—you have a software suite called Grace and you also have a hardware platform called Carter.

Brooks: Exactly. And let me say a few words about it. We start with the assumption that there are going to be people in the warehouses that we’re in. There’s going to be people for a long time. It’s not going to be lights-out, full automation, because those 80 percent of warehouses are not going to rebuild the whole thing and put millions of dollars of equipment in. They’ll be gradually putting stuff in. So we’re trying to make our robots human-centered, as we call it. They’re aware of people. They’re using convolutional neural networks to see that that’s a person, to see which way they’re facing, to see where their legs are, where their arms are. You can track that in real time, 30 frames a second, right at the camera. And knowing where people are, who they are. They are people, not obstacles, so we treat them with respect.

But then the magic of our robot is that it looks like a shopping cart. It’s got handlebars on it. If a person goes up and grabs it, it’s now a powered shopping cart or powered cart that they can move around. So they [the warehouse workers] are not subject to the whims of the automation. They get to take over. When the robot’s clearly doing something dumb, they can just grab it and move it, and it repairs.

You are unusual for a technologist because you think broadly and widely, and you’re not afraid to have an opinion on things going on in the technical conversation. I mean, we’re living in really interesting times in this weird post-pandemic world where lots of things seem to be at some sort of inflection. Are there any of these big projects now that fill you with hope and optimism? What are some big technological initiatives that give you hope or enthusiasm?

Brooks: Well, here’s one that I haven’t written about, but I’ve been aware of and following. Climate change makes farming more difficult, more uncertain. So there’s a lot of work on indoor farming, changing how we do farming from the way we’ve done it for the 10,000 years we, as a species, have been farming that we know about, to technology indoors, and combining it with genetic engineering of microbes, combining it with a lot of computation, machine learning, getting the control loops right. There’s some fantastic things at small scale right now, producing interesting, good food in ways that are so much cleaner, use so much less water, and give me hope that we will be able to have a viable food supply. Not just horrible gunk to eat, but actually stuff that we like, with a way smaller impact on our planet than farm animals have and the widespread use of fertilizer, polluting the water supplies. I think we can get to a good, clean system of providing food for billions of people. I’m really hopeful about that. I think there’s a lot of exciting things happening there. It’s going to take 10, 20, 30 years before it becomes commonplace, but already, in my local grocery store in San Francisco, I can buy lettuce that’s grown indoors. So we’re seeing leafy greens getting out to the mainstream already. There’s a whole lot more coming.

Reference: https://ift.tt/v39zGCW
