Interconnects—those sometimes nanometers-wide metal wires that link transistors into circuits on an IC—are in need of a major overhaul. And as chip fabs march toward the outer reaches of Moore’s Law, interconnects are also becoming the industry’s choke point.

“For some 20-25 years now, copper has been the metal of choice for interconnects. However we’re reaching a point where the scaling of copper is slowing down,” IBM’s Chris Penny told engineers last month at the IEEE International Electron Devices Meeting (IEDM). “And there is an opportunity for alternative conductors.”

Ruthenium is a leading candidate, but it’s not as simple as swapping one metal for another, according to research reported at IEDM 2022. The process used to form interconnects on a chip must be turned upside down. The new interconnects will need a different shape and a higher density. They will also need better insulation, lest signal-sapping capacitance take away all their advantage.

Even where the interconnects go is set to change, and soon. But as studies are starting to show, the gains from that shift come at a cost.

Ruthenium, top vias, and air gaps

Among the replacements for copper, ruthenium has gained a following. But research is showing that the old formulas used to build copper interconnects put ruthenium at a disadvantage. Copper interconnects are built using what’s called a damascene process. First, chip makers use lithography to carve the shape of the interconnect into the dielectric insulation above the transistors. Then they deposit a liner and a barrier material, which prevents copper atoms from drifting out into the rest of the chip to muck things up. Copper then fills the trench. In fact, it overfills it, so the excess must be polished away.

All that extra stuff, the liner and barrier, takes up space, as much as 40-50 percent of the interconnect volume, Penny told engineers at IEDM. So the conductive part of the interconnects is narrowing, especially in the ultrafine vertical connections between layers of interconnects, and that increases resistance. But IBM and Samsung researchers have found a way to build tightly spaced, low-resistance ruthenium interconnects that don’t need a liner or a seed. The process is called spacer assisted litho-etch litho-etch, or SALELE, and, as the name implies, it relies on a double helping of extreme-ultraviolet lithography. Instead of filling in trenches, it etches the ruthenium interconnects out of a layer of metal and then fills in the gaps with dielectric.
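A back-of-the-envelope sketch shows why that lost volume matters. It uses only the textbook relation R = ρL/A and assumes, as a simplification, that the liner and barrier carry no current and that their share of the volume equals their share of the cross-section. The function names and parameters are hypothetical, and the sketch says nothing about the difference in resistivity between copper and ruthenium.

```python
def wire_resistance(resistivity_ohm_m, length_m, width_m, height_m,
                    nonconducting_fraction=0.0):
    """Resistance of a rectangular wire, R = rho * L / A.

    nonconducting_fraction is the share of the cross-section taken up by
    liner and barrier, assumed here (a simplification) to carry no current.
    """
    if not 0.0 <= nonconducting_fraction < 1.0:
        raise ValueError("nonconducting_fraction must be in [0, 1)")
    conducting_area_m2 = width_m * height_m * (1.0 - nonconducting_fraction)
    return resistivity_ohm_m * length_m / conducting_area_m2


def resistance_penalty(nonconducting_fraction):
    """Factor by which resistance rises versus a wire with no liner or barrier.

    Resistivity and dimensions cancel in the ratio, so unit values are used.
    """
    return (wire_resistance(1.0, 1.0, 1.0, 1.0, nonconducting_fraction)
            / wire_resistance(1.0, 1.0, 1.0, 1.0))


if __name__ == "__main__":
    # The 40-50 percent range is the figure quoted in the article.
    for fraction in (0.4, 0.5):
        print(f"{fraction:.0%} liner and barrier -> "
              f"{resistance_penalty(fraction):.2f}x resistance")
```

Under these assumptions, giving up 40 percent of the cross-section raises resistance by about 1.67 times, and giving up 50 percent doubles it, whatever the metal.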

The researchers achieved the lowest resistance using tall, thin horizontal interconnects. However, that shape increases capacitance, trading away the benefit. Fortunately, because SALELE builds the vertical connections called vias on top of horizontal interconnects instead of beneath them, the spaces between slender ruthenium lines can easily be filled with air, which is the best insulator available. For these tall, narrow interconnects “the potential benefit of adding an air gap is huge… as much as a 30 percent line capacitance reduction,” said Penny.
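The trade-off between height and capacitance can be illustrated with a toy model. The sketch below treats two neighboring lines as a parallel-plate capacitor and ignores fringing fields and coupling to the layers above and below, so it is only a rough picture. All dimensions, the resistivity, and the relative permittivity are hypothetical placeholder values, not figures from the IBM and Samsung work. The 0.7 scale factor stands in for the 30 percent reduction Penny cited.

```python
EPSILON_0 = 8.854e-12  # vacuum permittivity, F/m


def line_resistance(resistivity_ohm_m, length_m, width_m, height_m):
    """R = rho * L / (w * h) for a rectangular line."""
    return resistivity_ohm_m * length_m / (width_m * height_m)


def sidewall_capacitance(relative_permittivity, length_m, height_m, spacing_m):
    """Parallel-plate estimate of the coupling to one neighboring line."""
    return EPSILON_0 * relative_permittivity * length_m * height_m / spacing_m


def rc_product(height_m, capacitance_scale=1.0, *,
               resistivity_ohm_m=1e-7,     # hypothetical value
               relative_permittivity=3.0,  # hypothetical dielectric
               length_m=1e-6, width_m=10e-9, spacing_m=10e-9):
    """RC product of one line; capacitance_scale models an air-gap reduction."""
    r = line_resistance(resistivity_ohm_m, length_m, width_m, height_m)
    c = capacitance_scale * sidewall_capacitance(
        relative_permittivity, length_m, height_m, spacing_m)
    return r * c


if __name__ == "__main__":
    short, tall = 20e-9, 40e-9
    print("RC, tall vs. short line:", rc_product(tall) / rc_product(short))
    print("RC, tall line with air gap vs. without:",
          rc_product(tall, capacitance_scale=0.7) / rc_product(tall))
```

In this simplified model, doubling the height halves the resistance but doubles the sidewall capacitance, so the RC product does not improve. Cutting line capacitance by 30 percent at a fixed resistance lowers the RC product by the same 30 percent.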

The SALELE process “provides a roadmap to 1-nanometer processes and beyond,” he said.

Buried rails, back-side power delivery, and hot 3D chips

As early as 2024, Intel plans to make a radical change to the location of interconnects that carry power to transistors on a chip. The scheme, called back-side power delivery, takes the network of power delivery interconnects and moves it beneath the silicon, so that it approaches the transistors from below. This has two main advantages: It allows electricity to flow through wider, less resistive interconnects, leading to less power loss. And it frees up room above the transistors for signal-carrying interconnects, meaning logic cells can be smaller. (Researchers from Arm and the Belgian nanotech research hub imec have explained the scheme in detail.)

At IEDM 2022, imec researchers came up with some formulas to make back-side power work better, finding ways to move the end points of the power delivery network, called buried power rails, closer to transistors without messing up those transistors’ electronic properties. But they also uncovered a somewhat troubling problem: Back-side power could lead to a buildup of heat when used in 3D stacked chips.

First the good news: When imec researchers explored how much horizontal distance you need between a buried power rail and a transistor, the answer was pretty much zero. It took some extra cycles of processing to ensure that the transistors were unaffected, but they showed that you can build the rail right beside the transistor channel region—though still tens of nanometers below it. And that could mean even smaller logic cells.

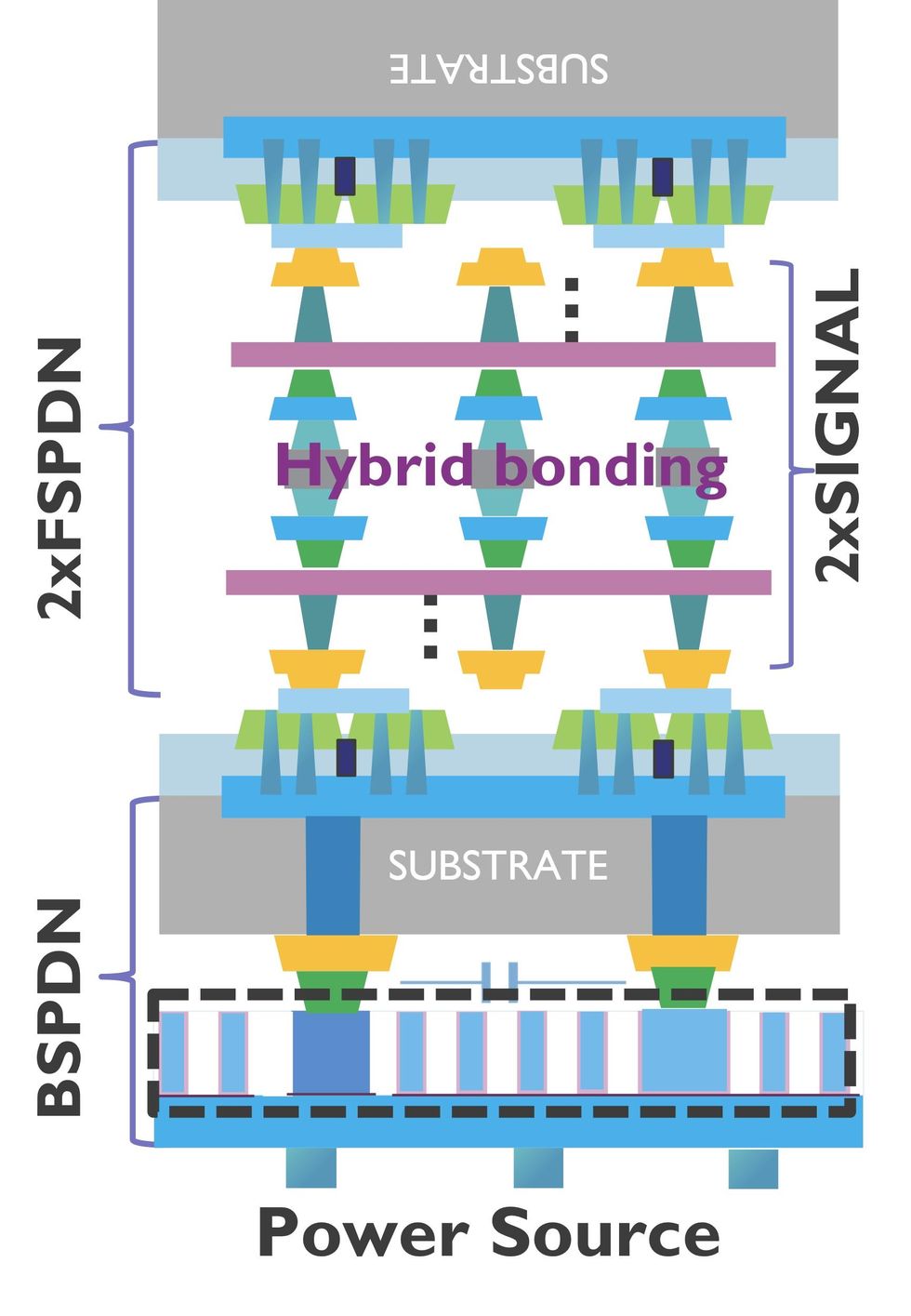

Now the bad news: In separate research, imec engineers simulated several versions of the same future CPU. Some had the kind of power delivery network in use today, called front-side power delivery, where all interconnects, both data and power, are built in layers above the silicon. Some had back-side power delivery networks. And one was a 3D stack of two CPUs, the bottom having back-side power and the top having front-side.

Back-side power’s advantages were confirmed by the simulations of the 2D CPUs. Compared with front-side delivery, it cut the loss from power delivery in half, for example. And transient voltage drops were less pronounced. What’s more, the CPU area was 8 percent smaller. However, the hottest part of the back-side chip was about 45 percent hotter than the hottest part of a front-side chip. The likely cause is that back-side power requires thinning the chip down to the point where it needs to be bonded to a separate piece of silicon just to remain stable. That bond acts as a barrier to the flow of heat.

Researchers tested a scenario where a CPU [bottom grey] with a back-side power delivery network is bonded to a second CPU having a front-side power delivery network [top grey].

The real problems arose with the 3D IC. The top CPU has to get its power from the bottom CPU, but the long journey to the top had consequences. While the bottom CPU still had better voltage-drop characteristics than a front-side chip, the top CPU performed much worse in that respect. And the 3D IC’s power network ate up more than twice the power that a single front-side chip’s network would consume. Worse still, heat could not escape the 3D stack very well, with the hottest part of the bottom die almost 2.5 times as hot as a single front-side CPU. The top CPU was cooler, but not by much.

The 3D IC simulation is admittedly somewhat unrealistic, imec’s Rongmei Chen told engineers at IEDM. Stacking two otherwise identical CPUs atop each other is an unlikely scenario. (It’s much more common to stack memory with a CPU.) “It’s not a very fair comparison,” he said. But it does point out some potential issues.

Reference: https://ift.tt/YL0wtrf
