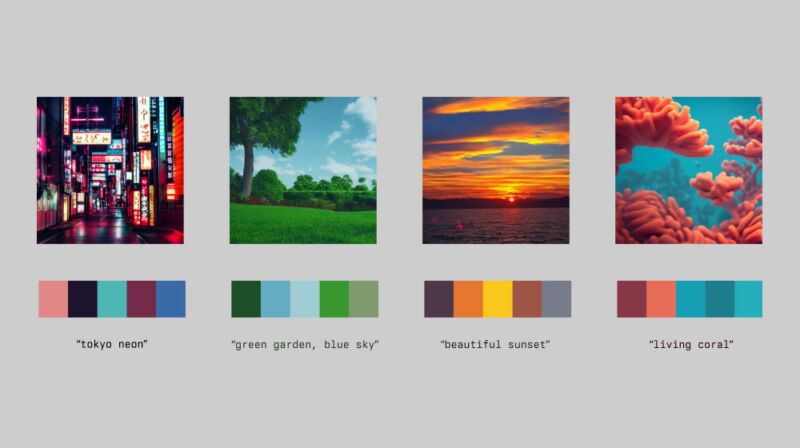

A series of four example color palettes extracted from short written prompts by Matt DesLauriers. (credit: Matt DesLauriers)

A London-based artist named Matt DesLauriers has developed a tool that generates color palettes from any text prompt. Typing in "beautiful sunset," for example, yields a series of colors that matches a typical sunset scene. You can also get more abstract, finding colors that match "a sad and rainy Tuesday."

To achieve the effect, DesLauriers uses Stable Diffusion, an open source image synthesis model, to generate an image that matches the text prompt. Next, a JavaScript GIF encoder named gifenc extracts the palette by analyzing the image and quantizing its colors down to a limited set.
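To illustrate what "quantizing the colors" means, here is a minimal sketch of one classic quantization technique, median cut. This is not gifenc's own quantizer and not DesLauriers' code. It assumes the generated image has already been decoded into a list of `(r, g, b)` tuples (for example with an image library), and the function name `median_cut` is our own. The sketch repeatedly splits the group of pixels with the widest color spread at its median, then averages each group into one palette entry.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def median_cut(pixels: List[RGB], n_colors: int) -> List[RGB]:
    """Reduce a list of RGB pixels to at most n_colors representative colors.

    Illustrative median-cut quantizer; gifenc uses its own algorithm.
    """
    if not pixels or n_colors < 1:
        return []

    # Start with every pixel in a single box.
    boxes: List[List[RGB]] = [list(pixels)]

    while len(boxes) < n_colors:
        # Find the box and channel (0=R, 1=G, 2=B) with the largest value range.
        best_i, best_ch, best_range = -1, 0, -1
        for i, box in enumerate(boxes):
            if len(box) < 2:
                continue  # a single pixel cannot be split
            for ch in range(3):
                values = [p[ch] for p in box]
                spread = max(values) - min(values)
                if spread > best_range:
                    best_i, best_ch, best_range = i, ch, spread

        # Stop early if every splittable box is a single uniform color.
        if best_i < 0 or best_range == 0:
            break

        # Sort the chosen box along its widest channel and split it at the median.
        box = sorted(boxes.pop(best_i), key=lambda p: p[best_ch])
        mid = len(box) // 2
        boxes.extend([box[:mid], box[mid:]])

    # Each palette entry is the average color of the pixels in one box.
    palette: List[RGB] = []
    for box in boxes:
        n = len(box)
        r, g, b = (round(sum(p[ch] for p in box) / n) for ch in range(3))
        palette.append((r, g, b))
    return palette
```

Median cut is chosen here only because it is short and easy to follow. Real encoders often use more elaborate methods that better preserve perceptually important colors.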

DesLauriers has posted his code on GitHub; it requires a local Stable Diffusion installation and Node.js. It's a bleeding-edge prototype at the moment that requires some technical skill to set up, but it's also a noteworthy example of the unexpected graphical innovations that can come from open source releases of powerful image synthesis models.

Stable Diffusion, which went open source on August 22, generates images from a neural network that has been trained on tens of millions of images pulled from the Internet. Its ability to draw from a wide range of visual influences translates well to extracting color palette information.
