Fifty years after the birth of the rechargeable lithium-ion battery, it’s easy to see its value. It’s used in billions of laptops, cellphones, power tools, and cars. Global sales top US $45 billion a year, on their way to more than $100 billion in the coming decade.

And yet this transformative invention took nearly two decades to make it out of the lab. Numerous companies in the United States, Europe, and Asia considered the technology but failed to recognize its potential.

The first iteration, developed by M. Stanley Whittingham at Exxon in 1972, didn’t get far. It was manufactured in small volumes by Exxon, appeared at an electric vehicle show in Chicago in 1977, and served briefly as a coin cell battery. But then Exxon dropped it.

Various scientists around the world took up the research effort, but for some 15 years, success was elusive. It wasn’t until the development landed at the right company at the right time that it finally started down a path to battery world domination.

Did Exxon invent the rechargeable lithium battery?

Akira Yoshino, John Goodenough, and M. Stanley Whittingham [from left] shared the 2019 Nobel Prize in Chemistry. At 97, Goodenough was the oldest recipient in the history of the Nobel awards. Jonas Ekstromer/AFP/Getty Images

In the early 1970s, Exxon scientists predicted that global oil production would peak in the year 2000 and then fall into a steady decline. Company researchers were encouraged to look for oil substitutes, pursuing any manner of energy that didn’t involve petroleum.

Whittingham, a young British chemist, joined the quest at Exxon Research and Engineering in New Jersey in the fall of 1972. By Christmas, he had developed a battery with a titanium-disulfide cathode and a liquid electrolyte that used lithium ions.

Whittingham’s battery was unlike anything that had preceded it. It worked by inserting ions into the atomic lattice of a host electrode material—a process called intercalation. The battery’s performance was also unprecedented: It was both rechargeable and very high in energy output. Up to that time, the best rechargeable battery had been nickel cadmium, which put out a maximum of 1.3 volts. In contrast, Whittingham’s new chemistry produced an astonishing 2.4 volts.

In the winter of 1973, corporate managers summoned Whittingham to the company’s New York City offices to appear before a subcommittee of the Exxon board. “I went in there and explained it—5 minutes, 10 at the most,” Whittingham told me in January 2020. “And within a week, they said, yes, they wanted to invest in this.”

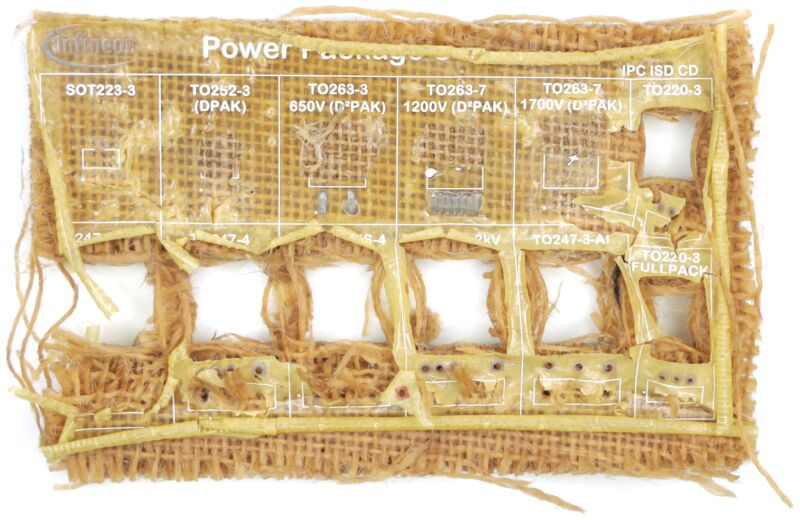

Whittingham’s battery, the first lithium intercalation battery, was developed at Exxon in 1972 using titanium disulfide for the cathode and metallic lithium for the anode. Johan Jarnestad/The Royal Swedish Academy of Sciences

It looked like the beginning of something big. Whittingham published a paper in Science; Exxon began manufacturing coin cell lithium batteries; and a Swiss watch manufacturer, Ebauches, used the cells in a solar-charging wristwatch.

But by the late 1970s, Exxon’s interest in oil alternatives had waned. Moreover, company executives thought Whittingham’s concept was unlikely to ever be broadly successful. They washed their hands of lithium titanium disulfide, licensing the technology to three battery companies—one in Asia, one in Europe, and one in the United States.

“I understood the rationale for doing it,” Whittingham said. “The market just wasn’t going to be big enough. Our invention was just too early.”

Oxford takes the handoff

In 1976, John Goodenough [left] joined the University of Oxford, where he headed development of the first lithium cobalt oxide cathode. The University of Texas at Austin

It was the first of many false starts for the rechargeable lithium battery. John B. Goodenough at the University of Oxford was the next scientist to pick up the baton. Goodenough was familiar with Whittingham’s work, in part because Whittingham had earned his Ph.D. at Oxford. But it was a 1978 paper by Whittingham, “Chemistry of Intercalation Compounds: Metal Guests in Chalcogenide Hosts,” that convinced Goodenough that the leading edge of battery research was lithium. [Goodenough passed away on 25 June 2023 at the age of 100.]

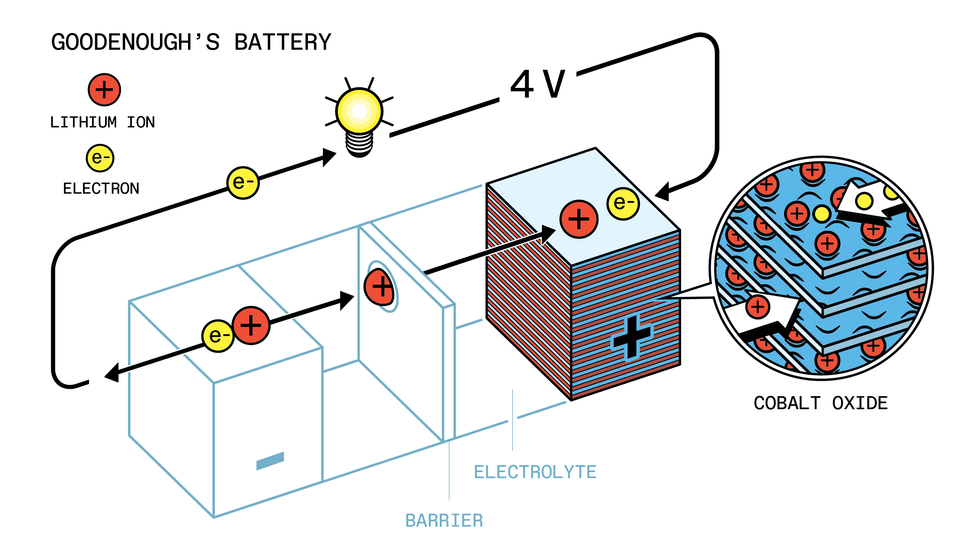

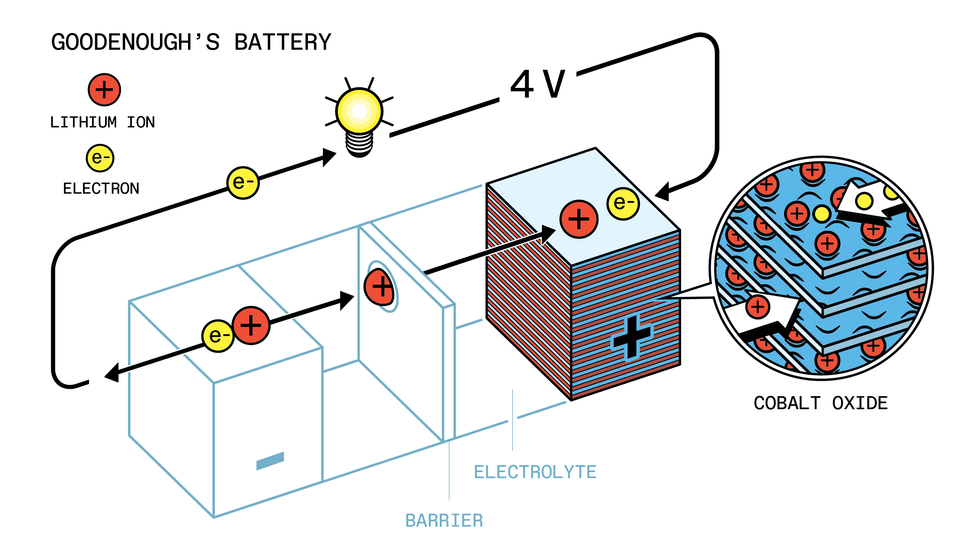

Goodenough and research fellow Koichi Mizushima began researching lithium intercalation batteries. By 1980, they had improved on Whittingham’s design, replacing titanium disulfide with lithium cobalt oxide. The new chemistry boosted the battery’s voltage by another two-thirds, to 4 volts.

Goodenough wrote to battery companies in the United States, United Kingdom, and the European mainland in hopes of finding a corporate partner, he recalled in his 2008 memoir, Witness to Grace. But he found no takers.

He also asked the University of Oxford to pay for a patent, but Oxford declined. Like many universities of the day, it did not concern itself with intellectual property, believing such matters to be confined to the commercial world.

Goodenough’s 1980 battery replaced Whittingham’s titanium disulfide in the cathode with lithium cobalt oxide. Johan Jarnestad/The Royal Swedish Academy of Sciences

Still, Goodenough had confidence in his battery chemistry. He visited the Atomic Energy Research Establishment (AERE), a government lab in Harwell, about 20 kilometers from Oxford. The lab agreed to bankroll the patent, but only if the 59-year-old scientist signed away his financial rights. Goodenough complied. The lab patented it in 1981; Goodenough never saw a penny of the original battery’s earnings.

For the AERE lab, this should have been the ultimate windfall. It had done none of the research, yet now owned a patent that would turn out to be astronomically valuable. But managers at the lab didn’t see that coming. They filed it away and forgot about it.

Asahi Chemical steps up to the plate

The rechargeable lithium battery’s next champion was Akira Yoshino, a 34-year-old chemist at Asahi Chemical in Japan. Yoshino had independently begun to investigate using a plastic anode—made from electroconductive polyacetylene—in a battery and was looking for a cathode to pair with it. While cleaning his desk on the last day of 1982, he found a 1980 technical paper coauthored by Goodenough, Yoshino recalled in his autobiography, Lithium-Ion Batteries Open the Door to the Future, Hidden Stories by the Inventor. The paper—which Yoshino had sent for but hadn’t gotten around to reading—described a lithium cobalt oxide cathode. Could it work with his plastic anode?

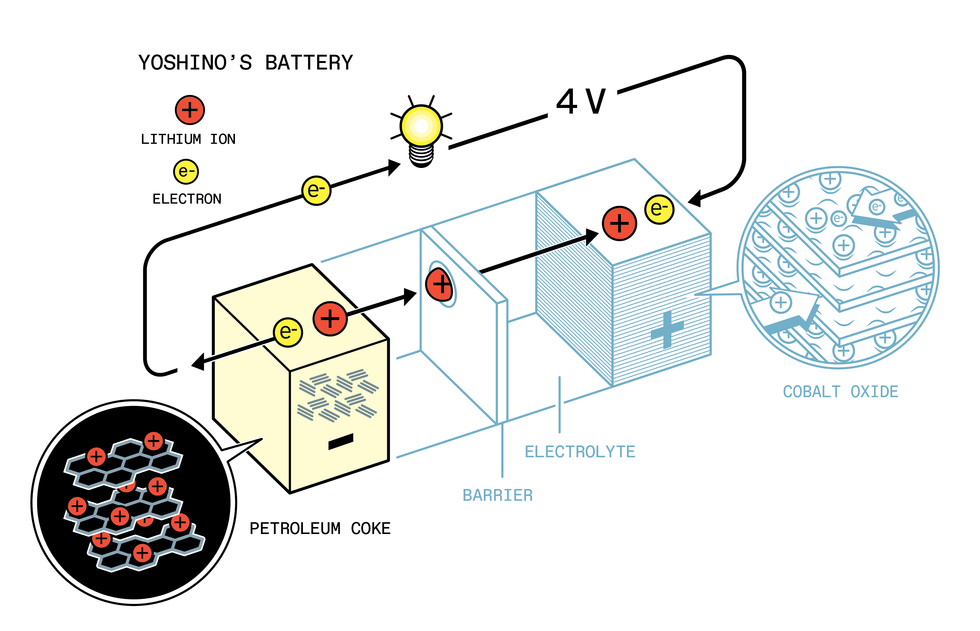

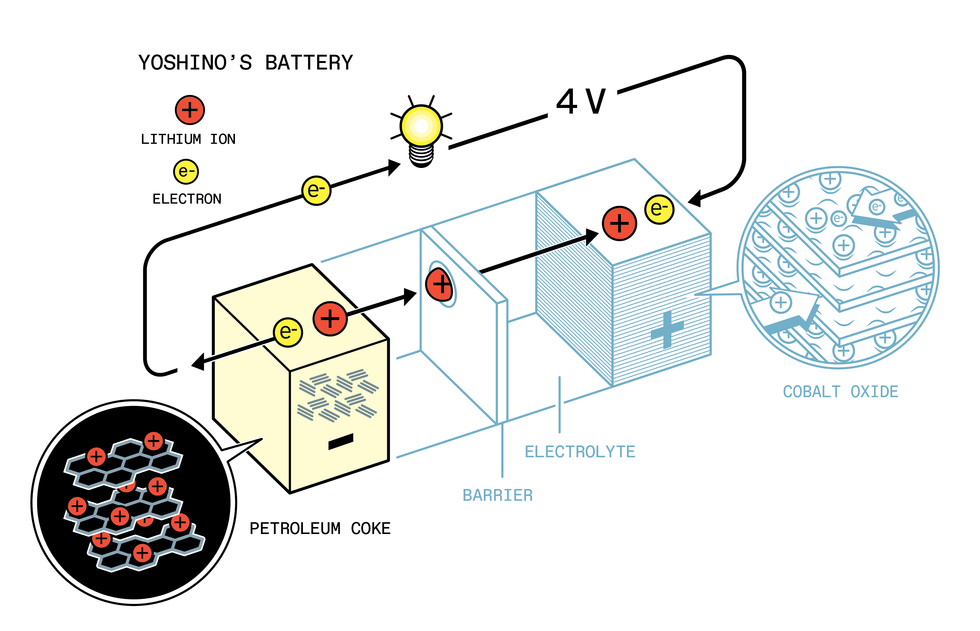

Yoshino, along with a small team of colleagues, paired Goodenough’s cathode with the plastic anode. They also tried pairing the cathode with a variety of other anode materials, mostly made from different types of carbons. Eventually, he and his colleagues settled on a carbon-based anode made from petroleum coke.

Yoshino’s battery, developed at Asahi Chemical in the late 1980s, combined Goodenough’s cathode with a petroleum coke anode. Johan Jarnestad/The Royal Swedish Academy of Sciences

This choice of petroleum coke turned out to be a major step forward. Whittingham and Goodenough had used anodes made from metallic lithium, which was volatile and even dangerous. By switching to carbon, Yoshino and his colleagues had created a battery that was far safer.

Still, there were problems. For one, Asahi Chemical was a chemical company, not a battery maker. No one at Asahi Chemical knew how to build production batteries at commercial scale, nor did the company own the coating or winding equipment needed to manufacture batteries. The researchers had simply built a crude lab prototype.

Enter Isao Kuribayashi, an Asahi Chemical research executive who had been part of the team that created the battery. In his book, A Nameless Battery with Untold Stories, Kuribayashi recounted how he and a colleague sought out consultants in the United States who could help with the battery’s manufacturing. One consultant recommended Battery Engineering, a tiny firm based in a converted truck garage in the Hyde Park area of Boston. The company was run by a small band of Ph.D. scientists who were experts in the construction of unusual batteries. They had built batteries for a host of uses, including fighter jets, missile silos, and downhole drilling rigs.

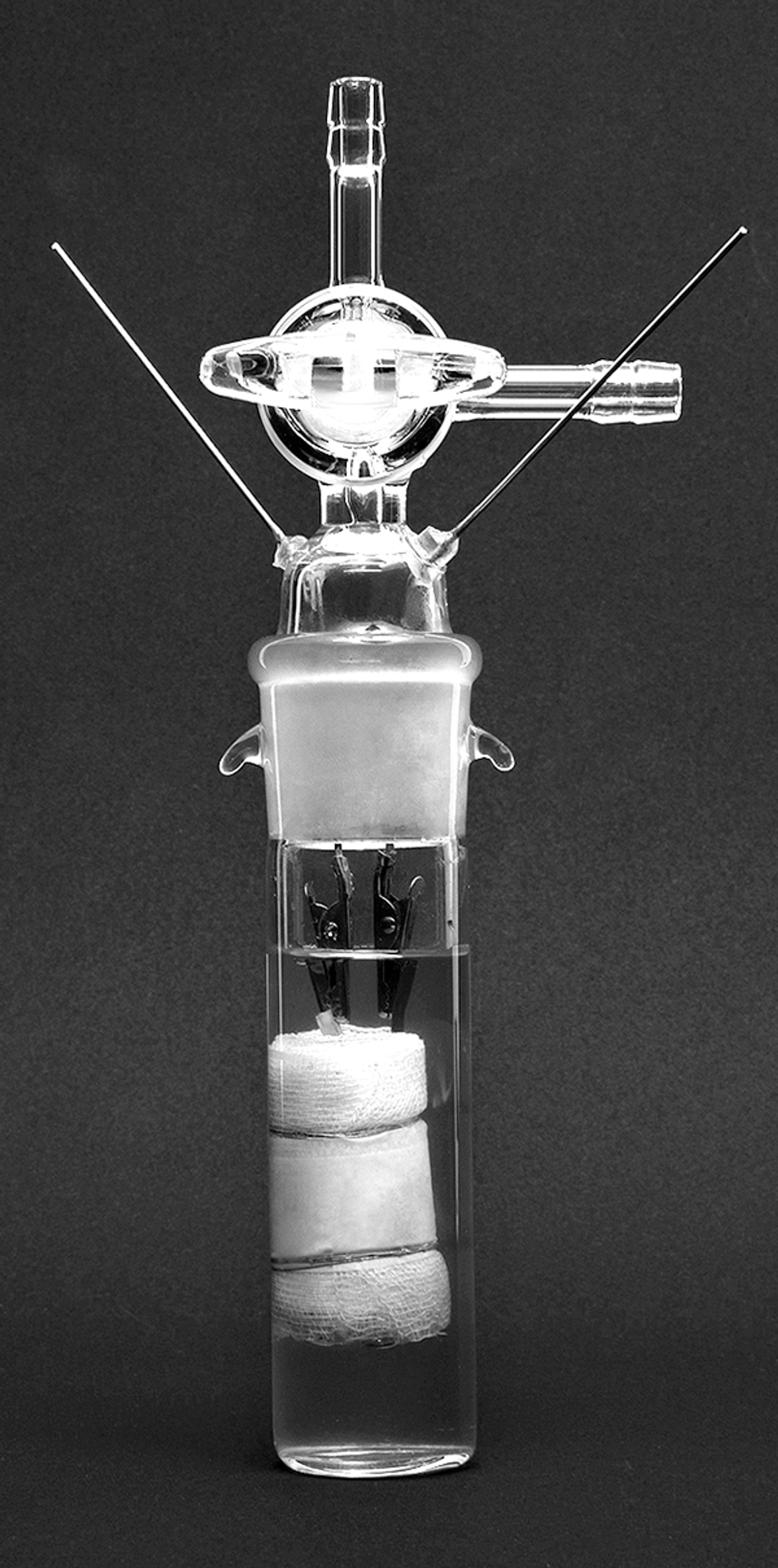

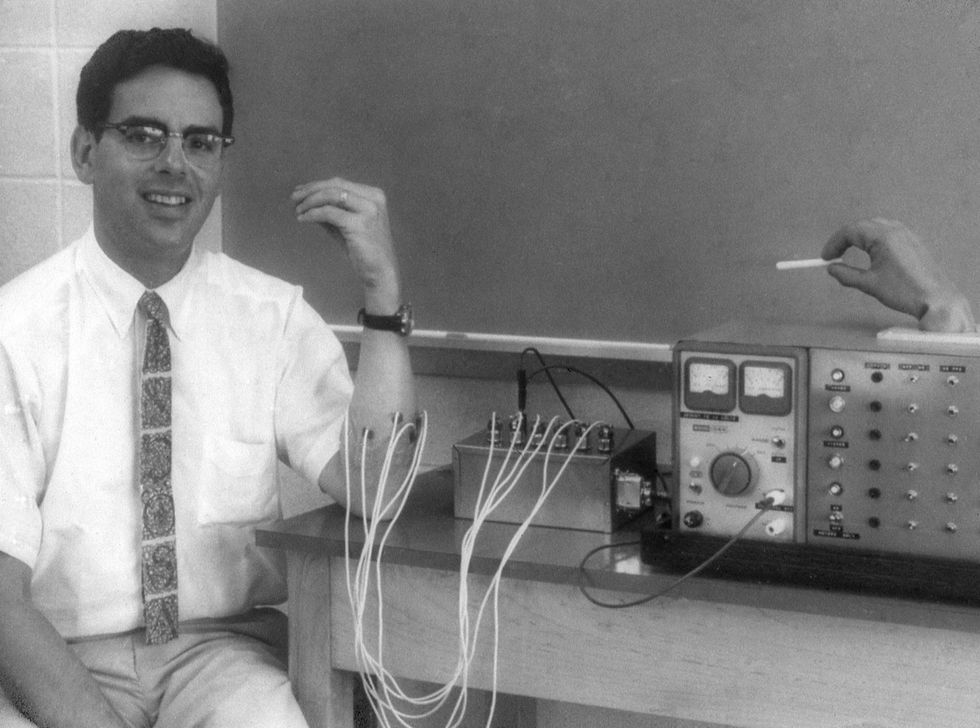

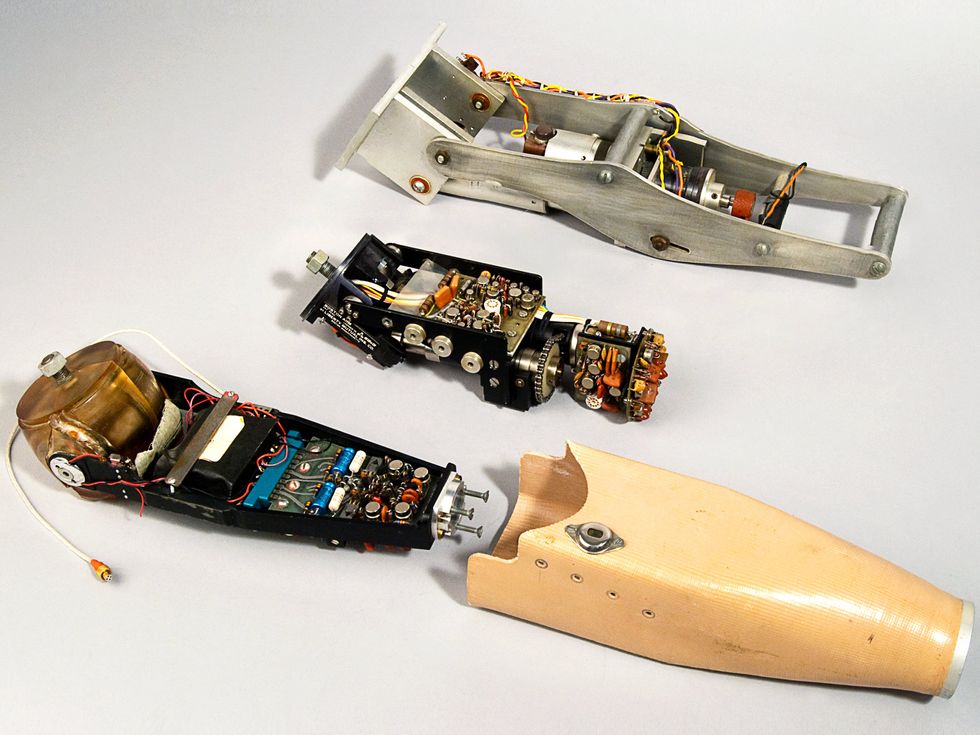

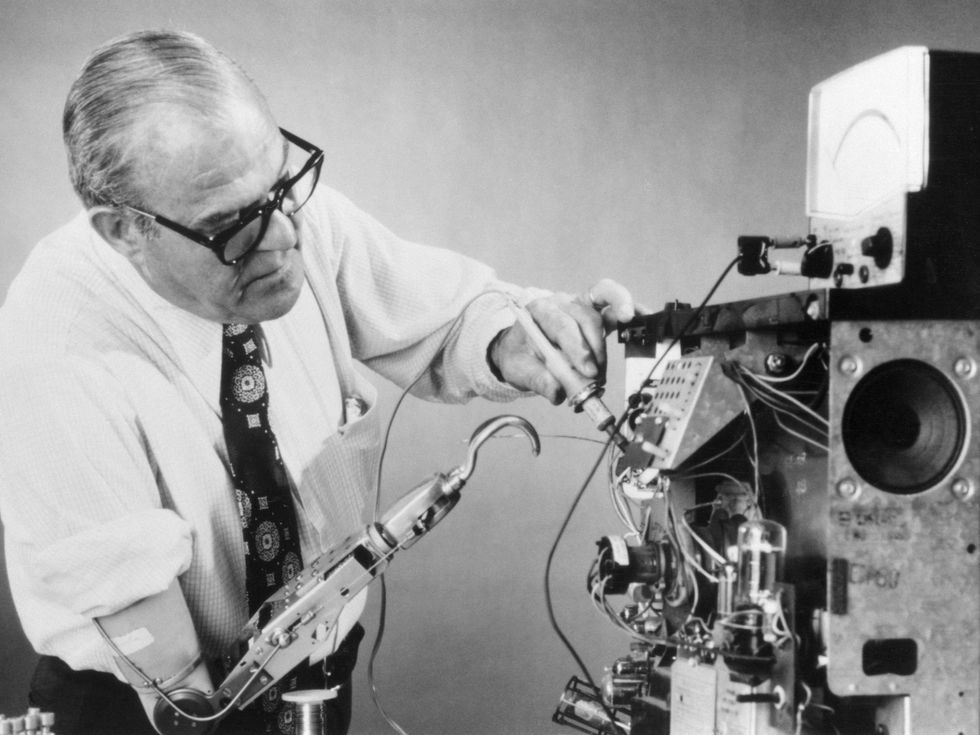

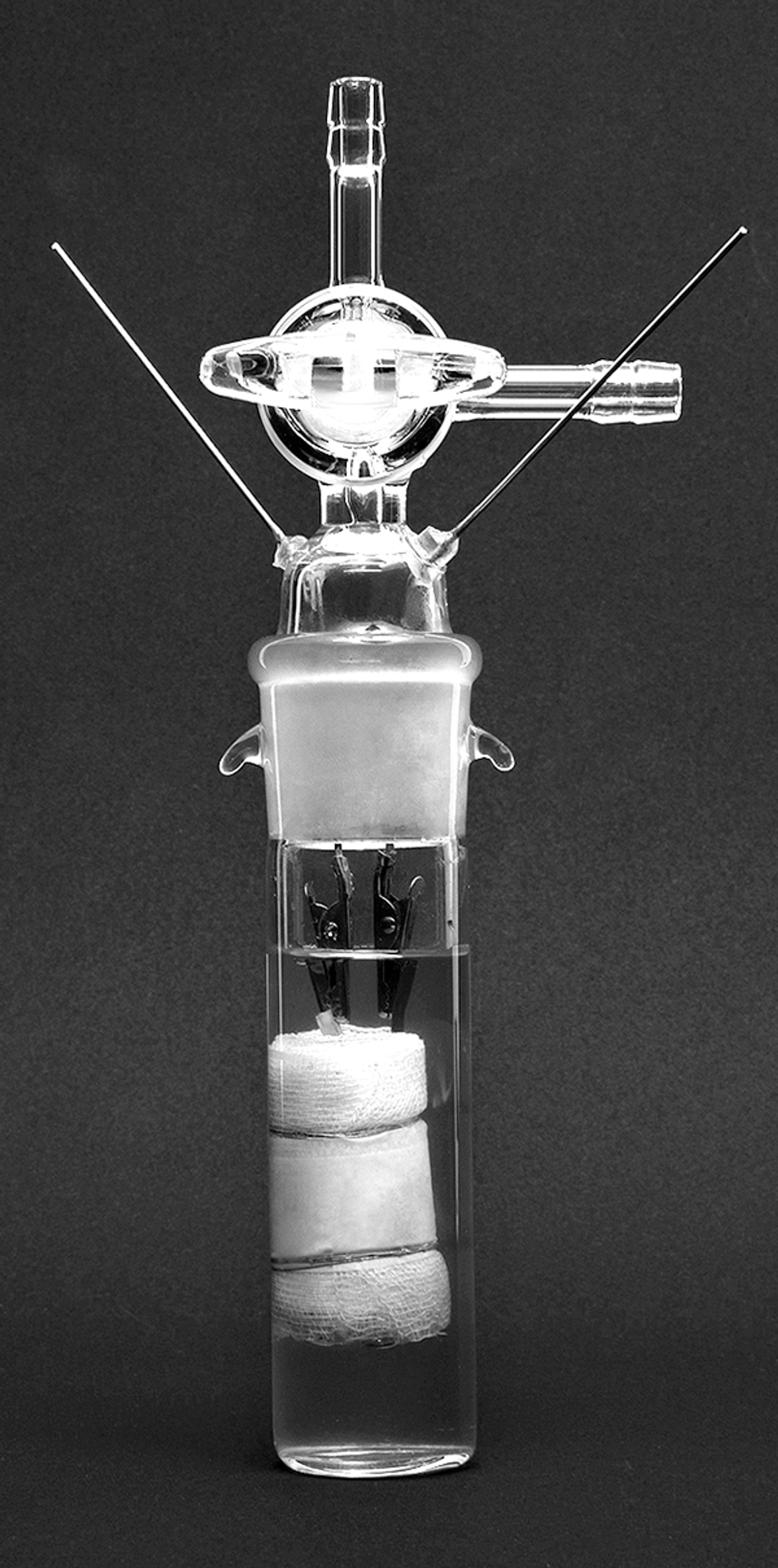

Nikola Marincic, working at Battery Engineering in Boston, transformed Asahi Chemical’s crude prototype [below] into preproduction cells. Lidija Ortloff

So Kuribayashi and his colleague flew to Boston in June of 1986, showing up at Battery Engineering unannounced with three jars of slurry—one containing the cathode, one the anode, and the third the electrolyte. They asked company cofounder Nikola Marincic to turn the slurries into cylindrical cells, like the kind someone might buy for a flashlight.

“They said, ‘If you want to build the batteries, then don’t ask any more questions,’” Marincic told me in a 2020 interview. “They didn’t tell me who sent them, and I didn’t want to ask.”

Kuribayashi and his colleague further stipulated that Marincic tell no one about their battery. Even Marincic’s employees didn’t know until 2020 that they had participated in the construction of the world’s first preproduction lithium-ion cells.

Marincic charged $30,000 ($83,000 in today’s dollars) to build a batch of the batteries. Two weeks later, Kuribayashi and his colleague departed for Japan with a box of 200 C-size cells.

Even with working batteries in hand, however, Kuribayashi still met resistance from Asahi Chemical’s directors, who continued to fear moving into an unknown business.

Sony gets pulled into the game

Kuribayashi wasn’t ready to give up. On 21 January 1987, he visited Sony’s camcorder division to make a presentation about Asahi Chemical’s new battery. He took one of the C cells and rolled it across the conference room table to his hosts.

Kuribayashi didn’t give many more details in his book, simply writing that by visiting Sony, he hoped to “confirm the battery technology.”

Sony, however, did more than “confirm” it. By this time, Sony was considering developing its own rechargeable lithium battery, according to its corporate history. When company executives saw Asahi’s cell, they recognized its enormous value. Because Sony was both a consumer electronics manufacturer and a battery manufacturer, its management team understood the battery from both a customer’s and a supplier’s perspective.

And the timing was perfect. Sony engineers were working on a new camcorder, later to be known as the Handycam, and that product dearly needed a smaller, lighter battery. To them, the battery that Kuribayashi presented seemed like a gift from the heavens.

John Goodenough and his coinventor, Koichi Mizushima, convinced the Atomic Energy Research Establishment to fund the cost of patenting their lithium cobalt oxide battery but had to sign away their financial rights to do so. U.S. PATENT AND TRADEMARK OFFICE

Several meetings followed. Some Sony scientists were allowed inside Asahi’s labs, and vice versa, according to Kuribayashi. Ultimately, Sony proposed a partnership. Asahi Chemical declined.

Here, the story of the lithium-ion battery’s journey to commercialization gets hazy. Sony researchers continued to work on developing rechargeable lithium batteries, using a chemistry that Sony’s corporate history would later claim was created in house. But Sony’s battery used the same essential chemistry as Asahi Chemical’s. The cathode was lithium cobalt oxide; the anode was petroleum coke; the liquid electrolyte contained lithium ions.

What is clear is that for the next two years, from 1987 to 1989, Sony engineers did the hard work of transforming a crude prototype into a product. Led by battery engineer Yoshio Nishi, Sony’s team worked with suppliers to develop binders, electrolytes, separators, and additives. They developed in-house processes for heat-treating the anode and for making cathode powder in large volumes. They deserve credit for creating a true commercial product.

Only one step remained. In 1989, one of Sony’s executives called the Atomic Energy Research Establishment in Harwell, England. The executive asked about one of the lab’s patents that had been gathering dust for eight years—Goodenough’s cathode. He said Sony was interested in licensing the technology.

Scientists and executives at the Harwell lab scratched their heads. They couldn’t imagine why anyone would be interested in the patent “Electrochemical Cell with New Fast Ion Conductors.”

“It was not clear what the market was going to be, or how big it would be,” Bill Macklin, an AERE scientist at the time, told me. A few of the older scientists even wondered aloud whether it was appropriate for an atomic lab in England to share secrets with a company in Japan, a former World War II adversary. Eventually, though, a deal was struck.

Sony takes it across the finish line

Sony introduced the battery in 1991, giving it the now-familiar moniker “lithium-ion.” It quickly began to make its way into camcorders, then cellphones.

By that time, 19 years had passed since Whittingham’s invention. Multiple entities had had the opportunity to take this technology all the way—and had dismissed it.

First, there was Exxon, whose executives couldn’t have dreamed that lithium-ion batteries would end up enabling electric vehicles to compete with oil in a big way. Some observers would later contend that, by abandoning the technology, Exxon had conspired to suppress a challenger to oil. But Exxon licensed the technology to three other companies, and none of those succeeded with it, either.

Then there was the University of Oxford, which had refused to pay for a patent.

Finally, there was Asahi Chemical, whose executives struggled with the decision of whether to enter the battery market. (Asahi finally got into the game in 1993, teaming up with Toshiba to make lithium-ion batteries.)

Sony and AERE, the entities that gained the most financially from the battery, both benefitted from luck. The Atomic Energy Research Establishment paid only legal fees for what turned out to be a valuable patent and later had to be reminded that it even owned the patent. AERE’s profits from its patent are unknown, but most observers agree that it reaped at least $50 million, and possibly more than $100 million, before the patent expired.

Sony, meanwhile, had received that fortuitous visit from Asahi Chemical’s Kuribayashi, which set the company on the path toward commercialization. Sony sold tens of millions of cells and then sublicensed the AERE patent to more than two dozen other Asian battery manufacturers, which made billions more. (In 2016, Sony sold its battery business to Murata Manufacturing for 17.5 billion yen, roughly $126 million today.)

None of the original investigators—Whittingham, Goodenough, and Yoshino—received a cut of these profits. All three, however, shared the 2019 Nobel Prize in Chemistry. Sony’s Yoshio Nishi, by then retired, wasn’t included in the Nobel, a decision he criticized at a press conference, according to the Mainichi Shimbun newspaper.

In retrospect, lithium-ion’s early history looks like a tale of two worlds. There was a scientific world and a business world, and they seldom overlapped. Chemists, physicists, and materials scientists worked quietly, sharing their triumphs in technical publications and on overhead projectors at conferences. The commercial world, meanwhile, did not look to university scientists for breakthroughs, and it failed to spot the potential of this new battery chemistry, even when the advances came from its own researchers.

Had it not been for Sony, the rechargeable lithium battery might have languished for many more years. Almost certainly, the company succeeded because its particular circumstances prepared it to understand and appreciate Kuribayashi’s prototype. Sony was already in the battery business, it needed a better battery for its new camcorder, and it had been toying with the development of its own rechargeable lithium battery. Sony engineers and managers knew exactly where this puzzle piece could go, recognizing what so many others had overlooked. As Louis Pasteur had famously stated more than a century earlier, “Chance favors the prepared mind.”

The story of the lithium-ion battery shows that Pasteur was right.

This article appears in the August 2023 print issue as “The Lithium-ion Battery’s Long and Winding Road.”

Reference: https://ift.tt/aBGTWLS

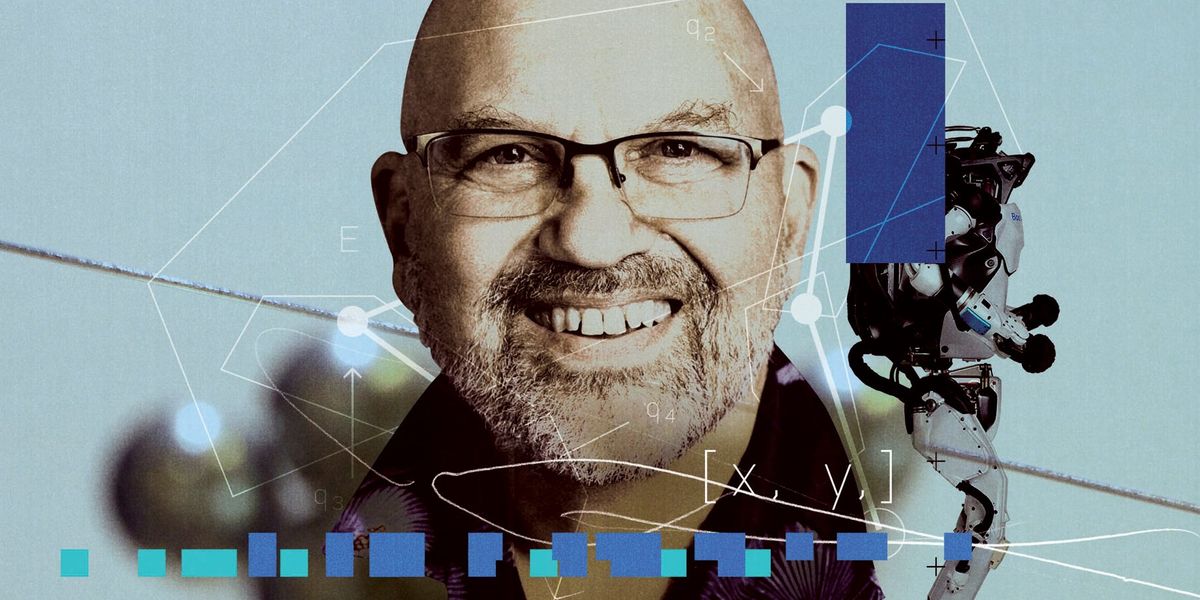

Right now, robots must be carefully trained to complete specific tasks. But Marc Raibert wants to give robots the ability to watch a human do a task, understand what\u2019s happening, and then do the task themselves, whether it\u2019s in a factory [top left and bottom] or in your home [top right and bottom]. Boston Dynamics AI Institute

Right now, robots must be carefully trained to complete specific tasks. But Marc Raibert wants to give robots the ability to watch a human do a task, understand what\u2019s happening, and then do the task themselves, whether it\u2019s in a factory [top left and bottom] or in your home [top right and bottom]. Boston Dynamics AI Institute

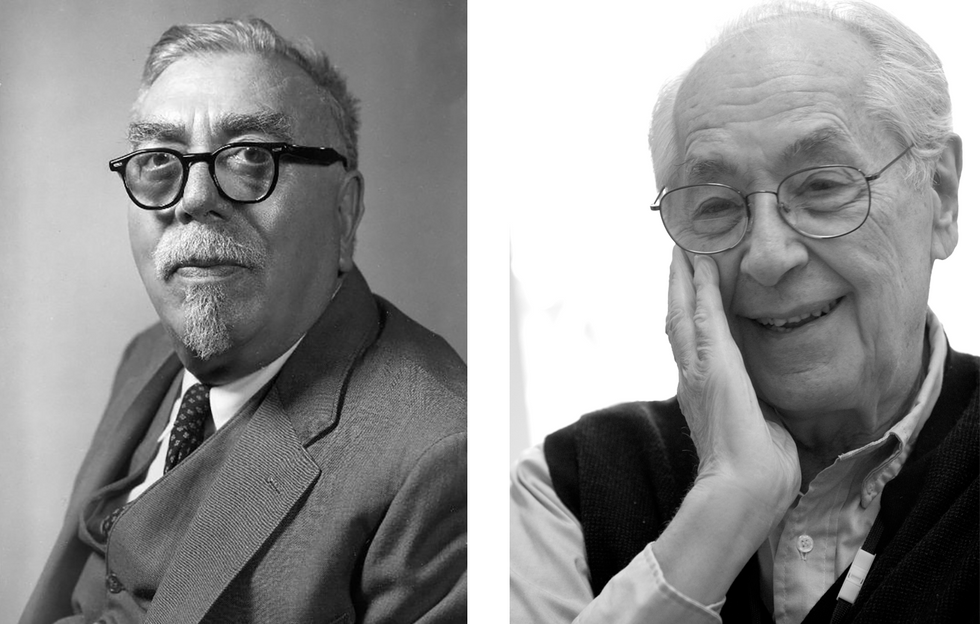

Discussions between cyberneticist Norbert Wiener [left] and surgeon Melvin Glimcher [right] inspired the Boston Arm. Left: MIT Museum; Right: Stephanie Mitchell/Harvard University

Discussions between cyberneticist Norbert Wiener [left] and surgeon Melvin Glimcher [right] inspired the Boston Arm. Left: MIT Museum; Right: Stephanie Mitchell/Harvard University MIT grad student Ralph Alter worked on signal processing and software for the Boston Arm. Robert W. Mann Collection/MIT Museum and Liberating Technologies/Coapt

MIT grad student Ralph Alter worked on signal processing and software for the Boston Arm. Robert W. Mann Collection/MIT Museum and Liberating Technologies/Coapt The Boston Arm’s movements were controlled by electrical signals from an amputee’s bicep and tricep muscles.Michael Cardinali/MIT Museum

The Boston Arm’s movements were controlled by electrical signals from an amputee’s bicep and tricep muscles.Michael Cardinali/MIT Museum Liberty Mutual Insurance Co. supported the development of the Boston Arm as a way of getting amputees off disability and back on the job.MIT Museum

Liberty Mutual Insurance Co. supported the development of the Boston Arm as a way of getting amputees off disability and back on the job.MIT Museum

In February 2023 this Waymo car stopped in a San Francisco street, backing up traffic behind it. The reason? The back door hadn’t been completely closed.Terry Chea/AP

In February 2023 this Waymo car stopped in a San Francisco street, backing up traffic behind it. The reason? The back door hadn’t been completely closed.Terry Chea/AP

Akira Yoshino, John Goodenough, and M. Stanley Whittingham [from left] shared the 2019 Nobel Prize in Chemistry. At 97, Goodenough was the oldest recipient in the history of the Nobel awards. Jonas Ekstromer/AFP/Getty Images

Akira Yoshino, John Goodenough, and M. Stanley Whittingham [from left] shared the 2019 Nobel Prize in Chemistry. At 97, Goodenough was the oldest recipient in the history of the Nobel awards. Jonas Ekstromer/AFP/Getty Images Whittingham’s battery, the first lithium intercalation battery, was developed at Exxon in 1972 using titanium disulfide for the cathode and metallic lithium for the anode.Johan Jarnestad/The Royal Swedish Academy of Sciences

Whittingham’s battery, the first lithium intercalation battery, was developed at Exxon in 1972 using titanium disulfide for the cathode and metallic lithium for the anode.Johan Jarnestad/The Royal Swedish Academy of Sciences In 1976, John Goodenough [left] joined the University of Oxford, where he headed development of the first lithium cobalt oxide cathode.The University of Texas at Austin

In 1976, John Goodenough [left] joined the University of Oxford, where he headed development of the first lithium cobalt oxide cathode.The University of Texas at Austin Goodenough’s 1980 battery replaced Whittingham’s titanium disulfide in the cathode with lithium cobalt oxide.Johan Jarnestad/The Royal Swedish Academy of Sciences

Goodenough’s 1980 battery replaced Whittingham’s titanium disulfide in the cathode with lithium cobalt oxide.Johan Jarnestad/The Royal Swedish Academy of Sciences Yoshino’s battery, developed at Asahi Chemical in the late 1980s, combined Goodenough’s cathode with a petroleum coke anode. Johan Jarnestad/The Royal Swedish Academy of Sciences

Yoshino’s battery, developed at Asahi Chemical in the late 1980s, combined Goodenough’s cathode with a petroleum coke anode. Johan Jarnestad/The Royal Swedish Academy of Sciences Nikola Marincic, working at Battery Engineering in Boston, transformed Asahi Chemical’s crude prototype [below] into preproduction cells. Lidija Ortloff

Nikola Marincic, working at Battery Engineering in Boston, transformed Asahi Chemical’s crude prototype [below] into preproduction cells. Lidija Ortloff

John Goodenough and his coinventor, Koichi Mizushima, convinced the Atomic Energy Research Establishment to fund the cost of patenting their lithium cobalt oxide battery but had to sign away their financial rights to do so. U.S. PATENT AND TRADEMARK OFFICE

John Goodenough and his coinventor, Koichi Mizushima, convinced the Atomic Energy Research Establishment to fund the cost of patenting their lithium cobalt oxide battery but had to sign away their financial rights to do so. U.S. PATENT AND TRADEMARK OFFICE

Source: IEEE Spectrum

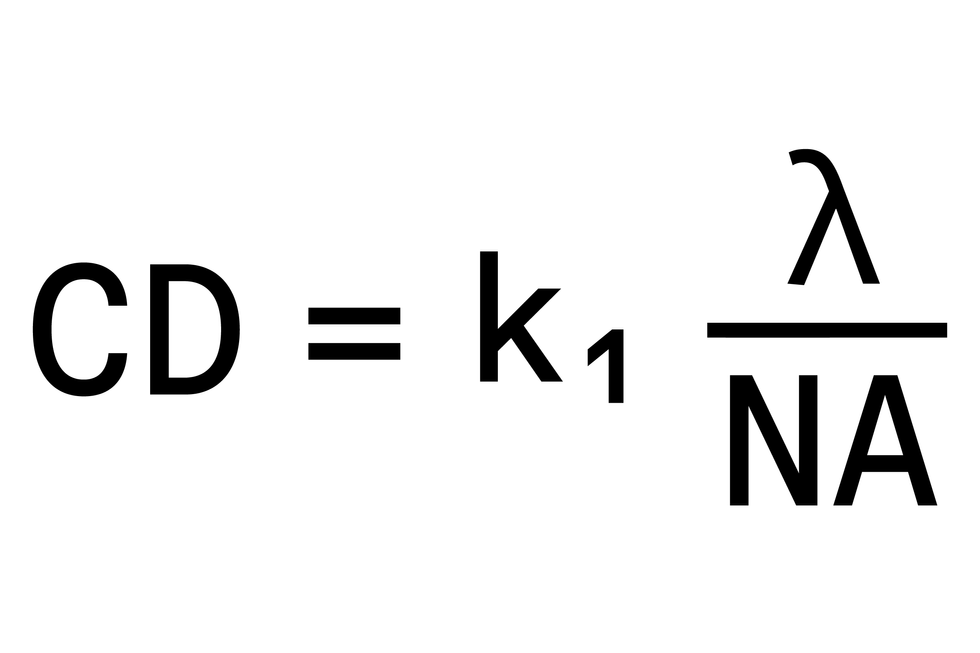

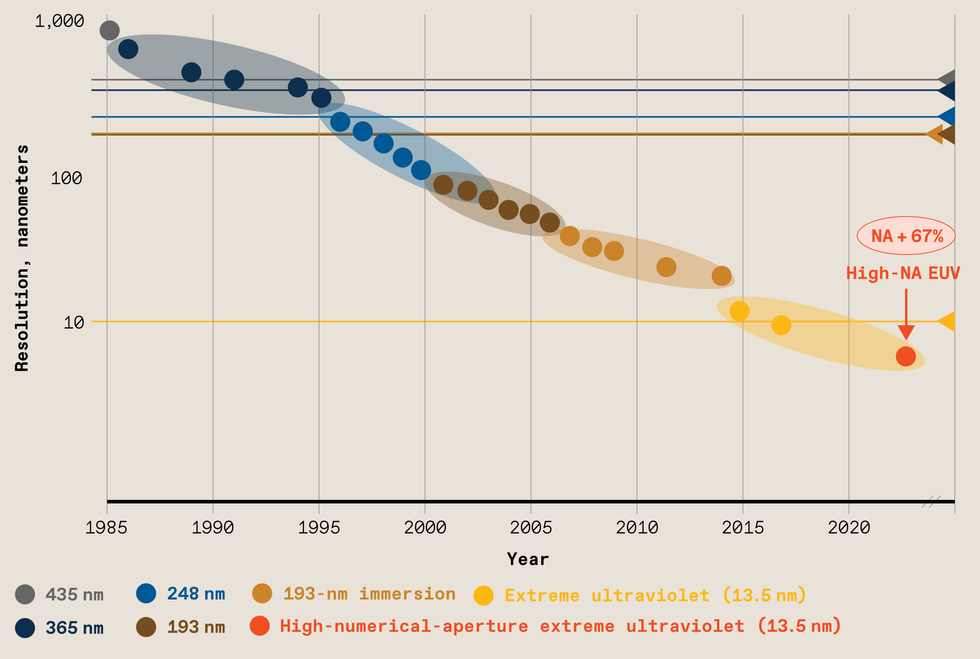

Source: IEEE Spectrum The resolution of photolithography has improved about 10,000-fold over the last four decades. That’s due in part to using smaller and smaller wavelengths of light, but it has also required greater numerical aperture and improved processing techniques.Source: ASML

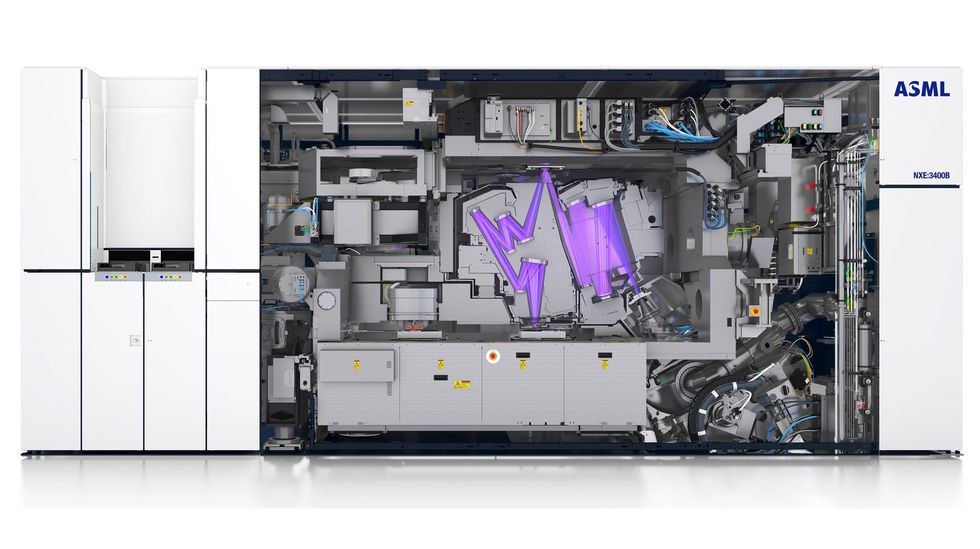

The resolution of photolithography has improved about 10,000-fold over the last four decades. That’s due in part to using smaller and smaller wavelengths of light, but it has also required greater numerical aperture and improved processing techniques.Source: ASML In a vacuum chamber, EUV light [purple] reflects off multiple mirrors before bouncing off the photomask [top center]. From there the light continues its journey until it is projected onto the wafer [bottom center], carrying the photomask’s pattern. The illustration shows today’s commercial system with a 0.33 numerical aperture. The optics in future systems, with an NA of 0.55, will be different. Source: ASML

In a vacuum chamber, EUV light [purple] reflects off multiple mirrors before bouncing off the photomask [top center]. From there the light continues its journey until it is projected onto the wafer [bottom center], carrying the photomask’s pattern. The illustration shows today’s commercial system with a 0.33 numerical aperture. The optics in future systems, with an NA of 0.55, will be different. Source: ASML If the EUV light strikes the photomask at too steep an angle, it will not reflect properly.Source: ASML

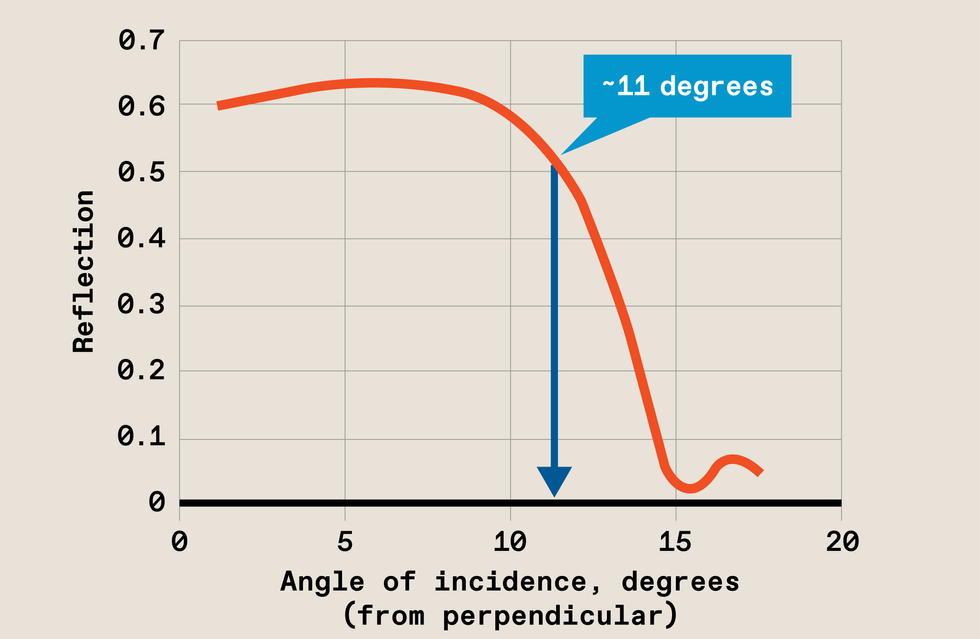

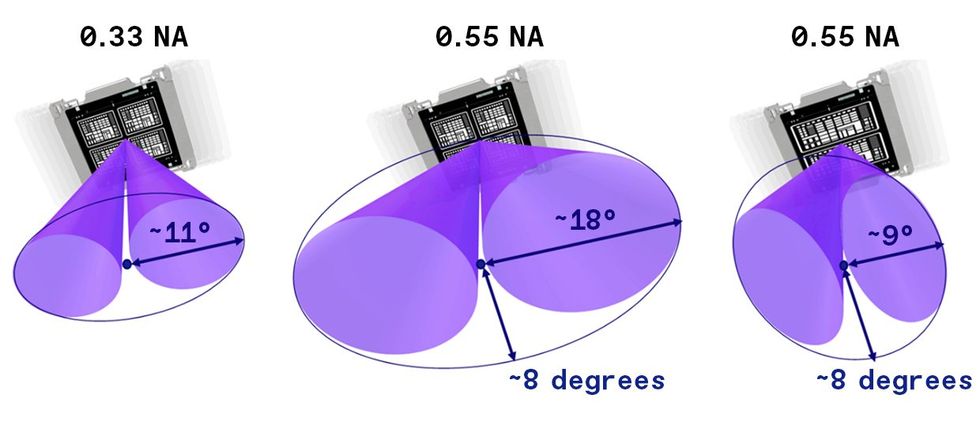

If the EUV light strikes the photomask at too steep an angle, it will not reflect properly.Source: ASML The angle of reflection at the mask in today’s EUV is at its limit [left] Increasing the numerical aperture of EUV would result in an angle of reflection that is too wide [center]. So high-NA EUV uses anamorphic optics, which allow the angle to increase in only one direction [right]. The field that can be imaged this way is half the size, so the pattern on the mask must be distorted in one direction, but that’s good enough to maintain throughput through the machine.Source: ASML

The angle of reflection at the mask in today’s EUV is at its limit [left] Increasing the numerical aperture of EUV would result in an angle of reflection that is too wide [center]. So high-NA EUV uses anamorphic optics, which allow the angle to increase in only one direction [right]. The field that can be imaged this way is half the size, so the pattern on the mask must be distorted in one direction, but that’s good enough to maintain throughput through the machine.Source: ASML