For more than a century, utility companies have used electromechanical relays to protect power systems against damage that might occur during severe weather, accidents, and other abnormal conditions. But the relays could neither locate the faults nor accurately record what happened.

Then, in 1977, Edmund O. Schweitzer III invented the digital microprocessor-based relay as part of his doctoral thesis. Schweitzer’s relay, which could locate a fault within a radius of 1 kilometer, set new standards for utility reliability, safety, and efficiency.

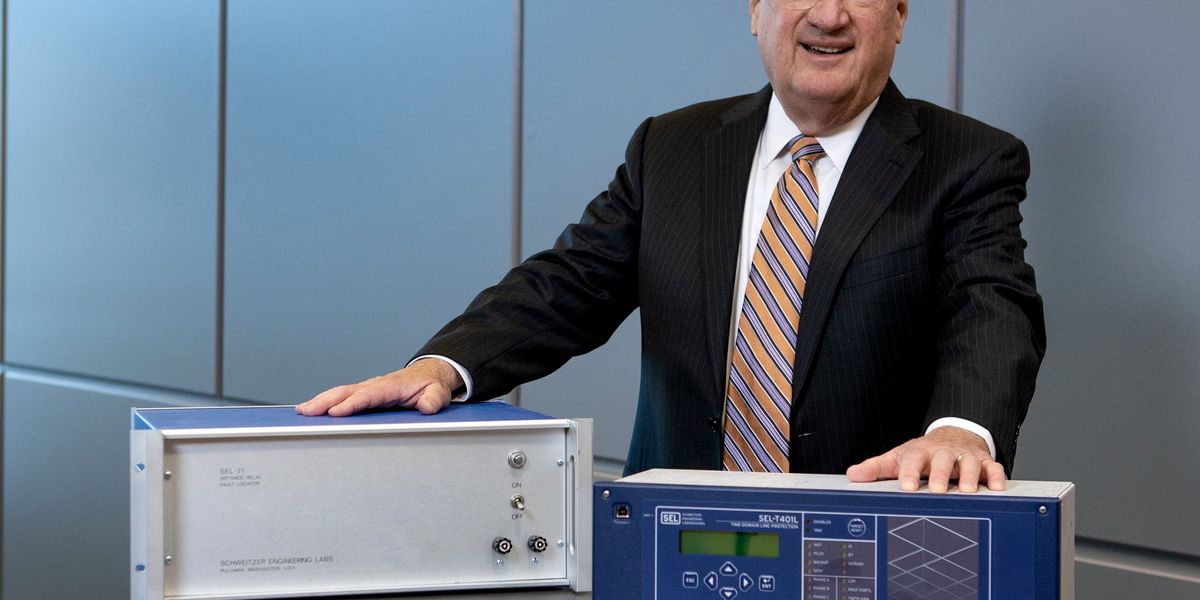

Edmund O. Schweitzer III

Employer: Schweitzer Engineering Laboratories

Title: President and CTO

Member grade: Life Fellow

Alma maters: Purdue University, West Lafayette, Ind.; Washington State University, Pullman

To develop and manufacture his relay, he launched Schweitzer Engineering Laboratories in 1982 from his basement in Pullman, Wash. Today SEL manufactures hundreds of products that protect, monitor, control, and automate electric power systems in more than 165 countries.

Schweitzer, an IEEE Life Fellow, is his company’s president and chief technology officer. He started SEL with seven workers; it now has more than 6,000.

The 40-year-old employee-owned company continues to grow. It has four manufacturing facilities in the United States. Its newest one, which opened in March in Moscow, Idaho, fabricates printed circuit boards.

Schweitzer has received many accolades for his work, including the 2012 IEEE Medal in Power Engineering. In 2019 he was inducted into the U.S. National Inventors Hall of Fame.

Advances in power electronics

Power system faults can happen when a tree or vehicle hits a power line, a grid operator makes a mistake, or equipment fails. The fault shunts extra current to some parts of the circuit, shorting it out.

Without a proper protection scheme or device to safeguard equipment and maintain continuity of supply, an outage or blackout could propagate throughout the grid.

Overcurrent is not the only consequence of a fault, though. Faults can also change voltages, frequencies, and the direction of current flow.

A protection scheme should quickly isolate the fault from the rest of the grid, thus limiting damage on the spot and preventing the fault from spreading to the rest of the system. To do that, protection devices must be installed.

That’s where Schweitzer’s digital microprocessor-based relay comes in. He perfected it in 1982. It later was commercialized and sold as the SEL-21 digital distance relay/fault locator.

Inspired by a blackout and a protective relays book

Schweitzer says his relay was, in part, inspired by an event that took place during his first year of college.

“Back in 1965, when I was a freshman at Purdue University, a major blackout left millions without power for hours in the U.S. Northeast and Ontario, Canada,” he recalls. “It was quite an event, and I remember it well. I learned many lessons from it. One was how difficult it was to restore power.”

He says he also was inspired by the book Protective Relays: Their Theory and Practice. He read it while an engineering graduate student at Washington State University, in Pullman.

“I bought the book on the Thursday before classes began and read it over the weekend,” he says. “I couldn’t put it down. I was hooked.

“I realized that these solid-state devices were special-purpose signal processors. They read the voltage and current from the power systems and decided whether the power systems’ apparatuses were operating correctly. I started thinking about how I could take what I knew about digital signal processing and put it to work inside a microprocessor to protect an electric power system.”
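Schweitzer’s description of a relay as a signal processor that reads voltage and current and decides whether equipment is healthy can be illustrated with the textbook one-cycle Fourier filter used in many digital relays. The sketch below is a minimal illustration under stated assumptions, not the algorithm in Schweitzer’s thesis or the SEL-21: it assumes a fixed number of samples per power-frequency cycle, and the function names, the 16-samples-per-cycle rate, and the 1,200 A pickup setting are all hypothetical.

```python
import math


def fundamental_phasor(samples, samples_per_cycle):
    """Estimate the fundamental-frequency phasor of the most recent cycle.

    Uses a one-cycle discrete Fourier transform (a "full-cycle Fourier filter").
    Returns (RMS magnitude, angle in radians relative to a cosine reference).
    """
    n = samples_per_cycle
    if len(samples) < n:
        raise ValueError("need at least one full cycle of samples")
    window = samples[-n:]  # most recent full cycle only
    re = sum(x * math.cos(2 * math.pi * k / n) for k, x in enumerate(window))
    im = -sum(x * math.sin(2 * math.pi * k / n) for k, x in enumerate(window))
    peak = (2.0 / n) * math.hypot(re, im)  # peak amplitude of the fundamental
    return peak / math.sqrt(2), math.atan2(im, re)


def overcurrent_trip(samples, samples_per_cycle, pickup_amps):
    """Hypothetical trip rule: trip if fundamental RMS current exceeds pickup."""
    magnitude, _angle = fundamental_phasor(samples, samples_per_cycle)
    return magnitude > pickup_amps


# Hypothetical example: 16 samples per cycle, 1,200 A pickup.
N = 16
load = [400 * math.sqrt(2) * math.cos(2 * math.pi * k / N) for k in range(N)]
fault = [5000 * math.sqrt(2) * math.cos(2 * math.pi * k / N + 0.5) for k in range(N)]
print(overcurrent_trip(load, N, 1200), overcurrent_trip(fault, N, 1200))
```

Because the window spans exactly one cycle, a constant DC offset and low-order integer harmonics cancel out of the estimate; a real relay would add further filtering (for example, against decaying DC offset) and far more elaborate trip logic.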

Four-bit and 8-bit microprocessors were new at the time.

“I think this is how most inventions start: taking one technology and putting it together with another to make new things,” he says. “The inventors of the microprocessor had no idea about all the kinds of things people would use it for. It is amazing.”

He says he was introduced to signal processing, signal analysis, and how to use digital techniques in 1968 while at his first job, working for the U.S. Department of Defense at Fort Meade, in Maryland.

Faster ways to clear faults and improve cybersecurity

Schweitzer continues to invent ways of protecting and controlling electric power systems. In 2016 his company released the SEL-T400L, which samples a power system every microsecond to measure the time between traveling waves, which move along the line at nearly the speed of light. The idea is to detect and locate transmission line faults quickly.
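The traveling-wave idea can be sketched with the standard fault-location formulas. Suppose a fault at distance d from terminal A of a line of length L launches a wave at an unknown time t_0; the wave reaches A at t_A = t_0 + d/v and terminal B at t_B = t_0 + (L - d)/v. Subtracting cancels t_0 and gives d = (L + v(t_A - t_B))/2. A single-ended relay can instead use the interval Δt between the first wave and its reflection from the fault, d = vΔt/2. This is a hedged sketch, not SEL’s implementation: the function names are hypothetical, and the propagation velocity of 0.98 times the speed of light is an assumed value that in practice depends on the line.

```python
C_KM_PER_US = 0.299792458  # speed of light, km per microsecond


def double_ended_location(line_km, t_a_us, t_b_us, velocity_factor=0.98):
    """Distance (km) from terminal A to the fault.

    t_a_us and t_b_us are first-arrival times of the fault-launched traveling
    wave at terminals A and B, on a shared (e.g., GPS-synchronized) clock.
    """
    v = velocity_factor * C_KM_PER_US
    d = (line_km + v * (t_a_us - t_b_us)) / 2.0
    if not 0.0 <= d <= line_km:
        raise ValueError("arrival times are inconsistent with a fault on this line")
    return d


def single_ended_location(t_incident_us, t_reflected_us, velocity_factor=0.98):
    """Distance (km) from the relay, using the interval between the first
    wave and its reflection returning from the fault."""
    v = velocity_factor * C_KM_PER_US
    return v * (t_reflected_us - t_incident_us) / 2.0


# Hypothetical 100 km line with a fault 30 km from terminal A at t = 0.
v = 0.98 * C_KM_PER_US
print(double_ended_location(100.0, 30.0 / v, 70.0 / v))  # about 30 km
print(single_ended_location(30.0 / v, 90.0 / v))           # about 30 km
```

Because d depends on v(t_A - t_B)/2, a 1 µs error in the timestamp difference shifts the double-ended estimate by v/2, about 147 m at the assumed velocity, which is one reason microsecond-level sampling matters.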

The relay decides whether to trip a circuit or take other actions in 1 to 2 milliseconds. Previously, a protective relay took on the order of 16 ms. In high-voltage AC circuits, a typical circuit breaker then takes 30 to 40 ms to trip.

“I like to talk about the need for speed,” Schweitzer says. “In this day and age, there’s no reason to wait to clear a fault. Faster tripping is a tremendous opportunity from a point of view of voltage and angle stability, safety, reducing fire risk, and damage to electrical equipment.

“We are also going to be able to get a lot more out of the existing infrastructure by tripping faster. For every millisecond in clearing time saved, the transmission system stability limits go up by 15 megawatts. That’s about one feeder per millisecond. So, if we save 12 ms, all of the sudden we are able to serve 12 more distribution feeders from one part of one transmission system.”
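Schweitzer’s arithmetic can be checked directly. The sketch below treats total clearing time as relay decision time plus breaker operating time (a simplification that ignores, for example, communication delays) and applies his quoted rule of thumb of 15 MW of added stability limit per millisecond saved, with one feeder taken as roughly 15 MW, as his “one feeder per millisecond” implies. The linear rate is his figure for the system he describes, not a general law; the function names and the 35 ms breaker time (a midpoint of the quoted 30 to 40 ms range) are hypothetical.

```python
MW_PER_MS = 15.0      # Schweitzer's quoted rule of thumb for stability-limit gain
MW_PER_FEEDER = 15.0  # implied by "about one feeder per millisecond"


def clearing_time_ms(relay_ms, breaker_ms):
    """Simplified fault-clearing time: relay decision time plus breaker time."""
    return relay_ms + breaker_ms


def stability_gain(saved_ms, mw_per_ms=MW_PER_MS, mw_per_feeder=MW_PER_FEEDER):
    """Return (extra MW of stability limit, equivalent number of feeders)."""
    extra_mw = saved_ms * mw_per_ms
    return extra_mw, extra_mw / mw_per_feeder


# Quoted figure: saving 12 ms.
print(stability_gain(12))  # (180.0, 12.0)

# Hypothetical: same 35 ms breaker, relay time cut from 16 ms to 2 ms.
saved = clearing_time_ms(16, 35) - clearing_time_ms(2, 35)
print(saved, stability_gain(saved))  # 14 (210.0, 14.0)
```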

The time-domain technology will also find applications in transformer and distribution protection schemes, he says, and will have a significant impact on DC transmission.

What excites Schweitzer today, he says, is the concept of energy packets, which he and SEL have been working on. The packets measure energy exchange for all signals, including those on distorted AC systems and DC networks.

“Energy packets precisely measure energy transfer, independent of frequency or phase angle, and update at a fixed rate with a common time reference such as every millisecond,” he says. “Time-domain energy packets provide an opportunity to speed up control systems and accurately measure energy on distorted systems—which challenges traditional frequency-domain calculation methods.”
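The article does not describe how SEL computes energy packets. As a hedged illustration of the basic idea only, the sketch below integrates instantaneous power v·i over consecutive 1 ms windows, so no frequency, phasor, or phase angle enters the calculation. It assumes time-aligned voltage and current samples whose first sample falls on a boundary of the shared time reference; the function name and its parameters are hypothetical.

```python
import math


def energy_packets(v, i, sample_rate_hz, packet_ms=1.0):
    """Split synchronized voltage/current samples into fixed-length windows and
    integrate instantaneous power v*i over each one (rectangle rule).

    Returns energy in joules per packet; a trailing partial window is dropped.
    Sample 0 is assumed to sit on a boundary of the shared time reference.
    """
    per_packet = round(sample_rate_hz * packet_ms / 1000.0)
    dt = 1.0 / sample_rate_hz
    packets = []
    for start in range(0, len(v) - per_packet + 1, per_packet):
        stop = start + per_packet
        packets.append(sum(vk * ik for vk, ik in zip(v[start:stop], i[start:stop])) * dt)
    return packets


# Hypothetical 60 Hz example: 100 V peak, 10 A peak, in phase, sampled at 60 kHz.
fs = 60_000
t = [k / fs for k in range(3000)]  # 50 ms = exactly three 60 Hz cycles
v_ac = [100 * math.cos(2 * math.pi * 60 * tk) for tk in t]
i_ac = [10 * math.cos(2 * math.pi * 60 * tk) for tk in t]
packets = energy_packets(v_ac, i_ac, fs)
print(len(packets), sum(packets))
```

With a 60 Hz sine wave the individual 1 ms packets differ, because instantaneous power pulsates at twice the line frequency, but over whole cycles they sum to the familiar average-power result (here 500 W × 50 ms = 25 J). The same function works unchanged on DC or distorted waveforms.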

He also is focusing on improving the reliability of critical infrastructure networks by improving cybersecurity, situational awareness, and performance. Plug-and-play and best-effort networking aren’t safe enough for critical infrastructure, he says.

“SEL OT SDN technology solves some significant cybersecurity problems,” he says, “and frankly, it makes me feel comfortable for the first time with using Ethernet in a substation.”

From engineering professor to inventor

Schweitzer didn’t start off planning to launch his own company. He began a successful career in academia in 1977 after joining the electrical engineering faculty at Ohio University, in Athens. Two years later, he moved to Pullman, Wash., where he taught at Washington State’s Voiland College of Engineering and Architecture for the next six years. It was only after sales of the SEL-21 took off that he decided to devote himself to his startup full time.

It’s little surprise that Schweitzer became an inventor and started his own company, as his father and grandfather were inventors and entrepreneurs.

His grandfather, Edmund O. Schweitzer, who held 87 patents, invented the first reliable high-voltage fuse in collaboration with Nicholas J. Conrad in 1911, the year the two founded Schweitzer and Conrad—today known as S&C Electric Co.—in Chicago.

Schweitzer’s father, Edmund O. Schweitzer Jr., had 208 patents. He invented several line-powered fault-indicating devices, and he founded the E.O. Schweitzer Manufacturing Co. in 1949. It is now part of SEL.

Schweitzer says a friend gave him the best financial advice he ever got about starting a business: Save your money.

“I am so proud that our 6,000-plus-person company is 100 percent employee-owned,” Schweitzer says. “We want to invest in the future, so we reinvest our savings into growth.”

He advises those who are planning to start a business to focus on their customers and create value for them.

“Unleash your creativity,” he says, “and get engaged with customers. Also, figure out how to contribute to society and make the world a better place.”

Reference: https://ift.tt/cYUkMsB

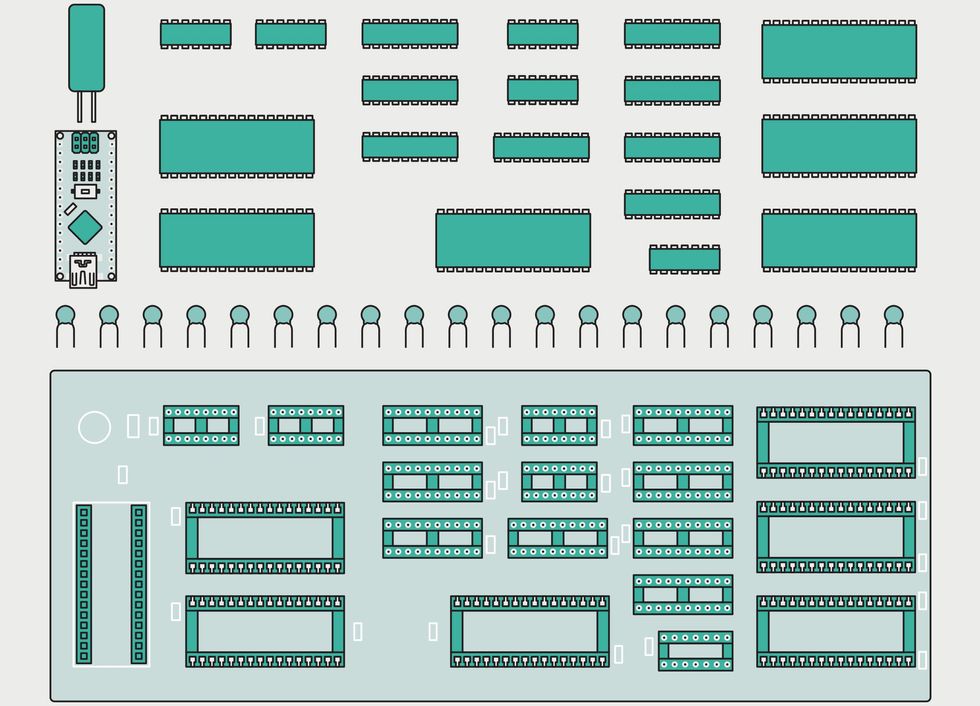

Apart from some flip-flops, a couple of NOT gates, and an up-down counter, the PureTuring machine uses only RAM and ROM chips—there are no logic chips. An Arduino board [bottom] monitors the RAM to extract display data. James Provost

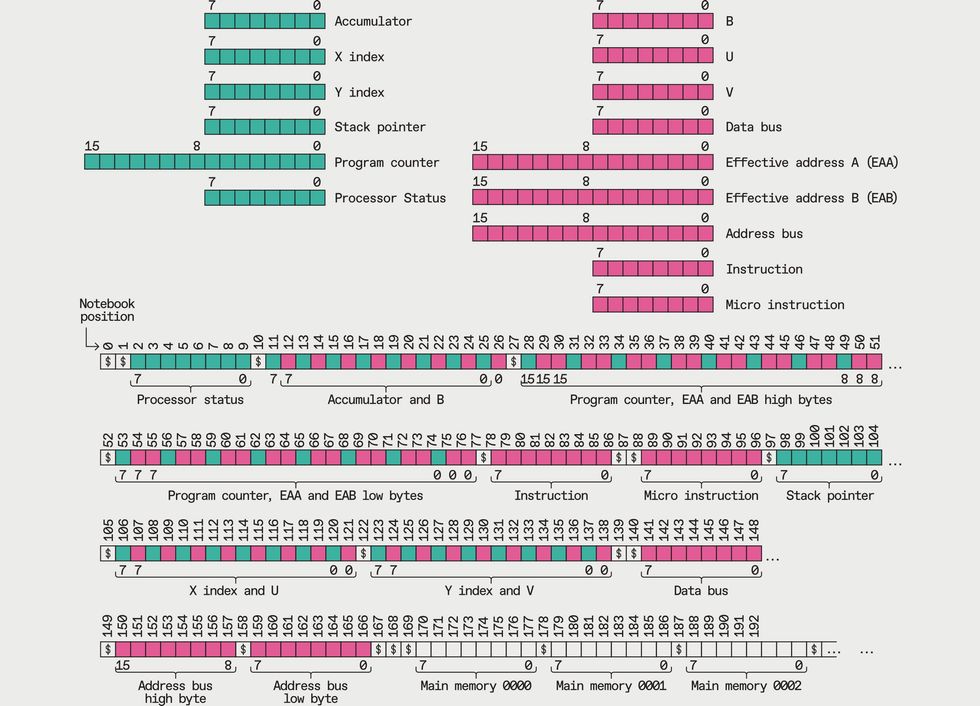

Only some of the 6502 registers are exposed to programmers [green]; its internal, hidden registers [purple] are used to execute instructions. Below each register is shown how the registers are arranged, and sometimes interleaved, on the PureTuring’s “tape.” James Provost

The robot and the professional human both achieved similar results in their garden plots. UC Berkeley

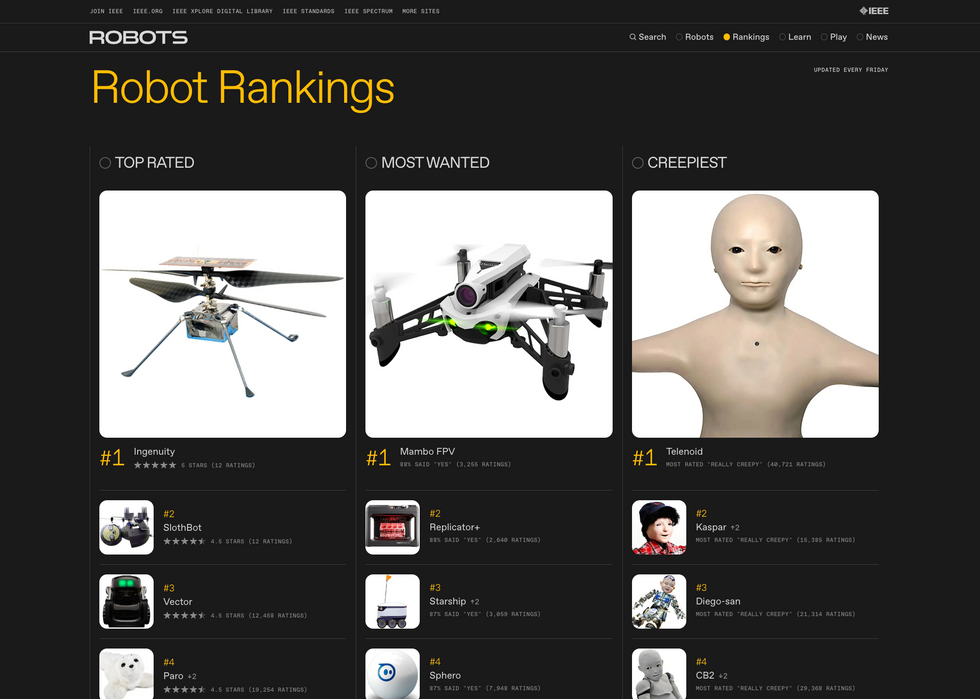

Rate this robot: For each robot on the site, you can submit your overall rating, answer if you’d want to have this robot, and rate its appearance. IEEE Spectrum

The Robots Guide features three rankings: Top Rated, Most Wanted, and Creepiest. IEEE Spectrum

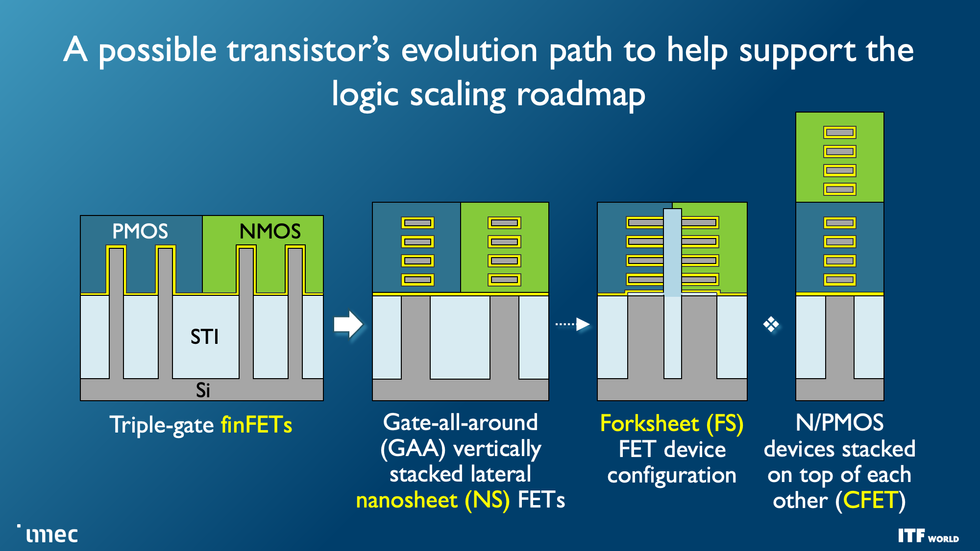

Leading-edge transistors are already transitioning from the fin field-effect transistor (FinFET) architecture to nanosheets. The ultimate goal is to stack two devices atop each other in a CFET configuration. The forksheet may be an intermediary step on the way. Imec
