Monday, October 31, 2022

Republicans Continue to Spread Baseless Claims About Pelosi Attack

Video Monday: IROS 2022 Award Winners

IROS 2022 took place in Kyoto last week, bringing together thousands of roboticists from around the world to share all the latest awesome research they’ve been working on. We’ve got a bunch of stuff to bring you from the conference, but while we work on that (and recover from some monster jetlag), here are the presentation videos of all of the IROS 2022 award-winning papers. This is some of the best, most impactful robotics research presented this year. Congratulations to all of the winners!

IROS 2022 Best Paper Award

“SpeedFolding: Learning Efficient Bimanual Folding of Garments,” by Yahav Avigal, Lars Berscheid, Tamim Asfour, Torsten Kroeger, and Ken Goldberg from UC Berkeley and Karlsruhe Institute of Technology.

Read more: https://events.infovaya.com/presentation?id=84508

IROS 2022 Best Student Paper Award – Sponsored by ABB

“FAR Planner: Fast, Attemptable Route Planner Using Dynamic Visibility Update,” by Fan Yang, Chao Cao, Hongbiao Zhu, Jean Oh, and Ji Zhang from Carnegie Mellon University and Harbin Institute of Technology.

Read more: https://events.infovaya.com/presentation?id=84511

IROS Best Paper Award on Cognitive Robotics – Sponsored by KROS

“Gesture2Vec: Clustering Gestures Using Representation Learning Methods for Co-Speech Gesture Generation,” by Payam Jome Yazdian, Mo Chen, and Angelica Lim from Simon Fraser University.

Read more: https://events.infovaya.com/presentation?id=90186

IROS 2022 Best RoboCup Paper Award – Sponsored by RoboCup Federation

“RCareWorld: A Human-centric Simulation World for Caregiving Robots,” by Ruolin Ye, Wenqiang Xu, Haoyuan Fu, Rajat Kumar Jenamani, Vy Nguyen, Cewu Lu, Katherine Dimitropoulou, and Tapomayukh Bhattacharjee from Cornell University, Shanghai Jiaotong University, and Columbia University.

Read more: https://events.infovaya.com/presentation?id=84520

IROS Best Paper Award on Robot Mechanisms and Design – Sponsored by ROBOTIS

“Aerial Grasping and the Velocity Sufficiency Region,” by Tony G. Chen, Kenneth Hoffmann, JunEn Low, Keiko Nagami, David Lentink, and Mark Cutkosky from Stanford University and Wageningen University.

Read more: https://events.infovaya.com/presentation?id=85675

IROS Best Entertainment and Amusement Paper Award – Sponsored by JTCF

“Robot Learning to Paint From Demonstrations,” by Younghyo Park, Seunghun Jeon, and Taeyoon Lee from Seoul National University, KAIST, and Naver Labs.

Read more: https://events.infovaya.com/presentation?id=85681

IROS Best Paper Award on Safety, Security, and Rescue Robotics in memory of Motohiro Kisoi – Sponsored by IRS

“Power-Based Safety Layer for Aerial Vehicles in Physical Interaction Using Lyapunov Exponents,” by Eugenio Cuniato, Nicholas Lawrance, Marco Tognon, and Roland Siegwart from ETH Zurich and CSIRO.

Read more: https://events.infovaya.com/presentation?id=86266

IROS Best Paper Award on Agri-Robotics – Sponsored by YANMAR

“Explicitly Incorporating Spatial Information to Recurrent Networks for Agriculture,” by Claus Smitt, Michael Allan Halstead, Alireza Ahmadi, and Christopher Steven McCool from the University of Bonn.

Read more: https://events.infovaya.com/presentation?id=86839

IROS Best Paper Award on Mobile Manipulation – Sponsored by OMRON Sinic X Corp.

“Robot Learning of Mobile Manipulation with Reachability Behavior Priors,” by Snehal Jauhri, Jan Peters, and Georgia Chalvatzaki from TU Darmstadt.

Read more: https://events.infovaya.com/presentation?id=86827

IROS Best Application Paper Award – Sponsored by ICROS

“Soft Tissue Characterisation Using a Novel Robotic Medical Percussion Device with Acoustic Analysis and Neural Network,” by Pilar Zhang Qiu, Yongxuan Tan, Oliver Thompson, Bennet Cobley, and Thrishantha Nanayakkara from Imperial College London.

Read more: https://events.infovaya.com/presentation?id=86287

IROS Best Paper Award for Industrial Robotics Research for Applications – Sponsored by Mujin Inc.

“Absolute Position Detection in 7-Phase Sensorless Electric Stepper Motor,” by Vincent Groenhuis, Gijs Rolff, Koen Bosman, Leon Abelmann, and Stefano Stramigioli from the University of Twente, IMS BV, and Eye-on-Air.

Read more: https://events.infovaya.com/presentation?id=85705

Reference: https://ift.tt/6ngfobO

Unconfirmed hack of Liz Truss’ phone prompts calls for “urgent investigation”

Liz Truss, then Chief Secretary to the Treasury, taking a picture on her phone on May 1, 2018, in London. (credit: Getty Images)

British opposition politicians are calling for an "urgent investigation" into an unconfirmed media report that spies suspected of working for Russia hacked the phone of former Prime Minister Liz Truss while she was serving as foreign minister.

The report, published by the UK's Mail on Sunday newspaper, cited unnamed sources speaking on condition of anonymity who said that Truss' personal cell phone had been hacked "by agents suspected of working for the Kremlin." The attackers reportedly acquired "up to a year's worth of messages," containing "highly sensitive discussions with senior international foreign ministers about the war in Ukraine, including detailed discussions about arms shipments."

The Mail said that the hack was discovered during the Conservative Party's summer leadership campaign (the first of two that year), which ultimately made Truss prime minister. The discovery was reportedly "suppressed" by Boris Johnson, who was UK prime minister at the time of the campaign, and by Cabinet Secretary Simon Case, who reportedly imposed a news blackout.

Why the App Store’s tone-deaf gambling ads make me worry about Apple

(credit: Aurich Lawson | Getty Images)

Apple released iOS 16.1 and iPadOS 16.1 to the public last week, with a long list of new features, fixes, and high-priority zero-day security updates. The updates also included the latest version of SKAdNetwork, Apple's ad services framework for the App Store, and expanded advertisements beyond the "Search" tab, where they had previously been relegated. Other changes included new App Store rules that give Apple a cut of NFT sales and of purchases made to boost posts within social media apps.

Whatever these new ad-related updates were meant to achieve, reports from Apple's third-party app developers, bloggers, and users indicated that the end result was a flood of irrelevant and obnoxious ads, quite often for crypto-related scams and gambling. In quite a few instances, those ads were not just annoying but inappropriate, appearing next to kids' games or apps for gambling addiction recovery.

We contacted Apple to see whether it has anything to share about its ad rollout, and the company told us (and other outlets) that it had "paused ads related to gambling and a few other categories on App Store product pages." In the short term, the most egregious problem has been addressed, and in any case, "gambling apps advertised next to gambling addiction recovery apps" seemed like a result of unforeseen circumstances rather than something that Apple intended to happen.

Sunday, October 30, 2022

Can Elon Musk Make the Math Work on Owning Twitter? It’s Dicey.

Elon Musk, in a Tweet, Shares Link From Site Known to Publish False News

Saturday, October 29, 2022

Elon Musk Is Said to Have Ordered Job Cuts Across Twitter

This Implant Turns Brain Waves Into Words

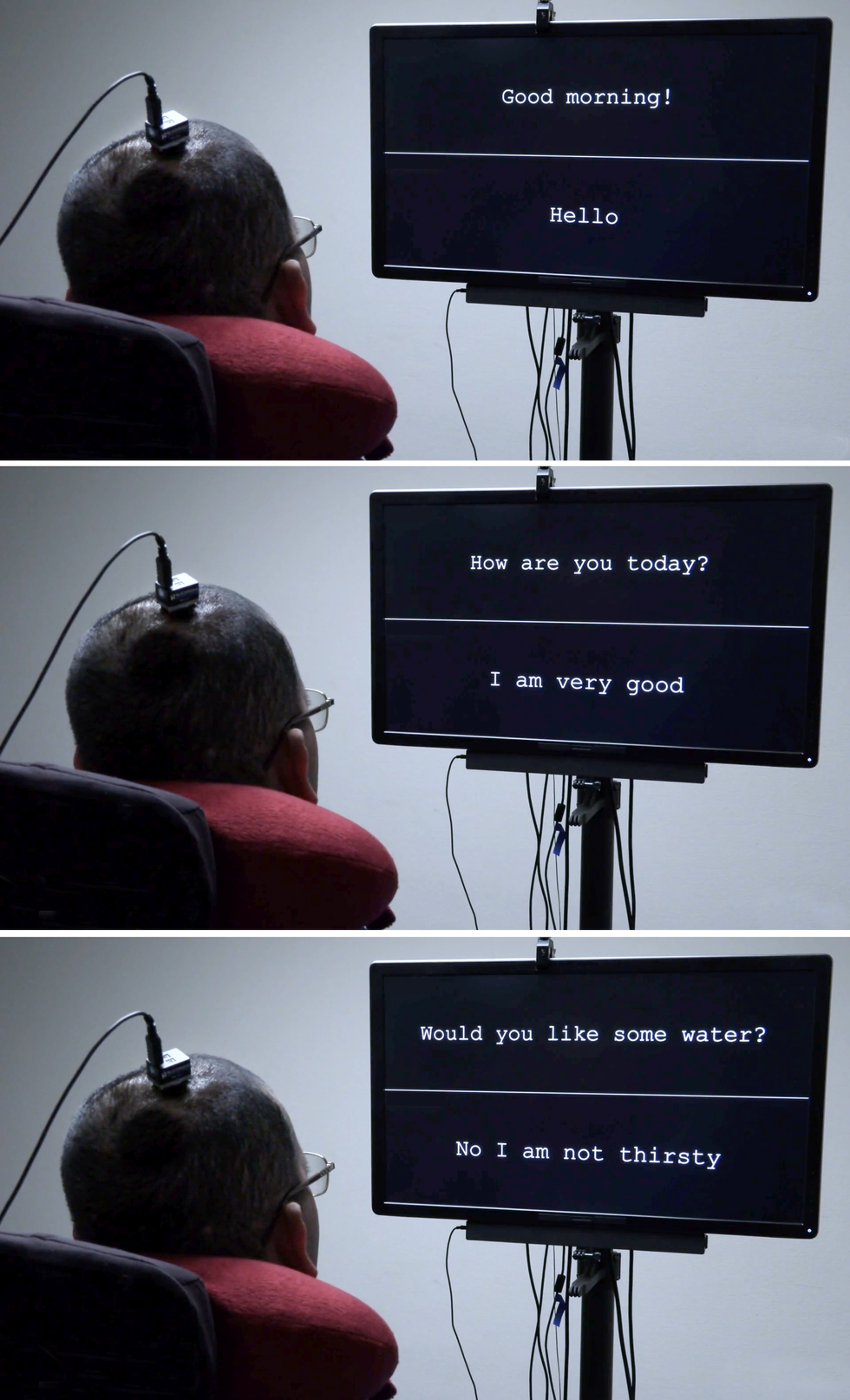

A computer screen shows the question “Would you like some water?” Underneath, three dots blink, followed by words that appear, one at a time: “No I am not thirsty.”

It was brain activity that made those words materialize—the brain of a man who has not spoken for more than 15 years, ever since a stroke damaged the connection between his brain and the rest of his body, leaving him mostly paralyzed. He has used many other technologies to communicate; most recently, he used a pointer attached to his baseball cap to tap out words on a touchscreen, a method that was effective but slow. He volunteered for my research group’s clinical trial at the University of California, San Francisco in hopes of pioneering a faster method. So far, he has used the brain-to-text system only during research sessions, but he wants to help develop the technology into something that people like himself could use in their everyday lives.

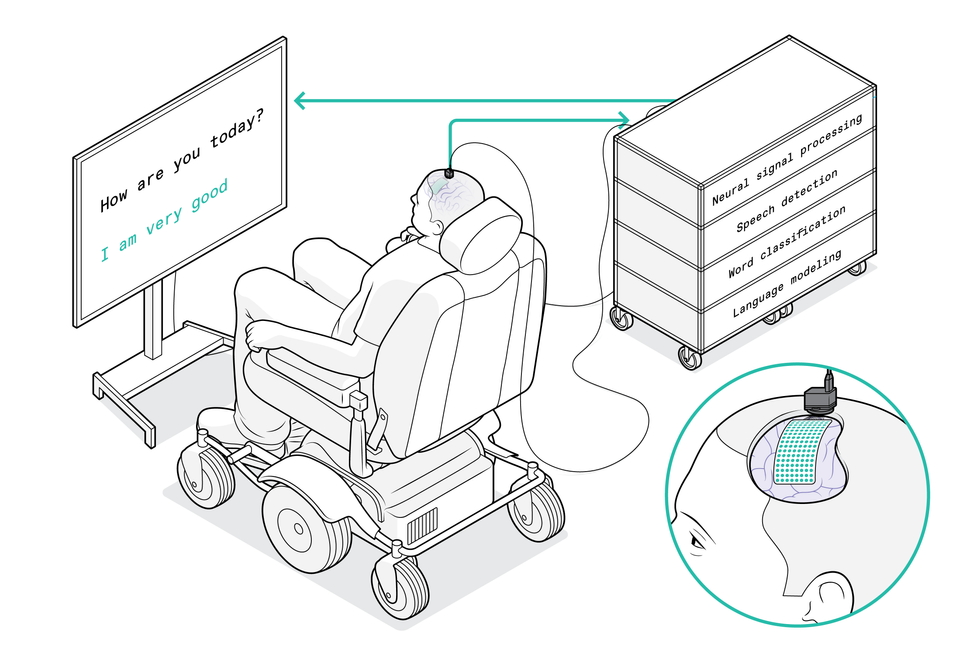

In our pilot study, we draped a thin, flexible electrode array over the surface of the volunteer’s brain. The electrodes recorded neural signals and sent them to a speech decoder, which translated the signals into the words the man intended to say. It was the first time a paralyzed person who couldn’t speak had used neurotechnology to broadcast whole words—not just letters—from the brain.

That trial was the culmination of more than a decade of research on the underlying brain mechanisms that govern speech, and we’re enormously proud of what we’ve accomplished so far. But we’re just getting started. My lab at UCSF is working with colleagues around the world to make this technology safe, stable, and reliable enough for everyday use at home. We’re also working to improve the system’s performance so it will be worth the effort.

How neuroprosthetics work

The first version of the brain-computer interface gave the volunteer a vocabulary of 50 practical words. University of California, San Francisco

Neuroprosthetics have come a long way in the past two decades. Prosthetic implants for hearing have advanced the furthest, with designs that interface with the cochlear nerve of the inner ear or directly with the auditory brain stem. There’s also considerable research on retinal and brain implants for vision, as well as efforts to give people with prosthetic hands a sense of touch. All of these sensory prosthetics take information from the outside world and convert it into electrical signals that feed into the brain’s processing centers.

The opposite kind of neuroprosthetic records the electrical activity of the brain and converts it into signals that control something in the outside world, such as a robotic arm, a video-game controller, or a cursor on a computer screen. That last control modality has been used by groups such as the BrainGate consortium to enable paralyzed people to type words—sometimes one letter at a time, sometimes using an autocomplete function to speed up the process.

For that typing-by-brain function, an implant is typically placed in the motor cortex, the part of the brain that controls movement. Then the user imagines certain physical actions to control a cursor that moves over a virtual keyboard. Another approach, pioneered by some of my collaborators in a 2021 paper, had one user imagine that he was holding a pen to paper and was writing letters, creating signals in the motor cortex that were translated into text. That approach set a new record for speed, enabling the volunteer to write about 18 words per minute.

In my lab’s research, we’ve taken a more ambitious approach. Instead of decoding a user’s intent to move a cursor or a pen, we decode the intent to control the vocal tract, comprising dozens of muscles governing the larynx (commonly called the voice box), the tongue, and the lips.

The seemingly simple conversational setup for the paralyzed man [in pink shirt] is enabled by both sophisticated neurotech hardware and machine-learning systems that decode his brain signals. University of California, San Francisco

I began working in this area more than 10 years ago. As a neurosurgeon, I would often see patients with severe injuries that left them unable to speak. To my surprise, in many cases the locations of brain injuries didn’t match up with the syndromes I learned about in medical school, and I realized that we still have a lot to learn about how language is processed in the brain. I decided to study the underlying neurobiology of language and, if possible, to develop a brain-machine interface (BMI) to restore communication for people who have lost it. In addition to my neurosurgical background, my team has expertise in linguistics, electrical engineering, computer science, bioengineering, and medicine. Our ongoing clinical trial is testing both hardware and software to explore the limits of our BMI and determine what kind of speech we can restore to people.

The muscles involved in speech

Speech is one of the behaviors that sets humans apart. Plenty of other species vocalize, but only humans combine a set of sounds in myriad different ways to represent the world around them. It’s also an extraordinarily complicated motor act—some experts believe it’s the most complex motor action that people perform. Speaking is a product of modulated air flow through the vocal tract; with every utterance we shape the breath by creating audible vibrations in our laryngeal vocal folds and changing the shape of the lips, jaw, and tongue.

Many of the muscles of the vocal tract are quite unlike the joint-based muscles such as those in the arms and legs, which can move in only a few prescribed ways. For example, the muscle that controls the lips is a sphincter, while the muscles that make up the tongue are governed more by hydraulics—the tongue is largely composed of a fixed volume of muscular tissue, so moving one part of the tongue changes its shape elsewhere. The physics governing the movements of such muscles is totally different from that of the biceps or hamstrings.

Because there are so many muscles involved and they each have so many degrees of freedom, there’s essentially an infinite number of possible configurations. But when people speak, it turns out they use a relatively small set of core movements (which differ somewhat in different languages). For example, when English speakers make the “d” sound, they put their tongues behind their teeth; when they make the “k” sound, the backs of their tongues go up to touch the ceiling of the back of the mouth. Few people are conscious of the precise, complex, and coordinated muscle actions required to say the simplest word.

Team member David Moses looks at a readout of the patient’s brain waves [left screen] and a display of the decoding system’s activity [right screen]. University of California, San Francisco

My research group focuses on the parts of the brain’s motor cortex that send movement commands to the muscles of the face, throat, mouth, and tongue. Those brain regions are multitaskers: They manage muscle movements that produce speech and also the movements of those same muscles for swallowing, smiling, and kissing.

Studying the neural activity of those regions in a useful way requires both spatial resolution on the scale of millimeters and temporal resolution on the scale of milliseconds. Historically, noninvasive imaging systems have been able to provide one or the other, but not both. When we started this research, we found remarkably little data on how brain activity patterns were associated with even the simplest components of speech: phonemes and syllables.

Here we owe a debt of gratitude to our volunteers. At the UCSF epilepsy center, patients preparing for surgery typically have electrodes surgically placed over the surfaces of their brains for several days so we can map the regions involved when they have seizures. During those few days of wired-up downtime, many patients volunteer for neurological research experiments that make use of the electrode recordings from their brains. My group asked patients to let us study their patterns of neural activity while they spoke words.

The hardware involved is called electrocorticography (ECoG). The electrodes in an ECoG system don’t penetrate the brain but lie on the surface of it. Our arrays can contain several hundred electrode sensors, each of which records from thousands of neurons. So far, we’ve used an array with 256 channels. Our goal in those early studies was to discover the patterns of cortical activity when people speak simple syllables. We asked volunteers to say specific sounds and words while we recorded their neural patterns and tracked the movements of their tongues and mouths. Sometimes we did so by having them wear colored face paint and using a computer-vision system to extract the kinematic gestures; other times we used an ultrasound machine positioned under the patients’ jaws to image their moving tongues.

The system starts with a flexible electrode array that’s draped over the patient’s brain to pick up signals from the motor cortex. The array specifically captures movement commands intended for the patient’s vocal tract. A port affixed to the skull guides the wires that go to the computer system, which decodes the brain signals and translates them into the words that the patient wants to say. His answers then appear on the display screen. Chris Philpot

We used these systems to match neural patterns to movements of the vocal tract. At first we had a lot of questions about the neural code. One possibility was that neural activity encoded directions for particular muscles, and the brain essentially turned these muscles on and off as if pressing keys on a keyboard. Another idea was that the code determined the velocity of the muscle contractions. Yet another was that neural activity corresponded with coordinated patterns of muscle contractions used to produce a certain sound. (For example, to make the “aaah” sound, both the tongue and the jaw need to drop.) What we discovered was that there is a map of representations that controls different parts of the vocal tract, and that together the different brain areas combine in a coordinated manner to give rise to fluent speech.

The role of AI in today’s neurotech

Our work depends on the advances in artificial intelligence over the past decade. We can feed the data we collected about both neural activity and the kinematics of speech into a neural network, then let the machine-learning algorithm find patterns in the associations between the two data sets. It was possible to make connections between neural activity and produced speech, and to use this model to produce computer-generated speech or text. But this technique couldn’t train an algorithm for paralyzed people because we’d lack half of the data: We’d have the neural patterns, but nothing about the corresponding muscle movements.

The smarter way to use machine learning, we realized, was to break the problem into two steps. First, the decoder translates signals from the brain into intended movements of muscles in the vocal tract, then it translates those intended movements into synthesized speech or text.

We call this a biomimetic approach because it copies biology; in the human body, neural activity is directly responsible for the vocal tract’s movements and is only indirectly responsible for the sounds produced. A big advantage of this approach comes in the training of the decoder for that second step of translating muscle movements into sounds. Because those relationships between vocal tract movements and sound are fairly universal, we were able to train the decoder on large data sets derived from people who weren’t paralyzed.
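To make the two-step split concrete, here is a toy sketch. It is not the UCSF team’s decoder: the linear neural-to-movement map, the two articulator dimensions (tongue raise and jaw drop), the sound prototypes, the class name `TwoStageDecoder`, and every number are hypothetical assumptions, and nearest-prototype matching stands in for whatever second-stage model the team actually uses.

```python
# Toy sketch of a two-stage ("biomimetic") speech decoder.
# Every weight, dimension, and prototype here is a hypothetical example.
from typing import Dict, List, Sequence


def matvec(weights: Sequence[Sequence[float]], x: Sequence[float]) -> List[float]:
    """Multiply a weight matrix (given as a list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


class TwoStageDecoder:
    def __init__(
        self,
        neural_to_kinematics: Sequence[Sequence[float]],
        sound_prototypes: Dict[str, Sequence[float]],
    ) -> None:
        # Stage 1 (hypothetical): linear map from neural features to intended
        # vocal-tract movements; this part must be fit to the participant.
        self.neural_to_kinematics = neural_to_kinematics
        # Stage 2 (hypothetical): one articulator configuration per sound. Since
        # movement-to-sound relationships are broadly shared, this stage could be
        # fit on recordings from people who are not paralyzed.
        self.sound_prototypes = sound_prototypes

    def intended_movements(self, neural_features: Sequence[float]) -> List[float]:
        """Step 1: brain signals -> intended vocal-tract movements."""
        return matvec(self.neural_to_kinematics, neural_features)

    def decode(self, neural_features: Sequence[float]) -> str:
        """Step 2: intended movements -> the sound with the closest prototype."""
        movements = self.intended_movements(neural_features)
        return min(
            self.sound_prototypes,
            key=lambda sound: squared_distance(movements, self.sound_prototypes[sound]),
        )


# Articulator space: [tongue_raise, jaw_drop]. For "aa", tongue and jaw both drop.
decoder = TwoStageDecoder(
    neural_to_kinematics=[[1.0, -0.5], [0.0, 1.0]],
    sound_prototypes={"aa": [-1.0, 1.0], "d": [1.0, 0.0]},
)
print(decoder.decode([-0.5, 1.0]))  # aa
print(decoder.decode([1.0, 0.0]))   # d
```

Keeping the stages separate is what lets only the first map depend on the participant’s own neural data.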

A clinical trial to test our speech neuroprosthetic

The next big challenge was to bring the technology to the people who could really benefit from it.

The National Institutes of Health (NIH) is funding our pilot trial, which began in 2021. We already have two paralyzed volunteers with implanted ECoG arrays, and we hope to enroll more in the coming years. The primary goal is to improve their communication, and we’re measuring performance in terms of words per minute. An average adult typing on a full keyboard can type 40 words per minute, with the fastest typists reaching speeds of more than 80 words per minute.

Edward Chang was inspired to develop a brain-to-speech system by the patients he encountered in his neurosurgery practice. Barbara Ries

We think that tapping into the speech system can provide even better results. Human speech is much faster than typing: An English speaker can easily say 150 words in a minute. We’d like to enable paralyzed people to communicate at a rate of 100 words per minute. We have a lot of work to do to reach that goal, but we think our approach makes it a feasible target.

The implant procedure is routine. First the surgeon removes a small portion of the skull; next, the flexible ECoG array is gently placed across the surface of the cortex. Then a small port is fixed to the skull bone and exits through a separate opening in the scalp. We currently need that port, which attaches to external wires to transmit data from the electrodes, but we hope to make the system wireless in the future.

We’ve considered using penetrating microelectrodes, because they can record from smaller neural populations and may therefore provide more detail about neural activity. But the current hardware isn’t as robust and safe as ECoG for clinical applications, especially over many years.

Another consideration is that penetrating electrodes typically require daily recalibration to turn the neural signals into clear commands, and research on neural devices has shown that speed of setup and performance reliability are key to getting people to use the technology. That’s why we’ve prioritized stability in creating a “plug and play” system for long-term use. We conducted a study looking at the variability of a volunteer’s neural signals over time and found that the decoder performed better if it used data patterns across multiple sessions and multiple days. In machine-learning terms, we say that the decoder’s “weights” carried over, creating consolidated neural signals.
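Here is a minimal sketch of what “weights carrying over” can mean in practice: instead of recalibrating from scratch each day, the decoder below keeps accumulating statistics from every session. It is a deliberately simple nearest-centroid classifier, a hypothetical stand-in for the neural-network decoder described here; the class name `PooledCentroidDecoder`, the feature vectors, and the word labels are illustrative assumptions.

```python
# Hypothetical nearest-centroid word decoder whose parameters persist across sessions.
from typing import Dict, List, Sequence, Tuple

Trial = Tuple[str, Sequence[float]]  # (attempted word, neural feature vector)


class PooledCentroidDecoder:
    def __init__(self, n_features: int) -> None:
        self.n_features = n_features
        self.sums: Dict[str, List[float]] = {}
        self.counts: Dict[str, int] = {}

    def add_session(self, trials: Sequence[Trial]) -> None:
        """Fold one session's labeled trials into the running per-word sums."""
        for word, features in trials:
            if len(features) != self.n_features:
                raise ValueError("feature vector has the wrong length")
            acc = self.sums.setdefault(word, [0.0] * self.n_features)
            for i, value in enumerate(features):
                acc[i] += value
            self.counts[word] = self.counts.get(word, 0) + 1

    def centroid(self, word: str) -> List[float]:
        """Mean feature vector for a word over all sessions seen so far."""
        return [total / self.counts[word] for total in self.sums[word]]

    def predict(self, features: Sequence[float]) -> str:
        """Return the word whose pooled centroid is nearest to the features."""
        def dist(word: str) -> float:
            return sum((f - c) ** 2 for f, c in zip(features, self.centroid(word)))
        return min(self.sums, key=dist)


decoder = PooledCentroidDecoder(n_features=2)
decoder.add_session([("hungry", [1.0, 0.0]), ("thirsty", [0.0, 1.0])])  # day 1
decoder.add_session([("hungry", [0.5, 0.0]), ("thirsty", [0.0, 0.5])])  # day 2
print(decoder.centroid("hungry"))   # [0.75, 0.0]
print(decoder.predict([0.7, 0.1]))  # hungry
```

Because day-2 trials are added to, rather than replacing, day-1 statistics, the model needs no fresh calibration before each session. The article does not say whether the team’s training works this way.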

Because our paralyzed volunteers can’t speak while we watch their brain patterns, we asked our first volunteer to try two different approaches. He started with a list of 50 words that are handy for daily life, such as “hungry,” “thirsty,” “please,” “help,” and “computer.” During 48 sessions over several months, we sometimes asked him to just imagine saying each of the words on the list, and sometimes asked him to overtly try to say them. We found that attempts to speak generated clearer brain signals and were sufficient to train the decoding algorithm. Then the volunteer could use those words from the list to generate sentences of his own choosing, such as “No I am not thirsty.”
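The article doesn’t say how decoded words are strung into sentences. One common technique, sketched here under that assumption, treats each speech attempt as producing a probability for every vocabulary word. It combines those with a language model of which word tends to follow which, then picks the most probable sentence with the Viterbi algorithm. The three-word vocabulary, the function name `viterbi_sentence`, and all probabilities are hypothetical.

```python
# Hypothetical sketch: combine per-attempt word probabilities with a bigram
# language model using Viterbi decoding. All probabilities are made up.
import math
from typing import Dict, List, Sequence, Tuple


def viterbi_sentence(
    attempts: Sequence[Dict[str, float]],
    start: Dict[str, float],
    bigram: Dict[Tuple[str, str], float],
) -> List[str]:
    """Most probable word sequence given classifier outputs and a bigram model.

    attempts[t][w] is the classifier's probability that attempt t was word w;
    start[w] is P(first word = w); bigram[(a, b)] is P(next word = b | word = a).
    Log-probabilities are summed to avoid numerical underflow.
    """
    vocab = list(attempts[0])
    # best[w] = (log-probability of the best path ending in w, that path)
    best = {w: (math.log(start[w]) + math.log(attempts[0][w]), [w]) for w in vocab}
    for probs in attempts[1:]:
        new_best = {}
        for w in vocab:
            score, path = max(
                ((s + math.log(bigram[(prev, w)]), p) for prev, (s, p) in best.items()),
                key=lambda item: item[0],
            )
            new_best[w] = (score + math.log(probs[w]), path + [w])
        best = new_best
    return max(best.values(), key=lambda item: item[0])[1]


attempts = [
    {"i": 0.8, "am": 0.1, "thirsty": 0.1},
    {"i": 0.05, "am": 0.45, "thirsty": 0.5},  # ambiguous attempt
    {"i": 0.05, "am": 0.05, "thirsty": 0.9},
]
start = {"i": 0.6, "am": 0.2, "thirsty": 0.2}
bigram = {
    ("i", "i"): 0.1, ("i", "am"): 0.8, ("i", "thirsty"): 0.1,
    ("am", "i"): 0.1, ("am", "am"): 0.1, ("am", "thirsty"): 0.8,
    ("thirsty", "i"): 0.6, ("thirsty", "am"): 0.3, ("thirsty", "thirsty"): 0.1,
}
greedy = [max(p, key=p.get) for p in attempts]
print(greedy)                                     # ['i', 'thirsty', 'thirsty']
print(viterbi_sentence(attempts, start, bigram))  # ['i', 'am', 'thirsty']
```

Picking each attempt’s top word on its own yields “i thirsty thirsty”; weighing in how words follow one another recovers “i am thirsty.”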

We’re now pushing to expand to a broader vocabulary. To make that work, we need to continue to improve the current algorithms and interfaces, but I am confident those improvements will happen in the coming months and years. Now that the proof of principle has been established, the goal is optimization. We can focus on making our system faster, more accurate, and, most important, safer and more reliable. Things should move quickly now.

Probably the biggest breakthroughs will come if we can get a better understanding of the brain systems we’re trying to decode, and how paralysis alters their activity. We’ve come to realize that the neural patterns of a paralyzed person who can’t send commands to the muscles of their vocal tract are very different from those of an epilepsy patient who can. We’re attempting an ambitious feat of BMI engineering while there is still lots to learn about the underlying neuroscience. We believe it will all come together to give our patients their voices back.

Reference: https://ift.tt/uq5GSc6

Friday, October 28, 2022

Will Elon Musk Be Able to Keep Twitter’s Advertisers Happy?

Elon Musk Starts Putting His Imprint on Twitter

Elon Musk Moves to Form Content Moderation Council. Here’s What We Know.

Here’s How Twitter Users Are Reacting to Musk’s Takeover

Trump Says He’s ‘Very Happy’ About Musk’s Twitter Takeover

Thursday, October 27, 2022

Amazon Earnings: Return to Profitability But Slow Growth Signaled Ahead

How This Startup Cut Production Costs of Millimeter Wave Power Amplifiers

Diana Gamzina is on a mission to drastically reduce the price of millimeter-wave power amplifiers. The vacuum-electronics devices are used for communication with distant space probes and for other applications that need the highest data rates available.

The amplifiers can cost as much as US $1 million apiece because they’re made using costly, high-precision manufacturing and manual assembly. Gamzina’s startup, Elve, is using advanced materials and new manufacturing technologies to lower the unit price.

It can take up to a year to produce one of the amplifiers using conventional manufacturing processes, but Elve is already making about one per week, Gamzina says. Elve’s process enables sales at about 10 percent of the usual price, making large-volume markets more accessible.

Launched in June 2020, the startup produces affordable systems for wireless connections that deliver the quality of optical fiber, or what Gamzina calls elvespeed connectivity. The company’s name, she says, refers to elves (emission of light and very low frequency perturbations due to electromagnetic pulse sources), an upper-atmosphere phenomenon. Elves can be seen as a flat ring glowing in Earth’s upper atmosphere. They appear for just a few milliseconds and can grow to be up to 320 kilometers wide.

Based in Davis, Calif., Elve employs 12 people as well as a handful of consultants and advisors.

For her work with amplifiers, Gamzina, an IEEE senior member, was recognized with this year’s Vacuum Electronics Young Scientist Award from the IEEE Electron Devices Society. She received the award in April at the IEEE International Vacuum Electronics Conference, in Monterey, Calif.

“Dr. Gamzina’s innovation and contributions to the industry are remarkable,” IEEE Member Jack Tucek, general chair of the conference, said in a news release about the award.

Interactions between millimeter-wave signals and electrons

In addition to running her company, Gamzina works as a staff scientist at the SLAC National Accelerator Laboratory, in Menlo Park, Calif., a U.S. Department of Energy national lab operated by Stanford University. It was in this role that she began speaking to industry representatives about how to expand the market for high-performance millimeter-wave amplifiers.

From those discussions, she found that lowering the price was key.

“Customers are paying between $250,000 and $1 million for each individual device,” she says. “When you hear these numbers, you realize why people don’t think this price is anywhere close to affordable for growing the market.”

Elve’s millimeter-wave power amplifier system weighs 4 kilograms, measures 23 centimeters by 15 cm by 8 cm, and is powered by a 28-volt DC input bus. The heart of the amplifier system is a set of traveling-wave tubes. TWTs are a subset of vacuum electronics that can amplify electromagnetic signals by more than a hundredfold over a wide bandwidth of frequencies. They’re commonly used for communications and radar imaging applications, Gamzina says.
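Amplifier gain is usually quoted in decibels. Reading “more than a hundredfold” as a power ratio (an assumption; the article doesn’t specify), a gain of 100 corresponds to 20 dB; read as a voltage ratio, it would be 40 dB. The helper names below are illustrative.

```python
import math


def power_gain_db(power_ratio: float) -> float:
    """Gain in decibels for a ratio of output power to input power."""
    if power_ratio <= 0:
        raise ValueError("power ratio must be positive")
    return 10.0 * math.log10(power_ratio)


def voltage_gain_db(voltage_ratio: float) -> float:
    """Gain in decibels for a ratio of output to input voltage (equal impedances)."""
    if voltage_ratio <= 0:
        raise ValueError("voltage ratio must be positive")
    return 20.0 * math.log10(voltage_ratio)


print(power_gain_db(100.0))    # 20.0
print(voltage_gain_db(100.0))  # 40.0
```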

The TWT takes a millimeter-wave signal and makes it “interact with a high-energy electron beam so that the signal steals the energy from the electron beam. That’s how it gets amplified,” Gamzina says. “In some ways, it’s a simple concept, but it requires excellence in RF design, manufacturing, vacuum science, electron emission, and thermal management to make it all work.”

Elve’s millimeter-wave amplifiers enable communication with satellite networks and create long-distance ground-to-ground links.

“This allows enormous amounts of data to be sent, leading to higher data rates that are comparable to fiber or laser-type communication devices,” Gamzina says. “Another advantage is that the amplifier can operate in most inclement weather.”

The initial market for Elve’s amplifiers is the communications field, she says, especially for backhaul: the connections between base stations and the core network infrastructure.

“Our ultimate goal is to be part of the terrestrial market such that the amplifiers are installed on cellphone towers,” Gamzina says, “enabling high-data-rate communication in remote and rural locations all over the world.”

The company plans to develop millimeter-wave amplifiers for imaging and radar applications as well.

Elve’s power amplifier containers house a traveling-wave tube, electronic power conditioner, and a cooling system. Elve

Building on research done at the SLAC Lab

Gamzina’s work at Elve is related to research she is conducting at the SLAC lab as well as previous work she did as a development engineer with the millimeter-wave research group at the University of California, Davis. There she studied vacuum devices that operate at terahertz frequencies for use as power sources in particle accelerators, broadcast transmitters, industrial heating, radar systems, and communication satellites. She also led research programs in additive manufacturing techniques. She holds three U.S. patents related to the manufacture of vacuum-electronics devices.

To learn how to launch a startup, she took a class on the subject at Stanford and attended a 10-week training course for founders offered by 4thly, a global startup accelerator. She also says she learned a few things as a youngster while helping out at her father’s hydraulic equipment manufacturing company.

Raising funds to keep a business afloat is difficult for many startups, but that hasn’t been the case for Elve, Gamzina says.

“We have good market traction, a good product, and a good team,” she says. “There’s been a lot of interest in what we’re doing.”

What has been a concern, she says, is that the company is growing so rapidly that it might be difficult to scale up production.

“Making hundreds or thousands of these has been a challenge to even comprehend,” she says. “But we are ready to go from the state we’re in to the next big jump.”

Reference: https://ift.tt/8OVKn5k

Why is Hydroelectricity So Green, and Yet Unfashionable?

I live in Manitoba, a province of Canada where all but a tiny fraction of electricity is generated from the potential energy of water. Unlike in British Columbia and Quebec, where generation relies on huge dams, our dams on the Nelson River are low, with hydraulic heads of no more than 30 meters, which create only small reservoirs. Of course, the potential energy is the product of mass, gravitational acceleration, and height, but the dams’ modest height is readily compensated for by a large mass of water, as the mighty river flowing out of Lake Winnipeg continues its course to Hudson Bay.
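The trade-off between height and mass can be made concrete with the standard hydropower relation P = ηρgQh, where Q is the volume of water passing through the turbines each second, h is the head, and η is the plant’s efficiency. The sketch below compares two hypothetical plants; the flows, heads, and the 90 percent efficiency are illustrative assumptions, not figures for the Nelson River dams.

```python
# Hydroelectric power from flow and head: P = efficiency * rho * g * Q * h.
WATER_DENSITY_KG_PER_M3 = 1000.0
GRAVITY_M_PER_S2 = 9.81  # gravitational acceleration, not the gravitational constant


def hydro_power_mw(flow_m3_per_s: float, head_m: float, efficiency: float = 0.9) -> float:
    """Electrical output in megawatts for a given flow rate and hydraulic head."""
    watts = efficiency * WATER_DENSITY_KG_PER_M3 * GRAVITY_M_PER_S2 * flow_m3_per_s * head_m
    return watts / 1e6


low_head = hydro_power_mw(flow_m3_per_s=3000.0, head_m=30.0)   # big river, low dam
high_head = hydro_power_mw(flow_m3_per_s=600.0, head_m=150.0)  # small river, tall dam
print(round(low_head), round(high_head))  # 795 795
```

A dam one-fifth as tall delivers the same output if five times as much water flows through it.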

You would think this is about as “green” as it can get, but in 2022 that would be a mistake. There is no end of gushing about China’s cheap solar panels—but when was the last time you saw a paean to hydroelectricity?

Construction of large dams began before World War II. The United States got the Grand Coulee on the Columbia River, the Hoover Dam on the Colorado, and the dams of the Tennessee Valley Authority. After the war, construction of large dams moved to the Soviet Union, Africa, South America (Brazil’s Itaipu, at its completion in 1984 the world’s largest dam, with 14 gigawatts capacity), and Asia, where it culminated in China’s unprecedented effort. China now has three of the world’s six largest hydroelectric stations: Three Gorges, 22.5 GW (the largest in the world); Xiluodu, 13.86 GW; and Wudongde, 10.2 GW. Baihetan on the Jinsha River should soon begin full-scale operation and become the world’s second-largest station (16 GW).

But China’s outsize drive for hydroelectricity is unique. By the 1990s, large hydro stations had lost their green halo in the West and come to be seen as environmentally undesirable. They are blamed for displacing populations, disrupting the flow of sediments and the migration of fish, destroying natural habitat and biodiversity, degrading water quality, and for the decay of submerged vegetation and the consequent release of methane, a greenhouse gas. There is thus no longer a place for Big Hydro in the pantheon of electric greenery. Instead, that pure status is now reserved above all for wind and solar. This ennoblement is strange, given that wind projects require enormous quantities of embodied energy in the form of steel for towers, plastics for blades, and concrete for foundations. The manufacture of solar panels involves environmental costs from mining, waste disposal, and carbon emissions.

And hydro still matters more than any other form of renewable generation. In 2020, the world’s hydro stations produced 75 percent more electricity than wind and solar combined (4,297 versus 2,447 terawatt-hours) and accounted for 16 percent of all global generation (compared with nuclear electricity’s 10 percent). The share rises to about 60 percent in Canada and 97 percent in Manitoba. And some less affluent countries in Africa and Asia are still determined to build more such stations. The largest projects now under construction outside China are the Grand Ethiopian Renaissance Dam on the Blue Nile (6.55 GW) and Pakistan’s Diamer-Bhasha (4.5 GW) and Dasu (4.3 GW) on the Indus.
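The comparison in this paragraph can be checked directly from the quoted terawatt-hour figures. The short Python sketch below also backs out a rough global total from the 16 percent share; because that share is rounded, the derived total is only approximate.

```python
# 2020 figures quoted above, in terawatt-hours
HYDRO_TWH = 4297
WIND_SOLAR_TWH = 2447
HYDRO_SHARE = 0.16  # hydro's (rounded) share of global generation

def percent_more(a: float, b: float) -> float:
    """How many percent larger `a` is than `b`."""
    return (a / b - 1) * 100

print(f"hydro vs wind + solar: {percent_more(HYDRO_TWH, WIND_SOLAR_TWH):.1f}% more")
# Dividing by the rounded 16 percent share gives a rough global total.
print(f"implied global generation: ~{HYDRO_TWH / HYDRO_SHARE:,.0f} TWh")
```

The ratio works out to about 75.6 percent, consistent with the "75 percent more" in the text.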

I never understood why dams have suffered such a reversal of fortune. There is no need to build megastructures, with their inevitable undesirable effects. And everywhere in the world there are still plenty of opportunities to develop modest projects that, taken together, could not only provide excellent sources of clean electricity but also serve as long-term stores of energy, as reservoirs for drinking water and irrigation, and as sites for recreation and aquaculture.

I am glad to live in a place that is reliably supplied with electricity generated by low-head turbines powered by flowing water. Manitoba’s six stations on the Nelson River have a combined capacity slightly above 4 GW. Just try to get the equivalent here from solar in January, when the snow is falling and the sun barely rises above the horizon!

This article appears in the November 2022 print issue as “Hydropower, the Forgotten Renewable.”

Reference: https://ift.tt/28YuSsU

Apple clarifies security update policy: Only the latest OSes are fully patched

The default wallpaper for macOS 11 Big Sur. (credit: Apple)

Earlier this week, Apple released a document clarifying its terminology and policies around software upgrades and updates. Most of the information in the document isn't new, but the company did provide one clarification about its update policy that it hadn't made explicit before: Despite providing security updates for multiple versions of macOS and iOS at any given time, Apple says that only devices running the most recent major operating system versions should expect to be fully protected.

Throughout the document, Apple uses "upgrade" to refer to major OS releases that can add big new features and user interface changes, and "update" to refer to smaller but more frequently released patches that mostly fix bugs and address security problems (though these can occasionally enable minor feature additions or improvements as well). So moving from iOS 15 to iOS 16, or from macOS 12 to macOS 13, is an upgrade. Moving from iOS 16.0 to 16.1, or from macOS 12.5 to 12.6 or 12.6.1, is an update.
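Apple’s terminology reduces to a simple rule on version numbers. The hypothetical helper below sketches it in Python, under the assumption that the first dotted component is the major version. That holds for iOS and for macOS 11 onward, but not for the older macOS 10.x scheme, where the second component changed between major releases.

```python
def classify_change(old: str, new: str) -> str:
    """Label a move between two OS versions as an "upgrade" or an "update".

    Hypothetical helper. Assumes the first dotted component is the major
    version, which holds for iOS and for macOS 11 and later.
    """
    old_parts = [int(p) for p in old.split(".")]
    new_parts = [int(p) for p in new.split(".")]
    if new_parts[0] > old_parts[0]:
        return "upgrade"  # new major release, e.g. iOS 15 -> iOS 16
    if new_parts[0] == old_parts[0] and new_parts > old_parts:
        return "update"   # same major release, e.g. 12.6 -> 12.6.1
    raise ValueError(f"{new} is not newer than {old}")

for old, new in [("15", "16"), ("16.0", "16.1"), ("12.5", "12.6"), ("12.6", "12.6.1")]:
    print(f"{old} -> {new}: {classify_change(old, new)}")
```

Python compares lists element by element, so `[12, 6, 1] > [12, 6]` is true, which lets a third-level patch such as 12.6.1 count as newer than 12.6.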

"Because of dependency on architecture and system changes to any current version of macOS (for example, macOS 13)," the document reads, "not all known security issues are addressed in previous versions (for example, macOS 12)."

Elon Musk Reaches Out to Advertisers Ahead of Deadline for Twitter Deal

Chip Makers, Once in High Demand, Confront Sudden Challenges

Wednesday, October 26, 2022

Ford Lost Money in the Third Quarter as Costs Surged

Feds say Ukrainian man running malware service amassed 50M unique credentials

(credit: Getty Images | Charles O'Rear)

Federal prosecutors have charged a 26-year-old Ukrainian national with operating a malware service that was responsible for stealing sensitive data from more than 2 million individuals around the world.

Prosecutors in Texas said on Tuesday that Mark Sokolovsky, 26, of Ukraine helped operate “Raccoon,” an info stealer program that worked using a model known as MaaS, short for malware-as-a-service. In exchange for about $200 per month in cryptocurrency, Sokolovsky and others behind Raccoon supplied customers with the malware, digital infrastructure, and technical support. Customers would then use the service to infect targets with the malware, which would surreptitiously harvest credentials for email and bank accounts, credit cards, cryptocurrency wallets, and other private information.

First seen in April 2019, Raccoon was able to extract sensitive data from a wide range of applications, including 29 separate Chromium-based browsers, Mozilla-based apps, and cryptocurrency wallets from Exodus and Jaxx. Written in C++, the malware can also take screenshots. Once Raccoon has extracted all data from an infected machine, it uninstalls and deletes all traces of itself.

How Is Your Company Responding to Labor Organizing? We Want to Hear.

“Too much and too soon”—Steven Sinofsky looks back at Windows 8, 10 years later

A billboard showing Windows 8 in Times Square in New York at the Microsoft Store in October 2012. (credit: Personal photo from Steven Sinofsky)

On October 26, 2012, Microsoft released Windows 8, a hybrid tablet/desktop operating system that took bold risks but garnered mixed reviews. Ten years later, we've caught up with former Windows Division President Steven Sinofsky to explore how Windows 8 got started, how it predicted several current trends in computing, and how he feels about the OS in retrospect.

In 2011, PC sales began to drop year over year in a trend that alarmed the industry. Simultaneously, touch-based mobile computing on smartphones and tablets dramatically rose in popularity. In response, Microsoft undertook the development of a flexible operating system that would ideally scale from mobile to desktop seamlessly. Sinofsky accepted the challenge and worked with many others, including Julie Larson-Green and Panos Panay, then head of the Surface team, to make it happen.

Windows 8 represented the most dramatic transformation of the Windows interface since Windows 95. While that operating system introduced the Start menu, Windows 8 removed that iconic menu in favor of a Start screen filled with "live tiles" that functioned well on touchscreen computers like the purpose-built Microsoft Surface but frustrated desktop PC users. The change drew heavy pushback from the press, and PC sales continued to decline.

Tuesday, October 25, 2022

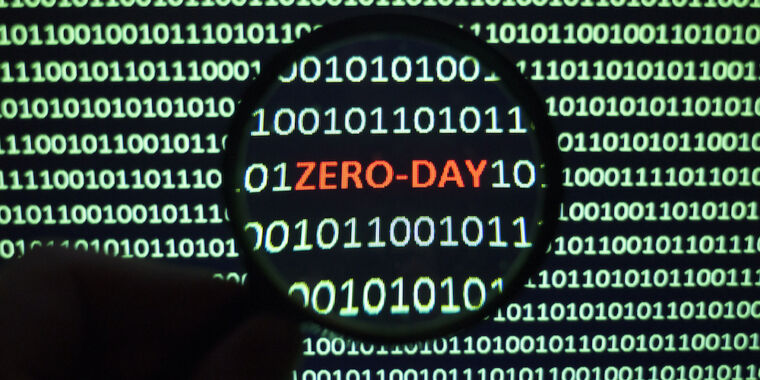

Apple rushes out patch for iPhone and iPad 0-day reported by anonymous source

(credit: Getty Images)

Apple on Monday patched a high-severity zero-day vulnerability that gives attackers the ability to remotely execute malicious code that runs with the highest privileges inside the operating system kernel of fully up-to-date iPhones and iPads.

In an advisory, Apple said that CVE-2022-42827, as the vulnerability is tracked, “may have been actively exploited,” a phrase that is industry jargon for a previously unknown vulnerability being exploited in the wild. The memory-corruption flaw is the result of an “out-of-bounds write,” meaning Apple software was writing code or data outside the bounds of a buffer. Hackers often exploit such vulnerabilities so they can funnel malicious code into sensitive regions of an OS and then cause it to execute.
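To make "out-of-bounds write" concrete, the Python sketch below simulates a small region of memory in which an 8-byte buffer sits directly in front of unrelated data. It is a conceptual illustration with made-up names and layout, not a model of the actual iOS kernel flaw: an unchecked copy that trusts the caller’s offset silently overwrites the neighboring bytes, while a bounds-checked version refuses the same write.

```python
# Conceptual layout (hypothetical): bytes 0-7 are a buffer, bytes 8-15 hold
# unrelated data that the buffer's owner must never touch.
BUF_START, BUF_LEN = 0, 8
SECRET_START = BUF_START + BUF_LEN

def fresh_memory() -> bytearray:
    mem = bytearray(16)
    mem[SECRET_START:] = b"KERNSTAT"  # stand-in for adjacent sensitive state
    return mem

def unchecked_write(mem: bytearray, offset: int, data: bytes) -> None:
    # Bug: trusts offset and length, so a long write runs past the buffer's end.
    for i, byte in enumerate(data):
        mem[BUF_START + offset + i] = byte

def checked_write(mem: bytearray, offset: int, data: bytes) -> None:
    # Fix: refuse any write that would leave [BUF_START, BUF_START + BUF_LEN).
    if offset < 0 or offset + len(data) > BUF_LEN:
        raise IndexError("write would exceed buffer bounds")
    for i, byte in enumerate(data):
        mem[BUF_START + offset + i] = byte

mem = fresh_memory()
unchecked_write(mem, offset=4, data=b"AAAAAAAA")  # 8 bytes where only 4 fit
print(bytes(mem[SECRET_START:]))  # b'AAAASTAT': the neighboring data is corrupted

safe = fresh_memory()
try:
    checked_write(safe, offset=4, data=b"AAAAAAAA")
except IndexError as err:
    print("rejected:", err)
```

Python itself would stop a write past the end of the whole `bytearray`; the danger the sketch models is a write that stays inside valid memory but leaves the buffer it was meant for, which is what lets attackers corrupt adjacent data.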

The vulnerability was reported by an “anonymous researcher,” Apple said, without elaborating.

Russia’s Unsupported ‘dirty bomb’ claims reverberate in right-wing U.S. communities.

Build a Passive Radar With Software-Defined Radio

Normally, when it comes to radio-related projects, my home of New York City is a terrible place to be. If we could see and hear radio waves, it would make an EDM rave feel like a sensory deprivation tank. Radio interference plagues the metropolis. But for once, I realized I could use this kaleidoscope of electromagnetism to my advantage—with a passive radar station.

Unlike conventional radar, passive radar doesn’t send out pulses of its own and watch for reflections. Instead, it uses ambient signals. A reference antenna picks up a signal from, say, a cell tower, while a surveillance antenna is tuned to the same frequency. The reference and surveillance signals are compared. If a reflection from an object is detected, then the time it took to arrive at the surveillance antenna gives a range. Frequency shifts indicate the object’s speed via the Doppler effect.
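The core of the range measurement is finding the delay at which the surveillance channel best matches the reference. The pure-Python sketch below shows that idea with cross-correlation on synthetic data; it is not KrakenRF’s implementation. The 2.4-MHz sample rate, the noise levels, the 20-sample echo delay, and the 500-MHz carrier are all assumptions, and real passive radars work on complex I/Q samples, cancel the strong direct-path signal first, and search delay and Doppler together. Note that the delay gives the bistatic range difference (the extra distance the echo travels compared with the direct path), not the straight-line distance to the aircraft.

```python
import random

C = 299_792_458.0  # speed of light, m/s

def make_signals(n, delay, echo_gain, seed=1):
    """Synthetic reference and surveillance channels (illustrative only).

    The reference is Gaussian noise, a rough stand-in for a broadband digital
    TV signal. The surveillance channel holds a weak copy of the reference
    delayed by `delay` samples, as an aircraft echo would be, plus noise.
    """
    rng = random.Random(seed)
    ref = [rng.gauss(0.0, 1.0) for _ in range(n)]
    surv = [rng.gauss(0.0, 0.5) for _ in range(n)]
    for i in range(delay, n):
        surv[i] += echo_gain * ref[i - delay]
    return ref, surv

def estimate_delay(ref, surv, max_lag):
    """Lag (in samples) at which the surveillance channel best matches the reference."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        score = sum(surv[i] * ref[i - lag] for i in range(lag, len(ref)))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

def bistatic_range_m(lag_samples, sample_rate_hz):
    """Extra path length of the echo: transmitter -> target -> receiver, minus the direct path."""
    return lag_samples / sample_rate_hz * C

def bistatic_range_rate_mps(doppler_hz, carrier_hz):
    """Rate of change of that path length; a positive Doppler shift means it is shrinking."""
    return -doppler_hz * C / carrier_hz

FS = 2.4e6  # assumed sample rate, Hz
ref, surv = make_signals(n=4096, delay=20, echo_gain=0.3)
lag = estimate_delay(ref, surv, max_lag=50)
print(f"delay {lag} samples -> bistatic range difference {bistatic_range_m(lag, FS):.0f} m")
print(f"100 Hz Doppler at 500 MHz -> {bistatic_range_rate_mps(100.0, 500e6):.1f} m/s")
```

Each sample of delay at 2.4 MHz corresponds to about 125 meters of extra path, which sets the coarse range resolution of this simple approach.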

I was interested in passive radar because I wanted to put a new software defined radio (SDR) through its paces. I’ve checked in with amateur SDR developments for IEEE Spectrum since 2006, when SDR became something remotely within a maker’s budget. The biggest leap forward happened in 2012 when it was discovered that USB stick TV tuners using the RTL2832U demodulator chip could be tapped to make very cheap but effective SDR receivers. An explosion of interest in SDRs followed. Building off the demand stimulated by this activity, a number of manufacturers have started making premium, but still relatively cheap, SDRs. This includes RTLx-based USB sticks built with better supporting components and designs versus the original TV tuners, and completely new receivers such as the RSPDx. Some of these new SDRs can transmit as well as receive, such as the HackRF One or Lime Mini.

I was researching a return to SDR with one of these devices when I spotted the Crowd Supply campaign for the US $399 KrakenSDR. It’s receive only, but it boasts not one or two tuners, but five! The tuners are based on the R820T2/R860 chip used in RTL-SDR receivers, and they are combined with hardware that automatically keeps them coherently synchronized.

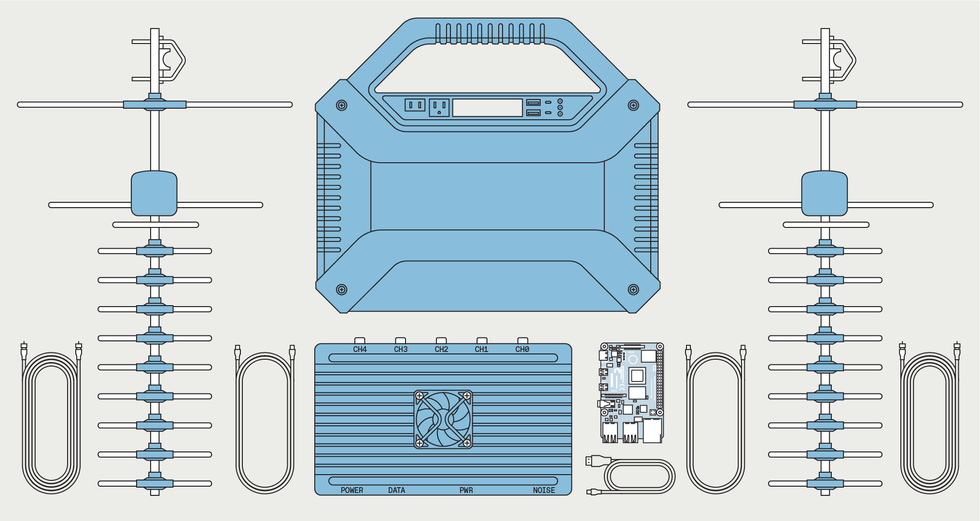

Both the KrakenRF SDR and the Raspberry Pi 4 [middle bottom] require a fair amount of power via USB-C cables, so a battery pack [top middle] is needed for mobile operation. The Pi is connected to the SDR via a data link, and in turn the SDR is connected via coaxial cables to two directional TV antennas [right and left]. James Provost

What that means is that you can arrange five antennas in a circle and do radio direction finding by comparing when a transmission arrives at each antenna. Normally, an amateur looking to do direction finding would have to wave around a directional antenna, something difficult to do while, for example, driving a car.
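The direction-finding idea can be sketched with a basic Bartlett beamformer. For a narrowband signal, differences in arrival time across the array show up as phase differences, so you scan candidate bearings and pick the one whose expected phase pattern best matches the measurement. The Python below is an illustrative, noise-free sketch; the 0.4-wavelength array radius and the 72-degree test bearing are assumptions, and KrakenRF’s own software may use different or more sophisticated estimators.

```python
import cmath
import math

def steering_vector(azimuth_deg, n_elems, radius_wl):
    """Relative phase of a plane wave from `azimuth_deg` at each element of a
    uniform circular array whose radius is `radius_wl` wavelengths."""
    az = math.radians(azimuth_deg)
    vec = []
    for k in range(n_elems):
        elem_angle = 2 * math.pi * k / n_elems  # where element k sits on the circle
        # Elements nearer the source see the wavefront earlier: a phase lead.
        phase = 2 * math.pi * radius_wl * math.cos(az - elem_angle)
        vec.append(cmath.exp(1j * phase))
    return vec

def bartlett_doa(snapshot, n_elems, radius_wl, step_deg=1):
    """Scan candidate azimuths and return the one whose steering vector best
    matches the measured phases (a basic Bartlett beamformer)."""
    best_az, best_power = 0, -1.0
    for az in range(0, 360, step_deg):
        a = steering_vector(az, n_elems, radius_wl)
        power = abs(sum(x * s.conjugate() for x, s in zip(snapshot, a))) ** 2
        if power > best_power:
            best_az, best_power = az, power
    return best_az

# Simulated, noise-free measurement of a transmitter at 72 degrees on a
# five-element array with an assumed radius of 0.4 wavelength.
measured = steering_vector(72, n_elems=5, radius_wl=0.4)
print(bartlett_doa(measured, n_elems=5, radius_wl=0.4))  # 72
```

The radius was picked so that neighboring elements sit a little under half a wavelength apart, which avoids ambiguous bearings; this is also why coherent synchronization between the five tuners matters, since any uncorrected phase offset would skew the estimate.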

But it was the KrakenSDR’s ability to do passive radar that really caught my eye as a new capability in lowish-cost radio tech, so I plonked down the money. The next step was to get suitable antennas. The radio’s manufacturer, KrakenRF, recommends directional Yagi TV antennas for two reasons. First, while the KrakenSDR can work with many signals including FM radio or cell-tower transmissions, digital TV signals are better to work with because their energy is spread fairly evenly across the channel’s bandwidth, unlike the narrower and more variable signals from an FM station. (KrakenRF notes that if you must use an FM signal, pick a heavy metal station “since heavy metal is closer to white noise.”) The second reason is that pointing a directional antenna away from the reference source makes it less likely to be swamped by the reference signal.
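Why a noise-like broadband signal makes a better illuminator can be seen from its autocorrelation. A signal that resembles itself only at zero lag produces a single sharp correlation peak, so an echo’s delay is unambiguous, whereas a narrowband signal correlates strongly with shifted copies of itself. The Python sketch below compares Gaussian noise (a rough stand-in for a digital TV signal) with a pure tone, an extreme narrowband case chosen for illustration.

```python
import math
import random

def circular_autocorr(x):
    """Normalized circular autocorrelation of x for every lag 0..len(x)-1."""
    n = len(x)
    energy = sum(v * v for v in x)
    return [sum(x[i] * x[(i + lag) % n] for i in range(n)) / energy for lag in range(n)]

def max_sidelobe(x):
    """Largest |autocorrelation| at any nonzero lag; lower means a sharper delay peak."""
    return max(abs(v) for v in circular_autocorr(x)[1:])

N = 256
rng = random.Random(42)
noise_like = [rng.gauss(0.0, 1.0) for _ in range(N)]            # broadband, DTV-like
tone_like = [math.cos(2 * math.pi * k / 16) for k in range(N)]   # extreme narrowband

print(f"noise-like signal, max sidelobe: {max_sidelobe(noise_like):.2f}")
print(f"pure tone, max sidelobe:         {max_sidelobe(tone_like):.2f}")
```

The tone’s largest sidelobe is essentially as tall as its main peak, so a range search would find many equally good delays; the noise-like signal’s sidelobes stay far below its peak.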

I ordered two small and light $19 TV antennas. Portability was important because I needed to carry my entire setup to and from my apartment building’s roof, where my particular location in an outer borough of the city offered two further advantages. First, the sky above has a regular supply of aircraft landing and taking off from NYC’s airports—and large metal assemblies moving against an uncluttered background are perfect radar test objects. Second, my roof has a line of sight to the Empire State Building, giving me the ability to choose as a reference signal any one of more than half a dozen TV channels transmitted from its spire.

I deployed my rig: a heavy-duty battery pack, the KrakenSDR, cables and antennas, along with a Raspberry Pi 4 to process data from the SDR. KrakenRF offers an SD card image for the Pi that bundles an operating system configured to work with its preinstalled open-source software. It also sets up the Pi as a Wi-Fi access point with a Web interface. I really wish more companies would adopt this approach, as installing open-source software is often a frustrating exercise in trying to replicate the precise system environment it was developed in. Even if you want to ultimately install the KrakenSDR software somewhere other than a Pi, having a known-good setup is useful as a reference, and allows you to test the hardware.

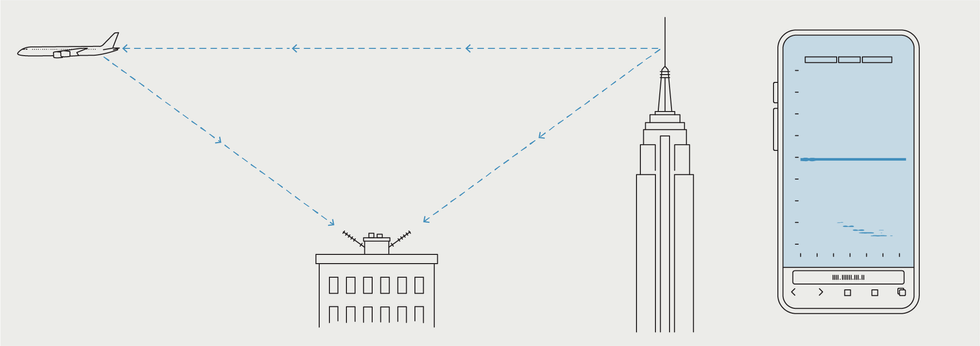

Comparing the time between the arrival of a signal from a broadcast transmitter and the arrival of a reflection of that signal lets you detect objects such as airplanes and estimate their range. Frequency shifts between the two signals let you plot the object’s speed away from or toward the antennas along with its range. The trace on the right shows a plane moving away as it increases its speed. James Provost

I pointed the reference antenna toward the Empire State Building and retreated with the surveillance antenna behind the superstructure of my building’s stairwell. This was in a bid to shield the antenna from the reference signal and myself from the wind. Checking the feed from the antennas using the Web interface’s built-in spectrum analyzer, I discovered I was almost too successful in choosing the Empire State’s transmitter tower as a source of radio illumination: The reference signal was saturating the receiver with the default gain setting of 27 decibels, so I dropped it down to 2.7 dB.
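The gain change described here is larger than the numbers might suggest at first glance, because decibels are logarithmic. A quick Python conversion, using only the two gain values quoted in the text:

```python
def db_to_power_ratio(db: float) -> float:
    """Power ratio corresponding to a change of `db` decibels."""
    return 10 ** (db / 10)

def db_to_amplitude_ratio(db: float) -> float:
    """Voltage (amplitude) ratio for the same change; power scales with amplitude squared."""
    return 10 ** (db / 20)

reduction_db = 27.0 - 2.7  # default gain minus the gain actually used
print(f"{reduction_db:.1f} dB less gain: "
      f"{db_to_power_ratio(reduction_db):.0f}x less power, "
      f"{db_to_amplitude_ratio(reduction_db):.1f}x less amplitude")
```

Cutting the gain by 24.3 dB reduces the received power by a factor of about 269, or the signal amplitude by a factor of about 16.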

But intense illumination means bright reflections. With one hand I pointed the surveillance antenna at the overcast skies, and in the other I held my phone. Gratifyingly, I almost instantly started seeing a blip on the speed-versus-range radar plot, matched a few moments later by the rumble of an approaching jet. (The plot updates about once every 3 seconds.) Because of the strength of the echoes, I was able to raise the signal-cutoff threshold significantly, giving me radar returns uncluttered by noise, and often with multiple aircraft. A win for SDR!

Admittedly, my passive radar setup doesn’t have much everyday value. But as a demonstration of how far and fast inexpensive SDR technology is advancing, it’s a clear signal.

This article appears in the November 2022 print issue as “Passive Radar With the KrakenSDR.”

Reference: https://ift.tt/MN9Fc0o

GM Reports Increased Profits in Third Quarter Amid High Inflation

Shutterstock partners with OpenAI to sell AI-generated artwork, compensate artists

The Shutterstock logo over an image generated by DALL-E. (credit: Shutterstock / OpenAI)

Today, Shutterstock announced that it has partnered with OpenAI to provide AI image synthesis services using the DALL-E API. Once the service is available, the firm says it will allow customers to generate images based on text prompts. Responding to prevailing ethical criticism of AI-generated artwork, Shutterstock also says it will compensate artists "whose works have contributed to develop the AI models."

DALL-E is a commercial deep learning image synthesis product created by OpenAI that can generate new images in almost any artistic style based on text descriptions (called "prompts") written by the person who wants to create the image. If you type "an astronaut riding a horse," DALL-E will create an image of an astronaut on a horse.

DALL-E and other image synthesis models, such as Midjourney and Stable Diffusion, have ignited a passionate response from artists who fear their livelihoods might be threatened by the new technology. In addition, the models "learned" to generate images by analyzing the work of human artists found on the web, without the artists' consent.

Can Alt-Fuel Credits Accelerate EV Adoption?

The United States is home to the world’s largest biofuel program. For the past decade and a half, the U.S. federal government has mandated that the country’s planes, trains, and automobiles run on a fuel blend partly made from corn- and soybean-based biofuels.

It’s a program with decidedly mixed results. Now, it might get a breath of new life.

Earlier this month, Reuters reported that the program could be expanded to cover electricity used to charge electric vehicles. It would be the biggest change in the history of a program that has, in part, failed to live up to its designers’ ambitious dreams.

In one way, the program in question—the Renewable Fuel Standard (RFS)—is a relic from a bygone era. U.S. lawmakers established the RFS in 2005 and expanded it in 2007, well before solar panels, wind turbines, and electric vehicles became the stalwarts of decarbonization they are today.

The RFS, in essence, requires that the nation’s transportation fuel include a certain amount of renewable fuel: petroleum refiners have to blend a set quantity of renewable fuel, such as ethanol derived from corn or cellulose, into the U.S. supply. If a refiner can’t manage it, it can buy credits, called RINs (renewable identification numbers), from a supplier that did.
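The refiner’s choice described above, blend renewable fuel or buy RINs to cover the gap, can be sketched as simple bookkeeping. The Python below is a deliberately simplified, hypothetical model: the class and function names, the single percentage standard, and the numbers are illustrative assumptions, and the real program splits obligations across several nested fuel categories that are not modeled here.

```python
from dataclasses import dataclass

@dataclass
class ObligatedRefiner:
    """Hypothetical, heavily simplified refiner for illustrating RIN compliance."""
    fossil_fuel_gallons: float  # gasoline and diesel the refiner supplies
    rins_generated: float       # credits from renewable fuel it blended itself

def rin_obligation(refiner: ObligatedRefiner, percentage_standard: float) -> float:
    """Credits owed: fuel volume times a single (assumed) percentage standard."""
    return refiner.fossil_fuel_gallons * percentage_standard

def rins_to_buy(refiner: ObligatedRefiner, percentage_standard: float) -> float:
    """Shortfall that must be covered by buying RINs from others (never negative)."""
    return max(0.0, rin_obligation(refiner, percentage_standard) - refiner.rins_generated)

def surplus_rins(refiner: ObligatedRefiner, percentage_standard: float) -> float:
    """Credits beyond the obligation, which the refiner could sell."""
    return max(0.0, refiner.rins_generated - rin_obligation(refiner, percentage_standard))

# Illustrative numbers only: a 10 percent standard and 1 billion gallons supplied.
short = ObligatedRefiner(fossil_fuel_gallons=1e9, rins_generated=8e7)
print(f"{rins_to_buy(short, 0.10):,.0f} RINs to buy")  # 20,000,000 RINs to buy
```

Because surplus credits can be sold, the same bookkeeping creates a market: refiners short of their obligation pay suppliers who exceeded theirs, which is the mechanism an "e-RIN" for vehicle charging would plug into.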

From 2006, the RFS set a schedule of yearly obligations through 2022, with annually rising RIN targets. The long-term targets were more ambitious than the amount of biofuel the U.S. ever actually produced. (It didn’t help that fossil fuel producers fought tooth and nail to reduce their obligations. Meanwhile, agriculture industry lobbyists fought just as hard against those reductions.)

By the mid-2010s, the U.S. Environmental Protection Agency (EPA), which stewards the RFS, had repeatedly downsized the targets by nearly 25 percent. In 2016, a U.S. government report stated, quite bluntly, that “it is unlikely that the goals of the RFS will be met as envisioned.” A more recent study found that, since the program coaxed farmers into using more land for corn cultivation, RFS biofuel wasn’t actually any less carbon-intensive than gasoline.

Now, it is 2022. Against a backdrop of rising fuel prices, the Biden administration might bring the RFS its greatest shakeup yet.

The proposed changes aren’t set in stone. The EPA is under orders to propose a 2023 mandate by 16 November. Any electric vehicle add-on would likely debut by then. Reuters previously reported that the Biden administration has reached out to electric vehicle maker Tesla to collaborate on crafting the mandates.

The changes might bolster the RFS with a new type of credit, an “e-RIN,” which would represent a quantity of electricity used to charge electric vehicles. The changes might also nudge the RFS away from corn and oil: Car-charging companies and suppliers of biogas to power plants might become eligible, too.

It wouldn’t be this administration’s first attempt at boosting electric vehicles. While California leads state governments in setting a 2035 target for ending most internal combustion vehicle sales, the federal government’s ambitious Inflation Reduction Act allocated funds for tax credits on electric vehicles. That plan, however, has proven contentious due to an asterisk: A $7,500-per-vehicle credit would only apply to cars where most battery material and components come from North America.

Many analysts believe that the plan could actually slow electric vehicle takeup rather than accelerate it. And although the plan seeks to reduce U.S. electric vehicle supply chains’ reliance on Chinese rare earths and battery components, U.S.-friendly governments in Europe, Japan, and South Korea have criticized the plan for purportedly discriminating against non-U.S. vehicles, potentially breaching World Trade Organisation rules.

Nunes says it’s currently unclear whether federal government action via a fuel standard would be more effective than direct investment. It’s not the only question with an answer that is still in flux.

“How much cleaner are electric vehicles relative to internal combustion engines that are powered by fuels that fall under the RFS?” says Nunes. “Because that’s really the comparison that you care about.”

What that means is that any electric vehicle standard will only be as carbon-free as the supply chains that go into making the vehicles and the electrical grid from which they draw power. That puts pressure on governments, electricity providers, and consumers alike to decarbonize the grid.

Meanwhile, in a future U.S. where electric vehicles come to dominate the roads, sidelining internal combustion engines and liquid fuels for good, do biofuels and the RFS’s original purpose still have a place?

Nunes believes so. “There are certainly areas of the economy where electrification does not make a lot of sense,” he says.

In the world of aviation, for instance, battery tech hasn’t quite advanced to a point that would make electric flights feasible. “That’s where, I think, using things like sustainable aviation fuels and biofuels, et cetera, makes a lot more sense,” Nunes says.

Reference: https://ift.tt/oWIa84P